Virtual viewpoint hole filling method based on depth background modeling

A virtual viewpoint hole filling method based on depth background modeling, in the technical field of virtual viewpoint synthesis and background modeling. It addresses problems such as unstable foreground, poor depth map quality, and unnatural filling results.


Embodiment Construction

[0052] The present invention will be further described in detail below in conjunction with the accompanying drawings and embodiments.

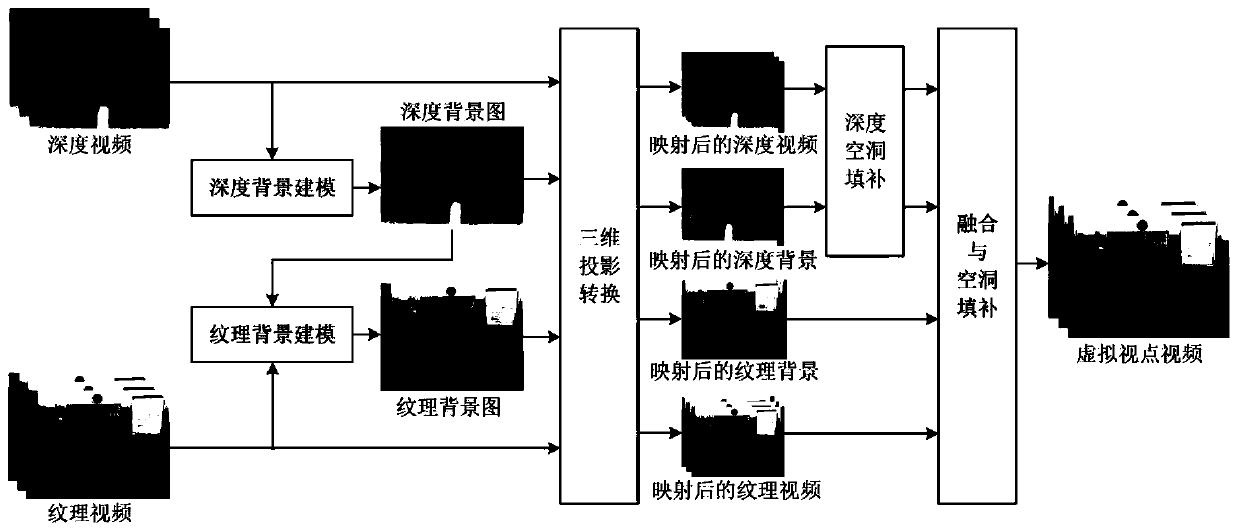

[0053] As shown in Figure 1, a virtual viewpoint hole filling method based on depth background modeling includes the following steps:

[0054] Step 1. Input the depth video and texture video of the reference viewpoint;

[0055] Step 2. Perform depth background modeling on the depth video of the reference viewpoint to obtain a depth background image;

[0056] The depth background image can be extracted from the depth video of the reference viewpoint using an existing method, for example the background depth image extraction method in the paper "DIBR Hole Filling Method Based on Background Extraction and Partition Restoration" by Chen Yue et al. Alternatively, the following method can be used. In this embodiment, the method for obtaining the depth background image includes the following steps:

[0057] Step 2-1...
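The sub-steps of this embodiment are truncated in the text above, so the sketch below does not reproduce them. It only illustrates, under stated assumptions, what depth background modeling can mean in its simplest form: a per-pixel temporal median over the depth frames of the reference viewpoint, a common generic baseline. The function name `median_depth_background`, the `Frame` type, and the nested-list representation of 8-bit depth frames are all hypothetical choices made for illustration.

```python
from statistics import median_low
from typing import List

# Assumption: one depth frame is a list of rows of integer depth values.
Frame = List[List[int]]


def median_depth_background(frames: List[Frame]) -> Frame:
    """Per-pixel temporal median over depth frames.

    Generic baseline only; this is NOT the patent's Step 2-1 procedure,
    which is not given in the text.
    """
    if not frames:
        raise ValueError("need at least one depth frame")
    height, width = len(frames[0]), len(frames[0][0])
    for frame in frames:
        if len(frame) != height or any(len(row) != width for row in frame):
            raise ValueError("all frames must share the same size")
    # median_low always returns a value that actually occurs in the data,
    # so the result stays an integer depth level.
    return [
        [median_low(frame[y][x] for frame in frames) for x in range(width)]
        for y in range(height)
    ]


# Three 2x2 frames; a foreground object covers pixel (0, 0) in one frame only.
frames = [
    [[10, 20], [30, 40]],
    [[200, 20], [30, 40]],
    [[10, 20], [30, 41]],
]
background = median_depth_background(frames)
```

A temporal median suppresses foreground that covers a pixel in fewer than half of the frames. It fails where foreground stays in place for most of the sequence, which is one reason more elaborate background models are used in practice.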
