Color consistency adjusting method for real-time video fusion

A real-time video color consistency adjustment technology, applied in the field of enhanced virtual environments, which can solve problems such as poor robustness and inconsistent textures across stitched models.

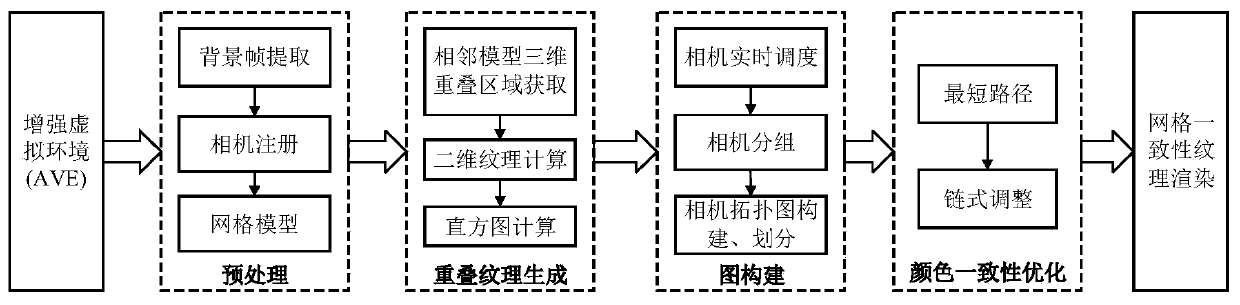

Embodiment Construction

[0082] The present invention is described in further detail below with reference to the accompanying drawings. Before the specific implementation of the present invention is introduced, some basic concepts are first explained:

[0083] (1) Virtual-real fusion: the fused display of virtual 3D models with real images or videos;

[0084] (2) Image modeling: images are collected from the real scene, and the scene is modeled from a single image;

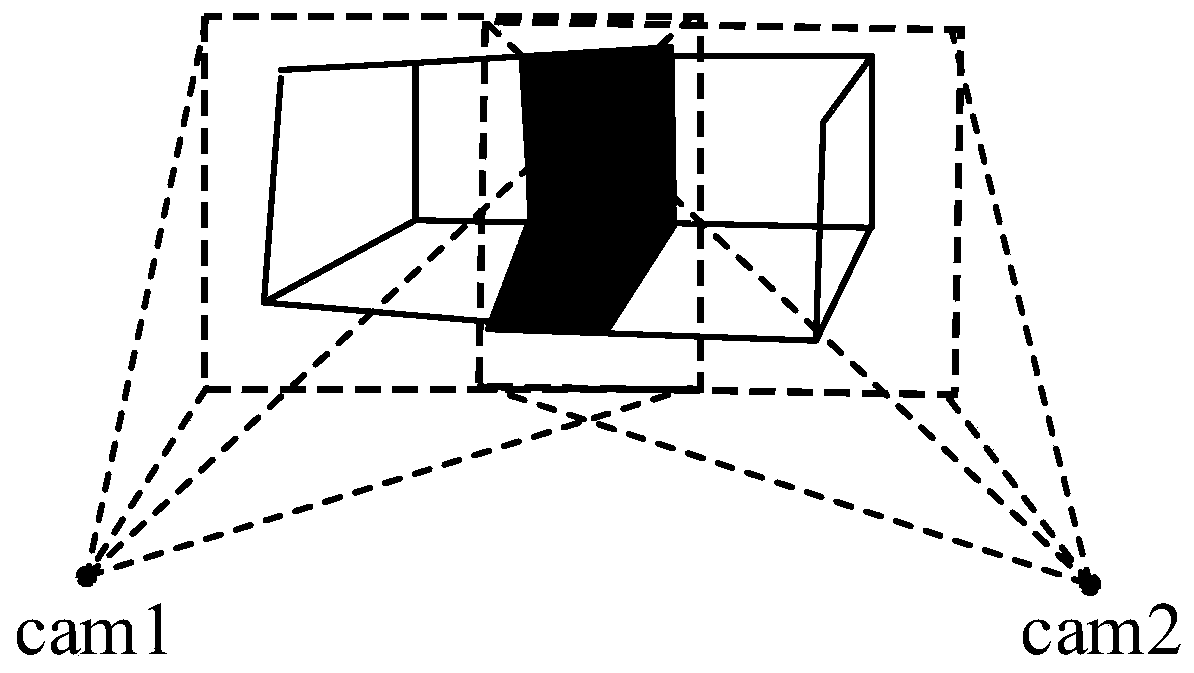

[0085] (3) Three-dimensional overlapping area: a model obtained by image modeling is called a video model; each model consists of multiple mesh patches, and the mesh patches where adjacent models overlap form the three-dimensional overlapping area;

[0086] (4) Channel: one component of the complete image representation. Each pixel in the image is described by three values, R, G and B, so the image corresponds to three channels: R, G and B;

[0087] (5) Histogram: used to count the proportion of pixels at each intensity level in an image region,...
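The channel and histogram concepts defined above can be illustrated with a short sketch. It splits an RGB image into its R, G and B channels, computes each channel's histogram as the proportion of pixels at every intensity level, and then applies classic per-channel histogram matching to pull one image's colors toward another's. Histogram matching is a standard color-transfer technique chosen here for illustration; the excerpt is truncated before the patented adjustment procedure, so this is not presented as the method of the invention. The function names (`split_channels`, `histogram`, `match_lut`, `match_image`), the list-of-rows image layout and the 8-bit range are all hypothetical assumptions. In the fusion setting, the pixels passed in would presumably come from the three-dimensional overlapping area of two adjacent video models, but how that region is extracted is not shown.

```python
from fractions import Fraction
from typing import List, Sequence, Tuple

# Hypothetical image layout: rows of (R, G, B) pixels with 8-bit values 0..255.
Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]


def split_channels(image: Image) -> Tuple[List[int], List[int], List[int]]:
    """Flatten an RGB image into its three channels R, G and B."""
    r, g, b = [], [], []
    for row in image:
        for pr, pg, pb in row:
            r.append(pr)
            g.append(pg)
            b.append(pb)
    return r, g, b


def histogram(channel: Sequence[int], levels: int = 256) -> List[Fraction]:
    """Proportion of pixels at each intensity level (exact fractions summing to 1)."""
    if not channel:
        raise ValueError("channel must contain at least one pixel")
    counts = [0] * levels
    for v in channel:
        counts[v] += 1
    return [Fraction(c, len(channel)) for c in counts]


def cdf(hist: Sequence[Fraction]) -> List[Fraction]:
    """Cumulative distribution of a normalized histogram."""
    out, acc = [], Fraction(0)
    for p in hist:
        acc += p
        out.append(acc)
    return out


def match_lut(src_hist: Sequence[Fraction], ref_hist: Sequence[Fraction]) -> List[int]:
    """Map each source level to the first reference level whose CDF reaches the source CDF."""
    src_cdf, ref_cdf = cdf(src_hist), cdf(ref_hist)
    lut, j = [], 0
    for s in src_cdf:  # src_cdf is non-decreasing, so j only moves forward
        while j < len(ref_cdf) - 1 and ref_cdf[j] < s:
            j += 1
        lut.append(j)
    return lut


def match_image(src: Image, ref: Image) -> Image:
    """Remap each channel of src so that its histogram approximates ref's."""
    luts = [
        match_lut(histogram(sc), histogram(rc))
        for sc, rc in zip(split_channels(src), split_channels(ref))
    ]
    lr, lg, lb = luts
    return [[(lr[r], lg[g], lb[b]) for r, g, b in row] for row in src]
```

For example, with `src = [[(0, 0, 0), (0, 0, 0)], [(10, 10, 10), (10, 10, 10)]]` and `ref = [[(100, 50, 100), (100, 50, 100)], [(200, 60, 200), (200, 60, 200)]]`, `match_image(src, ref)` maps level 0 to the reference's lower mode and level 10 to its upper mode in every channel. Exact `Fraction` arithmetic is used so that CDF comparisons are not disturbed by floating-point rounding.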
