Extractive unsupervised text summarization method

An unsupervised, extractive technique in the field of text summarization. It addresses the inaccuracy, low efficiency, and path dependence of automatic text summarization, and has the effects of shortening reading time, improving efficiency, and reducing redundancy.

Examples

Embodiment 1

[0039] The present invention provides an extractive unsupervised text summarization method comprising the following steps:

[0040] S1. Divide the text into several constituent units (words, sentences) and establish a graph model;

[0041] S2. Rank the important units of the text with a voting mechanism, using only the information contained in the single document itself, to perform keyword extraction and summarization;
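In graph-ranking methods of this kind, the "voting mechanism" is typically a PageRank-style iteration in which each node distributes its score to its neighbours in proportion to edge weight. The sketch below assumes the weighted TextRank update $WS(V_i) = (1-d) + d \sum_{j \ne i} \frac{w_{ji}}{\sum_{k \ne j} w_{jk}} WS(V_j)$ with damping factor `d = 0.85`. The function name `textrank_scores`, the damping value, and the convergence threshold are illustrative assumptions, not values taken from this patent.

```python
def textrank_scores(weights, d=0.85, tol=1e-6, max_iter=100):
    """Weighted PageRank ("voting") over a square edge-weight matrix.

    weights[j][i] is the weight of the edge from node j to node i; the
    diagonal is ignored. d, tol and max_iter are assumed defaults.
    """
    n = len(weights)
    # Total outgoing weight of each node, excluding self-loops.
    out_sum = [sum(weights[j][k] for k in range(n) if k != j) for j in range(n)]
    scores = [1.0] * n
    for _ in range(max_iter):
        new_scores = []
        for i in range(n):
            # Each neighbour j "votes" for i with a weighted share of its score.
            votes = sum(
                weights[j][i] / out_sum[j] * scores[j]
                for j in range(n)
                if j != i and out_sum[j] > 0
            )
            new_scores.append((1 - d) + d * votes)
        converged = max(abs(a - b) for a, b in zip(new_scores, scores)) < tol
        scores = new_scores
        if converged:
            break
    return scores


if __name__ == "__main__":
    # Sentence 0 is linked to sentences 1 and 2, which are not linked to each other.
    w = [[0, 1, 1], [1, 0, 0], [1, 0, 0]]
    print(textrank_scores(w))
```

In this three-sentence example, sentence 0 collects votes from both other sentences and therefore ends with the highest score. Sentences 1 and 2 tie.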

[0042] The process of building the model and determining the weights is as follows:

[0043] S201. Preprocessing: split the content of the input text or text set into sentences to obtain

[0044] $T = [S_1, S_2, \ldots, S_m]$;
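Below is a minimal sketch of the S201 preprocessing step. It assumes that sentence boundaries are marked by Chinese or English end-of-sentence punctuation (。！？!?.). The regular expression and the function name `split_sentences` are assumptions for illustration. A naive rule like this will also split on decimal points and abbreviations.

```python
import re

# Assumed boundary rule: a sentence ends at Chinese or English
# end-of-sentence punctuation; any whitespace after it is dropped.
_SENTENCE_END = re.compile(r"(?<=[。！？!?.])\s*")


def split_sentences(text):
    """Return T = [S_1, ..., S_m] as a list of non-empty sentence strings."""
    return [part.strip() for part in _SENTENCE_END.split(text) if part.strip()]


if __name__ == "__main__":
    print(split_sentences("Graphs rank sentences. Votes decide! Does it work?"))
```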

[0045] S202. Construct the graph $G = (V, E)$, where $V$ is the set of sentences; perform word segmentation on each sentence and remove stop words to obtain

[0046] $S_i = [t_{i,1}, t_{i,2}, \ldots, t_{i,n}]$;

[0047] where $t_{i,j} \in S_i$ are the retained candidate keywords;
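The following sketch implements S202 by turning each sentence into its list of candidate keywords $t_{i,j}$. It assumes text that a simple `\w+` regular expression can tokenize, together with a small hypothetical stop-word list `STOP_WORDS`. For Chinese input, a dedicated word segmenter (for example `jieba.lcut`) would take the place of the regex.

```python
import re

# Hypothetical stop-word list; a real system would load a fuller list.
STOP_WORDS = {"a", "an", "and", "in", "is", "of", "the", "to"}


def candidate_keywords(sentence, stop_words=STOP_WORDS):
    """Segment one sentence and drop stop words, giving [t_i1, ..., t_in]."""
    # Assumed segmentation: lowercase runs of word characters. For Chinese
    # text a word segmenter such as jieba.lcut(sentence) would replace this.
    tokens = re.findall(r"\w+", sentence.lower())
    return [t for t in tokens if t not in stop_words]


def preprocess(sentences, stop_words=STOP_WORDS):
    """Apply candidate_keywords to every sentence S_i of T."""
    return [candidate_keywords(s, stop_words) for s in sentences]
```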

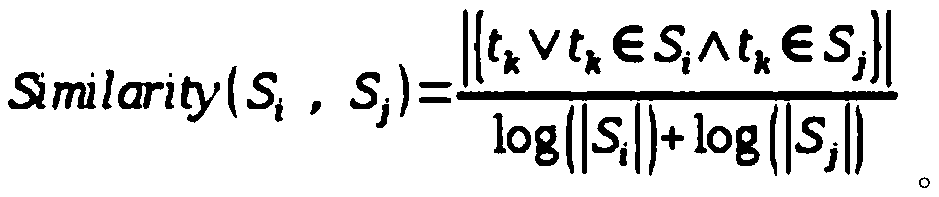

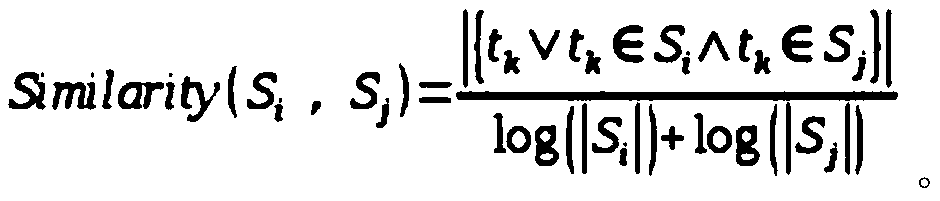

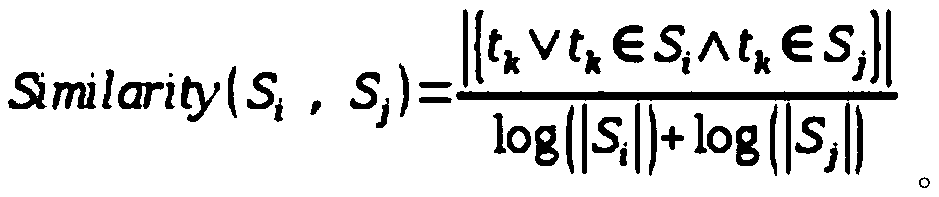

[0048] S203. Sentence similarity calculation: construct the edge set E in the...
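The definition of the edge set in S203 is cut off in the text above. One common choice in TextRank-style sentence graphs weights the edge between two sentences by their word overlap, normalised by sentence length: $\mathrm{Similarity}(S_i, S_j) = \frac{|\{t : t \in S_i \wedge t \in S_j\}|}{\log|S_i| + \log|S_j|}$. This formula is assumed here and is not taken from this patent. The helper names `sentence_similarity` and `build_edge_weights` are hypothetical. A pair with zero overlap (or a zero denominator) gets no edge.

```python
import math


def sentence_similarity(s_i, s_j):
    """Overlap similarity between two candidate-keyword lists (assumed formula)."""
    overlap = len(set(s_i) & set(s_j))
    if overlap == 0:
        return 0.0
    # log|S_i| + log|S_j| is zero when both sentences have a single keyword.
    denom = math.log(len(s_i)) + math.log(len(s_j))
    return overlap / denom if denom > 0 else 0.0


def build_edge_weights(token_lists):
    """Symmetric weight matrix for the undirected sentence graph G = (V, E)."""
    n = len(token_lists)
    weights = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            sim = sentence_similarity(token_lists[i], token_lists[j])
            weights[i][j] = weights[j][i] = sim
    return weights
```

The resulting matrix has a zero diagonal and can serve directly as the edge weights for the voting step in S2.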

Embodiment 2

[0062] The present invention provides an extractive unsupervised text summarization method comprising the following steps:

[0063] S1. Divide the text into several constituent units (words, sentences) and establish a graph model;

[0064] S2. Rank the important units of the text with a voting mechanism, using only the information contained in the single document itself, to perform keyword extraction and summarization;

[0065] The process of building the model and determining the weights is as follows:

[0066] S201. Preprocessing: split the content of the input text or text set into sentences to obtain

[0067] $T = [S_1, S_2, \ldots, S_m]$;

[0068] S202. Construct the graph $G = (V, E)$, where $V$ is the set of sentences; perform word segmentation on each sentence and remove stop words to obtain

[0069] $S_i = [t_{i,1}, t_{i,2}, \ldots, t_{i,n}]$;

[0070] where $t_{i,j} \in S_i$ are the retained candidate keywords;
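S2 also covers keyword extraction from the retained candidate keywords $t_{i,j}$. A common approach links candidate words that co-occur within a small window in the same sentence and ranks them with a PageRank-style vote. It is sketched here as an assumption, not as the patent's stated procedure. The window size, iteration count, and function name `extract_keywords` are illustrative. Words that never co-occur with another word are left out of the graph.

```python
from collections import defaultdict


def extract_keywords(token_lists, window=2, top_k=5, d=0.85, iterations=50):
    """Rank candidate keywords on a co-occurrence graph (assumed TextRank variant)."""
    # Link words that occur within `window` positions of each other in one sentence.
    neighbors = defaultdict(set)
    for tokens in token_lists:
        for i, word in enumerate(tokens):
            for other in tokens[i + 1:i + window]:
                if other != word:
                    neighbors[word].add(other)
                    neighbors[other].add(word)
    # Unweighted voting: each word splits its score evenly among its
    # neighbours. A fixed iteration count is assumed instead of a tolerance.
    scores = {word: 1.0 for word in neighbors}
    for _ in range(iterations):
        scores = {
            word: (1 - d) + d * sum(scores[v] / len(neighbors[v]) for v in neighbors[word])
            for word in neighbors
        }
    # Highest score first; ties broken alphabetically for a stable result.
    return sorted(scores, key=lambda word: (-scores[word], word))[:top_k]
```

With `window=2`, only adjacent candidate keywords are linked. A larger window connects words that are further apart within a sentence.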

[0071] S203. Sentence similarity calculation: construct the edge set E in the g...

Embodiment 3

[0086] The present invention provides an extractive unsupervised text summarization method comprising the following steps:

[0087] S1. Divide the text into several constituent units (words, sentences) and establish a graph model;

[0088] S2. Rank the important units of the text with a voting mechanism, using only the information contained in the single document itself, to perform keyword extraction and summarization;
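Once the voting step has scored every sentence, an extractive summary is typically formed by keeping the highest-scoring sentences and emitting them in their original document order. The sketch below assumes a fixed summary length `k`. The function name `select_summary` and the tie-breaking rule (the earlier sentence wins) are illustrative choices, not details from the patent.

```python
def select_summary(sentences, scores, k=3):
    """Keep the k highest-scoring sentences, returned in document order."""
    # Sort indices by descending score; earlier sentences win ties (assumed rule).
    ranked = sorted(range(len(sentences)), key=lambda i: (-scores[i], i))
    # Re-sort the chosen indices so the summary follows the original order.
    return [sentences[i] for i in sorted(ranked[:k])]
```

For example, with scores `[0.5, 1.2, 0.9, 1.0]` and `k=2`, sentences 1 and 3 are kept and returned in that order.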

[0089] The process of building the model and determining the weights is as follows:

[0090] S201. Preprocessing: split the content of the input text or text set into sentences to obtain

[0091] $T = [S_1, S_2, \ldots, S_m]$;

[0092] S202. Construct the graph $G = (V, E)$, where $V$ is the set of sentences; perform word segmentation on each sentence and remove stop words to obtain

[0093] $S_i = [t_{i,1}, t_{i,2}, \ldots, t_{i,n}]$;

[0094] where $t_{i,j} \in S_i$ are the retained candidate keywords;

[0095] S203. Sentence similarity calculation: construct the edge set E in the...
