A vision-based user-specific behavior analysis method and a self-service medical terminal

A behavior analysis and self-service medical technology, applied in the fields of instruments, character and pattern recognition, computer components, and the like. It addresses the inability to record emergencies and to collect evidence automatically.



Embodiment approach

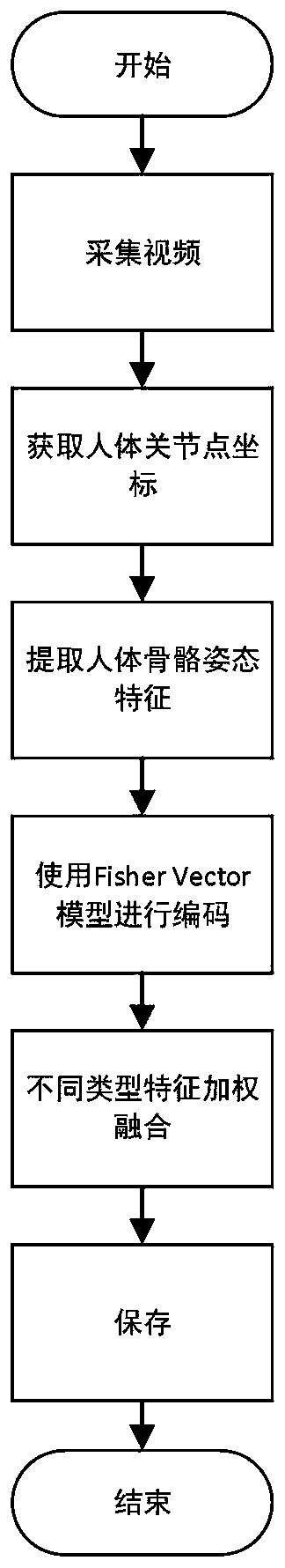

[0076] 1. Collect video and obtain the coordinates of the human body joints.

[0077] Use a convolutional neural network (CNN) to obtain the position coordinates of the human body joints in each frame of the video. Let the coordinates of the j-th joint in the i-th frame be $p_{ij} = (x_{ij}, y_{ij})$.
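A minimal sketch of the data this step produces, assuming a hypothetical `estimate_pose` callable stands in for the CNN (the source does not name a network or keypoint format). It stacks the per-frame output into a nested list `P` with `P[i][j]` $= p_{ij} = (x_{ij}, y_{ij})$ and rejects frames with the wrong joint count.

```python
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float]  # (x, y) image coordinates of one joint


def collect_joint_coordinates(
    frames: Sequence[object],
    estimate_pose: Callable[[object], List[Point]],
    num_joints: int,
) -> List[List[Point]]:
    """Return P where P[i][j] = p_ij = (x_ij, y_ij), the j-th joint in frame i.

    `estimate_pose` is a hypothetical stand-in for any CNN-based 2D pose
    estimator that maps one frame to a list of `num_joints` (x, y) points.
    """
    trajectory: List[List[Point]] = []
    for i, frame in enumerate(frames):
        joints = estimate_pose(frame)
        # Every frame must yield the same K joints so P forms an N x K grid.
        if len(joints) != num_joints:
            raise ValueError(f"frame {i}: expected {num_joints} joints, got {len(joints)}")
        trajectory.append([(float(x), float(y)) for x, y in joints])
    return trajectory
```

For example, with the toy estimator `lambda f: [(f, 0), (f + 1, 2)]` over frames `[0, 1, 2]`, `P[2][1]` is `(3.0, 2.0)`.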

[0078] 2. Extract the pose features of the human skeleton by computing 10 spatiotemporal pose features for each sample. The method is not limited to the 10 spatiotemporal pose features described below.

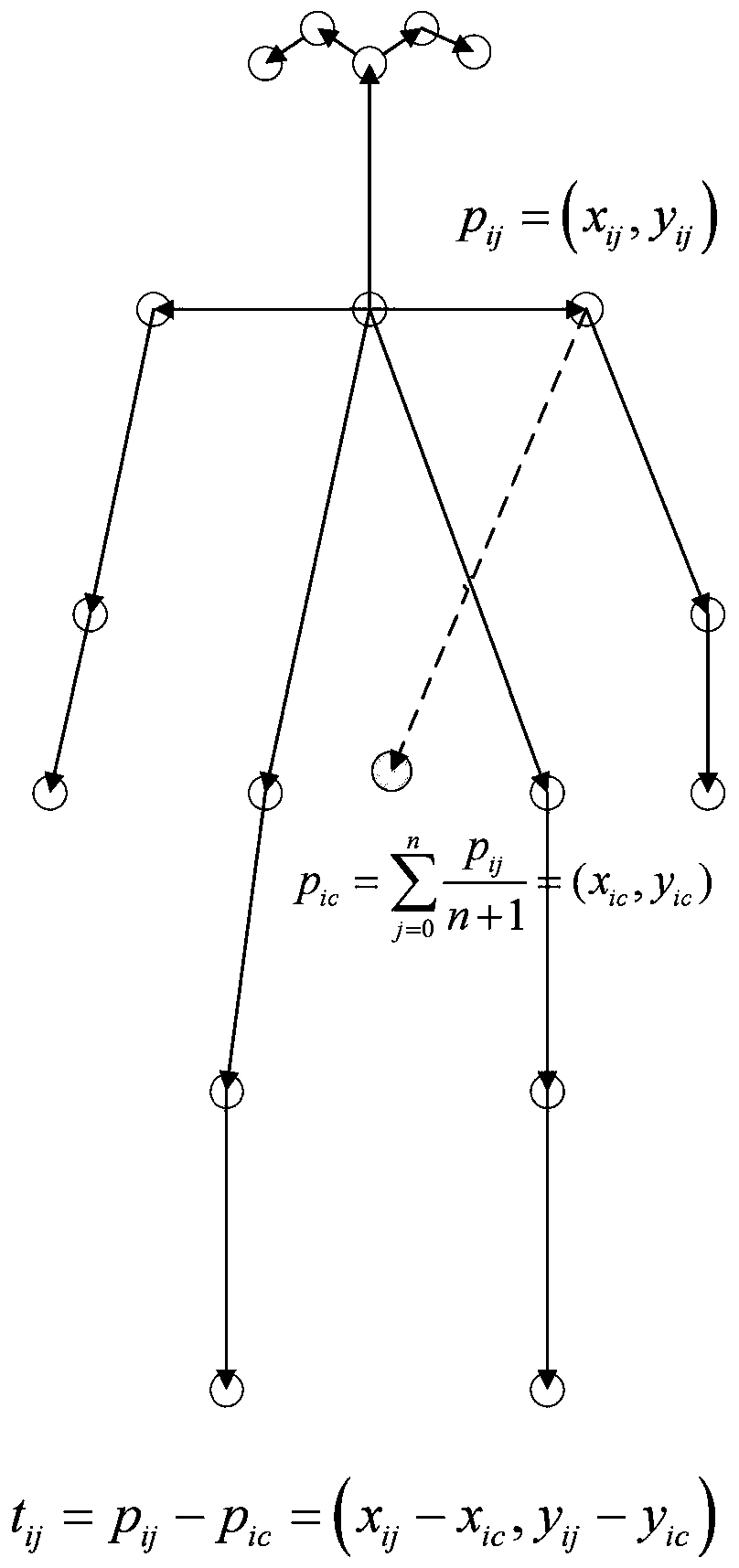

[0079] Feature 1: Vector matrix of joint positions relative to the center of mass of the human body

[0080] Define the center of gravity of all relevant joints as the center of mass of the human body. The coordinates of the center of mass are:

[0081] $$p_{ic} = \frac{1}{K}\sum_{j=1}^{K} p_{ij}$$

[0082] That is, the center of mass in frame i is $p_{ic} = (x_{ic}, y_{ic})$. For a sample of N frames and K joints, the feature matrix of the joint coordinate trajectory is $T = (t_{ij})_{N \times K}$, where $t_{ij} = p_{ij} - p_{ic} = (x_{ij} - x_{ic},\ y_{ij} - y_{ic})$. Image 3 is a schematic diagram of the coordinates of the center of mass of...
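Feature 1 can be sketched as below. This is a minimal illustration, not the patent's implementation: it assumes the "center of gravity" is the unweighted mean of the selected joints, and the `relevant` index list is a hypothetical parameter for the unspecified set of "relevant" joints (by default every joint is used).

```python
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]  # (x, y) coordinates of one joint


def centroid(joints: Sequence[Point], relevant: Optional[Sequence[int]] = None) -> Point:
    """Return p_ic = (x_ic, y_ic) for one frame.

    Assumption: the center of gravity is the unweighted mean of the selected
    joints. `relevant` is a hypothetical list of joint indices; None = all.
    """
    indices = range(len(joints)) if relevant is None else relevant
    pts = [joints[j] for j in indices]
    if not pts:
        raise ValueError("need at least one joint to compute a centroid")
    k = len(pts)
    return (sum(x for x, _ in pts) / k, sum(y for _, y in pts) / k)


def relative_to_centroid(
    P: Sequence[Sequence[Point]], relevant: Optional[Sequence[int]] = None
) -> List[List[Point]]:
    """Feature 1: T = (t_ij), N x K, with t_ij = p_ij - p_ic for every joint j."""
    T: List[List[Point]] = []
    for joints in P:  # one row per frame i
        xc, yc = centroid(joints, relevant)
        # Offset every joint (not only the relevant ones) by this frame's centroid.
        T.append([(x - xc, y - yc) for x, y in joints])
    return T
```

Because each row of T is measured from its own frame's centroid, the feature does not change when the whole skeleton is translated in the image. When all joints are used for the centroid, each row sums to (0, 0).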
