A method and system for monitoring movement track based on livestock identity

A technology relating to movement tracks and livestock, applied in location-information-based services, character and pattern recognition, collaborative operation devices, etc. It can solve problems such as a large footprint, a raised threshold for using feeding stations, and increased time, and achieves the effects of individualized and streamlined livestock breeding management, reduced manpower, and richer data collection.



Examples

Embodiment 1

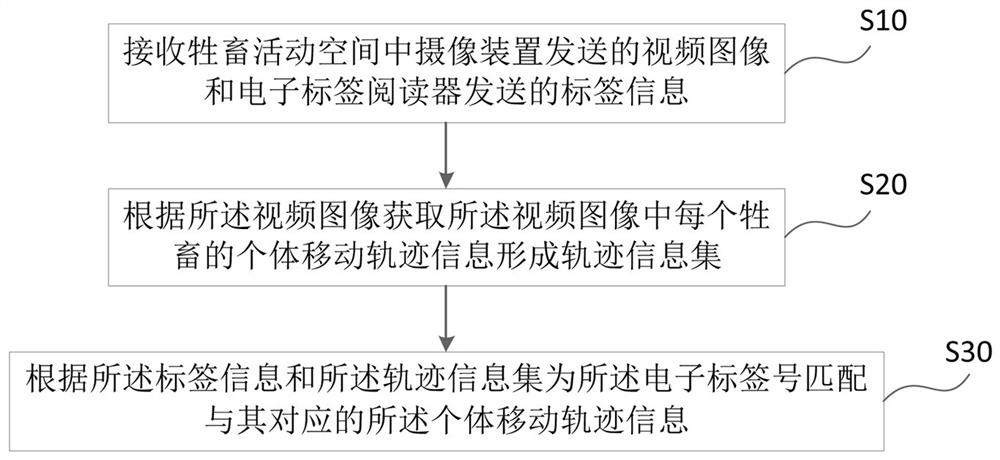

[0058] As shown in Figure 1, this embodiment provides a method for monitoring movement trajectories based on livestock identity. The method is applied to an integrated processing device and includes the following steps:

[0059] S10: Receive the video images sent by the camera device in the livestock activity space and the tag information sent by the electronic tag reader. The tag information is a set of one-to-one correspondences between an electronic tag number, the time at which that tag number was recognized, and the information of the electronic tag reader; the electronic tag reader information includes the reader's identity information or geographic location information.
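The tag information in S10 can be modeled as a small record type. The following is a minimal sketch, assuming each reader delivers one recognition as a dict; the field names (`tag_number`, `recognized_at`, `reader_id`, `location`) and the helper `parse_tag_message` are hypothetical and do not come from the patent:

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional, Tuple


@dataclass(frozen=True)
class ReaderInfo:
    """Electronic tag reader information: identity and/or geographic location."""
    reader_id: Optional[str] = None
    location: Optional[Tuple[float, float]] = None  # hypothetical (x, y) site coordinates


@dataclass(frozen=True)
class TagRecord:
    """One entry of the tag information set received in step S10."""
    tag_number: str          # electronic tag number (the animal's identity)
    recognized_at: datetime  # time at which the tag number was recognized
    reader: ReaderInfo       # reader that recognized the tag


def parse_tag_message(msg: dict) -> TagRecord:
    """Convert a hypothetical reader message (a dict) into a TagRecord."""
    loc = msg.get("location")
    reader = ReaderInfo(
        reader_id=msg.get("reader_id"),
        location=tuple(loc) if loc is not None else None,
    )
    # The reader information must carry at least an identity or a location.
    if reader.reader_id is None and reader.location is None:
        raise ValueError("reader information needs an identity or a location")
    return TagRecord(
        tag_number=msg["tag_number"],
        recognized_at=datetime.fromisoformat(msg["recognized_at"]),
        reader=reader,
    )
```

For example, `parse_tag_message({"tag_number": "E0001", "recognized_at": "2024-05-01T08:30:00", "reader_id": "R3"})` yields a record whose reader has an identity but no location, which the "identity or geographic location" wording of S10 permits.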

[0060] In this step, the camera device and the electronic tag reader are pre-installed in the livestock activity space, and both serve as data-collection equipment.

[0061] Specifically, the camera device is set to be a...

Embodiment 2

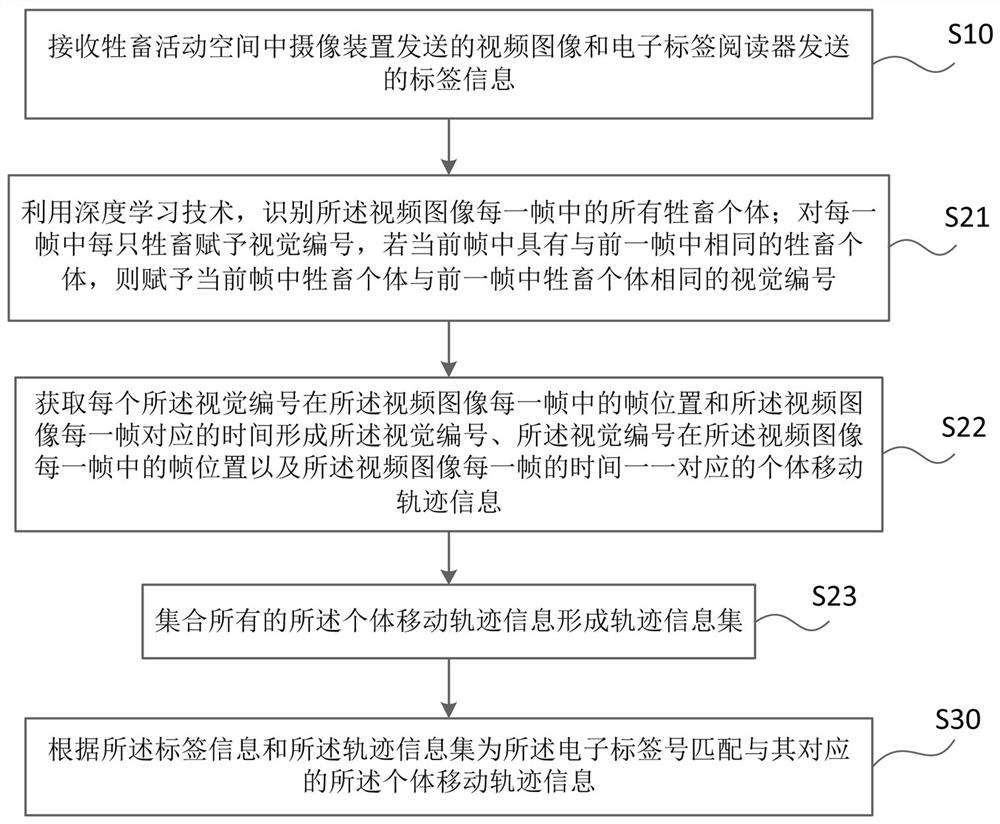

[0076] As shown in Figure 2, this embodiment differs from Embodiment 1 in that step S20 includes the following steps:

[0077] S21: Use deep learning to identify all individual livestock in each frame of the video image, and assign a visual number to each individual in each frame; if an individual in the current frame also appeared in the previous frame, assign it the same visual number it had in the previous frame;
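The step does not specify how "the same individual" is recognized across frames. A minimal sketch, assuming the detector outputs one bounding box per animal and substituting simple greedy intersection-over-union (IoU) matching between consecutive frames; the class `VisualNumberer`, the parameter `iou_threshold`, and its 0.3 default are illustrative choices, not the patent's method:

```python
from itertools import count
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


class VisualNumberer:
    """Keeps an animal's visual number stable across consecutive frames."""

    def __init__(self, iou_threshold: float = 0.3):
        self.iou_threshold = iou_threshold
        self._next_id = count(1)
        self._prev: Dict[int, Box] = {}  # visual number -> box in previous frame

    def update(self, boxes: List[Box]) -> List[int]:
        """Return one visual number per detected box in the current frame."""
        # Score every (previous animal, current box) pair, best overlap first.
        pairs = sorted(
            ((iou(pb, cb), vid, j)
             for vid, pb in self._prev.items()
             for j, cb in enumerate(boxes)),
            reverse=True,
        )
        assigned: Dict[int, int] = {}  # current box index -> visual number
        used_ids = set()
        for score, vid, j in pairs:
            if score < self.iou_threshold:
                break  # remaining pairs overlap too little to be the same animal
            if j in assigned or vid in used_ids:
                continue  # each box and each previous number is used once
            assigned[j] = vid
            used_ids.add(vid)
        # Unmatched boxes are treated as animals that just appeared.
        ids = [assigned[j] if j in assigned else next(self._next_id)
               for j in range(len(boxes))]
        self._prev = dict(zip(ids, boxes))
        return ids
```

Calling `update` once per frame with `[(0, 0, 10, 10), (50, 50, 60, 60)]` and then `[(51, 51, 61, 61), (1, 1, 11, 11)]` returns `[1, 2]` and then `[2, 1]`: each animal keeps its number even though the detector listed them in a different order. Greedy IoU matching is the simplest option; appearance features or a Hungarian assignment would be more robust in crowded pens.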

[0078] In this step, deep learning is used to obtain image features, for example a convolutional neural network (CNN) that extracts high-level image features. Usable deep learning models include Fast R-CNN, YOLO, SSD, etc., which perform detailed image segmentation, target keypoint detection, and other image recognition processing to identify all individual livestock in each frame of the video image one by one; Identify the livestock targets in 2500 ...

Embodiment 3

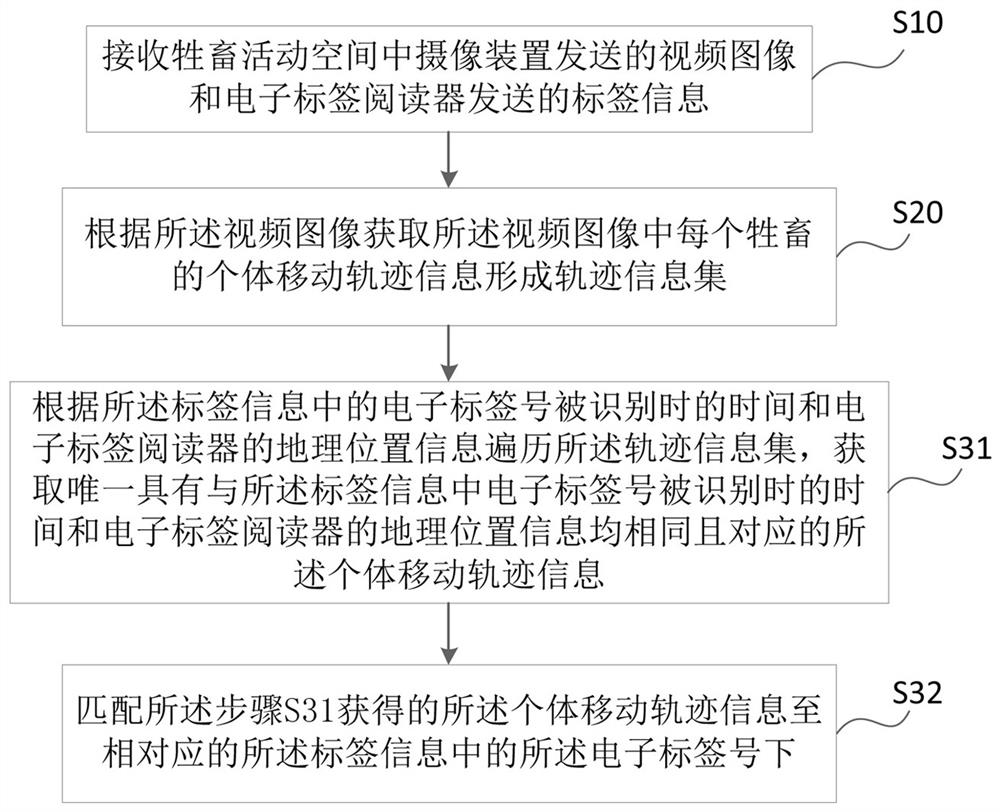

[0084] As shown in Figure 3, this embodiment differs from Embodiment 1 in that step S30 includes the following steps:

[0085] S31: Traverse the track information set using the time at which the electronic tag number in the tag information was recognized and the geographic location information of the electronic tag reader, and obtain the individual movement track information that is uniquely associated with that same time and that same reader location. Specifically, the recognition time of the electronic tag number and the geographic location of the reader that recognized it serve as the search key, and the track information set is searched for individual movement tracks located at the same geographic position at the same time;
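Step S31 amounts to a join on (time, location). A minimal sketch, assuming each track is stored as time-stamped positions in the same coordinate frame as the reader locations; the function name `match_track` and the tolerances `time_tol` (seconds) and `radius` (coordinate units) are hypothetical. Returning `None` when several tracks qualify reflects the step's requirement that the match be unique:

```python
import math
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]
# Hypothetical track set: visual number -> time-ordered (timestamp_s, position) samples.
TrackSet = Dict[int, List[Tuple[float, Point]]]


def match_track(
    tracks: TrackSet,
    tag_time: float,
    reader_pos: Point,
    time_tol: float = 1.0,
    radius: float = 2.0,
) -> Optional[int]:
    """Return the visual number of the single track that was at the reader's
    location at the tag recognition time, or None if zero or several qualify."""
    candidates = []
    for vid, samples in tracks.items():
        for t, pos in samples:
            # "Same time" and "same place" are approximated with tolerances.
            if abs(t - tag_time) <= time_tol and math.dist(pos, reader_pos) <= radius:
                candidates.append(vid)
                break  # one qualifying sample is enough for this track
    return candidates[0] if len(candidates) == 1 else None
```

With `tracks = {1: [(10.0, (0.0, 0.0)), (11.0, (5.0, 5.0))], 2: [(10.0, (20.0, 20.0))]}`, a tag read at time 11.2 by a reader at `(5.5, 5.0)` matches only track 1, so that track can be bound to the tag number. A production system would likely index samples by time instead of scanning them all; the linear scan keeps the sketch short.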

[0086] S32: M...

