Deep learning hyper-parameter tuning improvement method based on Bayesian optimization

A deep learning hyperparameter tuning technique, applicable to instruments, character and pattern recognition, computer components, and related fields. It addresses problems that hinder engineering application, such as increased computing cost, long solution times, and large fluctuations in optimization results, and thereby improves optimization efficiency and speeds up optimization.

Embodiment 1

[0044] Figure 4 shows an improved deep learning hyperparameter tuning method based on Bayesian optimization. Its overall steps are:

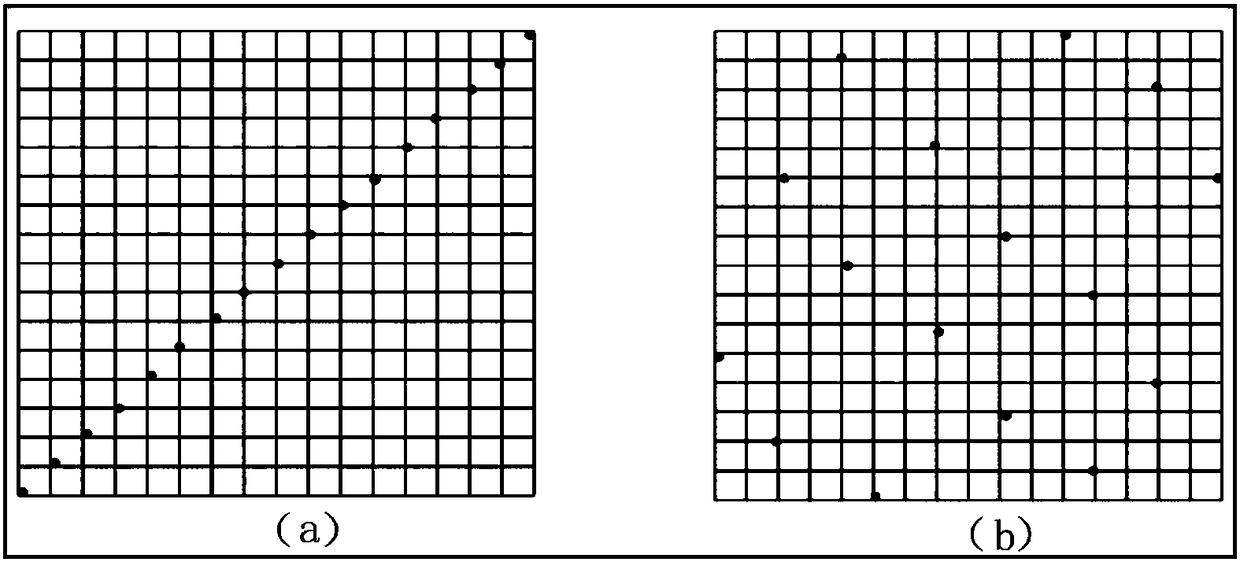

[0045] Step 1. Use the fast optimal Latin hypercube experimental design algorithm to generate the initial point set $X = \{x_1, \ldots, x_t\}$.

[0046] The specific steps of the fast optimal Latin hypercube experimental design algorithm are:

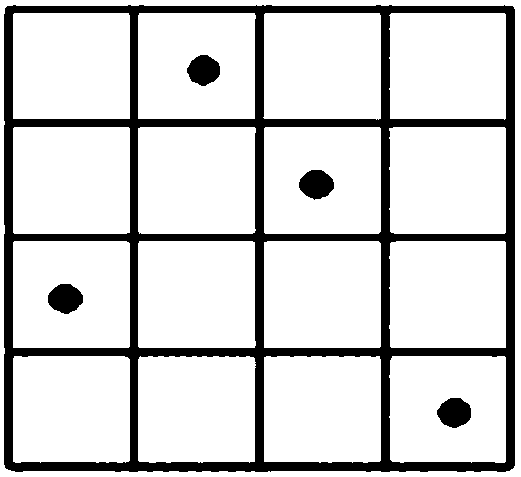

[0047] a. Given $n_p$, $n_v$, $n_s$, and the optimization interval of each variable. In this embodiment, the number of sampling points is $n_p = 16$, the variable dimension is $n_v = 2$, $n_s = 1$, and the optimization interval is taken as [0, 1];

[0048] b. Calculate the theoretical interval division number $n_d$;

[0049] c. Calculate the intermediate quantity $n_b$;

[0050] d. Calculate the actual number of initial points generated;

[0051] e. Fill the Latin hypercube experimental design space using the translational propagation algorithm.

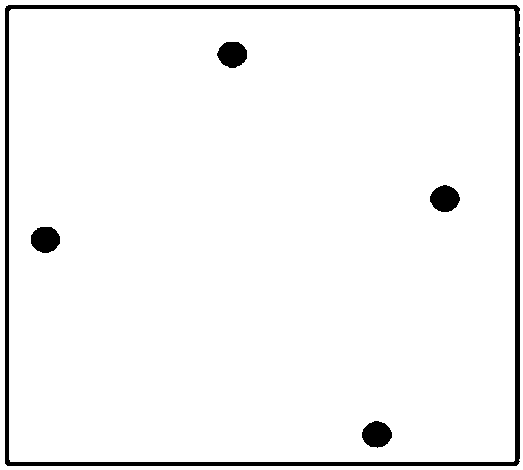
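Steps a to e can be sketched in Python as follows. This excerpt does not reproduce the formulas for $n_d$, $n_b$, or the actual point count, nor the exact shift rule, so the sketch assumes the forms commonly given for the translational propagation algorithm in the design-of-experiments literature. It is an illustration under those assumptions, not the patent's implementation. The function names `tplhd_unit_seed` and `scale_to_interval` are hypothetical, only the one-point seed case ($n_s = 1$) is handled, and the trimming step needed when more points are generated than requested is left out.

```python
def tplhd_unit_seed(n_p, n_v):
    """Latin hypercube via translational propagation, one-point seed (n_s = 1).

    Returns (n_d, n_b, n_p_star, levels), where levels holds integer
    coordinates in 1..n_p_star. All formulas are assumed forms.
    """
    n_s = 1
    # Step b (assumed): smallest integer n_d with n_d ** n_v >= n_p / n_s.
    n_d = 1
    while n_d ** n_v * n_s < n_p:
        n_d += 1
    # Step c (assumed): number of blocks the design space is divided into.
    n_b = n_d ** n_v
    # Step d (assumed): number of points actually generated.
    n_p_star = n_b * n_s

    # Step e: place the seed at level 1 in every dimension, then copy and
    # shift the current design along one dimension at a time.
    design = [[1] * n_v]
    for j in range(n_v):
        # Small shifts in the other dimensions keep every level unique.
        shift = [n_d ** (j - 1) if k < j else n_d ** j for k in range(n_v)]
        # Large shift along dimension j moves the copy into the next block.
        shift[j] = n_p_star // n_d
        block = design
        grown = list(design)
        for _ in range(n_d - 1):
            block = [[lvl + s for lvl, s in zip(pt, shift)] for pt in block]
            grown.extend(block)
        design = grown
    return n_d, n_b, n_p_star, design


def scale_to_interval(levels, n_p_star, low, high):
    """Map integer levels to cell centres in [low, high] (an assumed choice)."""
    width = high - low
    return [[low + width * (lvl - 0.5) / n_p_star for lvl in pt] for pt in levels]


# Values from this embodiment: n_p = 16, n_v = 2, n_s = 1, interval [0, 1].
n_d, n_b, n_p_star, levels = tplhd_unit_seed(16, 2)
points = scale_to_interval(levels, n_p_star, 0.0, 1.0)
print(n_d, n_b, n_p_star)  # 4 16 16
```

With the embodiment's values, every one of the 16 levels appears exactly once in each dimension, which is the defining property of a Latin hypercube. Mapping levels to cell centres is only one possible choice; a different placement within each cell would serve equally well as an initial point set.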

[0052] Further, the specific method of using the translational ...
