Virtual slice method for teaching video

A virtual slicing method for teaching videos, applied in the field of virtual slicing of teaching videos. It addresses the problem that users cannot be given slice positioning information, which degrades the user experience, and it improves efficiency.


Embodiment

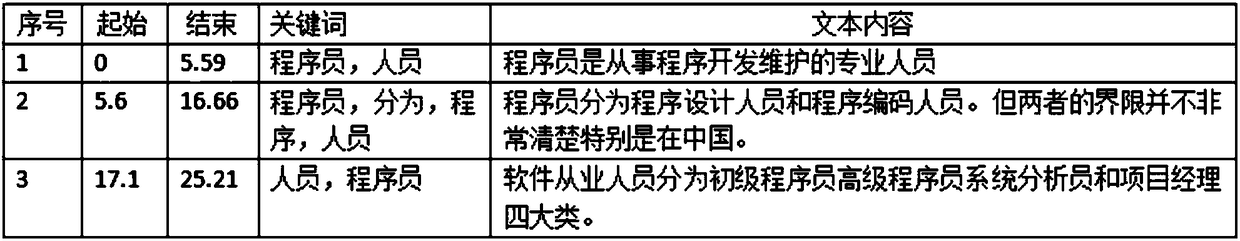

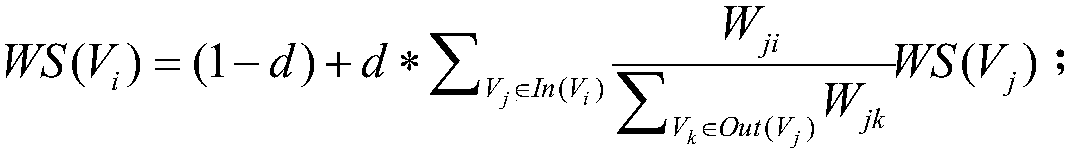

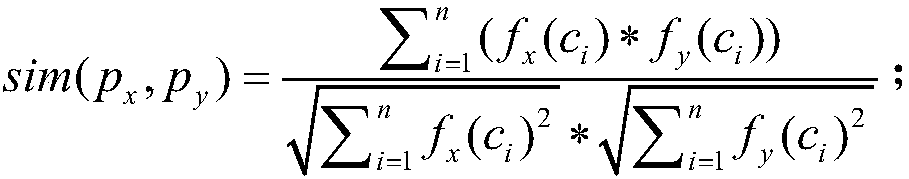

[0042] This embodiment discloses a method for virtual slicing of teaching videos. The steps are as follows:

[0043] Step S1: first extract the audio data from the teaching video, then convert the audio data to text to obtain the individual sentence texts, and combine the sentence texts into the first text set, for example $ST=\{st_1, st_2, st_3, \ldots, st_m\}$, where the elements $st_1$ to $st_m$ are the 1st to $m$-th sentence texts in the first text set, respectively.
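As a rough illustration of how the first text set could be assembled, the sketch below splits an already recognized transcript into sentence texts and keeps them in order. The function name `build_first_text_set` and the punctuation-based splitting rule are assumptions made for this example; the source does not state how sentence boundaries are determined, and the speech recognition step itself is not shown.

```python
import re

# Assumed splitting rule: break after Chinese sentence-ending punctuation,
# or after Western sentence-ending punctuation that is followed by whitespace.
_SENTENCE_BREAK = re.compile(r"(?<=[。！？])|(?<=[.!?])\s+")


def build_first_text_set(transcript):
    """Return the ordered sentence texts [st_1, ..., st_m] of a transcript."""
    parts = _SENTENCE_BREAK.split(transcript)
    # Drop empty fragments (for example, after trailing punctuation).
    return [part.strip() for part in parts if part.strip()]


ST = build_first_text_set(
    "Today we study limits. First, recall functions! Any questions?"
)
# ST[0] corresponds to st_1, ST[1] to st_2, and so on.
```

Keeping ST as an ordered list preserves the 1st-to-m-th ordering that the step describes, so the position of each sentence text can be looked up by index.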

[0044] In this embodiment, the FFMPEG open source framework is used to extract the audio from the teaching video in MP4 format. When the teaching video is obtained, it is first determined whether it is in a video format supported by FFMPEG. FFMPEG supports the mainstream video formats on the market, but the video may still be in an unsupported format. If so, the teaching video must first be converted to a supported format. In this embodiment, if there are multiple audio tracks in the teaching video extra...
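A minimal sketch of the audio extraction step, assuming the `ffmpeg` command-line tool is installed and on the PATH. The helper names, the mono 16 kHz WAV output, and the `track_index` parameter are assumptions for illustration; the source does not specify the output format or how an audio track is chosen when several are present.

```python
import subprocess
from pathlib import Path


def build_extract_command(video_path, audio_path, track_index=0, sample_rate=16000):
    """Build an ffmpeg argument list that extracts one audio track as mono WAV."""
    return [
        "ffmpeg",
        "-y",                          # overwrite the output file if it exists
        "-i", str(video_path),         # input teaching video
        "-vn",                         # drop the video stream
        "-map", f"0:a:{track_index}",  # select one audio track (assumed choice)
        "-ac", "1",                    # downmix to mono
        "-ar", str(sample_rate),       # resample (16 kHz is an assumed default)
        str(audio_path),
    ]


def extract_audio(video_path, audio_path, track_index=0):
    """Run ffmpeg; a non-zero exit status raises subprocess.CalledProcessError."""
    cmd = build_extract_command(video_path, audio_path, track_index)
    subprocess.run(cmd, check=True, capture_output=True)
    return Path(audio_path)
```

Calling `extract_audio("lesson.mp4", "lesson.wav")` would write the first audio track to `lesson.wav`. If ffmpeg cannot read the input, the raised `CalledProcessError` is one possible signal that the video needs to be converted first.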
