RGBD depth sensor-based real-time gesture analysis and evaluation method and system

A technology involving depth sensors and gesture evaluation methods, applied in the field of image processing


Examples

Embodiment 1

[0077] A real-time palm gesture analysis and evaluation method based on an RGBD depth sensor, characterized in that it comprises the following steps:

[0078] Step 1: use the RGBD sensor to acquire T initial frames over a period of time, where each of the T initial frames contains a palm node, a wrist node and an elbow node, and determine the coordinates of the palm node in each of the T initial frames;

[0079] This step includes:

[0080] Step 11: select one of the T initial frames as the current frame, and obtain the coordinates of the palm node P in the current frame from the initial palm node:

[0081] where $M$ is the number of white pixels in the circular area ($M$ is a natural number, $M \ge 1$), $x_i$ is the abscissa of the $i$-th pixel, $y_i$ is the ordinate of the $i$-th pixel, and $z_i$ is the distance between the $i$-th pixel and the R...
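The formula for the palm node is not preserved in the text above, so the following is only a minimal sketch under a stated assumption: it takes the palm node P to be the centroid of the M white pixels in the circular area, i.e. the mean of their $(x_i, y_i, z_i)$ values. The averaging rule and the function name `palm_node_from_pixels` are hypothetical and not taken from the patent.

```python
from typing import Sequence, Tuple

Point3D = Tuple[float, float, float]


def palm_node_from_pixels(pixels: Sequence[Point3D]) -> Point3D:
    """Hypothetical palm-node estimate: the centroid of the M white pixels.

    Each pixel is (x_i, y_i, z_i): abscissa, ordinate and the distance value
    z_i described in the text. Averaging them is an ASSUMPTION, not the
    patent's (missing) formula.
    """
    m = len(pixels)
    if m < 1:  # the text requires M >= 1
        raise ValueError("the circular area must contain at least one white pixel")
    sx = sum(p[0] for p in pixels)
    sy = sum(p[1] for p in pixels)
    sz = sum(p[2] for p in pixels)
    return (sx / m, sy / m, sz / m)


if __name__ == "__main__":
    # Three made-up white pixels inside the circular area.
    region = [(100.0, 200.0, 800.0), (102.0, 198.0, 810.0), (104.0, 202.0, 790.0)]
    print(palm_node_from_pixels(region))  # (102.0, 200.0, 800.0)
```

A plain mean keeps the estimate cheap enough to run per frame; a weighted or depth-filtered mean would be an equally plausible reading of the truncated definition.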

Embodiment 2

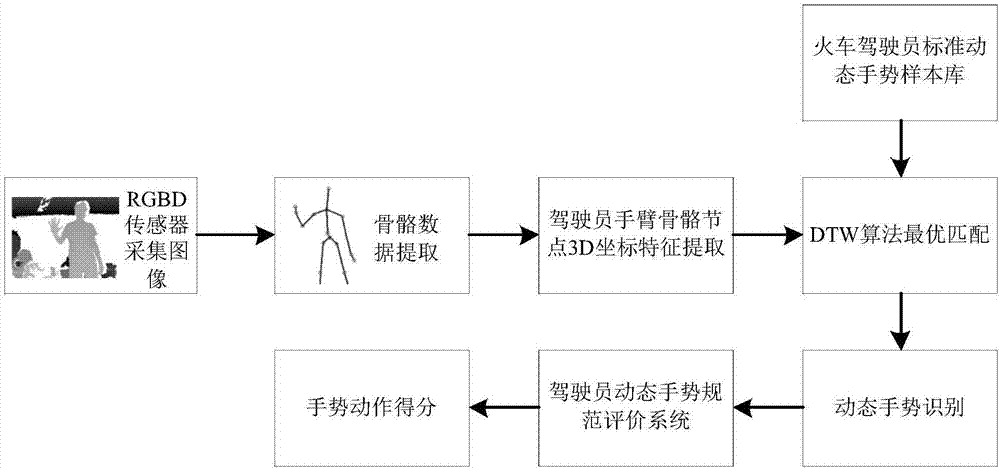

[0099] A real-time arm gesture analysis and evaluation method based on an RGBD depth sensor, as shown in Figure 3, comprising the following steps:

[0100] Step 1: use the RGBD sensor to acquire T initial frames over a period of time, select one of the T initial frames as the t-th initial frame, and extract the motion sequence of the arm skeleton nodes in the t-th initial frame;

[0101] This step includes:

[0102] The t-th initial frame contains the initial palm node $P_1^t$, the wrist node $P_2^t$, the elbow node $P_3^t$, the shoulder node $P_4^t$ and the shoulder center node $P_s^t$. The distance $D_{sn}^t$ from node $P_n^t$ to the shoulder center node $P_s^t$ is obtained by formula (3):

[0103]

[0104] In formula (3), $n = 1, 2, 3, 4$ and $t = 1, 2, \ldots, T$; $D_{sn}^t$ denotes the distance from node $P_n^t$ to the shoulder center node $P_s^t$ in the t-th initial frame, $T$ is the total number of initial frames, and $x_n^t, y_n^t, z_n^t$ respectively represent the coordinat...
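Formula (3) itself is not reproduced here, so the sketch below assumes $D_{sn}^t$ is the ordinary Euclidean distance between $P_n^t$ and $P_s^t$ in 3D. It builds a T x 4 sequence of such distances (n = 1..4 for palm, wrist, elbow and shoulder), one plausible form of the "arm skeleton node motion sequence". The per-frame dictionary layout with keys `"P1"` to `"P4"` and `"Ps"`, and both function names, are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point3D = Tuple[float, float, float]


def shoulder_center_distance(p_n: Point3D, p_s: Point3D) -> float:
    """D_sn^t, ASSUMED to be the Euclidean distance between P_n^t and P_s^t."""
    return math.dist(p_n, p_s)


def distance_sequence(frames: Sequence[Dict[str, Point3D]]) -> List[List[float]]:
    """Return one row per frame t with [D_s1^t, D_s2^t, D_s3^t, D_s4^t].

    Each frame is a dict with hypothetical keys "P1".."P4" (palm, wrist,
    elbow, shoulder) and "Ps" (shoulder center), each an (x, y, z) tuple.
    """
    sequence = []
    for frame in frames:
        p_s = frame["Ps"]
        sequence.append(
            [shoulder_center_distance(frame[f"P{n}"], p_s) for n in range(1, 5)]
        )
    return sequence


if __name__ == "__main__":
    # One made-up frame with the shoulder center at the origin.
    frame = {
        "Ps": (0.0, 0.0, 0.0),
        "P1": (3.0, 4.0, 0.0),
        "P2": (0.0, 0.0, 2.0),
        "P3": (1.0, 2.0, 2.0),
        "P4": (0.0, 1.0, 0.0),
    }
    seq = distance_sequence([frame])
    print([[round(d, 6) for d in row] for row in seq])  # [[5.0, 2.0, 3.0, 1.0]]
```

Measuring every node relative to the shoulder center makes the sequence insensitive to where the driver stands in front of the sensor, which is presumably why a body-anchored reference point is used.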

Embodiment 3

[0113] On the basis of Embodiments 1 and 2, this embodiment provides a real-time gesture analysis and evaluation method based on an RGBD depth sensor, comprising the real-time palm gesture analysis and evaluation method of Embodiment 1 and the real-time arm gesture analysis and evaluation method of Embodiment 2. This embodiment can recognize the driver's palm gestures and dynamic arm gestures simultaneously, evaluate whether the driver's gestures conform to the standard based on the output of the recognition algorithm, and give a score for both the palm gesture and the dynamic arm gesture. It not only monitors the train driver's gestures in real time to help ensure train safety, but also removes the need for manual monitoring of the driver's gestures, reducing the consumption of human resources.
