JND (just-noticeable difference)-based video encoding method and device

A JND-based video coding technology in the field of video coding. It addresses two problems: existing JND models are inaccurate, and they consequently degrade the quality of compressed video. It achieves good visual quality, a more accurate JND model, and improved compression efficiency.

Examples

Example 1

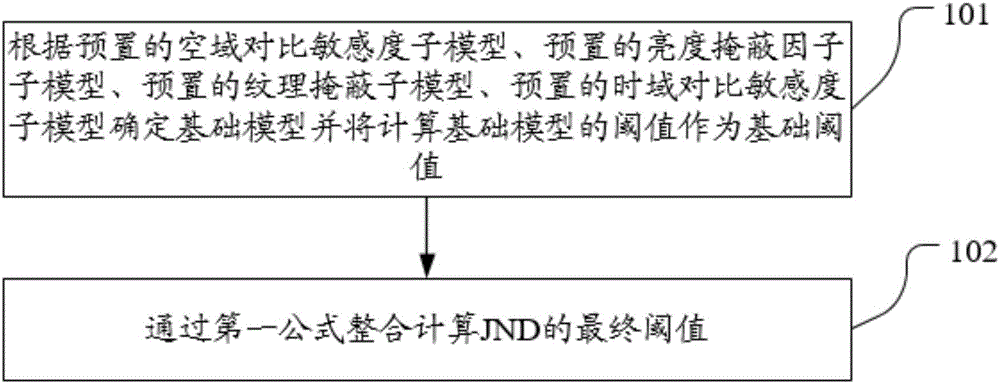

[0045] Referring to Figure 1, a first embodiment of the JND-based video coding method provided by the present invention includes the following steps:

[0046] 101. Determine the base model from the preset spatial contrast sensitivity submodel, the preset luminance masking factor submodel, the preset texture masking submodel, and the preset temporal contrast sensitivity submodel, and calculate the threshold of the base model as the base threshold $T_{\text{basic}}$;

[0047] In this embodiment, the base model is first determined from the preset spatial contrast sensitivity submodel, the preset luminance masking factor submodel, the preset texture masking submodel, and the preset temporal contrast sensitivity submodel, and the threshold of the base model is calculated as the base threshold $T_{\text{basic}}$.
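The text shown here does not state how the four submodels of step 101 are combined into the base model. The Python sketch below only illustrates the structure: each submodel is a function of a per-coefficient context, and, as an assumption borrowed from common DCT-domain JND models, their outputs are multiplied to give $T_{\text{basic}}$. The names `CoeffContext`, `BaseModel`, and the `toy_*` submodels, along with every numeric constant, are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class CoeffContext:
    """Hypothetical per-coefficient inputs; the source does not name them."""
    i: int                # vertical DCT frequency index
    j: int                # horizontal DCT frequency index
    mean_luma: float      # mean block luminance, 0-255
    texture: float        # normalized texture strength, 0 = flat
    temporal_freq: float  # temporal frequency estimate


Submodel = Callable[[CoeffContext], float]


@dataclass(frozen=True)
class BaseModel:
    spatial_csf: Submodel
    luma_mask: Submodel
    texture_mask: Submodel
    temporal_csf: Submodel

    def threshold(self, ctx: CoeffContext) -> float:
        # ASSUMPTION: submodel outputs are combined multiplicatively.
        return (self.spatial_csf(ctx) * self.luma_mask(ctx)
                * self.texture_mask(ctx) * self.temporal_csf(ctx))


# Toy submodels with made-up constants, for illustration only.
def toy_spatial_csf(ctx: CoeffContext) -> float:
    # Threshold grows with spatial frequency.
    return 1.0 + 0.25 * (ctx.i + ctx.j)


def toy_luma_mask(ctx: CoeffContext) -> float:
    # Piecewise luminance adaptation: very dark and very bright blocks tolerate more error.
    if ctx.mean_luma <= 60:
        return (60 - ctx.mean_luma) / 150 + 1
    if ctx.mean_luma >= 170:
        return (ctx.mean_luma - 170) / 425 + 1
    return 1.0


def toy_texture_mask(ctx: CoeffContext) -> float:
    # In this toy model, more texture hides more distortion.
    return 1.0 + ctx.texture


def toy_temporal_csf(ctx: CoeffContext) -> float:
    # In this toy model, a higher temporal frequency raises the threshold.
    return 1.0 + 0.125 * ctx.temporal_freq


model = BaseModel(toy_spatial_csf, toy_luma_mask, toy_texture_mask, toy_temporal_csf)
t_basic = model.threshold(CoeffContext(i=1, j=2, mean_luma=100.0, texture=0.5, temporal_freq=4.0))
print(t_basic)  # → 3.9375
```

Keeping each submodel as a separate callable makes it easy to swap in the patent's actual submodel definitions, whatever the combination rule turns out to be.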

[0048] 102. Using the first formula, $JND = T_{\text{basic}} \times \left(F_1 F_2 F_3 - \alpha F_1 F_2 - \beta F_2 F_3 - \gamma F_1 F_3\right)$, integrate and calculate the final thr...
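The first formula of step 102 can be evaluated directly once $T_{\text{basic}}$, the factors $F_1$, $F_2$, $F_3$, and the coefficients $\alpha$, $\beta$, $\gamma$ are known. The visible text neither defines the factors nor gives coefficient values, so the hypothetical function `integrate_jnd` below simply takes them all as inputs; the example values are made up.

```python
def integrate_jnd(t_basic: float, f1: float, f2: float, f3: float,
                  alpha: float, beta: float, gamma: float) -> float:
    """Evaluate JND = T_basic * (F1*F2*F3 - alpha*F1*F2 - beta*F2*F3 - gamma*F1*F3)."""
    triple = f1 * f2 * f3
    pairwise = alpha * f1 * f2 + beta * f2 * f3 + gamma * f1 * f3
    return t_basic * (triple - pairwise)


# Hypothetical inputs, chosen only so the arithmetic is easy to check.
print(integrate_jnd(2.0, 1.5, 1.5, 1.5, 0.125, 0.125, 0.125))  # → 5.0625
```

Setting $\alpha = \beta = \gamma = 0$ reduces the formula to the plain product $T_{\text{basic}} F_1 F_2 F_3$; with positive factors and coefficients, the subtracted pairwise terms pull the threshold below that product. If the coefficients are large, the bracketed term can reach zero or go negative, so in practice they would presumably be constrained; the excerpt does not say how.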

Example 2

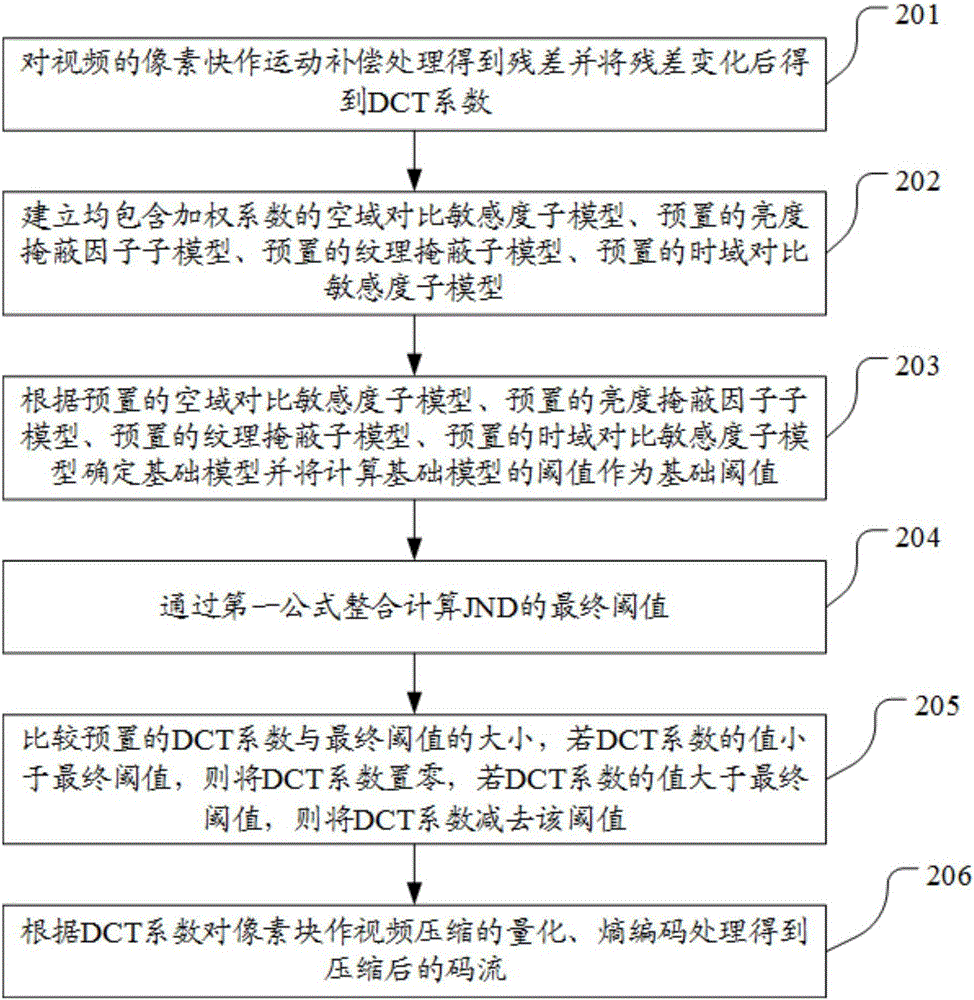

[0050] Referring to Figure 2, a second embodiment of the JND-based video coding method provided by the present invention includes the following steps:

[0051] 201. Perform motion compensation on the pixel blocks of the video to obtain residuals, and transform the residuals to obtain DCT coefficients;

[0052] In this embodiment, motion compensation is first performed on the pixel blocks of the video to obtain residuals, and the residuals are then transformed to obtain DCT coefficients.
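Step 201 is the usual hybrid-coding front end. A minimal plain-Python sketch follows, assuming full-pel motion vectors, a square $n \times n$ block whose displaced position lies entirely inside the reference frame, and a floating-point orthonormal DCT-II (production codecs such as H.264/AVC and HEVC use integer approximations of the DCT instead). The function names `mc_residual` and `dct2` are hypothetical.

```python
import math
from typing import List, Tuple

Block = List[List[float]]


def mc_residual(cur: Block, ref: Block, top: int, left: int,
                mv: Tuple[int, int], n: int = 8) -> Block:
    """Residual = current block minus its full-pel motion-compensated prediction.

    mv is (dy, dx); the displaced block is assumed to lie inside ref (no padding).
    """
    dy, dx = mv
    return [[cur[top + y][left + x] - ref[top + y + dy][left + x + dx]
             for x in range(n)] for y in range(n)]


def dct2(block: Block) -> Block:
    """Orthonormal 2-D DCT-II of an n x n block (direct O(n^4) form, for clarity)."""
    n = len(block)

    def c(k: int) -> float:
        return math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)

    out = [[0.0] * n for _ in range(n)]
    for u in range(n):
        for v in range(n):
            s = 0.0
            for y in range(n):
                for x in range(n):
                    s += (block[y][x]
                          * math.cos((2 * y + 1) * u * math.pi / (2 * n))
                          * math.cos((2 * x + 1) * v * math.pi / (2 * n)))
            out[u][v] = c(u) * c(v) * s
    return out


# Toy frames: the current frame is the reference shifted by (1, 2) plus a constant 4.
ref = [[x + y for x in range(16)] for y in range(16)]
cur = [[x + y + 7 for x in range(16)] for y in range(16)]
res = mc_residual(cur, ref, top=0, left=0, mv=(1, 2))
coeffs = dct2(res)
print(round(coeffs[0][0], 6))  # → 32.0
```

Because the motion vector matches the shift exactly, the residual is a flat block of 4s, so all of its energy lands in the DC coefficient ($8 \times 4 = 32$) and every AC coefficient is numerically zero.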

[0053] 202. Establish a spatial contrast sensitivity submodel, a preset luminance masking factor submodel, a preset texture masking submodel, and a preset temporal contrast sensitivity submodel, each of which includes weighting coefficients;

[0054] In this embodiment, after motion compensation is performed on the video pixel blocks to obtain residuals and the residuals are transformed to obtain DCT coefficients, it is also necessary to establish a spatial contrast sensitivity sub-mod...
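Step 202 states only that each submodel includes weighting coefficients; the truncated text does not say how they enter the submodel. As one hypothetical possibility, the sketch below raises a submodel's output factor to the power of its weight, so a weight of 1 leaves the factor unchanged and a weight of 0 neutralizes it to 1. `WeightedSubmodel` and the two example factors are illustrative assumptions, not the patent's definitions.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class WeightedSubmodel:
    """Hypothetical submodel: a positive factor f(x) modulated by a weight w as f(x) ** w."""
    name: str
    factor: Callable[[float], float]
    weight: float

    def __call__(self, x: float) -> float:
        f = self.factor(x)
        if f <= 0:
            # Masking/sensitivity factors are assumed to be strictly positive.
            raise ValueError(f"{self.name}: factor must be positive, got {f}")
        return f ** self.weight


# Illustrative submodels; inputs and constants are made up for the example.
luma_mask = WeightedSubmodel(
    "luma_mask", lambda mean_luma: 1.0 + abs(mean_luma - 128.0) / 256.0, 0.5)
texture_mask = WeightedSubmodel(
    "texture_mask", lambda edge_density: 1.0 + 3.0 * edge_density, 1.0)

print(texture_mask(1.0))  # → 4.0
```

A power-law weight keeps every weighted factor positive, which matters if the factors are later multiplied together as in the first formula of the first embodiment.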
