Intelligent extraction method for built-up areas based on nighttime light data

A nighttime light extraction technology, applied to instruments, character and pattern recognition, computer components, and related fields. It addresses three shortcomings of threshold methods: the threshold is strongly influenced by acquisition time and regional differences, a single threshold cannot be adapted to built-up area extraction, and accuracy is limited.

Status: Inactive; publication date: 2016-11-16

Sichuan Institute of Remote Sensing Information Surveying and Mapping (四川省遥感信息测绘院)

Cites: 5; cited by: 22


AI Technical Summary

Problems solved by technology

At present, urban built-up areas are mainly extracted from land use survey data or from interpreted TM/ETM or MODIS imagery, but processing is slow, and the extracted built-up areas differ to some extent from the city's actual level of development. Extracting urban extents from remote sensing data has therefore attracted growing attention, and the most common approach is the threshold method. However, the accuracy of extracting built-up areas from nighttime light remote sensing data by thresholding is limited, and the choice of threshold is strongly affected by acquisition time and regional differences, so the same threshold cannot be applied to built-up area extraction for different cities at different times.
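To make the threshold limitation concrete, here is a minimal Python sketch of the threshold method applied to two cities. The radiance grids, the function names `threshold_extract` and `built_up_share`, and the threshold of 20 are all hypothetical and chosen only for illustration. Both grids share the same ranking of bright and dark pixels, yet one fixed cut-off marks five of nine pixels as built-up in the brighter city and only two in the dimmer one.

```python
def threshold_extract(radiance, threshold):
    """Return a 0/1 built-up mask: 1 where radiance >= threshold."""
    return [[1 if v >= threshold else 0 for v in row] for row in radiance]


def built_up_share(mask):
    """Fraction of pixels labelled built-up."""
    cells = [v for row in mask for v in row]
    return sum(cells) / len(cells)


# Hypothetical toy radiance grids, not real VIIRS values.
# Same spatial pattern, but the second city is uniformly dimmer.
bright_city = [[60, 55, 40], [50, 45, 12], [8, 6, 3]]
dim_city = [[25, 20, 9], [18, 14, 4], [3, 2, 1]]

for name, grid in (("bright", bright_city), ("dim", dim_city)):
    print(name, built_up_share(threshold_extract(grid, 20)))
```

A threshold tuned for one city would have to be retuned for the other, which is the dependence on city and acquisition time described above.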

[0003] In recent years, some researchers have extracted urban built-up areas with automatic classification algorithms that combine nighttime light data with vegetation index data. However, introducing multi-source data also makes the classification algorithms more complex. The problem left for existing technology is how to make effective use of this multi-source information by constructing an intelligent optimization algorithm that adapts to each city's own characteristics and to the acquisition time, so that urban built-up areas can be extracted intelligently.
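The classification step, like the SVM training with cross-validation that the invention uses, can be sketched in plain Python under stated assumptions. Each pixel is assumed to have two features, normalized nighttime radiance and NDVI. For self-containment this uses a linear SVM trained with the Pegasos sub-gradient method plus k-fold cross-validation. This is a swapped-in simplification, not the SVM configuration of the invention (the source does not give a kernel or parameters), and the sample values and function names are hypothetical.

```python
import random


def train_linear_svm(X, y, lam=0.01, epochs=200, seed=0):
    """Pegasos sub-gradient training of a linear SVM; labels must be +1/-1."""
    rng = random.Random(seed)
    # Append a constant 1 so the last weight acts as a (regularised) bias.
    data = [(list(x) + [1.0], t) for x, t in zip(X, y)]
    w = [0.0] * len(data[0][0])
    step = 0
    for _ in range(epochs):
        rng.shuffle(data)
        for x, t in data:
            step += 1
            eta = 1.0 / (lam * step)
            margin = t * sum(wi * xi for wi, xi in zip(w, x))
            # Regularisation shrink, then hinge-loss step if the margin is violated.
            w = [(1.0 - eta * lam) * wi for wi in w]
            if margin < 1.0:
                w = [wi + eta * t * xi for wi, xi in zip(w, x)]
    return w


def predict(w, x):
    """Return +1 (urban) or -1 (non-urban) for one feature tuple."""
    score = sum(wi * xi for wi, xi in zip(w, list(x) + [1.0]))
    return 1 if score >= 0 else -1


def k_fold_accuracy(X, y, k=5, **svm_kwargs):
    """Accuracy over k interleaved folds, each held out once."""
    folds = [list(range(i, len(X), k)) for i in range(k)]
    correct = 0
    for fold in folds:
        held_out = set(fold)
        train = [i for i in range(len(X)) if i not in held_out]
        w = train_linear_svm([X[i] for i in train], [y[i] for i in train], **svm_kwargs)
        correct += sum(predict(w, X[i]) == y[i] for i in fold)
    return correct / len(X)


# Hypothetical samples: (normalised radiance, NDVI).
urban = [(0.8 + 0.02 * i, 0.2 - 0.01 * i) for i in range(10)]  # bright, sparse vegetation
rural = [(0.1 + 0.02 * i, 0.7 + 0.01 * i) for i in range(10)]  # dark, vegetated
X = urban + rural
y = [1] * len(urban) + [-1] * len(rural)

print("5-fold accuracy:", k_fold_accuracy(X, y))
w = train_linear_svm(X, y)  # final model trained on all samples
```

Cross-validation estimates how well the model generalizes before it is used to label unseen pixels. A production system would more likely call an established SVM library with a kernel.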


Embodiment

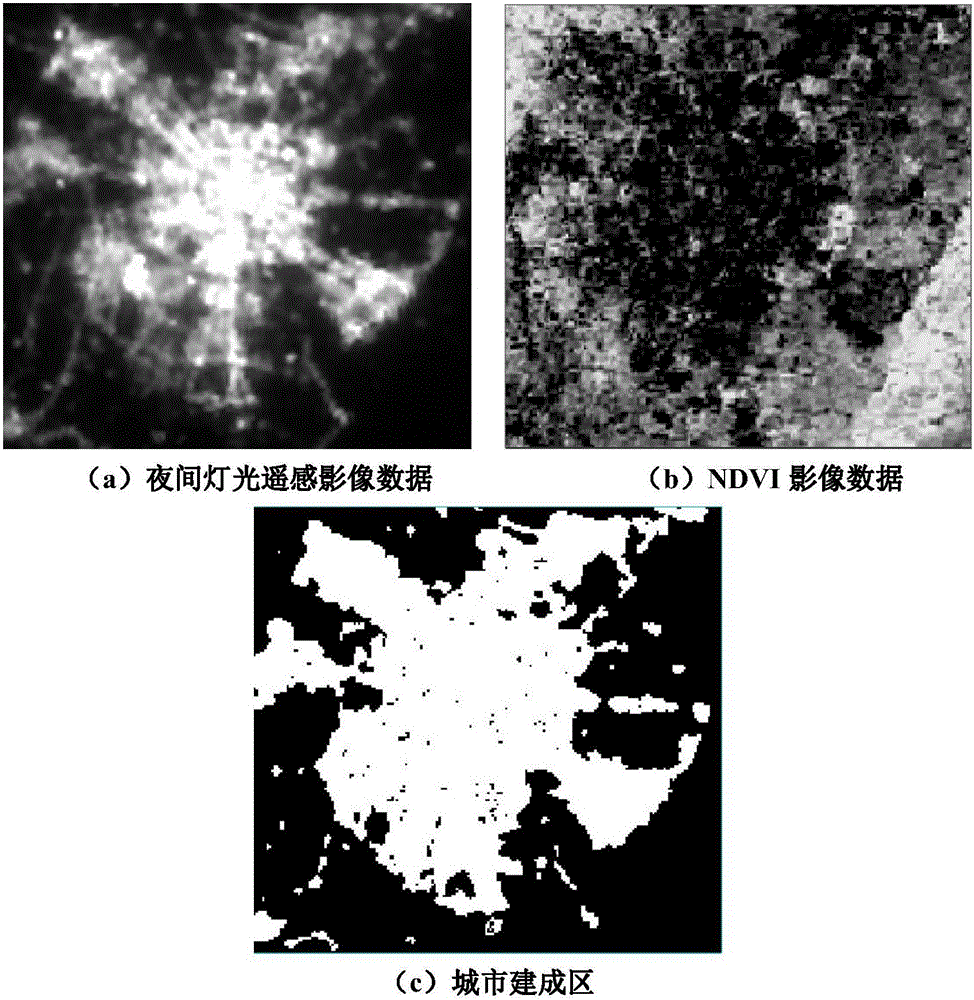

[0130] Taking the NPP/VIIRS nighttime light remote sensing data and MODIS NDVI data of a certain city as an example, the algorithm of the present invention is used to extract the urban built-up area automatically; image 3 compares the original remote sensing data with the processing results.
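The source does not detail the SVM-based region growing step used in this embodiment. Below is a minimal sketch of one plausible reading, stated as an assumption: growth starts from urban sample pixels and spreads to 4-connected neighbours that a trained classifier labels as urban. The `is_urban` rule is a hypothetical hand-set linear decision standing in for a trained SVM, and the grid is invented.

```python
from collections import deque


def region_grow(features, seeds, is_urban):
    """Grow built-up regions from seed pixels.

    features: 2-D list of per-pixel feature tuples, e.g. (radiance, ndvi).
    seeds:    iterable of (row, col) urban sample pixels.
    is_urban: callable(feature_tuple) -> bool, e.g. a trained SVM decision.
    Returns a 0/1 mask of the grown built-up area.
    """
    rows, cols = len(features), len(features[0])
    mask = [[0] * cols for _ in range(rows)]
    queue = deque()
    for r, c in seeds:
        if not mask[r][c] and is_urban(features[r][c]):
            mask[r][c] = 1
            queue.append((r, c))
    # Breadth-first growth over 4-connected neighbours.
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols
                    and not mask[nr][nc] and is_urban(features[nr][nc])):
                mask[nr][nc] = 1
                queue.append((nr, nc))
    return mask


# Hypothetical per-pixel features: (normalised radiance, NDVI).
U = (0.9, 0.1)  # bright, little vegetation
R = (0.1, 0.8)  # dark, vegetated
grid = [
    [U, U, R, R],
    [U, R, R, R],
    [R, R, R, U],  # isolated bright pixel at (2, 3)
    [R, R, R, R],
]


def is_urban(f):
    """Hypothetical stand-in for a trained SVM decision function."""
    return 1.2 * f[0] - 1.0 * f[1] - 0.3 >= 0


mask = region_grow(grid, seeds=[(0, 0)], is_urban=is_urban)
for row in mask:
    print(row)
```

Unlike per-pixel classification, growing from seeds keeps only pixels connected to confirmed urban samples, so the isolated bright pixel at (2, 3) is left out even though the classifier labels it urban. Whether the invention uses 4- or 8-connectivity is not stated in the source.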


Abstract

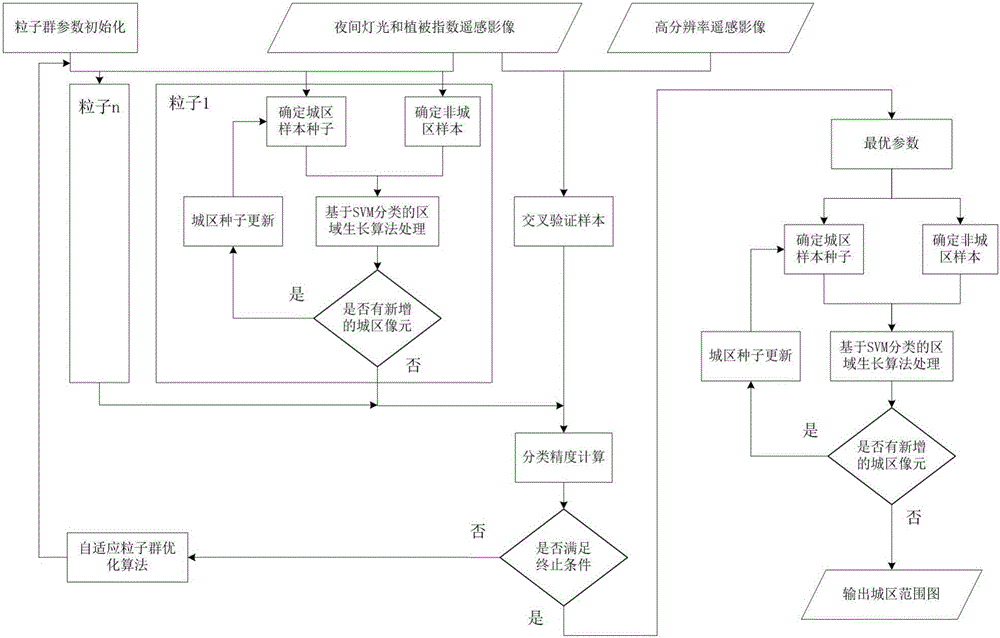

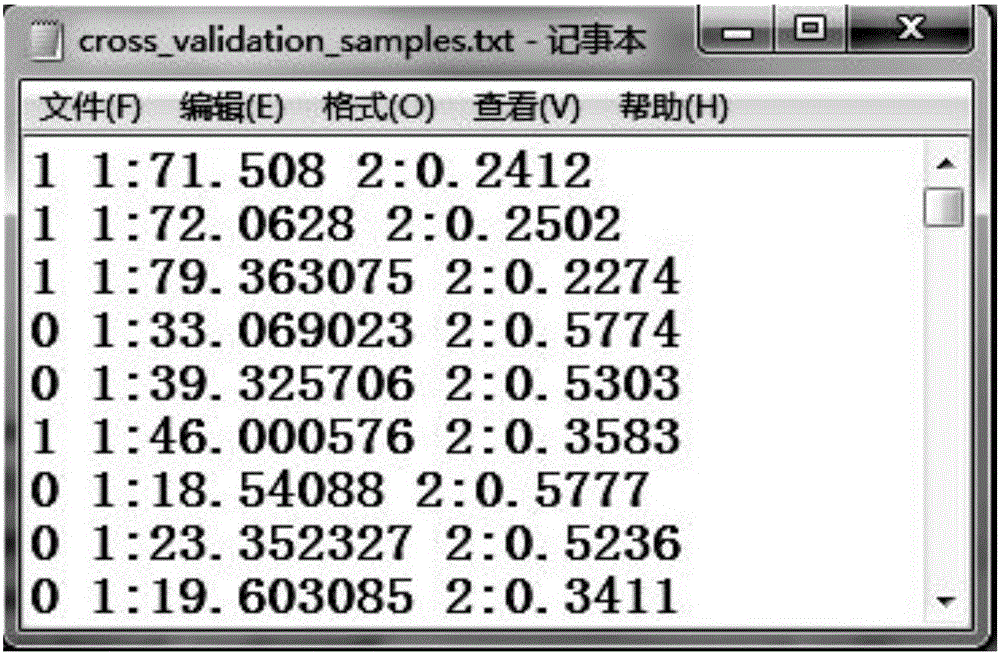

The invention relates to an intelligent method for extracting built-up areas from nighttime light data. The method comprises the following steps: an adaptive particle swarm optimization (PSO) algorithm is used to optimally select the sample-selection parameters for VIIRS (Visible Infrared Imaging Radiometer Suite) nighttime light image samples and MODIS (Moderate Resolution Imaging Spectroradiometer) normalized difference vegetation index (NDVI) image samples; an SVM (Support Vector Machine) model is trained for a region growing algorithm based on SVM classification, and the model's accuracy is validated by cross-validation; and, according to the optimized parameters, urban and non-urban samples are determined and the SVM-based region growing algorithm is applied to extract the extent of the urban built-up area. By adaptively optimizing the sample-selection parameters at the source of sample selection, and by combining SVM classification with region growing, the method improves the efficiency and accuracy of built-up area extraction from nighttime light data.
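The abstract does not give the PSO variant, which parameters are optimized, or the fitness function. The following is a minimal sketch of one common adaptive PSO, in which the inertia weight decreases linearly from 0.9 to 0.4; treat it as an assumption, not the invention's algorithm. The two parameters (a radiance threshold and an NDVI threshold for picking samples) and `toy_fitness` are hypothetical. In a full pipeline the fitness could instead be, for example, the cross-validated accuracy of an SVM trained on the samples those parameters select.

```python
import random


def adaptive_pso(fitness, bounds, n_particles=20, n_iter=100,
                 w_max=0.9, w_min=0.4, c1=2.0, c2=2.0, seed=0):
    """Maximise fitness(params) over the box bounds = [(lo, hi), ...]."""
    rng = random.Random(seed)
    dim = len(bounds)
    vmax = [0.2 * (hi - lo) for lo, hi in bounds]  # per-dimension speed limit
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_val = [fitness(p) for p in pos]
    g = max(range(n_particles), key=pbest_val.__getitem__)
    gbest, gbest_val = pbest[g][:], pbest_val[g]
    for t in range(n_iter):
        # Adaptive inertia weight: decreases linearly from w_max to w_min,
        # shifting the swarm from exploration to exploitation.
        w = w_max - (w_max - w_min) * t / max(n_iter - 1, 1)
        for i in range(n_particles):
            for d, (lo, hi) in enumerate(bounds):
                r1, r2 = rng.random(), rng.random()
                v = (w * vel[i][d]
                     + c1 * r1 * (pbest[i][d] - pos[i][d])
                     + c2 * r2 * (gbest[d] - pos[i][d]))
                vel[i][d] = max(-vmax[d], min(vmax[d], v))
                pos[i][d] = max(lo, min(hi, pos[i][d] + vel[i][d]))  # stay in box
            val = fitness(pos[i])
            if val > pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val > gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val


# Hypothetical stand-in fitness peaking at radiance threshold 30, NDVI threshold 0.3.
def toy_fitness(p):
    return -(((p[0] - 30.0) / 100.0) ** 2 + (p[1] - 0.3) ** 2)


best, score = adaptive_pso(toy_fitness, bounds=[(0.0, 100.0), (0.0, 1.0)])
print("best parameters:", best, "fitness:", score)
```

Decreasing the inertia weight over time is one simple way to make PSO adaptive; other schemes adjust it from swarm diversity or fitness feedback, and the source does not say which is used.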

Description

Technical field

[0001] The invention relates to an extraction method, in particular to an intelligent method for extracting built-up areas based on nighttime light data.

Background art

[0002] With accelerating urbanization, research on the spatial layout and expansion of urbanized regions is becoming increasingly important at regional and even national scales. At present, urban built-up areas are mainly extracted from land use survey data or from interpreted TM/ETM or MODIS imagery, but processing is slow, and the extracted built-up areas differ to some extent from the city's actual level of development. Extracting urban extents from remote sensing data has therefore attracted growing attention. The most common approach is the threshold method. However, the accuracy of extracting built-up areas from nighttime light remote sensing data by thresholding is limited, and the...


Application Information


IPC(8): G06K9/00; G06K9/62

CPC: G06V20/176; G06F18/2411

Inventors: Zhang Qiao (张荞), Huang Qinglun (黄青伦), Chen Hui (陈慧), Luo Xiang (罗想), Wang Ping (王萍), Zhang Yanmei (张艳梅)

Owner: Sichuan Institute of Remote Sensing Information Surveying and Mapping (四川省遥感信息测绘院)
