Indoor three-dimensional scene reconstruction method employing plane characteristics

This technology, in the field of indoor three-dimensional scene reconstruction, addresses the problems of inaccurate matching point sets, reduced accuracy, and deviation of transformation matrices, and achieves high robustness, good speed, and good accuracy.


Embodiment Construction

[0049] Specific embodiments of the present invention are described in detail below with reference to the accompanying drawings.

[0050] In this embodiment, a laboratory with a relatively complex environment is selected as the indoor scene to be reconstructed, and a Kinect camera with an image resolution of 640×480 is used. The method is implemented in C++ with the PCL (Point Cloud Library) under Ubuntu and runs on a computer with an Intel dual-core 2.93 GHz CPU. To verify the real-time performance and stability of the method, the Kinect camera is hand-held and moved freely through the scene to collect data.
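Each 640×480 Kinect depth frame must be turned into a 3D point cloud before PCL can process it. The sketch below shows the standard pinhole back-projection that performs this step, written without PCL so it stays self-contained. The intrinsics (fx = fy = 525, cx = 319.5, cy = 239.5) and the millimetre depth scale are commonly quoted Kinect defaults assumed here, not values given in the patent, and `Intrinsics`, `Point3`, and `depthToPointCloud` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// ASSUMED pinhole intrinsics: commonly quoted Kinect defaults, not taken
// from the patent. A real system should use its own calibration.
struct Intrinsics {
    double fx = 525.0, fy = 525.0;  // focal lengths in pixels
    double cx = 319.5, cy = 239.5;  // principal point for a 640x480 image
    double depthScale = 1000.0;     // raw depth units per metre (assumed mm)
};

struct Point3 { double x, y, z; };

// Back-projects a row-major depth image into camera-frame points (metres).
// Pixels with raw depth 0 are treated as "no measurement" and skipped.
std::vector<Point3> depthToPointCloud(const std::vector<std::uint16_t>& depth,
                                      int width, int height,
                                      const Intrinsics& K) {
    assert(depth.size() == static_cast<std::size_t>(width) * height);
    std::vector<Point3> cloud;
    cloud.reserve(depth.size());
    for (int v = 0; v < height; ++v) {
        for (int u = 0; u < width; ++u) {
            std::uint16_t raw = depth[static_cast<std::size_t>(v) * width + u];
            if (raw == 0) continue;
            double z = raw / K.depthScale;
            // Pinhole model: x = (u - cx) * z / fx, y = (v - cy) * z / fy
            cloud.push_back({(u - K.cx) * z / K.fx, (v - K.cy) * z / K.fy, z});
        }
    }
    return cloud;
}
```

In a PCL-based program the same loop would typically fill a `pcl::PointCloud<pcl::PointXYZ>`; the plain struct is used here only to avoid the library dependency.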

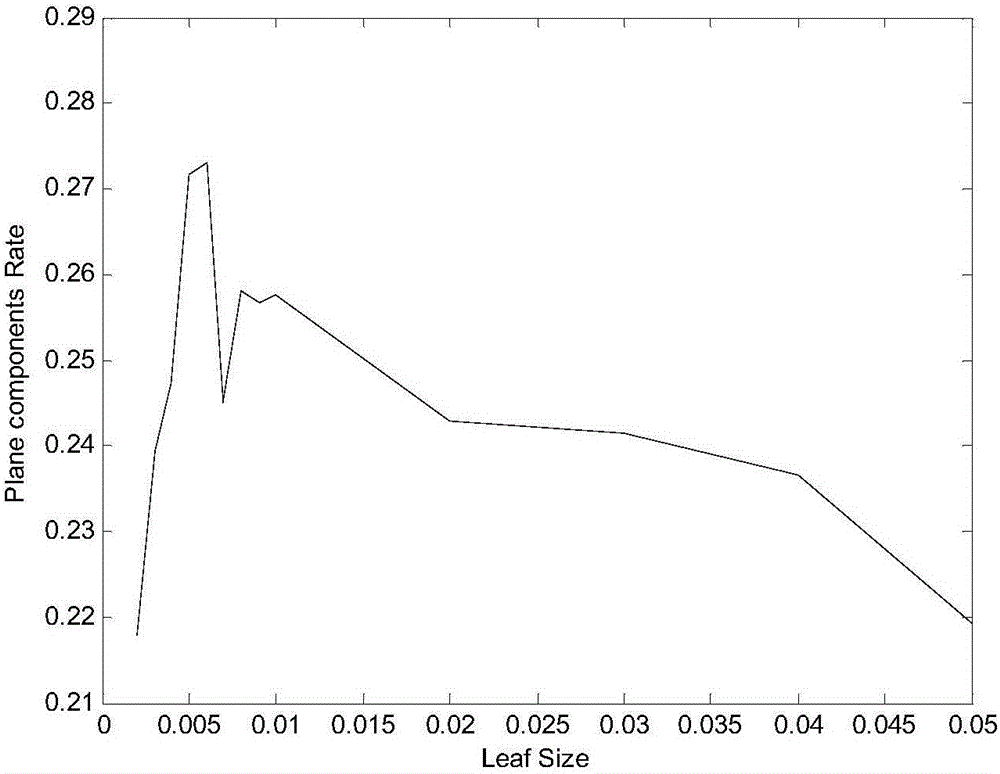

[0051] The Kinect camera serves as the acquisition device for both color and depth images: RGB images and depth images are first captured with it. Feature extraction is then performed on the color image, and a preliminary rotation matrix is established. At the same time, the single-frame r...
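The excerpt does not say how the preliminary rotation matrix is computed from the color-image features. One common approach is Horn's closed-form quaternion solution to absolute orientation, sketched below under the assumption that matched features have already been lifted to 3D point pairs using the depth image. The rotation is the eigenvector (for the largest eigenvalue) of a 4×4 matrix built from the cross-covariance of the centred point sets, and the translation follows from the centroids. This is a standard technique swapped in for illustration, not necessarily the patent's own solver, and `estimateRigidTransform` and `jacobiEigen4` are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>;
using Mat4 = std::array<std::array<double, 4>, 4>;

// Cyclic Jacobi eigen-decomposition of a symmetric 4x4 matrix. On return the
// diagonal of A holds the eigenvalues and the columns of V the eigenvectors.
static void jacobiEigen4(Mat4& A, Mat4& V) {
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j) V[i][j] = (i == j) ? 1.0 : 0.0;
    for (int sweep = 0; sweep < 50; ++sweep) {
        double off = 0.0;
        for (int p = 0; p < 4; ++p)
            for (int q = p + 1; q < 4; ++q) off += A[p][q] * A[p][q];
        if (off < 1e-30) break;
        for (int p = 0; p < 4; ++p) {
            for (int q = p + 1; q < 4; ++q) {
                if (A[p][q] == 0.0) continue;
                // Rotation angle chosen so that the (p, q) entry becomes zero.
                double theta = (A[q][q] - A[p][p]) / (2.0 * A[p][q]);
                double t = (theta >= 0 ? 1.0 : -1.0) /
                           (std::fabs(theta) + std::sqrt(theta * theta + 1.0));
                double c = 1.0 / std::sqrt(t * t + 1.0), s = t * c;
                for (int k = 0; k < 4; ++k) {  // A <- A * J
                    double akp = A[k][p], akq = A[k][q];
                    A[k][p] = c * akp - s * akq;
                    A[k][q] = s * akp + c * akq;
                }
                for (int k = 0; k < 4; ++k) {  // A <- J^T * A
                    double apk = A[p][k], aqk = A[q][k];
                    A[p][k] = c * apk - s * aqk;
                    A[q][k] = s * apk + c * aqk;
                }
                for (int k = 0; k < 4; ++k) {  // V <- V * J
                    double vkp = V[k][p], vkq = V[k][q];
                    V[k][p] = c * vkp - s * vkq;
                    V[k][q] = s * vkp + c * vkq;
                }
            }
        }
    }
}

// Horn's quaternion method: finds R, t minimising sum ||R*src[i] + t - dst[i]||^2
// over matched 3D pairs. ASSUMES the pairs come from already-matched features.
void estimateRigidTransform(const std::vector<Vec3>& src,
                            const std::vector<Vec3>& dst, Mat3& R, Vec3& t) {
    assert(src.size() == dst.size() && src.size() >= 3);
    const double n = static_cast<double>(src.size());
    Vec3 ms{0, 0, 0}, md{0, 0, 0};  // centroids
    for (std::size_t i = 0; i < src.size(); ++i)
        for (int a = 0; a < 3; ++a) { ms[a] += src[i][a] / n; md[a] += dst[i][a] / n; }
    Mat3 S{};  // cross-covariance S[a][b] = sum (src_a - ms_a) * (dst_b - md_b)
    for (std::size_t i = 0; i < src.size(); ++i)
        for (int a = 0; a < 3; ++a)
            for (int b = 0; b < 3; ++b)
                S[a][b] += (src[i][a] - ms[a]) * (dst[i][b] - md[b]);
    const double xx = S[0][0], xy = S[0][1], xz = S[0][2];
    const double yx = S[1][0], yy = S[1][1], yz = S[1][2];
    const double zx = S[2][0], zy = S[2][1], zz = S[2][2];
    Mat4 N = {{{xx + yy + zz, yz - zy, zx - xz, xy - yx},
               {yz - zy, xx - yy - zz, xy + yx, zx + xz},
               {zx - xz, xy + yx, -xx + yy - zz, yz + zy},
               {xy - yx, zx + xz, yz + zy, -xx - yy + zz}}};
    Mat4 V;
    jacobiEigen4(N, V);
    int best = 0;  // index of the largest eigenvalue
    for (int i = 1; i < 4; ++i)
        if (N[i][i] > N[best][best]) best = i;
    const double w = V[0][best], x = V[1][best], y = V[2][best], z = V[3][best];
    // Unit quaternion (w, x, y, z) -> rotation matrix
    R = {{{w * w + x * x - y * y - z * z, 2 * (x * y - w * z), 2 * (x * z + w * y)},
          {2 * (x * y + w * z), w * w - x * x + y * y - z * z, 2 * (y * z - w * x)},
          {2 * (x * z - w * y), 2 * (y * z + w * x), w * w - x * x - y * y + z * z}}};
    for (int a = 0; a < 3; ++a)
        t[a] = md[a] - (R[a][0] * ms[0] + R[a][1] * ms[1] + R[a][2] * ms[2]);
}
```

Because every mismatched pair biases this least-squares estimate, such a solver is normally run inside an outlier-rejection loop (for example RANSAC); how the patent itself handles inaccurate matches is not shown in this excerpt.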
