Method and system for data processing for robot action expression learning

A data processing technology and data processing device applied in the field of intelligent robots. It can solve problems such as the failure to develop robots, and it has the effect of enriching forms of communication and improving robot intelligence.

Examples

Embodiment 1

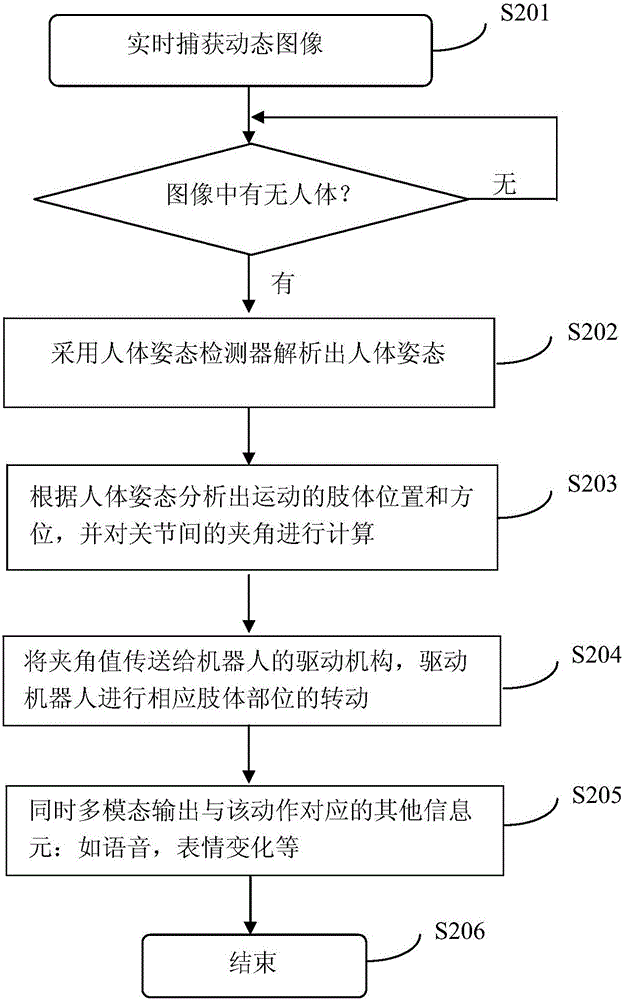

[0063] Figure 2 is an overall flow chart of motion imitation according to an embodiment of the present invention. The method begins with step S201, in which the robot captures dynamic images in real time. For example, the target motion can be captured and recorded using mechanical, acoustic, electromagnetic, optical, inertial navigation, or other motion capture technologies.

[0064] Image processing, pattern recognition, and related techniques are combined to determine whether the captured image contains a human body. In one embodiment, a human body detection algorithm based on HOG (histogram of oriented gradients) features may be used to locate the human body in the image, after which the image is normalized so that the person is approximately at the center of the whole image.
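The centering step can be sketched as follows. This is a minimal sketch, assuming a HOG-based people detector (for example OpenCV's `cv2.HOGDescriptor` with its default people detector, not shown) has already returned a bounding box `(x, y, w, h)`. The helper name `center_person`, the list-of-rows image type, and the zero-padding policy are hypothetical choices for illustration; the subsequent resize to a fixed size is omitted.

```python
from typing import List, Tuple

Image = List[List[int]]  # grayscale image stored as rows of pixel values (assumed representation)


def center_person(image: Image, box: Tuple[int, int, int, int],
                  out_h: int, out_w: int, fill: int = 0) -> Image:
    """Return an out_h x out_w crop whose center is the center of `box`.

    Pixels that fall outside the source image are set to `fill`, so the
    detected person always ends up in the middle of the output.
    """
    x, y, w, h = box
    cx, cy = x + w // 2, y + h // 2            # center of the detected person
    top, left = cy - out_h // 2, cx - out_w // 2
    src_h, src_w = len(image), len(image[0])
    out: Image = []
    for r in range(top, top + out_h):
        row = []
        for c in range(left, left + out_w):
            inside = 0 <= r < src_h and 0 <= c < src_w
            row.append(image[r][c] if inside else fill)
        out.append(row)
    return out
```

For a person detected near the bottom-right corner, the crop is padded so that the box center still maps to the middle pixel of the output.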

[0065] If there is no human body, the system continues to capture images. If there is a human body, the human body posture is analyzed in step S202, for example by using a human body posture detector.
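The control flow of steps S201 and S202 can be sketched as a generator over incoming frames. The names `posture_stream`, `has_human`, and `estimate_pose` are hypothetical: `has_human` stands in for a detector such as the HOG-based one above, and `estimate_pose` for a human body posture detector. Neither is specified by the source.

```python
from typing import Callable, Iterable, Iterator, TypeVar

Frame = TypeVar("Frame")
Pose = TypeVar("Pose")


def posture_stream(frames: Iterable[Frame],
                   has_human: Callable[[Frame], bool],
                   estimate_pose: Callable[[Frame], Pose]) -> Iterator[Pose]:
    """Yield one posture estimate per captured frame that contains a human body."""
    for frame in frames:              # S201: frames arrive from the camera in real time
        if not has_human(frame):      # no human body: keep capturing
            continue
        yield estimate_pose(frame)    # S202: analyze the human body posture
```

Usage with placeholder string frames: `list(posture_stream(["empty", "person:wave", "empty", "person:bow"], lambda f: f.startswith("person"), lambda f: f.split(":")[1]))` evaluates to `["wave", "bow"]`. A generator fits the real-time setting because the robot keeps capturing indefinitely instead of stopping after the first detection.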

[...

Embodiment 2

[0079] As shown in Figure 5, yet another specific embodiment of the inventive concept is provided. In this embodiment, the method starts at step S101, in which a series of actions performed by the target over a period of time is captured and recorded. This step is again performed, for example, by an optical sensing component such as the robot's camera. The images then require considerable preprocessing as needed; for example, the human body can be accurately extracted from a complex background to obtain a foreground image of the human body. In the present invention, depth information is obtained from stereo vision rather than monocular vision, and the three-dimensional human body posture is recovered from the images. This ensures the accuracy of the captured motion.
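Recovering depth from stereo vision typically relies on triangulation in a rectified camera pair, where depth is $Z = fB/d$ for focal length $f$ (pixels), baseline $B$, and disparity $d = u_L - u_R$. The sketch below applies that standard relation to joint keypoints matched in both images. It assumes the cameras are already calibrated and rectified and that joints have been matched by name; `StereoRig`, `triangulate`, and `triangulate_pose` are hypothetical names, not the patent's method.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class StereoRig:
    """Calibrated, rectified stereo camera pair (assumed)."""
    focal_px: float    # focal length in pixels, shared by both cameras
    baseline_m: float  # distance between the camera centers in meters
    cx: float          # principal point x (pixels)
    cy: float          # principal point y (pixels)


def triangulate(rig: StereoRig, u_left: float, u_right: float, v: float) -> Tuple[float, float, float]:
    """Recover (X, Y, Z) in the left-camera frame from one matched pixel pair."""
    disparity = u_left - u_right
    if disparity <= 0:
        raise ValueError("a visible point must have positive disparity")
    z = rig.focal_px * rig.baseline_m / disparity   # depth: Z = f * B / d
    x = (u_left - rig.cx) * z / rig.focal_px        # back-project the column
    y = (v - rig.cy) * z / rig.focal_px             # back-project the row
    return x, y, z


def triangulate_pose(rig: StereoRig,
                     left: Dict[str, Tuple[float, float]],
                     right: Dict[str, Tuple[float, float]]) -> Dict[str, Tuple[float, float, float]]:
    """Lift 2D joints (u, v) seen in both images to a 3D pose keyed by joint name."""
    return {name: triangulate(rig, left[name][0], right[name][0], left[name][1])
            for name in left if name in right}
```

For example, with $f = 500$ px and $B = 0.1$ m, a joint with a disparity of 25 px lies at a depth of 2 m.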

[0080] In addition, human motion key frames must be extracted accurately. When the robot captures the frames of the human action sequence through i...
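The source text is truncated before it describes how key frames are chosen, so the sketch below swaps in a common, simple technique: a frame becomes a key frame when its pose differs from the most recent key frame by more than a threshold (mean per-joint Euclidean distance). The function names, the pose representation, and the threshold rule are all assumptions for illustration.

```python
import math
from typing import List, Sequence, Tuple

Joint = Tuple[float, float, float]
Pose = Sequence[Joint]  # one 3D point per joint, in a fixed joint order (assumed)


def mean_joint_distance(a: Pose, b: Pose) -> float:
    """Average Euclidean distance between corresponding joints of two poses."""
    return sum(math.dist(p, q) for p, q in zip(a, b)) / len(a)


def select_key_frames(poses: Sequence[Pose], threshold: float) -> List[int]:
    """Return key-frame indices: the first frame, plus every frame whose pose
    differs from the most recent key frame by more than `threshold`."""
    if not poses:
        return []
    keys = [0]
    for i in range(1, len(poses)):
        if mean_joint_distance(poses[keys[-1]], poses[i]) > threshold:
            keys.append(i)
    return keys
```

Comparing against the last key frame rather than the previous frame keeps slow, steady motions from being skipped, because small per-frame changes accumulate until they cross the threshold.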

Embodiment 3

[0108] The present invention also provides a data processing device for robot learning of action expression, which includes:

[0109] A motion capture module, which is used to capture and record a series of actions performed by the target over a period of time;

[0110] An associated information identification and recording module, which is used to synchronously identify and record the information sets associated with the captured series of actions, each information set being composed of information elements;

[0111] A sorting module, which is used to organize the recorded actions and their associated information sets and store them in the robot's memory bank according to their correspondence;

[0112] An action imitation module, which is used, when the robot receives an action output instruction, to retrieve from the memory bank the stored information set that matches the content to be expressed and to make an action corresponding to the informat...
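The memory bank and the matching step of the action imitation module can be sketched as below. Everything here is a hypothetical illustration: actions are placeholder label sequences, information elements are strings, and "matching" is approximated by counting shared information elements, a similarity rule the source does not specify. The capture and identification modules are represented only by the `(action, info_elements)` pairs passed to `store`.

```python
from dataclasses import dataclass, field
from typing import AbstractSet, FrozenSet, Iterable, List, Optional, Sequence, Tuple


@dataclass
class MemoryEntry:
    action: Tuple[str, ...]    # e.g. key-pose labels (placeholder representation)
    info_set: FrozenSet[str]   # information elements recorded with the action


@dataclass
class ActionMemory:
    """Hypothetical stand-in for the robot's memory bank."""
    entries: List[MemoryEntry] = field(default_factory=list)

    def store(self, action: Sequence[str], info_elements: Iterable[str]) -> None:
        # Sorting module: keep each action paired with its associated information set.
        self.entries.append(MemoryEntry(tuple(action), frozenset(info_elements)))

    def recall(self, content: AbstractSet[str]) -> Optional[Tuple[str, ...]]:
        # Action imitation module: choose the action whose information set shares the
        # most elements with the content to be expressed (assumed similarity rule).
        best: Optional[Tuple[str, ...]] = None
        best_score = 0
        for entry in self.entries:
            score = len(entry.info_set & content)
            if score > best_score:
                best, best_score = entry.action, score
        return best
```

Usage: after `mem.store(["raise_arm", "wave_hand"], {"greeting", "hello"})`, calling `mem.recall({"hello"})` returns `("raise_arm", "wave_hand")`, and a request with no shared elements returns `None`, so the robot can fall back to another behavior.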
