Camera tracking method and device

A camera tracking technology, applied in the field of computer vision, that can solve problems such as estimation errors that are difficult to eliminate.

Examples

Embodiment 1

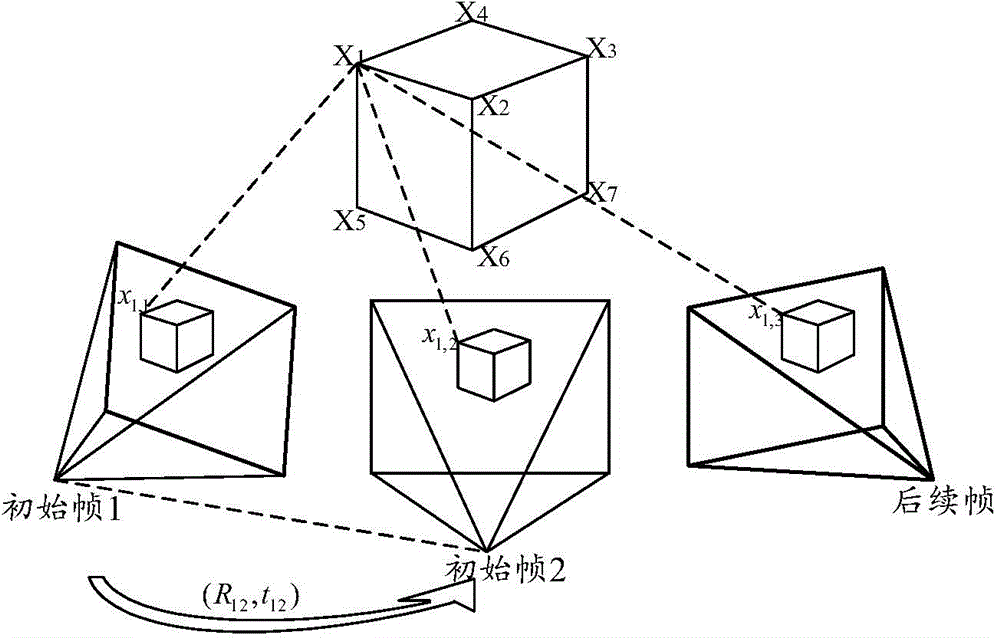

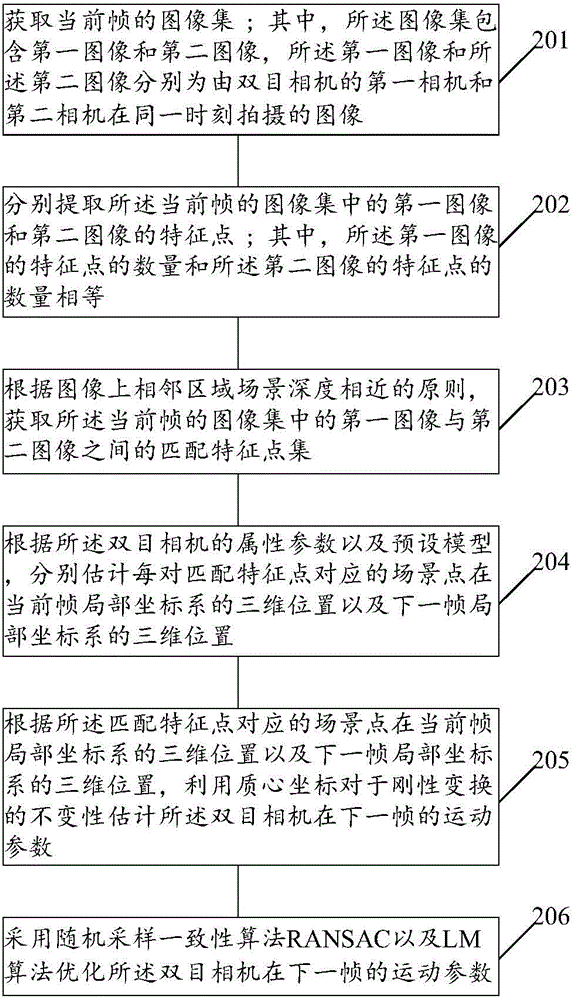

[0149] Figure 2 is a flowchart of a camera tracking method provided by an embodiment of the present invention. As shown in Figure 2, the method may include the following steps:

[0150] 201: Obtain the image set of the current frame, wherein the image set includes a first image and a second image, which are images captured at the same moment by the first camera and the second camera of the binocular camera, respectively.

[0151] The image set of the current frame belongs to the video sequence captured by the binocular camera; the video sequence is the collection of image sets captured by the binocular camera over a period of time.
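The relationship between an image set and the video sequence described in step 201 can be sketched as a small data structure. This is only an illustrative sketch: the names `ImageSet`, `timestamp`, and `current_frame`, and the representation of an image as nested lists of intensities, are assumptions made here and do not come from the patent text.

```python
from dataclasses import dataclass
from typing import List

# Assumed representation: a grayscale image as rows of pixel intensities.
Image = List[List[int]]


@dataclass(frozen=True)
class ImageSet:
    """One frame from the binocular camera: two images taken at the same moment."""
    timestamp: float  # hypothetical field: capture time shared by both images
    first: Image      # image captured by the first camera
    second: Image     # image captured by the second camera


def current_frame(video_sequence: List[ImageSet]) -> ImageSet:
    """Return the most recent image set, assuming the sequence is time-ordered."""
    if not video_sequence:
        raise ValueError("video sequence is empty")
    return video_sequence[-1]


# A video sequence is the collection of image sets captured over a period of time.
video_sequence = [
    ImageSet(0.00, [[0, 1], [2, 3]], [[0, 1], [2, 3]]),
    ImageSet(0.04, [[4, 5], [6, 7]], [[4, 5], [6, 7]]),
]
frame = current_frame(video_sequence)
```

Keeping both images in one record makes the "captured at the same moment" constraint explicit, since a frame can never hold a first image without its paired second image.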

[0152] 202: Extract feature points from the first image and from the second image in the image set of the current frame, wherein the number of feature points of the first image is equal to the number of feature points of the second image.

[0153] The feature points usually refer to the poin...
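Step 202 requires the same number of feature points from both images. The excerpt does not show which detector the patent uses or how the equal count is enforced, so the sketch below makes two assumptions: a toy corner score (the smaller of the horizontal and vertical intensity differences) stands in for a real detector, and the equal count is obtained by keeping the `n` strongest responses in each image. All function names are hypothetical.

```python
from typing import List, Tuple

Image = List[List[int]]   # assumed: grayscale image as rows of intensities
Point = Tuple[int, int]   # (x, y) pixel coordinates


def corner_score(img: Image, x: int, y: int) -> int:
    """Toy corner response: high only where intensity changes in both directions."""
    dx = abs(img[y][x + 1] - img[y][x - 1])
    dy = abs(img[y + 1][x] - img[y - 1][x])
    return min(dx, dy)


def extract_feature_points(img: Image, n: int) -> List[Point]:
    """Return up to n interior pixels with the strongest corner score."""
    h, w = len(img), len(img[0])
    scored = [
        (corner_score(img, x, y), x, y)
        for y in range(1, h - 1)
        for x in range(1, w - 1)
    ]
    # Strongest first; ties broken by row, then column, for determinism.
    scored.sort(key=lambda s: (-s[0], s[2], s[1]))
    return [(x, y) for _, x, y in scored[:n]]


def extract_pair(first: Image, second: Image, n: int) -> Tuple[List[Point], List[Point]]:
    """Extract the same number of feature points from both images."""
    def interior(img: Image) -> int:
        return max(len(img) - 2, 0) * max(len(img[0]) - 2, 0)

    # Cap n so neither image can return fewer points than the other.
    n = min(n, interior(first), interior(second))
    return extract_feature_points(first, n), extract_feature_points(second, n)


# Two 5x5 test images: a bright block whose corner is shifted by one column.
first = [[100 if x >= 2 and y >= 2 else 0 for x in range(5)] for y in range(5)]
second = [[100 if x >= 1 and y >= 2 else 0 for x in range(5)] for y in range(5)]
pts_first, pts_second = extract_pair(first, second, 3)
```

In practice a detector such as a corner or blob detector from an image-processing library would replace `corner_score`; the point of the sketch is only the equal-count constraint.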

Embodiment 2

[0221] Figure 3 is a flowchart of a camera tracking method provided by an embodiment of the present invention. As shown in Figure 3, the method may include the following steps:

[0222] 301: Acquire a video sequence, wherein the video sequence includes at least two frames of image sets, each image set includes a first image and a second image, and the first image and the second image are images captured at the same moment by the first camera and the second camera of a binocular camera, respectively.

[0223] 302: Acquire the matching feature point set between the first image and the second image in each frame's image set.

[0224] It should be noted that the method of obtaining the matching feature point set between the first image and the second image in each frame's image set is the same as the method used in Embodiment 1 to obtain the matching feature point set between the first image and the second image in the current frame's image set, and will...
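Step 302 applies the same left-right matching to every image set in the video sequence. The matching procedure of Embodiment 1 is not visible in this excerpt, so the sketch below substitutes a common stand-in, mutual nearest-neighbour matching on feature descriptors; the descriptor representation and all function names are assumptions for illustration.

```python
from typing import List, Sequence, Tuple

Descriptor = Tuple[float, ...]  # assumed: one numeric vector per feature point
Match = Tuple[int, int]         # (index in first image, index in second image)


def sq_dist(a: Descriptor, b: Descriptor) -> float:
    """Squared Euclidean distance between two descriptors."""
    return sum((p - q) ** 2 for p, q in zip(a, b))


def nearest(query: Descriptor, candidates: Sequence[Descriptor]) -> int:
    """Index of the candidate closest to the query descriptor."""
    return min(range(len(candidates)), key=lambda j: sq_dist(query, candidates[j]))


def match_features(first: Sequence[Descriptor], second: Sequence[Descriptor]) -> List[Match]:
    """Keep pairs that are each other's nearest neighbour (mutual check)."""
    if not first or not second:
        return []
    matches = []
    for i, desc in enumerate(first):
        j = nearest(desc, second)
        if nearest(second[j], first) == i:
            matches.append((i, j))
    return matches


def match_video_sequence(
    frames: Sequence[Tuple[Sequence[Descriptor], Sequence[Descriptor]]]
) -> List[List[Match]]:
    """Apply the same left-right matching to every frame's image set."""
    return [match_features(first, second) for first, second in frames]


# Two frames, each holding (first-image descriptors, second-image descriptors).
frames = [
    ([(0.0, 0.0), (10.0, 10.0)], [(10.0, 11.0), (0.0, 1.0)]),
    ([(5.0, 5.0)], [(5.0, 6.0), (50.0, 50.0)]),
]
matched_sets = match_video_sequence(frames)
```

The mutual check discards one-sided matches, which is one simple way to reduce false correspondences before any pose estimation is attempted.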

Embodiment 3

[0233] Figure 4 is a structural diagram of a camera tracking device 40 provided by an embodiment of the present invention. As shown in Figure 4, the device includes:

[0234] First acquisition module 401: configured to acquire the image set of the current frame, wherein the image set includes a first image and a second image, and the first image and the second image are images captured at the same moment by the first camera and the second camera of the binocular camera, respectively.

[0235] The image set of the current frame belongs to the video sequence captured by the binocular camera; the video sequence is the collection of image sets captured by the binocular camera over a period of time.

[0236] Extraction module 402: configured to extract feature points from the first image and the second image, respectively, in the image set of the current frame acquired by the first acquisition module 401; wherein, the number of feature points of the first image and the number of f...
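The two modules described so far can be sketched as composable classes, with the extraction module consuming what the acquisition module produces. Only modules 401 and 402 appear in this excerpt, so the sketch covers just those; the class names, method names, and the injected `source` and `detector` callables are hypothetical and are not the patent's interfaces.

```python
from typing import Callable, List, Tuple

Image = List[List[int]]          # assumed image representation
Point = Tuple[int, int]
ImageSet = Tuple[Image, Image]   # (first image, second image)


class FirstAcquisitionModule:
    """Sketch of module 401: acquires the image set of the current frame."""

    def __init__(self, source: Callable[[], ImageSet]) -> None:
        self._source = source  # hypothetical callable wrapping the binocular camera

    def acquire(self) -> ImageSet:
        return self._source()


class ExtractionModule:
    """Sketch of module 402: extracts feature points from both images."""

    def __init__(self, detector: Callable[[Image], List[Point]]) -> None:
        self._detector = detector  # hypothetical feature detector

    def extract(self, image_set: ImageSet) -> Tuple[List[Point], List[Point]]:
        first, second = image_set
        return self._detector(first), self._detector(second)


class CameraTrackingDevice:
    """Sketch of device 40, wiring module 401 into module 402."""

    def __init__(self, acquisition: FirstAcquisitionModule, extraction: ExtractionModule) -> None:
        self.acquisition = acquisition
        self.extraction = extraction

    def process_current_frame(self) -> Tuple[List[Point], List[Point]]:
        return self.extraction.extract(self.acquisition.acquire())


# Stub camera and detector, used only to exercise the wiring.
def stub_source() -> ImageSet:
    return ([[1, 2], [3, 4]], [[5, 6], [7, 8]])


def stub_detector(img: Image) -> List[Point]:
    return [(0, 0)]  # pretend every image has one feature at the origin


device = CameraTrackingDevice(FirstAcquisitionModule(stub_source), ExtractionModule(stub_detector))
first_points, second_points = device.process_current_frame()
```

Injecting the camera source and detector as callables keeps the module boundaries testable without real hardware.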
