Human face feature extraction and classification method

A face feature extraction and classification method in the field of image processing. It addresses the problem of face recognition falling short of the expected performance, and its effects include increased speed, improved classification accuracy, and strongly discriminative features.

Detailed Description of the Embodiments

[0021] The implementation process of the present invention will be described in detail below.

[0022] The present invention provides a face feature extraction and classification method comprising the following steps:

[0023] (1) Read in face images: read in standard face images from the face training database;

[0024] (2) Use the 2D-PCA method to reduce the feature dimension of each face image read in, that is, map the high-dimensional image matrix onto the 2D-PCA projection subspace to obtain a low-dimensional image matrix;

[0025] (3) Convert the low-dimensional image matrix obtained by dimensionality reduction in step (2) into a one-dimensional column vector;

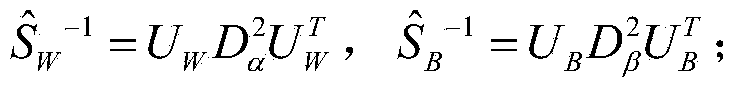

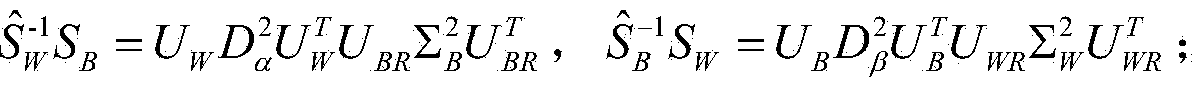

[0026] (4) From the one-dimensional column vectors obtained in step (3), compute the within-class scatter matrix $S_W$ and the between-class scatter matrix $S_B$ of the training set, and perform eigenvalue decomposition on $S_W$ and $S_B$ respectively, that is, $S_W$ and $S_B$ are represented by their ...
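Under the usual textbook definitions (an assumption, since the original's own formulas are not legible here), steps (2) to (4) can be written as follows for $M$ training images $A_j \in \mathbb{R}^{m \times n}$ with mean image $\bar{A}$, $C$ classes, class sizes $N_c$, class means $\mu_c$ and global mean $\mu$ of the vectorized features. The number of retained projection axes $d$ is a free parameter not specified in this excerpt.

$$
\begin{aligned}
G_t &= \frac{1}{M}\sum_{j=1}^{M} (A_j - \bar{A})^{\mathsf T}(A_j - \bar{A}) \in \mathbb{R}^{n \times n}, \\
X &= [x_1, \dots, x_d], \quad x_k = \text{eigenvector of } G_t \text{ with the } k\text{-th largest eigenvalue}, \\
Y_j &= A_j X \in \mathbb{R}^{m \times d}, \qquad z_j = \operatorname{vec}(Y_j) \in \mathbb{R}^{md}, \\
S_W &= \sum_{c=1}^{C} \sum_{z_j \in \text{class } c} (z_j - \mu_c)(z_j - \mu_c)^{\mathsf T}, \\
S_B &= \sum_{c=1}^{C} N_c\, (\mu_c - \mu)(\mu_c - \mu)^{\mathsf T}, \\
S_W &= U_W \Lambda_W U_W^{\mathsf T}, \qquad S_B = U_B \Lambda_B U_B^{\mathsf T}.
\end{aligned}
$$

With these definitions $S_W + S_B$ equals the total scatter $\sum_j (z_j - \mu)(z_j - \mu)^{\mathsf T}$, and both matrices are symmetric, so their eigenvectors can be chosen orthonormal.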
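A minimal NumPy sketch of steps (2) to (4) follows, assuming the standard 2D-PCA image scatter matrix and the common Fisher-style definitions of $S_W$ (unnormalized) and $S_B$ (weighted by class size). The excerpt is truncated, so the normalization constants, the choice of `d`, and what is done with the eigendecompositions afterward are assumptions. The function names `fit_2dpca`, `project_and_vectorize`, `scatter_matrices` and `eig_decompose`, and the random toy data, are hypothetical illustrations rather than the patent's implementation.

```python
import numpy as np


def fit_2dpca(images: np.ndarray, d: int) -> np.ndarray:
    """Step (2), 2D-PCA: return an n x d projection matrix for m x n images."""
    A = np.asarray(images, dtype=float)          # (M, m, n) stack of face images
    centered = A - A.mean(axis=0)                # subtract the mean image
    # Image scatter matrix G_t = (1/M) * sum_j (A_j - mean)^T (A_j - mean), shape (n, n)
    G = np.einsum("kij,kil->jl", centered, centered) / A.shape[0]
    eigvals, eigvecs = np.linalg.eigh(G)         # G is symmetric; eigenvalues come back ascending
    top = np.argsort(eigvals)[::-1][:d]          # indices of the d largest eigenvalues
    return eigvecs[:, top]                       # projection subspace X, shape (n, d)


def project_and_vectorize(images: np.ndarray, X: np.ndarray) -> np.ndarray:
    """Steps (2)-(3): Y_j = A_j X (m x d), then flatten each Y_j into one vector."""
    Y = np.asarray(images, dtype=float) @ X      # batched matmul: (M, m, n) @ (n, d) -> (M, m, d)
    return Y.reshape(Y.shape[0], -1)             # (M, m*d); row j holds sample j's feature vector


def scatter_matrices(vectors: np.ndarray, labels: np.ndarray):
    """Step (4): within-class S_W and between-class S_B of the training set.

    Assumption: S_W is an unnormalized sum and S_B is weighted by class size N_c.
    """
    Z = np.asarray(vectors, dtype=float)
    labels = np.asarray(labels)
    mu = Z.mean(axis=0)                          # global mean vector
    dim = Z.shape[1]
    S_W = np.zeros((dim, dim))
    S_B = np.zeros((dim, dim))
    for c in np.unique(labels):
        Zc = Z[labels == c]
        mu_c = Zc.mean(axis=0)                   # class mean vector
        diff = Zc - mu_c
        S_W += diff.T @ diff                     # sum of (z - mu_c)(z - mu_c)^T over the class
        gap = (mu_c - mu)[:, None]
        S_B += len(Zc) * (gap @ gap.T)           # N_c (mu_c - mu)(mu_c - mu)^T
    return S_W, S_B


def eig_decompose(S: np.ndarray):
    """Eigenvalue decomposition S = V diag(w) V^T of a symmetric scatter matrix."""
    w, V = np.linalg.eigh(S)
    return w, V


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical toy data: 3 subjects x 4 images each, 8 x 6 pixels (stand-in for a face database)
    labels = np.repeat(np.arange(3), 4)
    images = rng.normal(size=(12, 8, 6)) + labels[:, None, None]
    X = fit_2dpca(images, d=2)
    Z = project_and_vectorize(images, X)
    S_W, S_B = scatter_matrices(Z, labels)
    w_W, V_W = eig_decompose(S_W)
    w_B, V_B = eig_decompose(S_B)
    print(Z.shape, S_W.shape, S_B.shape)         # (12, 16) (16, 16) (16, 16)
```

`np.linalg.eigh` is used rather than `np.linalg.eig` because every matrix decomposed here is symmetric, which guarantees real eigenvalues and orthonormal eigenvectors. In general $S_W$ has rank at most $N - C$ ($N$ samples, $C$ classes), so it is singular whenever the vector length $md$ exceeds that; shrinking the vectors with 2D-PCA first is one common way to ease this.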
