Image retrieval method

An image retrieval technique, applied in the field of extended query retrieval, that addresses problems affecting retrieval efficiency and thereby improves retrieval efficiency.


Embodiment Construction

[0027] The technical scheme of the present embodiment is as follows:

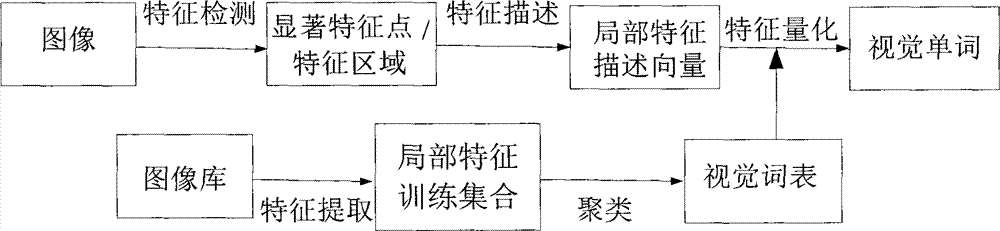

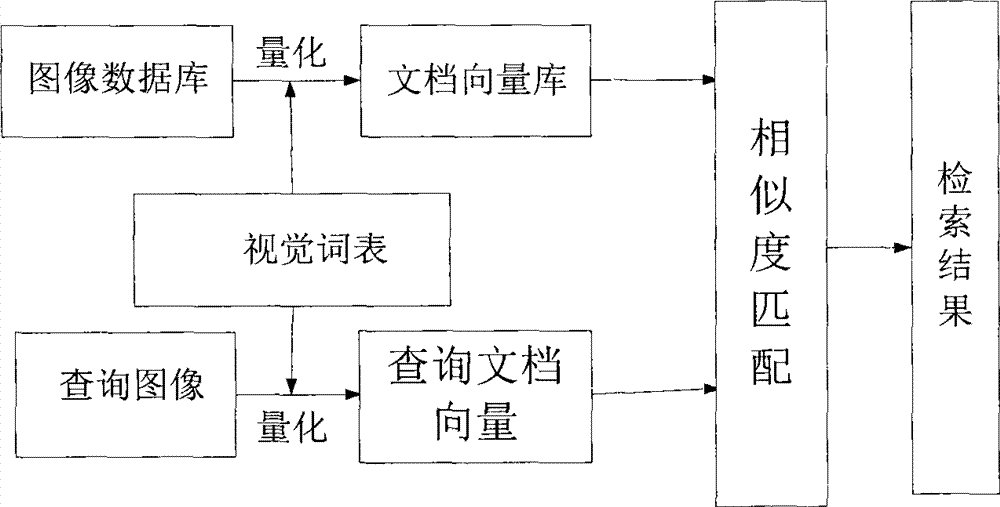

[0028] First, use the bag-of-visual-words model to transform the image into a set of visual words, as shown in Figure 1. The specific transformation process is as follows: perform feature detection on the image to obtain salient feature points or salient regions, then perform feature description to obtain local feature description vectors; perform feature extraction and sampling on the images in the entire image library to obtain a set of local features that serves as the feature training set. K-means clustering is performed on the feature training set; each cluster center is regarded as a "visual word", and all cluster centers together form a "visual vocabulary". The local feature set extracted from a single image is then quantized into a set of visual words. During quantization, each local feature description vector is compared with the feature vectors represented by all visual words in the visual voc...
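
The vocabulary construction and quantization steps described in [0028] can be illustrated with a minimal sketch. It is not the patent's implementation: the function names (`build_vocabulary`, `quantize`, `word_histogram`), the plain Lloyd's k-means loop, and the choice of assigning each descriptor to its nearest visual word by Euclidean distance are all assumptions made for illustration, since the source text is cut off before it states the comparison rule. Local descriptors are assumed to be already extracted as rows of a NumPy array.

```python
import numpy as np


def build_vocabulary(features, k, iters=20, seed=0):
    """Cluster training descriptors with plain Lloyd's k-means.

    features: (n, d) array of local feature description vectors.
    Returns a (k, d) array of cluster centers, i.e. the "visual vocabulary".
    """
    rng = np.random.default_rng(seed)
    # Initialise centers from k distinct training descriptors (an assumption).
    start = rng.choice(len(features), size=k, replace=False)
    centers = features[start].astype(float)
    for _ in range(iters):
        # Squared Euclidean distance from every descriptor to every center.
        d2 = ((features[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)
        for j in range(k):
            members = features[labels == j]
            if len(members):  # leave an empty cluster's center unchanged
                centers[j] = members.mean(axis=0)
    return centers


def quantize(descriptors, vocabulary):
    """Map each descriptor to the index of its nearest visual word.

    Nearest-by-Euclidean-distance is an assumed comparison rule.
    """
    d2 = ((descriptors[:, None, :] - vocabulary[None, :, :]) ** 2).sum(axis=2)
    return d2.argmin(axis=1)


def word_histogram(word_ids, k):
    """Count how often each of the k visual words occurs in one image."""
    return np.bincount(word_ids, minlength=k)


if __name__ == "__main__":
    # Toy two-word vocabulary and three 2-D descriptors from one image.
    vocab = np.array([[0.0, 0.0], [10.0, 10.0]])
    image_descriptors = np.array([[1.0, 0.0], [9.0, 9.0], [0.0, 1.0]])
    ids = quantize(image_descriptors, vocab)
    print(ids.tolist())                     # [0, 1, 0]
    print(word_histogram(ids, 2).tolist())  # [2, 1]
```

In practice the vocabulary would be built once from descriptors sampled across the whole image library, and `quantize` would then be applied to the descriptors of each individual image. A library k-means implementation could replace the hand-written loop for large training sets.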
