Stereo matching method based on joint similarity measure and adaptive support weighting

A similarity-measurement and stereo-matching technique, applied in image data processing, instrumentation, and computing. It addresses mismatching and the difficulty of distinguishing a pixel from its neighbors. It reduces the mismatching rate, is simple to implement and easy to operate, and improves matching accuracy.
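This excerpt does not give the patent's joint similarity measure or its weighting function. The sketch below is a rough illustration of adaptive support weighting in general, not the patented method. It uses the widely used adaptive support-weight aggregation of Yoon and Kweon. Each neighbor q of pixel p is weighted by exp(-(|I(p) - I(q)| / gamma_c + ||p - q|| / gamma_p)) in both images. The weighted average of a per-pixel cost is then taken, and the disparity with the lowest aggregated cost wins.

A truncated absolute difference stands in for the patent's joint measure. The function names and the parameters `radius`, `gamma_c`, `gamma_p`, and `trunc` are hypothetical choices. Images are grayscale lists of rows.

```python
import math

def support_weight(img, p, q, gamma_c, gamma_p):
    """Yoon-Kweon style weight: neighbors similar in intensity and close in space count more."""
    (py, px), (qy, qx) = p, q
    color_diff = abs(img[py][px] - img[qy][qx])
    spatial_dist = math.hypot(py - qy, px - qx)
    return math.exp(-(color_diff / gamma_c + spatial_dist / gamma_p))

def aggregated_cost(left, right, y, x, d, radius=2, gamma_c=10.0, gamma_p=7.0, trunc=40.0):
    """Weighted mean cost of matching left (y, x) with right (y, x - d) over a square window.

    The per-pixel cost is a truncated absolute difference, a hypothetical stand-in
    for the patent's joint similarity measure.
    """
    h, w = len(left), len(left[0])
    num, den = 0.0, 0.0
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            qy, qx = y + dy, x + dx
            # Skip support pixels that fall outside either image.
            if not (0 <= qy < h and 0 <= qx < w and 0 <= qx - d < w):
                continue
            wl = support_weight(left, (y, x), (qy, qx), gamma_c, gamma_p)
            wr = support_weight(right, (y, x - d), (qy, qx - d), gamma_c, gamma_p)
            raw = min(abs(left[qy][qx] - right[qy][qx - d]), trunc)
            num += wl * wr * raw
            den += wl * wr
    # The center pixel always contributes weight 1, so den > 0 whenever x - d is valid.
    return num / den if den > 0 else float("inf")

def best_disparity(left, right, y, x, max_disp, **kw):
    """Winner-take-all: the disparity in [0, min(max_disp, x)] with the lowest aggregated cost."""
    costs = [aggregated_cost(left, right, y, x, d, **kw) for d in range(min(max_disp, x) + 1)]
    return min(range(len(costs)), key=costs.__getitem__)
```

The two weights suppress neighbors that probably lie on a different surface than the center pixel. This is the mechanism such methods use to keep a pixel distinguishable from its neighbors near depth edges.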

Embodiment Construction

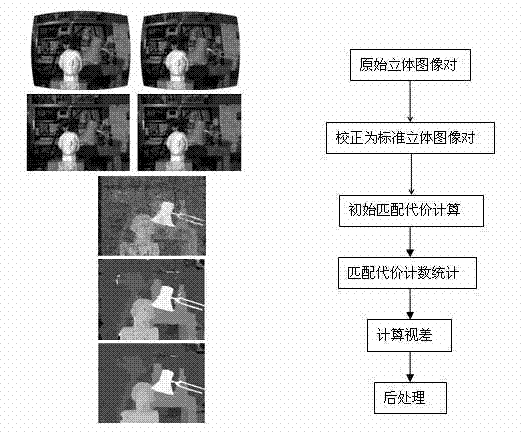

[0032] The present invention will be further described below in conjunction with the accompanying drawings.

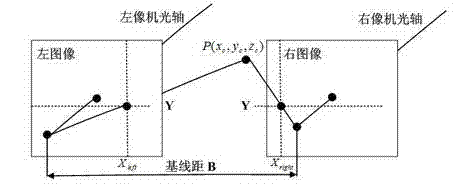

[0033] figure 1 It is a schematic diagram of binocular stereoscopic imaging with a horizontal parallel configuration, where the baseline distance B is the distance between the projection centers of the two cameras, and the focal lengths of the two cameras are both f. Suppose two cameras watch the same object point of the space object at the same time , obtained images of point P on the “left eye” and “right eye” respectively, and their image coordinates are respectively , . Now that the images of the two cameras are on the same plane, the image coordinate Y coordinates of the object point P are the same, that is .

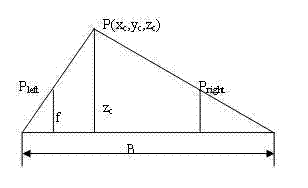

[0034] figure 1 The schematic in simplifies to figure 2 The schematic diagram in is obtained by the similar triangle geometric relation:

[0035]

[0036] Let the disparity be: , from which the three-dimensional position of the space point...
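The sketch below illustrates the parallel-camera geometry under the following assumptions:

- The left camera's projection center is the origin.
- The right camera is shifted by the baseline B along the x axis.
- Image coordinates use the same units as f (for example, pixels).

Under these assumptions, a point projects to X_left = f x/z, X_right = f (x - B)/z, and Y = f y/z. Eliminating terms with the disparity D = X_left - X_right = fB/z gives z = Bf/D, x = B X_left/D, and y = B Y/D. The function names `project` and `triangulate` are illustrative and do not come from the patent.

```python
def project(x, y, z, baseline, focal):
    """Image coordinates (X_left, X_right, Y) of point (x, y, z) in a horizontally parallel pair."""
    if z <= 0:
        raise ValueError("point must lie in front of the cameras (z > 0)")
    x_left = focal * x / z
    x_right = focal * (x - baseline) / z  # right projection center is shifted by the baseline
    y_img = focal * y / z                 # same image row in both views
    return x_left, x_right, y_img

def triangulate(x_left, x_right, y_img, baseline, focal):
    """Recover (x, y, z) in the left camera frame from a matched pair via its disparity."""
    disparity = x_left - x_right
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of both cameras")
    z = baseline * focal / disparity
    x = baseline * x_left / disparity
    y = baseline * y_img / disparity
    return x, y, z

print(triangulate(25.0, 12.5, 12.5, baseline=0.5, focal=100.0))  # (1.0, 0.5, 4.0)
```

Because z is inversely proportional to D, a fixed one-pixel matching error shifts the recovered depth by roughly z²/(Bf). Distant points (small D) are therefore far more sensitive to mismatches than near ones.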
