Blind autonomous navigation method based on stereoscopic vision and information fusion

A stereo vision and autonomous navigation technology applied in the field of navigation systems. It addresses problems such as the difficulty of separating main objects, the lack of an autonomous navigation algorithm, and an increased rate of obstacle misjudgment. It improves the user's autonomy and ability to walk independently, enhances performance, and has clear application prospects.


Embodiment Construction

[0019] The present invention is now described in further detail with reference to the accompanying drawings and preferred embodiments. The drawings are simplified schematic diagrams that illustrate only the basic structure of the present invention, and therefore show only the configurations relevant to it.

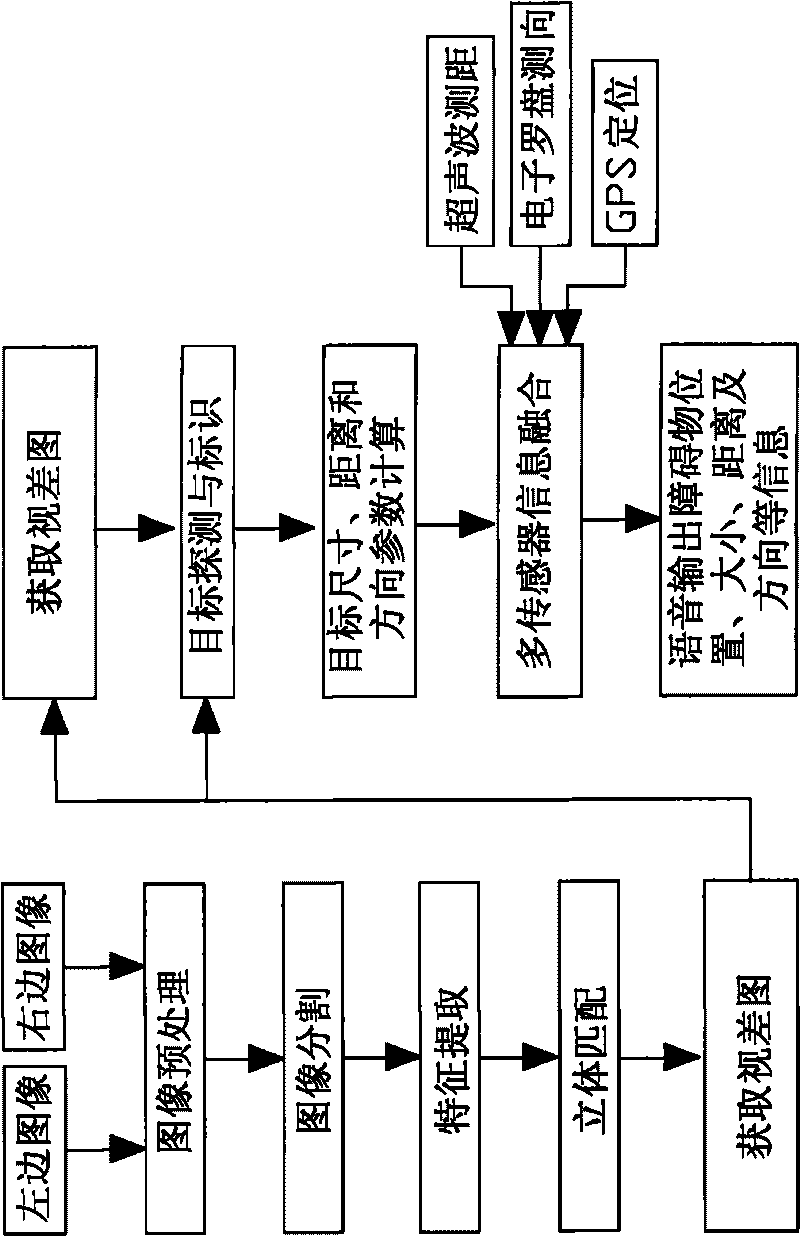

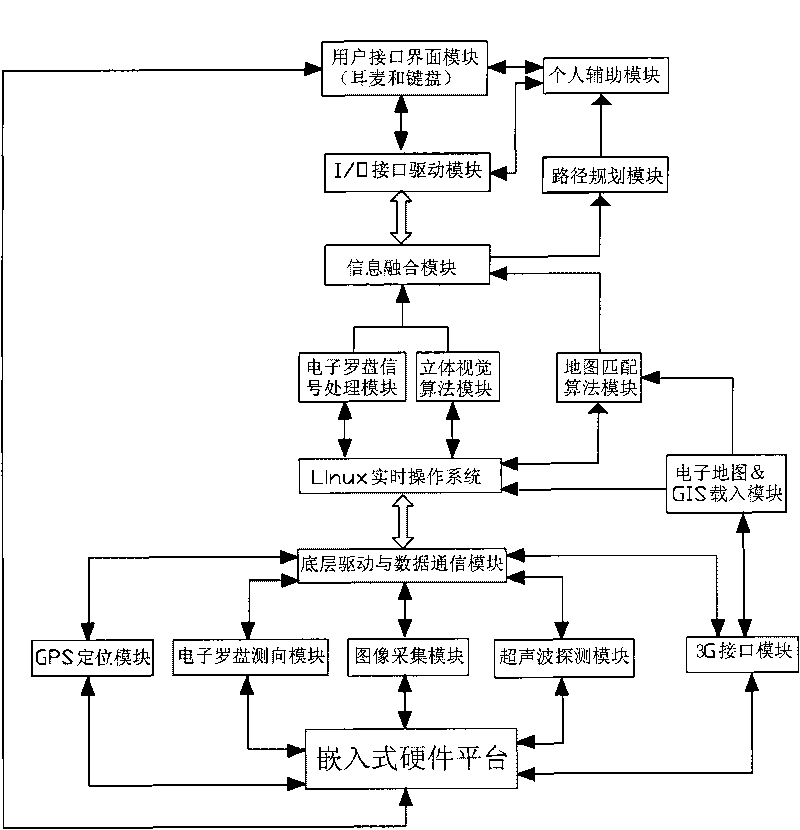

[0020] Figure 2 is a schematic diagram of the system software of the present invention. The system software is built on an embedded hardware platform running a Linux real-time operating system and is divided into four main parts: multi-sensor data acquisition; data communication, transmission and storage; information processing and fusion; and information display and user interface. The navigation algorithm centers on stereo vision and multi-sensor information fusion. Its flow chart is shown in Figure 1: the outputs of the left image and the right image are mainly co...
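The excerpt is truncated before the algorithm details, so the following is only a minimal sketch of how a stereo range estimate and a second sensor's range estimate could be combined, not the patented method. It assumes a rectified left/right image pair with disparity already computed per point, and uses the standard pinhole relation Z = f·B/d. The second sensor (for example an ultrasonic rangefinder), the inverse-variance weighting used as the fusion rule, the variances, and the 1.5 m warning threshold are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class RangeReading:
    """A distance estimate in metres together with its variance in m^2."""
    distance: float
    variance: float


def stereo_depth(disparity_px: float, focal_px: float, baseline_m: float) -> Optional[float]:
    """Depth of a point seen in a rectified stereo pair: Z = f * B / d.

    Returns None for non-positive disparity (no valid match, or a point at infinity).
    """
    if disparity_px <= 0:
        return None
    return focal_px * baseline_m / disparity_px


def fuse(readings: Sequence[RangeReading]) -> RangeReading:
    """Inverse-variance weighted fusion of independent distance estimates.

    Assumed fusion rule for illustration; the source does not specify one.
    """
    if not readings:
        raise ValueError("need at least one reading")
    weights = [1.0 / r.variance for r in readings]
    total = sum(weights)
    distance = sum(w * r.distance for w, r in zip(weights, readings)) / total
    # The fused variance is never larger than the smallest input variance.
    return RangeReading(distance=distance, variance=1.0 / total)


def obstacle_alert(fused: RangeReading, threshold_m: float = 1.5) -> bool:
    """Hypothetical rule: warn the user when the fused distance is below a threshold."""
    return fused.distance < threshold_m


if __name__ == "__main__":
    # Hypothetical camera: 700 px focal length, 0.12 m baseline, 84 px disparity.
    z = stereo_depth(84, 700, 0.12)
    vision = RangeReading(distance=z, variance=0.04)     # assumed stereo noise
    ultrasonic = RangeReading(distance=1.2, variance=0.01)  # assumed second sensor
    fused = fuse([vision, ultrasonic])
    print(round(z, 3), round(fused.distance, 3), obstacle_alert(fused))
```

With these assumed numbers the stereo estimate is 1.0 m, the fused distance is pulled toward the lower-variance sensor (about 1.16 m), and the alert fires. Weighting by inverse variance is a common choice because it gives the minimum-variance linear estimate for independent, unbiased readings; a real system might instead use a Kalman filter or evidence-based fusion.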

