Method for managing embedded system memory

An embedded system memory management method in the field of embedded system memory management. It addresses allocation failures that occur when the statistical characteristics of requested memory blocks change, and it improves the probability and success rate of memory allocation.


Examples

Embodiment 1

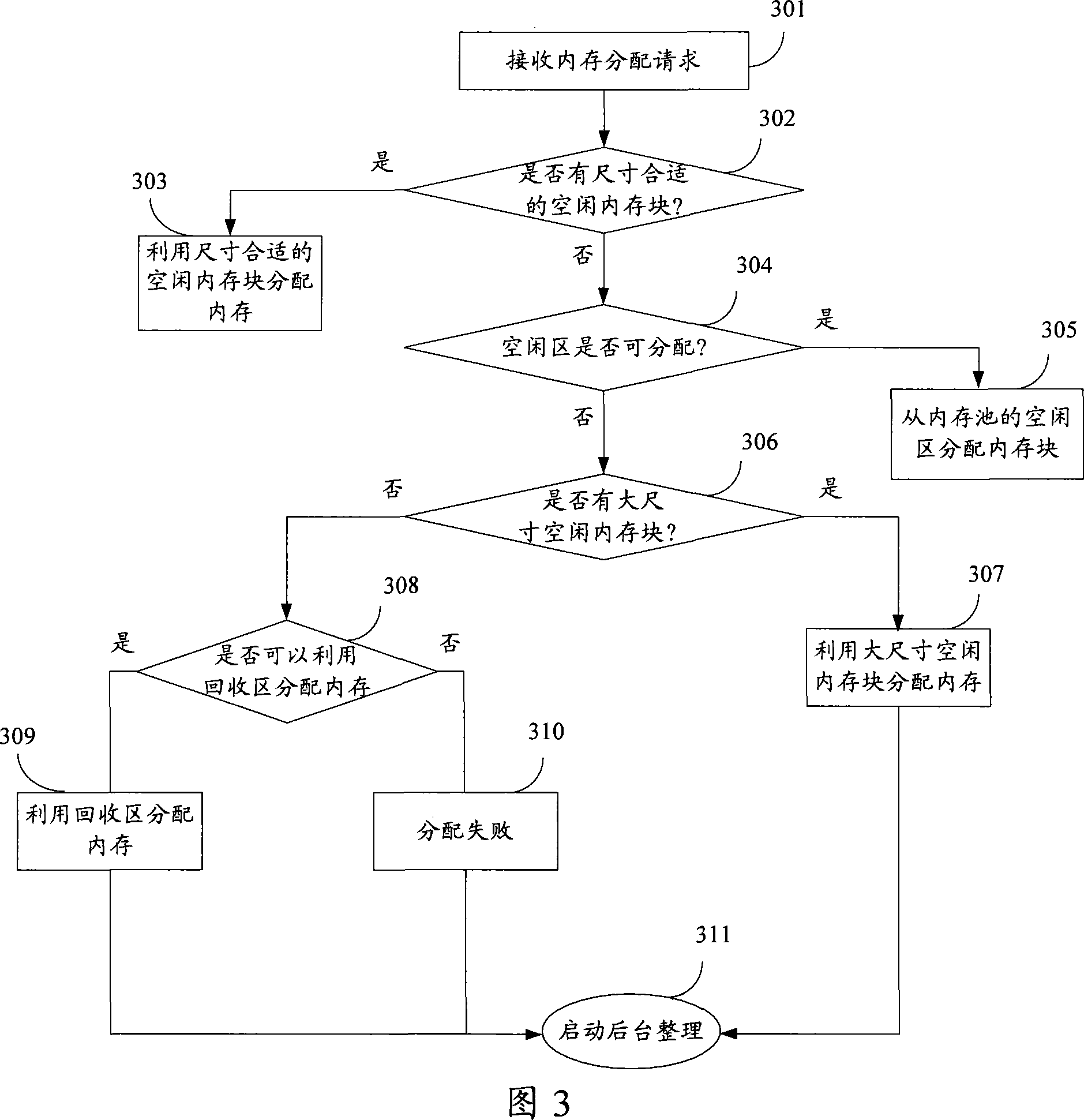

[0035] The invention merges free memory blocks with contiguous addresses, and the resulting reclaimed area can be re-divided in the same way as the free area of the memory pool. In other words, the reclaimed area acts as an effective extension of the free area and increases the probability of successful allocation. When the statistical characteristics of requested memory blocks change, enough reclaimed area is available to meet the new allocation requirements.

[0036] Embodiment 1 comprises the following steps:

[0037] 1. Traverse the memory pool and merge free memory blocks with contiguous addresses into the reclaimed area;

[0038] 2. After receiving a memory allocation request, allocate memory from the reclaimed area.
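The two steps of Embodiment 1 can be sketched in C as follows. This is a minimal illustration under stated assumptions, not the patented implementation: the pool is modeled as an array of hypothetical `block_t` descriptors sorted by address, the reclaimed area is a list of hypothetical `region_t` runs, and allocation from the reclaimed area uses simple first-fit carving, which the source does not specify.

```c
#include <stddef.h>
#include <stdint.h>
#include <stdbool.h>

#define MAX_REGIONS 32
#define RECLAIM_FAIL SIZE_MAX   /* hypothetical "no memory" marker */

/* Hypothetical block descriptor: offset within the pool, size, status. */
typedef struct {
    size_t offset;
    size_t size;
    bool in_use;
} block_t;

/* One run of contiguous free memory produced by merging blocks. */
typedef struct {
    size_t offset;
    size_t size;
} region_t;

typedef struct {
    region_t regions[MAX_REGIONS];
    size_t count;
} reclaimed_area_t;

/* Step 1: walk the blocks (sorted by offset) and merge each run of free
   blocks whose addresses are contiguous into a single reclaimed region. */
size_t reclaim_free_blocks(const block_t *blocks, size_t n, reclaimed_area_t *area)
{
    area->count = 0;
    for (size_t i = 0; i < n; i++) {
        if (blocks[i].in_use)
            continue;                        /* an in-use block breaks the run */
        if (area->count > 0) {
            region_t *last = &area->regions[area->count - 1];
            if (last->offset + last->size == blocks[i].offset) {
                last->size += blocks[i].size;  /* extend the current run */
                continue;
            }
        }
        if (area->count == MAX_REGIONS)
            break;                           /* out of region slots */
        area->regions[area->count].offset = blocks[i].offset;
        area->regions[area->count].size = blocks[i].size;
        area->count++;
    }
    return area->count;
}

/* Step 2: first-fit allocation that carves the request from the front of
   the first reclaimed region large enough to hold it. */
size_t alloc_from_reclaimed(reclaimed_area_t *area, size_t size)
{
    for (size_t i = 0; i < area->count; i++) {
        region_t *r = &area->regions[i];
        if (r->size >= size) {
            size_t off = r->offset;
            r->offset += size;
            r->size -= size;
            return off;
        }
    }
    return RECLAIM_FAIL;
}
```

For example, free blocks at offsets 0 and 32 (32 bytes each) merge into one 64-byte region, so a 48-byte request succeeds even though neither original block could hold it alone.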

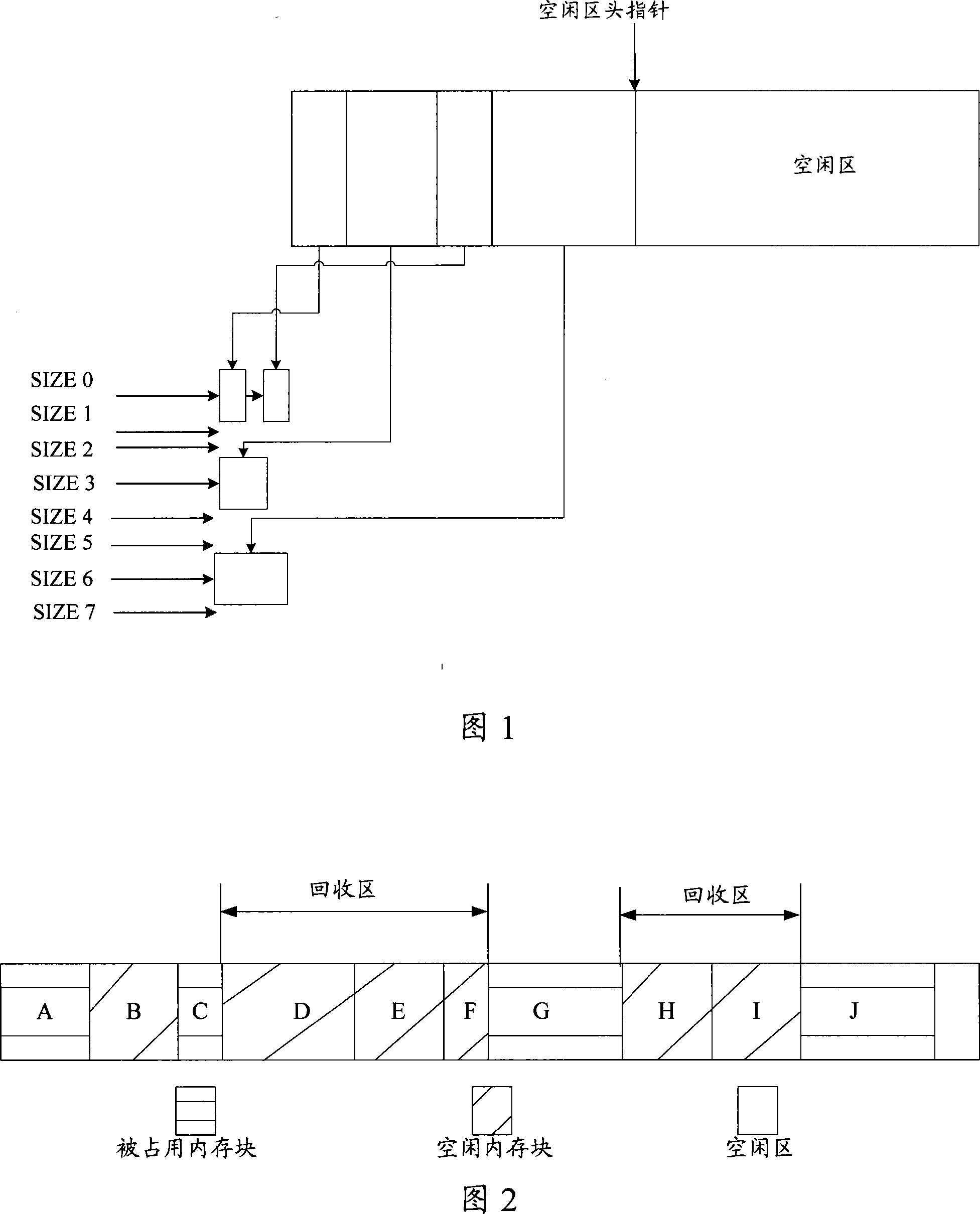

[0039] In the present invention, each divided memory block of a given SIZE has a memory header structure that holds status information. The header includes a status bit that identifies whether the memory block is in use or idle, and also include...
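A memory header with an in-use/idle status bit might look like the sketch below. Only the status bit comes from the text; the field layout, the `size_class` field, the `MH_STATUS_IN_USE` mask, and the assumption that the header sits immediately before the payload are all hypothetical.

```c
#include <stdint.h>
#include <stdbool.h>
#include <stddef.h>

#define MH_STATUS_IN_USE 0x01u   /* hypothetical: bit 0 set = in use, clear = idle */

/* Hypothetical header layout placed in front of every divided block. */
typedef struct {
    uint8_t flags;       /* status bits; bit 0 is the in-use/idle flag */
    uint8_t size_class;  /* hypothetical: index of SIZE0..SIZE7 */
    uint16_t reserved;   /* padding, keeps the header 4 bytes long */
} mem_header_t;

/* Assumes the header directly precedes the payload returned to the caller. */
static inline mem_header_t *header_of(void *payload)
{
    return (mem_header_t *)payload - 1;
}

static inline void *payload_of(mem_header_t *h)
{
    return h + 1;
}

/* Reads the status bit. */
static inline bool block_in_use(const mem_header_t *h)
{
    return (h->flags & MH_STATUS_IN_USE) != 0;
}

/* Sets or clears the status bit without touching the other flags. */
static inline void block_set_in_use(mem_header_t *h, bool in_use)
{
    if (in_use)
        h->flags |= MH_STATUS_IN_USE;
    else
        h->flags &= (uint8_t)~MH_STATUS_IN_USE;
}
```

Keeping the status in a single bit lets a traversal such as the merge step decide whether a block is idle by reading one byte of its header.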

Embodiment 2

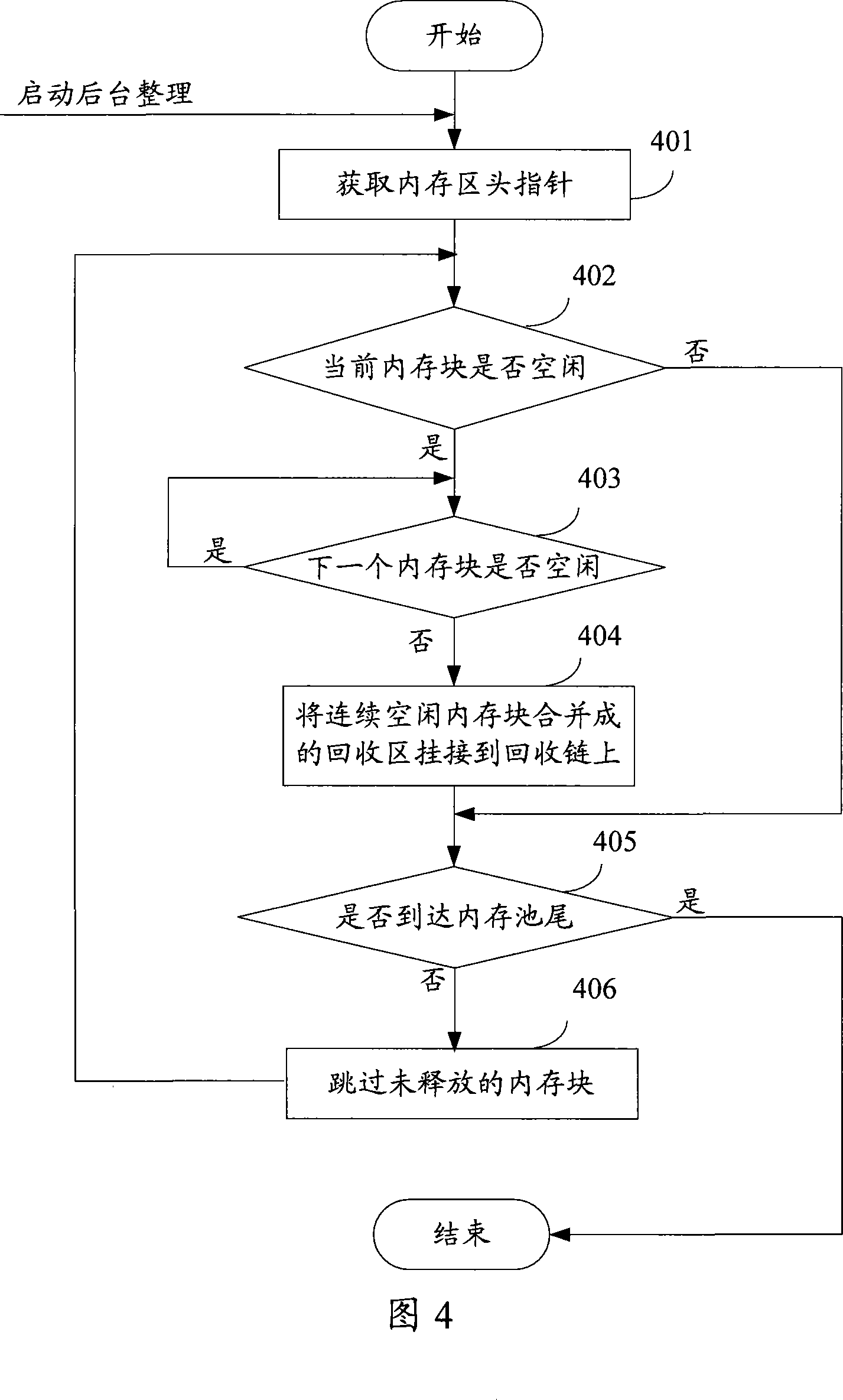

[0051] As described above, when a memory block cannot be allocated from either the free chain or the free area, background sorting can then be started, which preserves the real-time performance of memory allocation. This embodiment elaborates further on this method.
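One way to keep sorting off the allocation path is to have the allocator only record a request and let a low-priority task perform the merge later. The sketch below assumes that mechanism; the source does not say how background sorting is triggered. `request_background_sort`, `background_sort_step`, and `merge_free_blocks` are hypothetical names, and the merge pass is a stub that only counts runs.

```c
#include <stdatomic.h>
#include <stdbool.h>

/* Hypothetical flag shared by the allocator and the background task. */
static atomic_bool sort_requested = false;
static unsigned sort_runs = 0;   /* counts consolidation passes, for illustration */

/* Called on the allocation path when neither the free chain nor the free
   area can satisfy a request. It only records the request and returns at
   once, so the allocation path stays short and predictable. */
void request_background_sort(void)
{
    atomic_store(&sort_requested, true);
}

/* Stub standing in for the traverse-and-merge pass that builds the
   reclaimed area. */
static void merge_free_blocks(void)
{
    sort_runs++;
}

/* One iteration of the background task. In an RTOS this would typically
   run in a low-priority task woken by a semaphore or event; here it is
   polled. Returns true if a consolidation pass was performed. */
bool background_sort_step(void)
{
    if (!atomic_exchange(&sort_requested, false))
        return false;             /* nothing pending */
    merge_free_blocks();
    return true;
}
```

Because the flag is cleared with a single atomic exchange, several failed allocations before the task runs collapse into one consolidation pass.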

[0052] Image 3 is a flowchart of memory allocation in Embodiment 2. It includes the following steps:

[0053] Step 301: Receive a request to allocate a memory block of a given size, for example SIZE6;

[0054] Step 302: Search the free chain. Is there a free memory block of a suitable size (SIZE6)? If yes, execute step 303; otherwise, execute step 304;

[0055] Step 303: Allocate the free memory block of the appropriate size;

[0056] Step 304: Is the free area of the memory pool large enough to allocate a memory block of this size? If yes, execute step 305; otherwise, execute step 306;

[0057] Step 305: Allocate a memory block from the free area of the me...
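The lookup order of the steps above can be sketched as follows. This is an assumption-laden illustration: the byte values for SIZE0 to SIZE7, the fixed-capacity free chains, and the single reclaimed region are hypothetical simplifications. The fallback to the reclaimed area and the final failure are taken from paragraph [0079]; the intermediate step numbers after 305 are not given, so the comments do not assign them.

```c
#include <stddef.h>
#include <stdint.h>
#include <assert.h>

#define NUM_SIZES 8              /* SIZE0..SIZE7 */
#define ALLOC_FAIL SIZE_MAX      /* hypothetical failure marker */

/* Hypothetical byte sizes; the source does not give SIZE values. */
static const size_t size_bytes[NUM_SIZES] = {16, 32, 64, 128, 256, 512, 1024, 2048};

typedef struct {
    size_t free_chain[NUM_SIZES][4];  /* offsets of released blocks, per size */
    size_t chain_len[NUM_SIZES];
    size_t free_area_next;            /* next undivided byte in the free area */
    size_t free_area_end;
    size_t reclaimed_next;            /* one reclaimed region, for brevity */
    size_t reclaimed_end;
} pool_t;

/* Returns the offset of the allocated block, or ALLOC_FAIL. */
size_t pool_alloc(pool_t *p, unsigned size_idx)
{
    assert(size_idx < NUM_SIZES);
    size_t need = size_bytes[size_idx];

    /* Steps 302/303: reuse a free block of exactly this SIZE. */
    if (p->chain_len[size_idx] > 0)
        return p->free_chain[size_idx][--p->chain_len[size_idx]];

    /* Steps 304/305: divide a new block from the free area. */
    if (p->free_area_end - p->free_area_next >= need) {
        size_t off = p->free_area_next;
        p->free_area_next += need;
        return off;
    }

    /* Per [0079]: fall back to the reclaimed area. */
    if (p->reclaimed_end - p->reclaimed_next >= need) {
        size_t off = p->reclaimed_next;
        p->reclaimed_next += need;
        return off;
    }

    return ALLOC_FAIL;  /* all sources exhausted: the allocation fails */
}
```

The cheapest source is tried first: an exact-size block from the free chain needs no division, the free area needs only a pointer bump, and the reclaimed area is used last.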

Embodiment 3

[0077] To further improve the success rate of memory allocation, this embodiment proposes a backup pool.

[0078] Those skilled in the art will understand that embedded real-time operating systems generally configure several memory pools, so one of them can be designated as the backup pool for the others. The backup pool is not used while the other memory pools are allocating normally; only when a memory pool cannot satisfy an allocation request is the backup pool used. The backup pool can be pre-divided into memory blocks of the various sizes preset by the system, which, as described in the background art, are SIZE0-SIZE7.
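The backup-pool fallback can be sketched as below. The pool model (a count of pre-divided free blocks per size class) and the names `mem_pool_t`, `take_block`, and `alloc_with_backup` are hypothetical; the sketch only shows that the backup pool is touched when the normal pool cannot serve the request.

```c
#include <stddef.h>
#include <stdbool.h>
#include <assert.h>

#define NUM_SIZES 8   /* SIZE0..SIZE7 */

/* Hypothetical pool model: free pre-divided blocks per size class. */
typedef struct {
    unsigned free_blocks[NUM_SIZES];
} mem_pool_t;

/* Takes one block of the given size class from a pool, if any is left. */
static bool take_block(mem_pool_t *pool, unsigned size_idx)
{
    if (pool->free_blocks[size_idx] == 0)
        return false;
    pool->free_blocks[size_idx]--;
    return true;
}

/* Tries the requested pool first; the reserved backup pool is used only
   when that pool cannot satisfy the request. Returns the pool that served
   the request, or NULL if neither could. */
mem_pool_t *alloc_with_backup(mem_pool_t *pool, mem_pool_t *backup, unsigned size_idx)
{
    assert(size_idx < NUM_SIZES);
    if (take_block(pool, size_idx))
        return pool;
    if (take_block(backup, size_idx))
        return backup;
    return NULL;
}
```

Since the backup pool is never drawn on during normal allocation, its pre-divided SIZE0-SIZE7 blocks remain available for the moment a regular pool runs out.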

[0079] Referring again to image 3, when memory cannot be allocated from the free area, the free chain, or the reclaimed area, the allocation fails at step 310. Therefore, when step 308 determines that the reclaimed area cannot be used to allocate memory, the backu...
