Execution thread distribution method for multi-core, multiple central processing units

A technique relating to central processing units and execution threads, applied in the field of multiprogramming arrangements. It addresses problems such as time slices that cannot be synchronized, calls that must be made in sequence, and requests from multiple execution threads having to wait, with the effects of improving running speed and efficiency and avoiding resource conflicts.


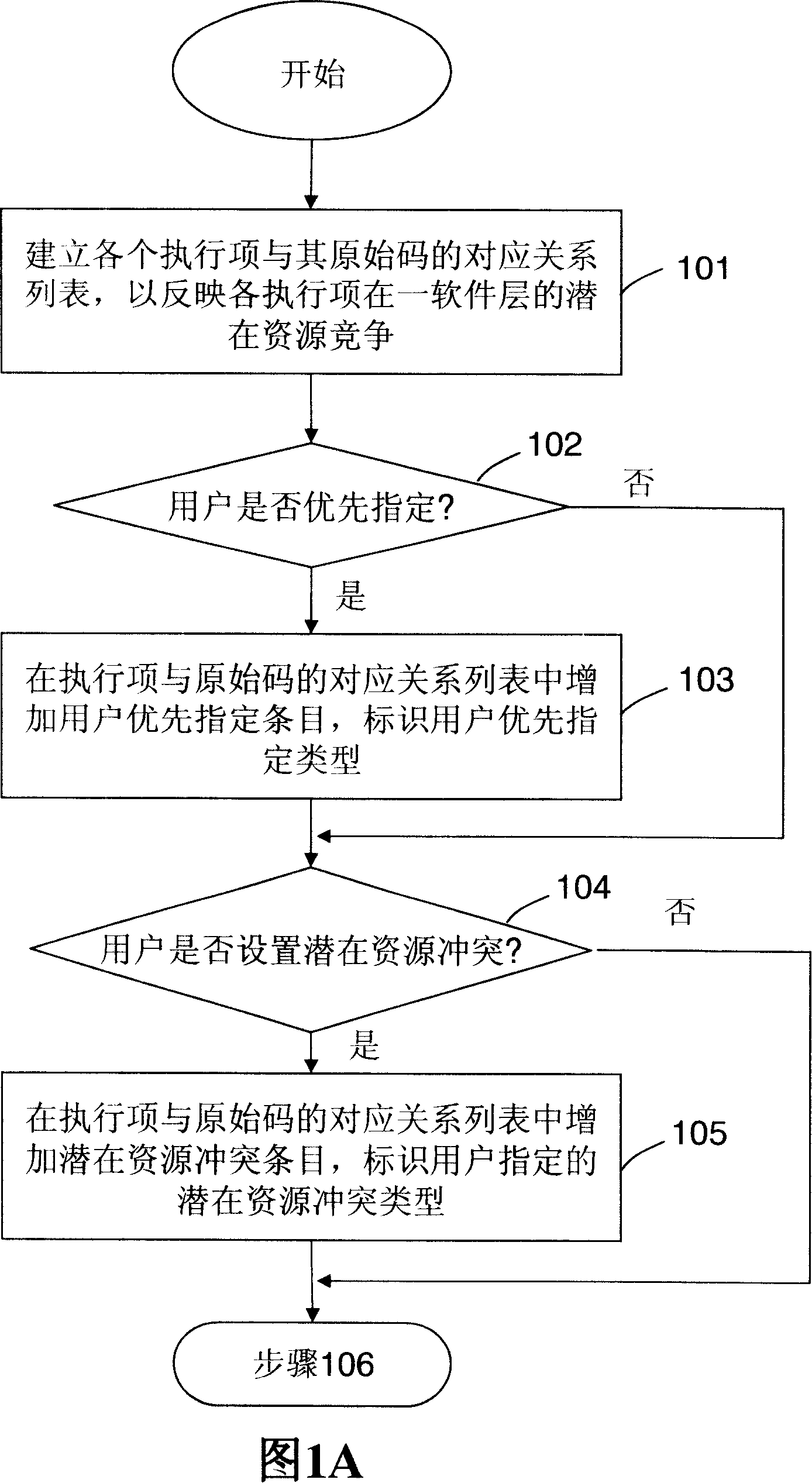


Embodiment Construction

[0043] When multiple execution cores of multiple central processing units need to call upper-level modules, that is, modules of the software layer, the known technology usually makes the calls by scheduling execution threads, so each execution thread can be regarded as assigned to run on a single execution core of a single central processing unit. For example, in the embodiment shown in FIG. 2, a relatively simple approach is to assign the started execution threads, in sequence, to the first execution core 10 of the first central processing unit 1, the second execution core 12 of the first central processing unit 1, the first execution core 14 of the second central processing unit 2, and the second execution core 16 of the second central processing unit 2. However, under such a scheme a dynamic link library (DLL) may be called separately by multiple execution threads, and memory or global variables will be shared between these executi...
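The sequential assignment described for the known technology can be sketched as a simple mapping from started threads to cores. This is only an illustration, not the claimed method: the name `assign_sequentially` is hypothetical, the core labels reuse the reference numerals quoted above for FIG. 2, and wrapping back to the first core after the fourth thread is an assumption, since the text does not say what happens when more than four threads are started.

```python
from itertools import cycle

# (CPU number, execution core numeral), in the order given for FIG. 2.
CORES = [(1, 10), (1, 12), (2, 14), (2, 16)]


def assign_sequentially(thread_ids, cores=CORES):
    """Map each started thread to the next core in order.

    Assumption: once every core has one thread, assignment wraps
    around to the first core again (round-robin).
    """
    return dict(zip(thread_ids, cycle(cores)))


if __name__ == "__main__":
    for tid, (cpu, core) in assign_sequentially(range(6)).items():
        print(f"thread {tid} -> CPU {cpu}, execution core {core}")
```

The mapping looks only at the order in which threads start. It takes no account of which threads call the same DLL or share the same memory, which is the weakness the paragraph goes on to raise.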
