System and Method for Creating an Environment and for Sharing a Location Based Experience in an Environment

A technology for environment creation and for sharing location based experiences in an environment. It addresses the problems of limited realism and interaction, acquisition limited to dedicated vehicles, and many environments simply not being available…

Embodiment Construction

I. Overview

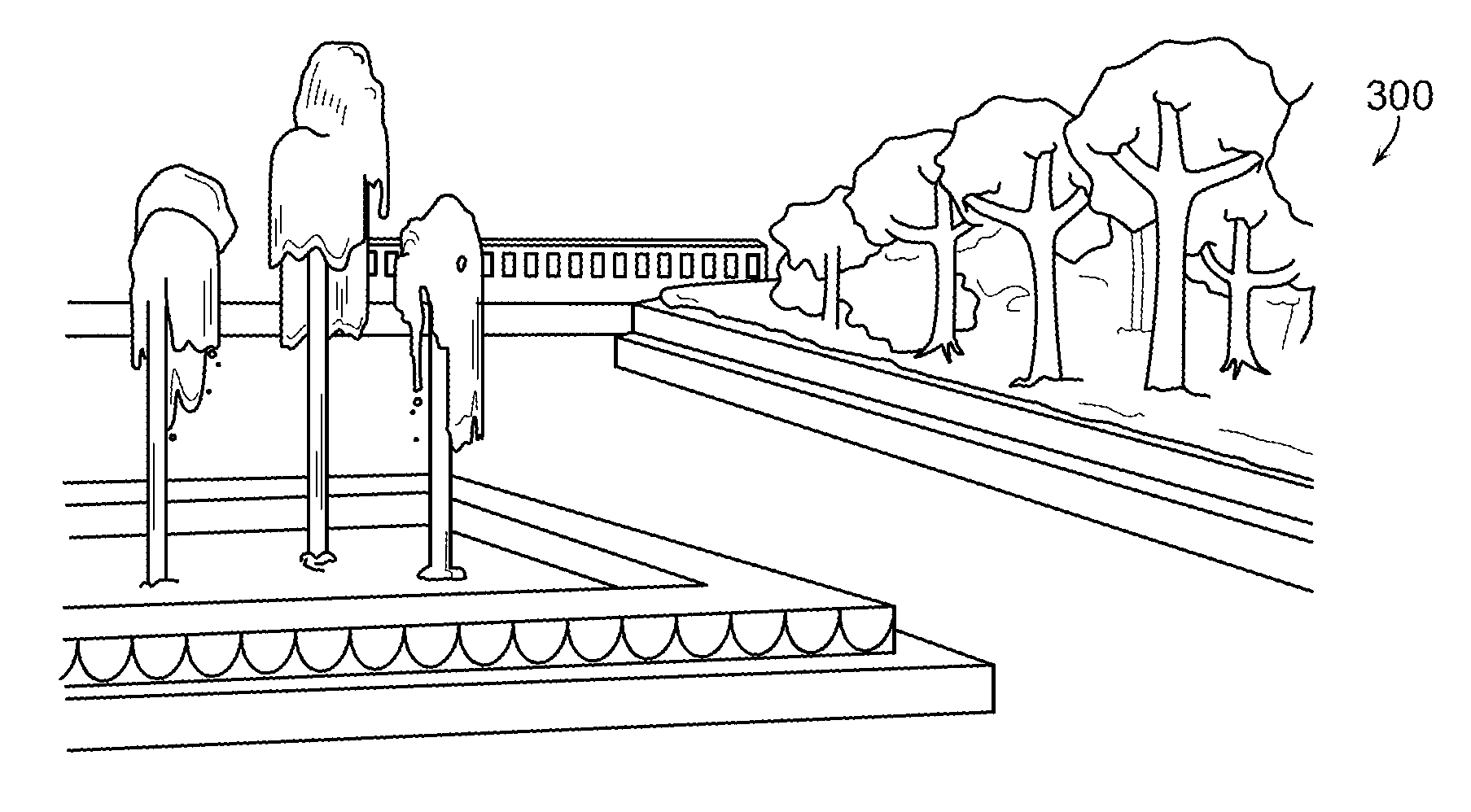

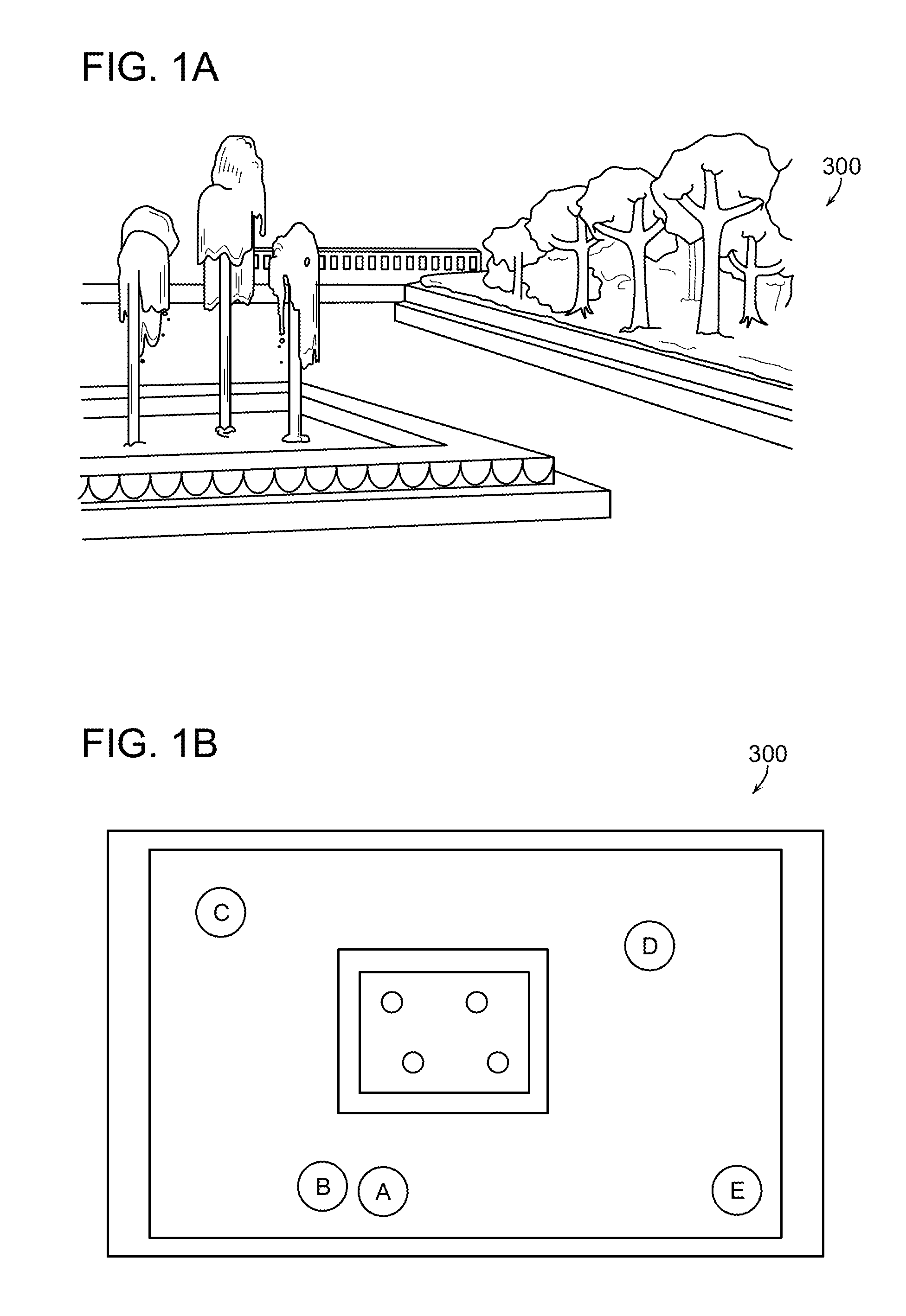

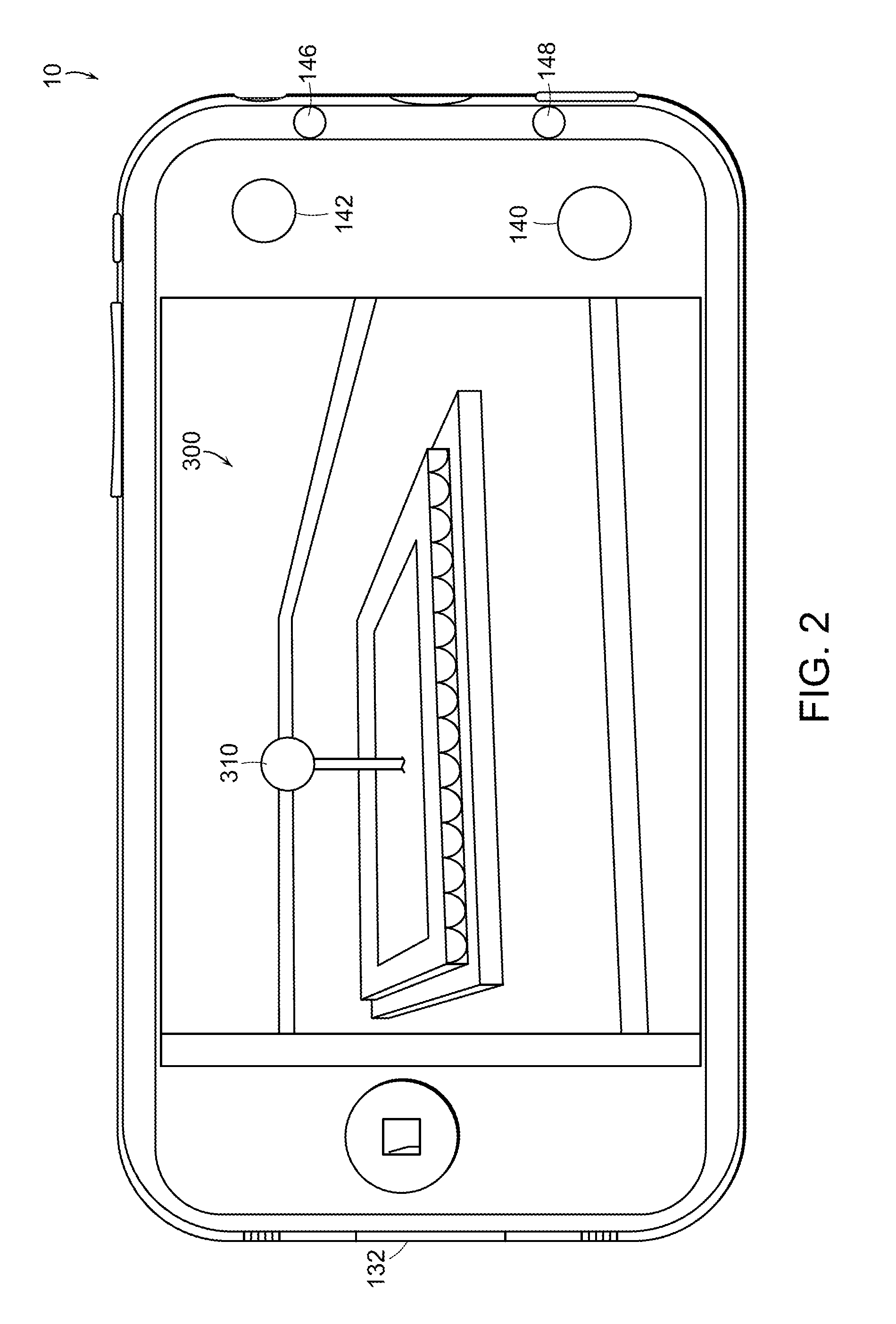

[0036] In an exemplary form, a 3D model or “virtual model” is used as a starting point, such as the image of the plaza of FIG. 1a. Multiple users (or a single user taking multiple pictures) take pictures (images) of the plaza from various locations, marked A-E in FIG. 1b, using a mobile device such as the smart phone 10 shown in FIG. 3. Each of images A-E includes not only the image itself but also metadata associated with it, including EXIF data, time, position, and orientation. In this example, the images and metadata are uploaded as they are acquired to a communication network 205 (e.g., a cell network) connected to an image processing server 211 (FIG. 3). In some embodiments, the mobile device also includes one or more depth cameras, as shown in FIG. 2.
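
As an illustration of the per-image record described in paragraph [0036], the following minimal Python sketch bundles an image reference with its EXIF data, time, position, and orientation, and serializes it for upload. The class names, field names, JSON wire format, file name, and coordinate values are all hypothetical; the source does not specify a data layout or upload protocol.

```python
import json
from dataclasses import dataclass, asdict, field
from datetime import datetime, timezone


@dataclass
class Orientation:
    """Device orientation at capture time, in degrees (hypothetical fields)."""
    heading_deg: float        # compass direction the camera faces
    pitch_deg: float = 0.0
    roll_deg: float = 0.0


@dataclass
class ImageRecord:
    """One captured image plus the metadata listed in the text."""
    label: str                # e.g. "A" through "E", as in FIG. 1b
    image_file: str           # hypothetical file name
    captured_at: datetime     # capture time
    latitude: float           # position
    longitude: float
    orientation: Orientation
    exif: dict = field(default_factory=dict)

    def to_payload(self) -> str:
        """Serialize to JSON for upload (the wire format is an assumption)."""
        data = asdict(self)   # also converts the nested Orientation to a dict
        data["captured_at"] = self.captured_at.isoformat()
        return json.dumps(data, sort_keys=True)


# Example record for location A; all values are made up for illustration.
record = ImageRecord(
    label="A",
    image_file="plaza_A.jpg",
    captured_at=datetime(2020, 1, 1, 12, 0, tzinfo=timezone.utc),
    latitude=32.0,
    longitude=-96.0,
    orientation=Orientation(heading_deg=45.0),
    exif={"FocalLength": 4.1},
)
payload = record.to_payload()
```

Keeping position and orientation as explicit fields, rather than leaving them buried in the EXIF dictionary, would let a server read them without parsing vendor-specific tags; this is a design choice of the sketch, not something the source states.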

[0037] The image processing server 211 uses the network 205 and GPS information from the phone 10 to process the metadata to obtain very accurate locations for the point of origin of images A-E. Using image matching and registration tech...
