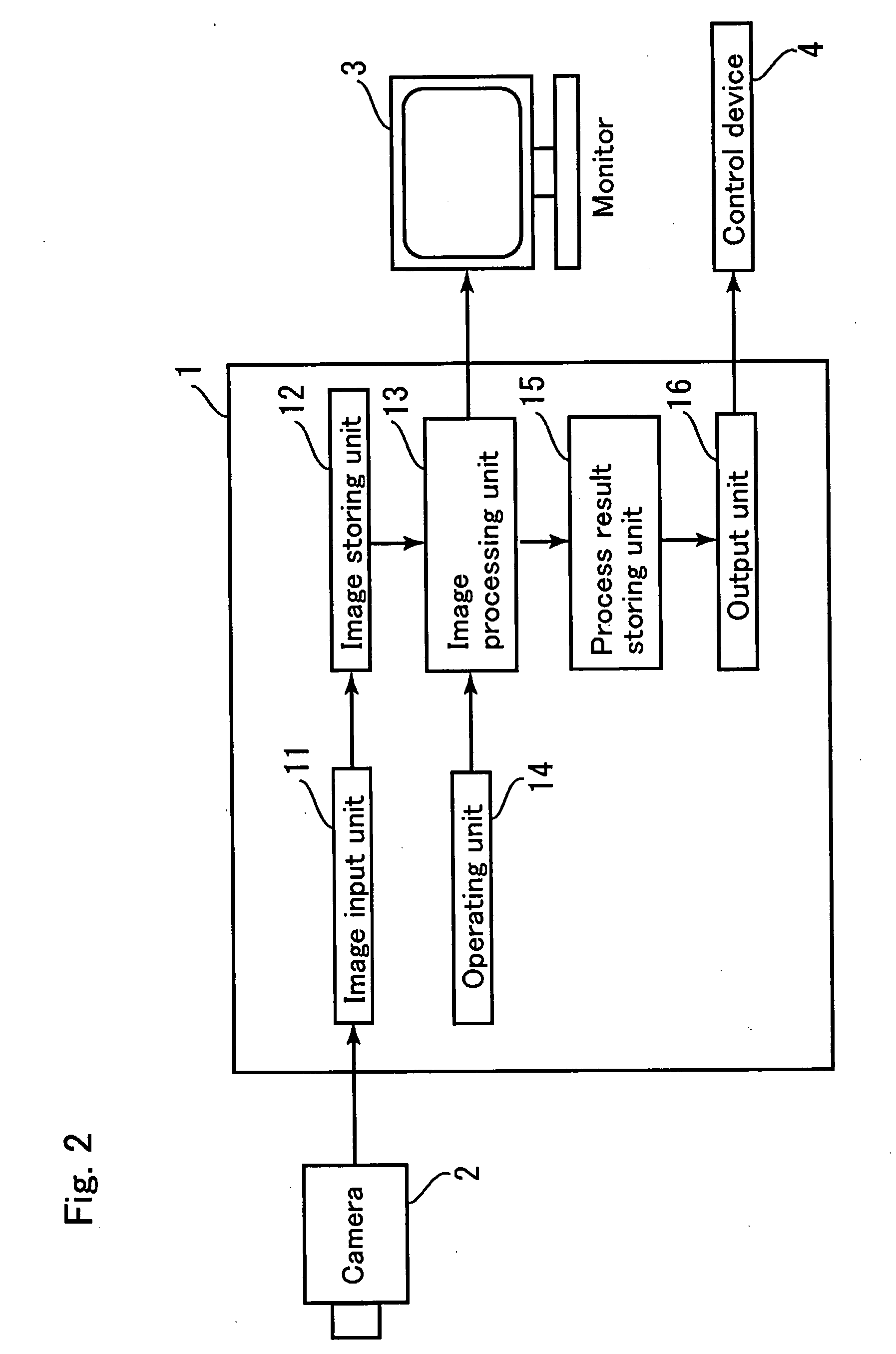

Character extracting apparatus, method, and program

A character extraction technology, applied in the field of character extraction apparatus, methods and programs. It addresses the problems that a lateral portion is difficult to recognize as a character area, that the character area itself is difficult to recognize, and that the total processing time for extracting character areas becomes longer, so that each character in an image can be extracted accurately.

second embodiment

[0073] FIG. 9 is a detailed flowchart showing the data interpolated with the local minimum points according to the second embodiment. The data used for compensation represents uneven brightness and similar variation in the background of the captured image. A position corresponding to the background portion of the image 61 is calculated based on the integrated pixel value at each coordinate position along the character string direction A. The uneven brightness over the whole of the image 61 is then estimated from the pixel value integration evaluation values at the positions calculated as the background portion of the image 61.
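The integrated pixel value described above can be illustrated with a short sketch. It assumes, purely for illustration, that the character string direction A runs along the horizontal axis of a grayscale array, so that each column is summed over its rows; the function name `projection_profile` is hypothetical and does not come from the patent text.

```python
import numpy as np


def projection_profile(image):
    """Integrate pixel values at each coordinate position along direction A.

    Assumption: direction A is the horizontal axis, so every column of the
    2-D grayscale array is summed over its rows.
    """
    image = np.asarray(image, dtype=np.float64)
    if image.ndim != 2:
        raise ValueError("expected a 2-D grayscale image")
    return image.sum(axis=0)


# Tiny example: a 2 x 3 image gives one integrated value per column.
example_image = np.array([[1, 2, 3],
                          [4, 5, 6]])
example_profile = projection_profile(example_image)  # array([5., 7., 9.])
```

The resulting one-dimensional profile plays the role of the waveform data from which background positions are later selected.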

[0074] To extract candidates for positions presumed to be the background portion of the image 61, each coordinate position along the character string direction A at which the integrated pixel value (the pixel value integration evaluation value) is a local maximum is extracted over the image 61. In more detail, it is compared between the pixel value integration evalua...

first embodiment

[0079] The following process is substantially the same as the process in the first embodiment, except that the candidate minimum point 731 is used instead of the section minimum value point 631. At Step S415, as shown in FIG. 8D, a base value 632 is calculated at each coordinate position along the character string direction A by interpolating the pixel value integration evaluation values at the candidate minimum points 731 extracted at Step S414. At Step S415, the data interpolated with the local minimum points, given by the base values 632, is subtracted from the projection data, that is, the waveform data 62. In other words, the base value 632 is subtracted from the waveform data 62 at each coordinate position along the character string direction A. As a result, compensated waveform data 62c as shown in FIG. 8F is generated.
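The interpolation and subtraction in this step can be sketched as follows. The text does not state which interpolation is used, so linear interpolation via `numpy.interp` is an assumption here, as is holding the base value constant outside the outermost candidate points. The function name `compensate_waveform` and its parameters are hypothetical, and the candidate positions are taken as given input rather than detected.

```python
import numpy as np


def compensate_waveform(waveform, candidate_idx):
    """Subtract an interpolated base from the waveform data.

    waveform      : integrated pixel value at each position along direction A
    candidate_idx : positions of the candidate points (assumed to be given)

    Assumption: the base is obtained by linear interpolation between the
    candidate points; np.interp keeps the end values constant outside them.
    """
    waveform = np.asarray(waveform, dtype=np.float64)
    candidate_idx = np.unique(np.asarray(candidate_idx, dtype=np.intp))
    if candidate_idx.size == 0:
        raise ValueError("at least one candidate point is required")
    positions = np.arange(waveform.size)
    # Base value at every coordinate position, interpolated from the candidates.
    base = np.interp(positions, candidate_idx, waveform[candidate_idx])
    # Compensated waveform: original waveform minus the base value.
    return waveform - base, base


# Example: a slowly rising background (10, 12, 14) with two peaks on top.
example_waveform = [10, 30, 12, 40, 14]
compensated, base = compensate_waveform(example_waveform, [0, 2, 4])
# base        -> [10., 11., 12., 13., 14.]
# compensated -> [ 0., 19.,  0., 27.,  0.]
```

In this toy example the rising background is removed, so the values at the candidate points become zero and only the two peaks remain above the base.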

[0080] At Step S6, an area including the character to be extract...
