End-to-end model training method and device, computer equipment and storage medium

An end-to-end model training technology, applied in speech analysis, speech recognition, instruments, etc., which addresses problems such as heavy dependence on training data, poor robustness, and low flexibility.


Examples

Embodiment 1

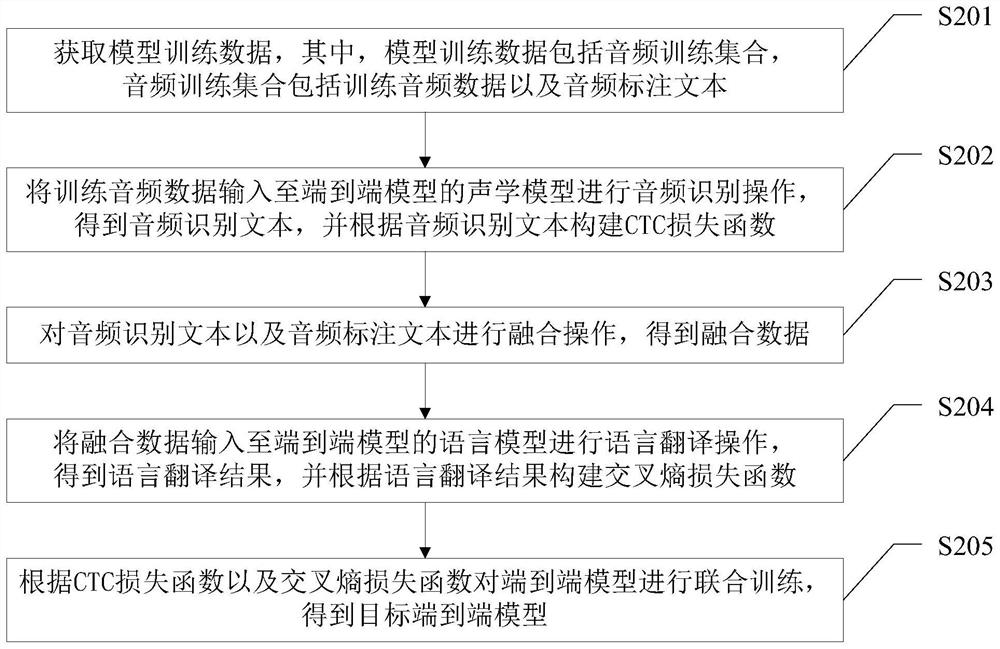

[0044] Refer again to Figure 2, which shows an implementation flowchart of the end-to-end model training method applied to speech recognition provided by Embodiment 1 of the present application. For convenience of description, only the parts related to the present application are shown.

[0045] The above end-to-end model training method applied to speech recognition includes steps S201, S202, S203, S204 and S205.

[0046] In step S201, model training data is acquired, wherein the model training data includes an audio training set, and the audio training set includes training audio data and audio annotation text.
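The structure described in step S201 can be sketched as a small data model. This is a minimal illustration only: the names `AudioSample`, `AudioTrainingSet`, `ModelTrainingData` and `acquire_model_training_data` are hypothetical and do not come from the application, and audio is assumed to be a plain list of waveform samples for simplicity.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class AudioSample:
    """One training pair (hypothetical name): audio plus its annotation text."""
    audio: List[float]      # assumed representation: raw waveform samples
    annotation_text: str    # the audio annotation text for this audio


@dataclass
class AudioTrainingSet:
    """The audio training set: training audio data with audio annotation text."""
    samples: List[AudioSample] = field(default_factory=list)


@dataclass
class ModelTrainingData:
    """Model training data; per step S201 it includes an audio training set."""
    audio_training_set: AudioTrainingSet


def acquire_model_training_data(
    pairs: List[Tuple[List[float], str]]
) -> ModelTrainingData:
    """Build the model training data from (audio, annotation text) pairs."""
    samples = [AudioSample(audio=a, annotation_text=t) for a, t in pairs]
    return ModelTrainingData(audio_training_set=AudioTrainingSet(samples=samples))


# Usage: two toy (audio, annotation text) pairs.
data = acquire_model_training_data(
    [([0.0, 0.1, -0.1], "hello"), ([0.2, 0.0], "world")]
)
print(len(data.audio_training_set.samples))
```

Keeping each audio clip and its annotation text in one record makes it harder for the two to drift out of alignment when the set is shuffled or batched.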

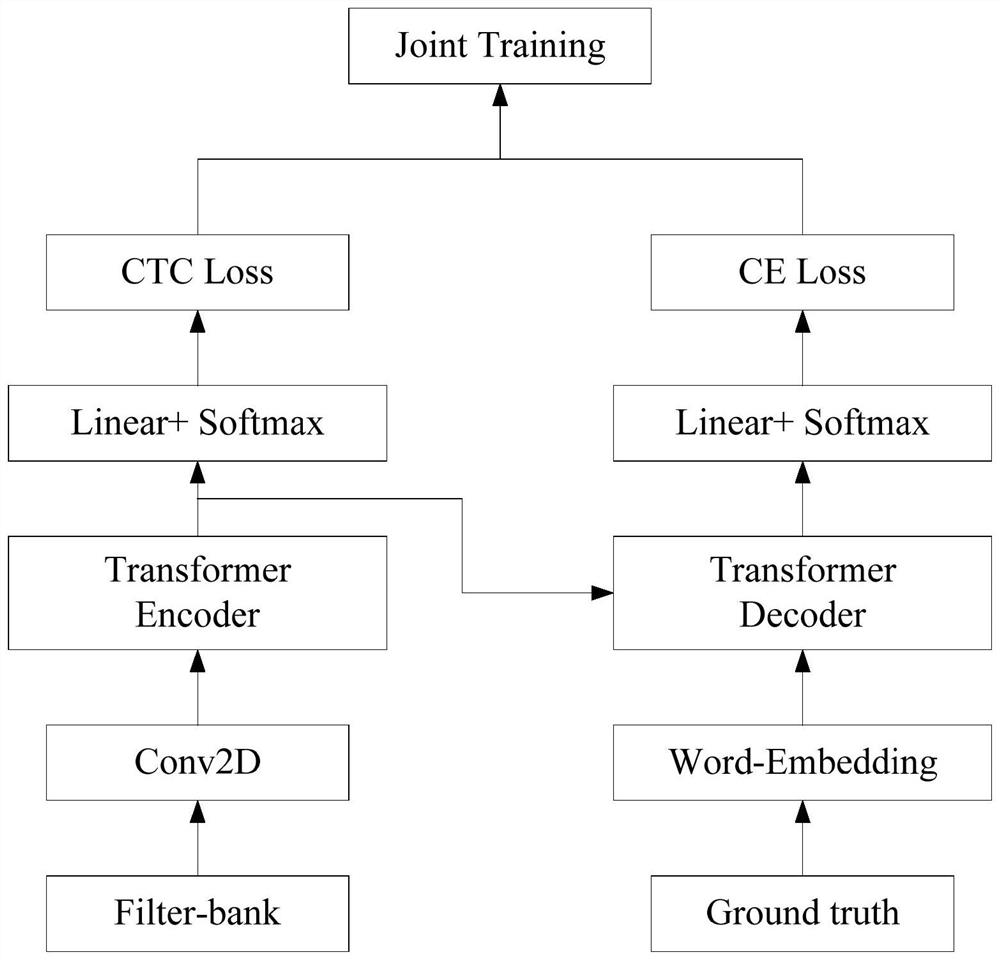

[0047] In this embodiment of the present application, the end-to-end model applied to speech recognition may use the standard CTC-Attention model, as shown in Figure 3.
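A CTC-Attention model is commonly trained with a multi-task objective that interpolates a CTC loss and an attention-decoder loss. The sketch below shows only that weighted combination. The text of the application shown here does not specify the loss, so the function name `joint_ctc_attention_loss`, the parameter `ctc_weight` and its default value are assumptions for illustration.

```python
def joint_ctc_attention_loss(
    ctc_loss: float, attention_loss: float, ctc_weight: float = 0.3
) -> float:
    """Interpolate the two losses: w * L_ctc + (1 - w) * L_att.

    ctc_weight is an assumed hyperparameter in [0, 1]; it is not taken
    from the application.
    """
    if not 0.0 <= ctc_weight <= 1.0:
        raise ValueError("ctc_weight must be in [0, 1]")
    return ctc_weight * ctc_loss + (1.0 - ctc_weight) * attention_loss


# Usage: equal weighting of the two branch losses.
loss = joint_ctc_attention_loss(ctc_loss=2.0, attention_loss=1.0, ctc_weight=0.5)
print(loss)
```

In a real training loop the two inputs would be the per-batch losses produced by the CTC branch and the attention decoder; plain floats are used here to keep the example self-contained.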

[0048] In the embodiment of the present application, the training audio data refers to the audio used for training the acoustic model in the end-to-en...

Embodiment 2

[0112] With further reference to Figure 9, as an implementation of the method shown in Figure 2 above, the present application provides an embodiment of an end-to-end model training apparatus applied to speech recognition. This apparatus embodiment corresponds to the method embodiment shown in Figure 2, and the apparatus can be applied to various electronic devices.

[0113] As shown in Figure 9, the end-to-end model training apparatus 200 applied to speech recognition in this embodiment includes: a training data acquisition module 210, an audio recognition module 220, a text fusion module 230, a language translation module 240 and a joint training module 250, wherein:

[0114] A training data acquisition module 210, configured to acquire model training data, wherein the model training data includes an audio training set, and the audio training set includes training audio data and audio annotation text;

[0115] The audio recognition module 2...
