Interactive small-sample semantic segmentation training method

A semantic segmentation training method in the fields of character and pattern recognition, instruments, and computer components. It addresses the limited applicability and adoption of existing approaches and reduces the heavy workload of labeling data.

Examples

Embodiment 1

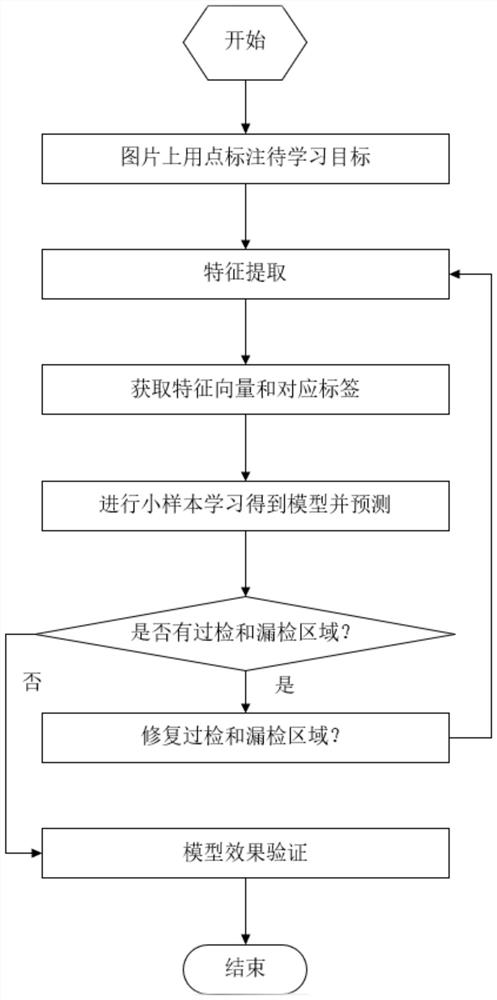

[0034] Embodiment 1 of this application provides the training method for interactive small-sample semantic segmentation shown in Figure 1:

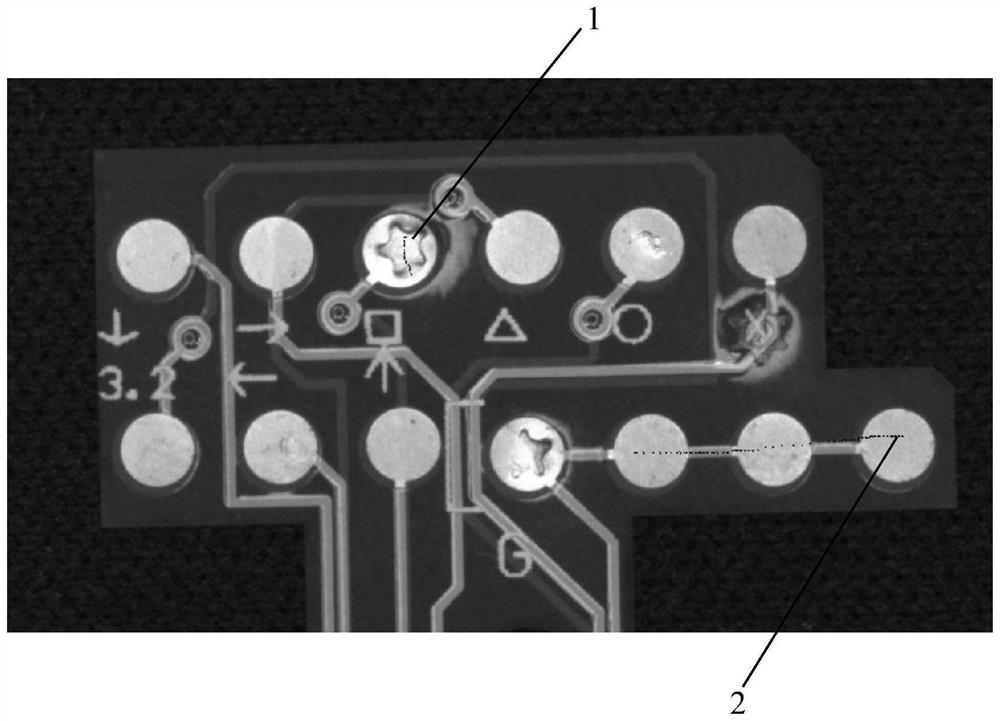

[0035] Step 1. As shown in Figure 3, the user draws a series of points of different categories on the original image, using the points to mark the target to be learned (abnormal sample 1, normal sample 2). Compared with traditional object detection, the labeling in the present invention need not be detailed: drawing a series of points of different categories on the image to be labeled is enough to achieve high-precision detection.
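A minimal sketch of how the Step 1 point annotations could be held in memory: each click is a pixel position on the original image plus a category id. The `PointLabel` class, the `group_by_category` helper, and the integer codes (1 for abnormal, 2 for normal, read from the parenthetical above) are hypothetical illustrations, not part of the patent.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class PointLabel:
    """One user click: a pixel position on the original image plus a category id."""
    x: float
    y: float
    category: int  # hypothetical encoding, e.g. 1 = abnormal sample, 2 = normal sample


def group_by_category(points):
    """Collect the clicked (x, y) positions of each category, in click order."""
    groups = defaultdict(list)
    for p in points:
        groups[p.category].append((p.x, p.y))
    return dict(groups)


clicks = [PointLabel(120, 45, 1), PointLabel(300, 210, 2), PointLabel(128, 50, 1)]
print(group_by_category(clicks))
# {1: [(120, 45), (128, 50)], 2: [(300, 210)]}
```

A handful of clicks per category is the whole annotation, which is where the labeling saving over box or mask annotation comes from.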

[0036] Step 2. Input the image annotated in Step 1 into the pre-trained model and perform feature extraction to obtain a feature map.

[0037] Step 3. Project the marked points onto the feature map to obtain feature vectors and category labels, and use a logistic regression model to classify and predict the original marked points.

[...

Embodiment 2

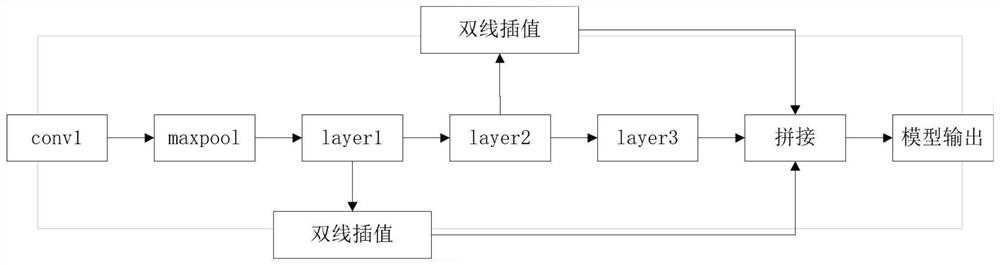

[0042] On the basis of Embodiment 1, Embodiment 2 of the present application provides the specific sub-steps of Step 2 shown in Figure 2:

[0043] Step 2.1. The annotated image is passed through the conv1 layer and the maxpool layer to obtain a feature map.

[0044] Step 2.2. The feature map is processed by layer1 and then fed into layer2 for feature extraction, followed by layer3 for further extraction. This improves the expressive power of the network and extracts deeper features.
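The layer names in Steps 2.1 and 2.2 (conv1, maxpool, layer1 to layer3) match the modules of torchvision's ResNet, so the sketch below assumes a ResNet-style backbone and traces how the spatial resolution shrinks at each stage. The kernel, stride, and padding values are the standard ResNet ones, assumed here because the patent does not state them; `trace_sizes` is a hypothetical helper.

```python
def conv_out(size, kernel, stride, padding):
    """Output length of a convolution or pooling layer along one spatial axis."""
    return (size + 2 * padding - kernel) // stride + 1


# Assumed ResNet-style stages as (name, kernel, stride, padding). Each residual
# stage is represented only by the 3x3 convolution that sets its stride.
STAGES = [
    ("conv1", 7, 2, 3),
    ("maxpool", 3, 2, 1),
    ("layer1", 3, 1, 1),
    ("layer2", 3, 2, 1),
    ("layer3", 3, 2, 1),
]


def trace_sizes(height, width):
    """Return the (height, width) of the feature map after each stage."""
    sizes = {}
    for name, kernel, stride, padding in STAGES:
        height = conv_out(height, kernel, stride, padding)
        width = conv_out(width, kernel, stride, padding)
        sizes[name] = (height, width)
    return sizes


print(trace_sizes(224, 224))
# {'conv1': (112, 112), 'maxpool': (56, 56), 'layer1': (56, 56), 'layer2': (28, 28), 'layer3': (14, 14)}
```

Under these assumptions the layer1, layer2, and layer3 outputs differ in size by factors of two, so they must be brought to a common resolution before they can be concatenated.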

[0045] Step 2.3. The output of layer1 is interpolated together with the output of layer2, and the result is concatenated with the output of layer3, yielding concatenated feature maps at several scales as the final output, which helps the network learn and represent features.
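Step 2.3 does not say which resolution the maps are interpolated to or which interpolation is used. The sketch below assumes every map is resized to one common size (here the layer1 size) with nearest-neighbour interpolation and then concatenated along the channel axis. Feature maps are plain nested lists `[channel][row][col]`, and `resize_nearest` and `fuse_multiscale` are hypothetical names; a practical version would use a tensor library's interpolation routine instead.

```python
def resize_nearest(fmap, out_h, out_w):
    """Nearest-neighbour resize of a feature map stored as [channel][row][col]."""
    in_h, in_w = len(fmap[0]), len(fmap[0][0])
    return [
        [[channel[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
         for r in range(out_h)]
        for channel in fmap
    ]


def fuse_multiscale(fmaps, out_h, out_w):
    """Resize every map to (out_h, out_w), then concatenate along the channel axis."""
    fused = []
    for fmap in fmaps:
        fused.extend(resize_nearest(fmap, out_h, out_w))
    return fused


# Toy single-channel maps standing in for the layer1 (4x4), layer2 (2x2), layer3 (1x1) outputs.
layer1_out = [[[float(4 * r + c) for c in range(4)] for r in range(4)]]
layer2_out = [[[10.0, 11.0], [12.0, 13.0]]]
layer3_out = [[[99.0]]]

fused = fuse_multiscale([layer1_out, layer2_out, layer3_out], 4, 4)
print(len(fused), len(fused[0]), len(fused[0][0]))  # 3 4 4
print(fused[1][0])  # [10.0, 10.0, 11.0, 11.0]
```

Each output cell now carries features from three depths of the network, which is the multi-scale property the step describes.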

Embodiment 3

[0047] On the basis of Embodiment 1, Embodiment 3 of the present application provides the specific steps of Step 3:

[0048] Step 3.1. Project each marked point $\text{point}_i(x, y)$ onto the feature map to obtain the corresponding point $\text{point}_i(x_{new}, y_{new})$ on the feature map:

[0049] $$(x_{new},\, y_{new}) = \left(x \cdot \frac{w_t}{w},\; y \cdot \frac{h_t}{h}\right) \qquad (1)$$

[0050] In the above formula, $(x, y)$ are the coordinates of the original marked point; $(x_{new}, y_{new})$ are the coordinates of that point projected onto the feature map; $w_t$ and $h_t$ are the width and height of the feature map; and $w$ and $h$ are the width and height of the original image.
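Equation (1) is a per-axis rescaling from image pixels to feature-map cells. A minimal Python rendering, assuming coordinates are floats in pixels; `project_point` is a hypothetical helper name.

```python
def project_point(x, y, img_w, img_h, feat_w, feat_h):
    """Equation (1): rescale original-image coordinates to feature-map coordinates."""
    x_new = x * feat_w / img_w  # x * (w_t / w)
    y_new = y * feat_h / img_h  # y * (h_t / h)
    return x_new, y_new


# A click at (112, 56) on a 224x224 image, projected onto a 28x28 feature map.
print(project_point(112.0, 56.0, 224, 224, 28, 28))  # (14.0, 7.0)
```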

[0051] Step 3.2. Obtain the feature vector T and the category label of each marked point from its projection on the feature map.
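Step 3.2 reads a feature vector at each projected point. The patent does not say how fractional coordinates are handled; this sketch assumes the point is mapped to the cell that contains it and clamped to the map bounds (bilinear sampling would be an alternative). `feature_at` and `build_training_set` are hypothetical helpers, and the feature map is a nested list `[channel][row][col]`.

```python
def feature_at(fmap, x_new, y_new):
    """Feature vector T at a projected point, read from the cell that contains it.

    fmap is a nested list [channel][row][col]; out-of-range points are clamped.
    int() truncates toward zero, which equals floor for non-negative coordinates.
    """
    height, width = len(fmap[0]), len(fmap[0][0])
    col = min(max(int(x_new), 0), width - 1)
    row = min(max(int(y_new), 0), height - 1)
    return [channel[row][col] for channel in fmap]


def build_training_set(fmap, projected_points):
    """Pair each point's feature vector T with its category label."""
    features, labels = [], []
    for x_new, y_new, label in projected_points:
        features.append(feature_at(fmap, x_new, y_new))
        labels.append(label)
    return features, labels


# Toy 2-channel, 2x2 feature map and two labelled points (x_new, y_new, category).
fmap = [[[0, 1], [2, 3]], [[10, 11], [12, 13]]]
print(build_training_set(fmap, [(1.7, 0.2, 1), (0.4, 1.9, 2)]))
# ([[1, 11], [2, 12]], [1, 2])
```

The resulting (T, label) pairs are the only training data the classifier in Step 3.3 needs.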

[0052] Step 3.3. Use a logistic regression model to classify and predict the original marked points:

[0053]

[0054] In the above formula, x represents th...
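The patent's own logistic regression formula ([0053]) is not reproduced in this excerpt, so the following is a generic binary logistic regression trained by batch gradient descent on log-loss, not necessarily the exact model in the patent. It assumes two classes remapped to 0 and 1 (for example normal = 0, abnormal = 1); the learning rate, epoch count, toy data, and helper names are illustrative. With more than two point categories, a one-vs-rest or softmax variant would be needed.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logreg(features, labels, lr=0.5, epochs=500):
    """Fit binary logistic regression (labels 0/1) by batch gradient descent on log-loss."""
    n, dim = len(features), len(features[0])
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, y in zip(features, labels):
            # Gradient of log-loss w.r.t. the score is (predicted probability - label).
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) - y
            for j in range(dim):
                grad_w[j] += err * x[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict(w, b, x):
    """Class 1 if the predicted probability is at least 0.5, else class 0."""
    return 1 if sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) >= 0.5 else 0


# Toy feature vectors T for two normal (0) and two abnormal (1) points.
T = [[0.0, 0.1], [0.2, 0.0], [1.0, 0.9], [0.9, 1.1]]
y = [0, 0, 1, 1]
w, b = train_logreg(T, y)
print([predict(w, b, t) for t in T])  # [0, 0, 1, 1]
```

A linear classifier on frozen pre-trained features keeps training cheap enough to rerun after every batch of user clicks, which suits the interactive, few-label setting.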

