Conference voice data processing method and system

A conference voice data processing method and system, applicable to speech analysis, speech recognition, instruments, and related fields, that addresses problems such as speaker confusion, inconvenience, and wasted labor and time.

Examples

Embodiment 1

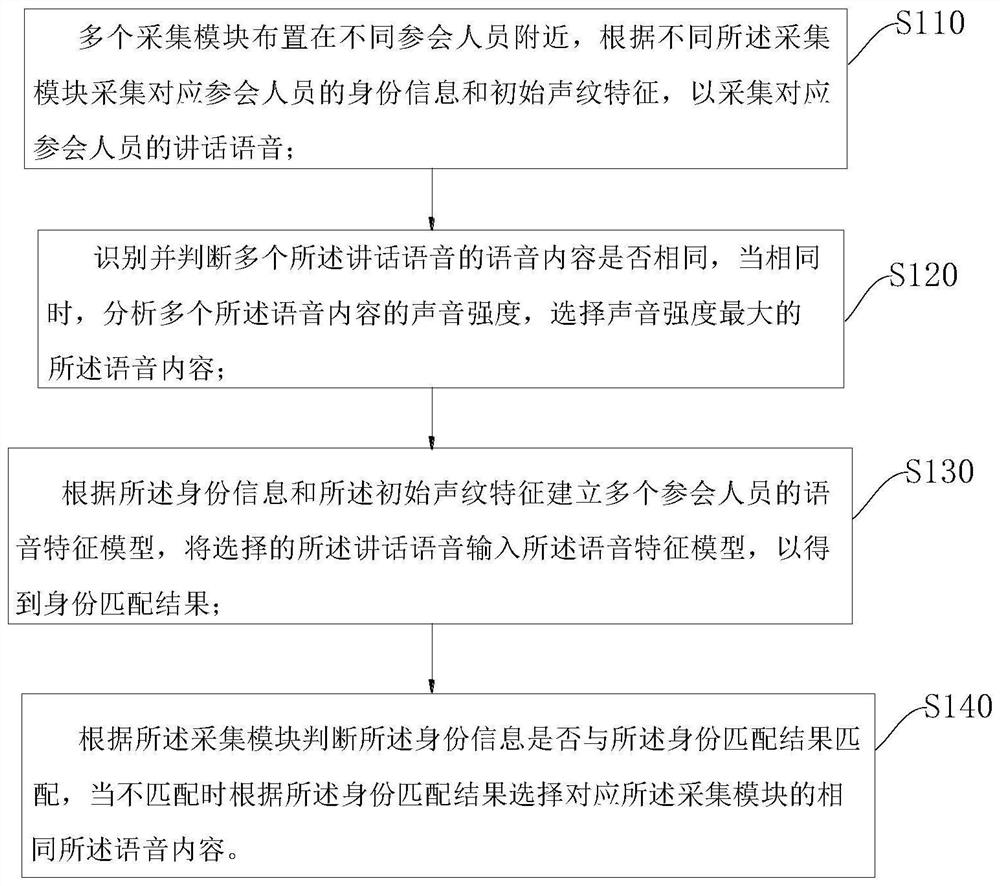

[0035] A method for processing conference voice data, comprising the following steps:

[0036] S110: A plurality of acquisition modules 201 are arranged near different participants. Each acquisition module 201 collects the identity information and initial voiceprint features of its corresponding participant, and captures that participant's speech;

[0037] S120: Determine whether the content of the multiple captured speech recordings is the same; if it is, analyze the sound intensity of each recording and select the speech content with the highest sound intensity;

[0038] S130: Establish voice feature models for the plurality of participants from the identity information and the initial voiceprint features, and input the selected speech into the voice feature models to obtain an identity matching resul...
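The identity matching in S130 can be illustrated with a minimal Python sketch. The visible text does not specify how the voice feature models are built or compared, so everything here is an assumption for illustration: voiceprint features are treated as fixed-length numeric vectors, each participant's "model" is simply the stored initial voiceprint, comparison uses cosine similarity, and the names `build_voice_models`, `match_identity`, and the `threshold` parameter are hypothetical.

```python
import math
from typing import Dict, List, Optional


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def build_voice_models(enrollments: Dict[str, List[float]]) -> Dict[str, List[float]]:
    """Assumed model: one reference vector per identity, copied from the
    initial voiceprint features collected in S110."""
    return {identity: list(vector) for identity, vector in enrollments.items()}


def match_identity(
    models: Dict[str, List[float]],
    features: List[float],
    threshold: float = 0.8,  # hypothetical acceptance threshold
) -> Optional[str]:
    """Return the best-matching identity, or None if no model is similar enough."""
    best_identity, best_score = None, -1.0
    for identity, reference in models.items():
        score = cosine_similarity(reference, features)
        if score > best_score:
            best_identity, best_score = identity, score
    return best_identity if best_score >= threshold else None


models = build_voice_models({"alice": [1.0, 0.0, 0.2], "bob": [0.0, 1.0, 0.1]})
matched = match_identity(models, [0.9, 0.1, 0.2])
unmatched = match_identity(models, [0.0, 0.0, 1.0])
```

Returning `None` below the threshold is a design choice of this sketch: it keeps a poor match from being silently attributed to a participant.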

Embodiment 2

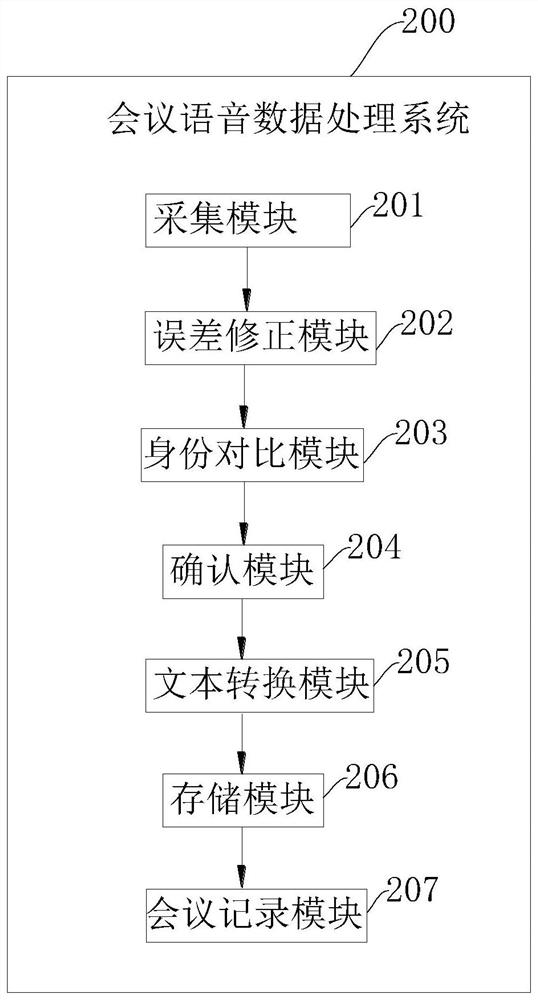

[0051] See Figure 2, which is a schematic diagram of a conference voice data processing system 200 provided by an embodiment of the present invention.

[0052] A conference voice data processing system 200 comprises an error correction module 202, a confirmation module 204, an identity comparison module 203, and a plurality of acquisition modules 201. The acquisition modules 201 are arranged near different participants; each acquisition module 201 collects the identity information and initial voiceprint features of its corresponding participant and captures that participant's speech. The error correction module 202 determines whether the content of the multiple captured speech recordings is the same and, if it is, analyzes the sound intensity of each and selects the speech content with the largest sound intensi...
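The selection performed by the error correction module 202 might look roughly like the following Python sketch. Several details are assumptions rather than anything stated in the text: speech content is represented by a transcript string from some unspecified recognizer, "the same" means equal after case and whitespace normalization, sound intensity is measured as root-mean-square amplitude, and the `Capture` type and function names are hypothetical. The visible text also does not say what happens when the contents differ, so this sketch simply returns the captures unchanged in that case.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Capture:
    module_id: int            # which acquisition module recorded it (hypothetical field)
    transcript: str           # recognized speech content (assumed to be available)
    samples: Sequence[float]  # raw audio samples


def rms_intensity(samples: Sequence[float]) -> float:
    """Root-mean-square amplitude, used here as the sound intensity measure."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivially different transcripts compare equal."""
    return " ".join(text.lower().split())


def select_capture(captures: List[Capture]) -> List[Capture]:
    """If every capture carries the same content, keep only the loudest one.
    Otherwise return the captures unchanged (an assumption of this sketch)."""
    if len(captures) < 2:
        return list(captures)
    first = normalize(captures[0].transcript)
    if all(normalize(c.transcript) == first for c in captures):
        return [max(captures, key=lambda c: rms_intensity(c.samples))]
    return list(captures)


same = [
    Capture(1, "Hello everyone", [0.1, -0.1, 0.1]),
    Capture(2, "hello  everyone", [0.5, -0.4, 0.5]),
    Capture(3, "Hello everyone", [0.2, -0.2, 0.2]),
]
selected = select_capture(same)
mixed = select_capture(same + [Capture(4, "Next item", [0.9])])
```

The intuition is that a participant's own acquisition module, being closest, should record that participant loudest, so keeping the highest-intensity copy discards the same utterance picked up by neighboring modules.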

Embodiment 3

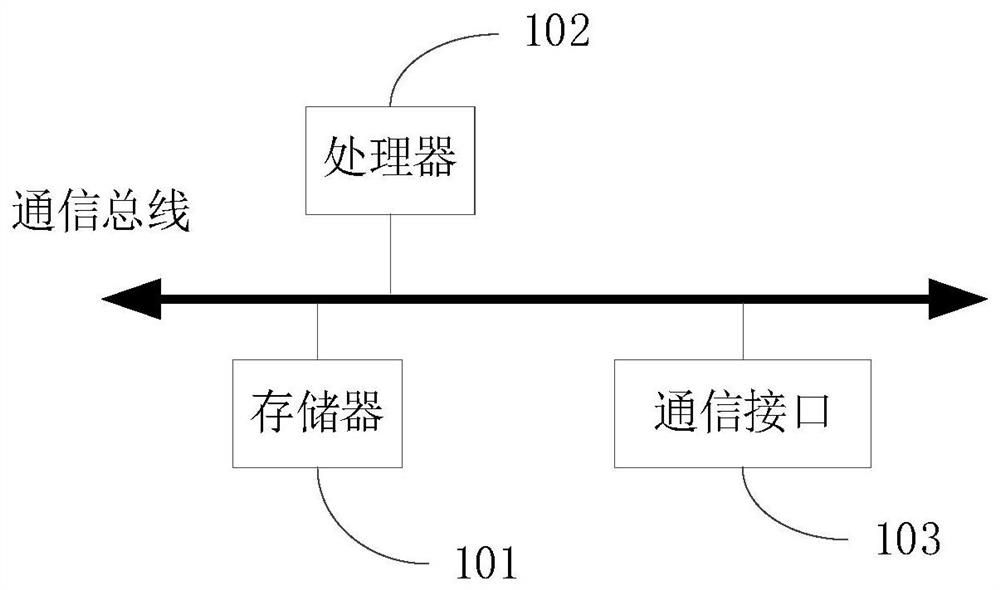

[0064] See Figure 3, which is a schematic structural block diagram of an electronic device provided in an embodiment of the present application. The electronic device includes a memory 101, a processor 102, and a communication interface 103. The memory 101, the processor 102, and the communication interface 103 are electrically connected to each other, directly or indirectly, to enable data transmission or interaction. For example, these components can be electrically connected to each other through one or more communication buses or signal lines. The memory 101 can be used to store software programs and modules, such as the program instructions/modules corresponding to the conference speech processing system provided in the embodiment of the present application. The processor 102 performs various functional applications and data processing by executing the software programs and modules stored in the memory 101. The communication interface 103 can be used for signalin...
