Multi-modal joint event detection method based on pictures and sentences

A technique for jointly detecting events in pictures and sentences, applicable to character and pattern recognition, still-image data retrieval, computer components, and related fields, with the stated effect of improving performance.

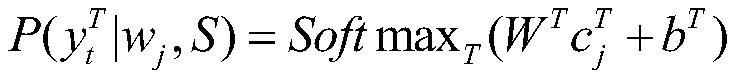

Embodiment Construction

[0070] The accompanying drawings show non-limiting schematic flow diagrams of preferred embodiments of the present invention. The technical solutions of the present invention are described in detail below with reference to those drawings.

[0071] Event detection is an important part of the event extraction task: it identifies the image actions and text trigger words that mark the occurrence of an event and classifies them into predefined event types. It is widely applied in network public opinion analysis and intelligence collection. As the carriers of network information grow more diverse, researchers have begun to study event detection across different domains, that is, how to automatically obtain events of interest from different information carriers such as unstructured pictures and text. The same event may also appear in different forms in pictures and in sentences. However, the existing...
