Multi-depth camera rapid calibration and data fusion method

A rapid calibration and data fusion method for multiple depth cameras, applied in the field of 3D vision. It addresses the data fusion errors that arise because the data collected by the cameras cannot be synchronized, reducing the matching error and improving the fusion accuracy.


Embodiment 1

[0049] Embodiment 1 quick calibration

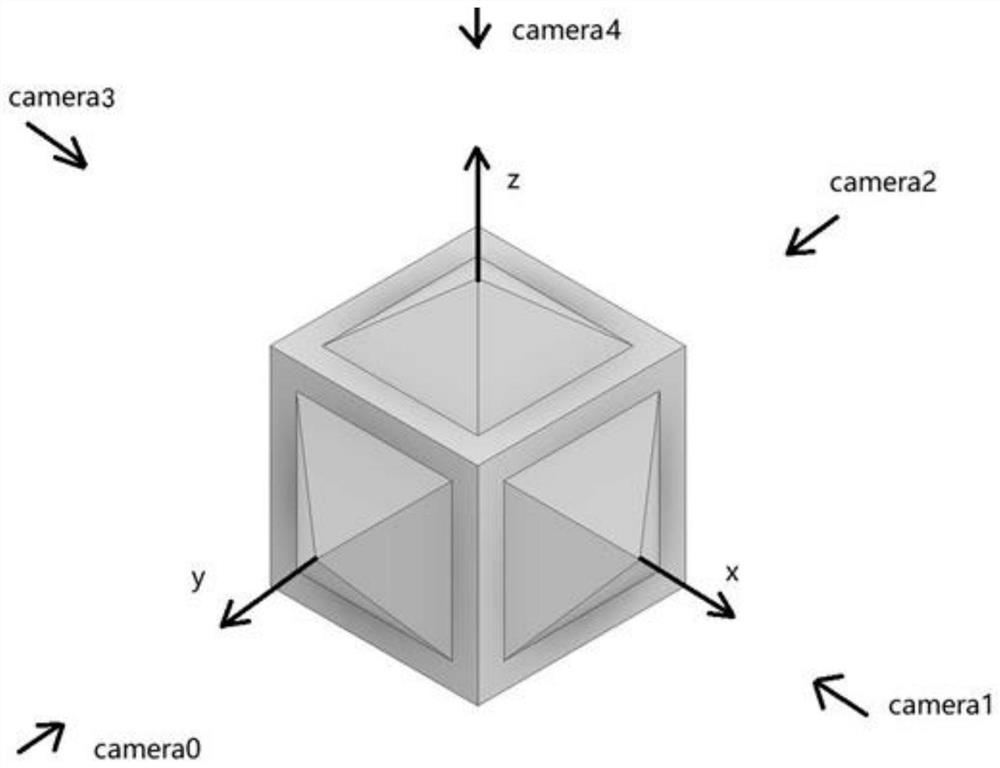

[0050] The calibration object and its placement are shown in image 3. The calibration object is based on a cube, with a pyramid arranged at the center of each of five faces. The four faces of each pyramid are identical, and the angle between each pyramid face and the underlying plane is 106 degrees.
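
The pyramid geometry can be illustrated with a minimal sketch. It assumes that the stated 106 degrees is the exterior angle between a pyramid face and the flat surface around it, so each face is tilted 180 - 106 = 74 degrees from that surface. This reading, the function name `pyramid_face_normals`, and the choice of axes are assumptions made for illustration and are not stated in the source.

```python
import math

def pyramid_face_normals(face_to_plane_deg=106.0):
    """Unit outward normals of the four side faces of a square pyramid.

    The base lies in the z = 0 plane and the apex points along +z.
    """
    # Assumption: 106 degrees is the exterior angle between a side face and
    # the surrounding flat surface, so the face tilt is 180 - 106 = 74 degrees.
    tilt = math.radians(180.0 - face_to_plane_deg)
    horizontal = math.sin(tilt)  # component lying in the base plane
    vertical = math.cos(tilt)    # component along the pyramid axis
    return [
        (horizontal, 0.0, vertical),
        (-horizontal, 0.0, vertical),
        (0.0, horizontal, vertical),
        (0.0, -horizontal, vertical),
    ]

normals = pyramid_face_normals()
```

Under this assumption, the four identical faces give four distinct plane orientations per pyramid, which a depth camera looking at that face of the cube could fit planes to.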

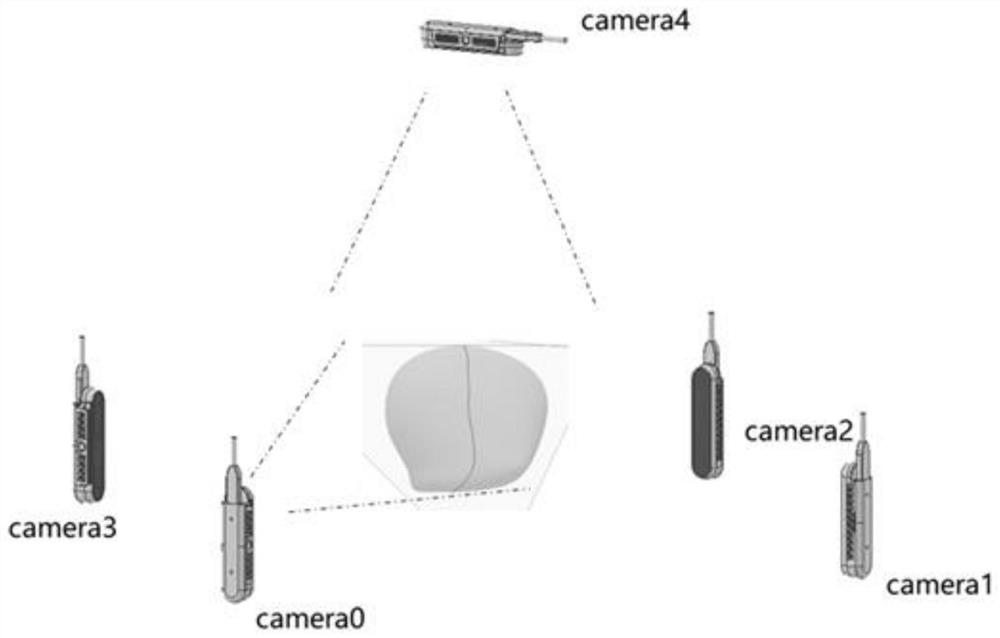

[0051] To calibrate, first place the calibration object at the center of the region enclosed by the five cameras (camera0, camera1, camera2, camera3, camera4), with each face of the calibration object facing a camera. While the calibration object is stationary, the five cameras collect data in turn: each camera captures once per round, and the rounds are repeated several times. The calibration calculation is then performed to complete the calibration.
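
The round-robin acquisition described above can be sketched as follows. This is only an illustration: `fake_capture` is a hypothetical stand-in for a real depth grab, and averaging the repeated rounds is an assumption, since the source only says that the calibration calculation follows the repeated rounds.

```python
from statistics import mean

CAMERAS = ["camera0", "camera1", "camera2", "camera3", "camera4"]

def capture_schedule(cameras, rounds):
    """Yield (round_index, camera) so that only one camera is active at a time."""
    for round_index in range(rounds):
        for camera in cameras:
            yield round_index, camera

def collect(cameras, rounds, capture):
    """Run the schedule and group the captured samples by camera name."""
    samples = {camera: [] for camera in cameras}
    for round_index, camera in capture_schedule(cameras, rounds):
        samples[camera].append(capture(camera, round_index))
    return samples

def fake_capture(camera, round_index):
    # Hypothetical stand-in for a depth grab: one distance value in metres.
    return 1.0 + 0.001 * round_index

samples = collect(CAMERAS, rounds=3, capture=fake_capture)
# Assumption: repeated rounds are combined by averaging to suppress noise.
averaged = {camera: mean(values) for camera, values in samples.items()}
```

Because the calibration object is stationary, the order in which the cameras fire does not affect the geometry, so a simple sequential schedule is enough to avoid mutual interference.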

[0052] The principle is as follows:

[0053] a) The system itself defines a default local coordinate system, as follows: the y-axis of the local coordinate system points to camera0 (camera 0) in the po...

Embodiment 2

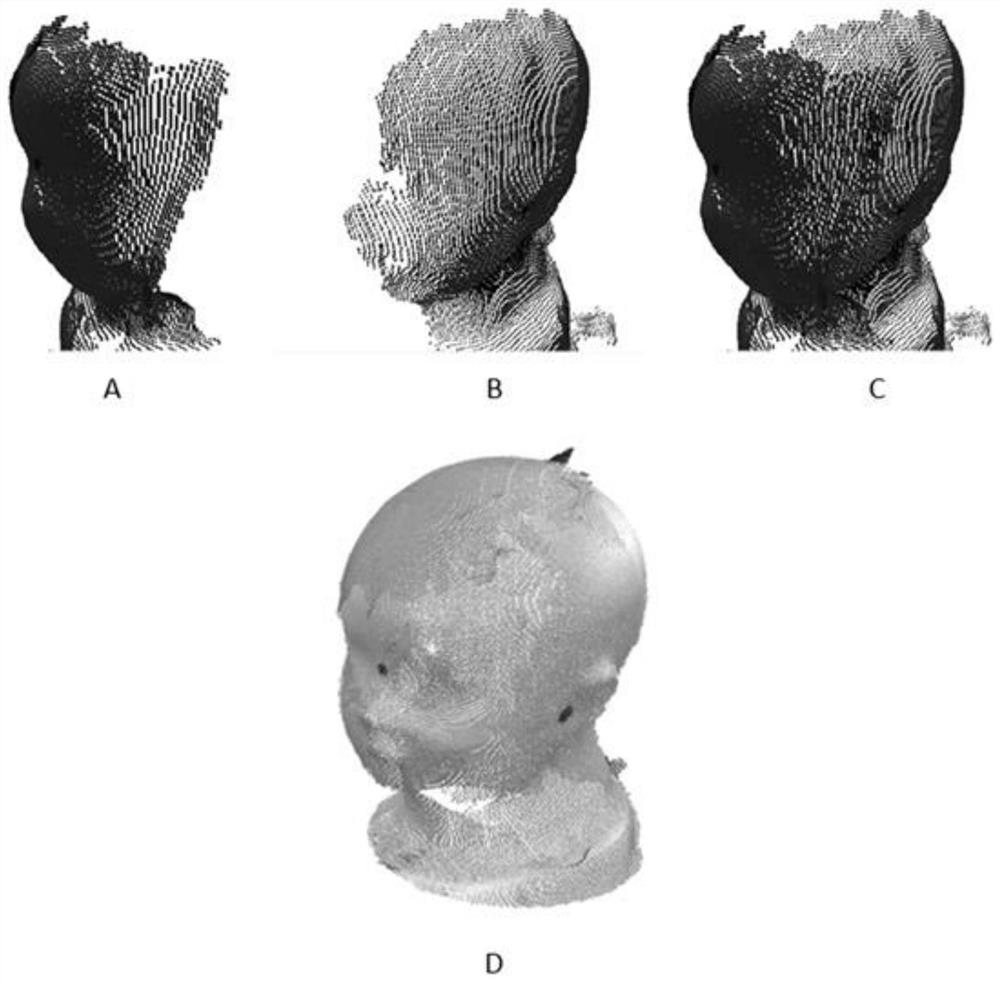

[0084] Embodiment 2 Dynamic matching-fusion

[0085] The measured object cannot remain completely still during measurement, and cameras in the scanner that interfere with one another cannot be activated at the same time, so they must capture in a time-staggered sequence. As a result, the data collected by the individual cameras do not correspond to the target at a single fixed position. Even when the calibration is perfectly accurate, this causes data fusion errors. To solve this problem, the present invention proposes a method that matches the RGB color images and depth data of integrated cameras to reduce multi-camera point cloud fusion errors.
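
A small worked example may help show why staggered capture causes a fusion error even with perfect calibration. The numbers here (a target drifting at 10 mm/s and a 0.1 s capture slot per camera) are hypothetical and are not taken from the source. The constant-velocity model is also an assumption.

```python
def capture_offsets_mm(speed_mm_per_s, slot_s, camera_count):
    """Displacement of the target at each camera's capture time, relative to
    the first capture, assuming constant speed and equal time slots."""
    return [speed_mm_per_s * slot_s * index for index in range(camera_count)]

# Hypothetical values: 10 mm/s drift, 0.1 s per camera, five cameras.
offsets = capture_offsets_mm(10.0, 0.1, 5)
for index, offset in enumerate(offsets):
    print(f"capture {index}: target displaced by about {offset:.1f} mm")
```

With these assumed values the last capture sees the target several millimetres away from where the first capture saw it, which is the kind of misalignment the matching step is intended to reduce.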

[0086] Principle description:

[0087] a) In this application example, the top camera 4 is used as the reference camera; any of the other four cameras could be selected as the reference camera instead.

[0088] b) Stick three red marker dots on the top of the target, facing camera 4; these dots are easily recognized ...
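
One simple way to locate red marker dots in an RGB image is color thresholding followed by connected-component grouping. The source does not say how the dots are recognized, so the following is a minimal sketch of that swapped-in technique. The threshold values, the 4-connectivity, and the names `is_red` and `red_dot_centroids` are assumptions chosen for illustration.

```python
from collections import deque

def is_red(pixel, min_red=150, max_other=80):
    """Assumed rule: strong red channel, weak green and blue channels."""
    r, g, b = pixel
    return r >= min_red and g <= max_other and b <= max_other

def red_dot_centroids(image):
    """Return (row, col) centroids of 4-connected red regions.

    `image` is a list of rows, each row a list of (r, g, b) tuples.
    """
    height, width = len(image), len(image[0])
    seen = [[False] * width for _ in range(height)]
    centroids = []
    for row in range(height):
        for col in range(width):
            if seen[row][col] or not is_red(image[row][col]):
                continue
            # Breadth-first search collects one connected red region.
            queue = deque([(row, col)])
            seen[row][col] = True
            pixels = []
            while queue:
                r, c = queue.popleft()
                pixels.append((r, c))
                for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                    inside = 0 <= nr < height and 0 <= nc < width
                    if inside and not seen[nr][nc] and is_red(image[nr][nc]):
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            centroids.append((
                sum(p[0] for p in pixels) / len(pixels),
                sum(p[1] for p in pixels) / len(pixels),
            ))
    return centroids
```

A caller would pass the reference camera's color frame and check that exactly three centroids come back before using them. A real pipeline would likely also filter regions by size to reject noise.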

