Vehicle Control Method Based on Reinforcement Learning Control Strategy in Mixed Fleet

A control strategy and vehicle control technology, applied in vehicle position/route/height control, control/regulation systems, non-electric variable control, etc. It addresses the problems that results deviate easily and depend strongly on human factors, and it reduces computational cost, lessens formation deviation, and improves stability.

Embodiment Construction

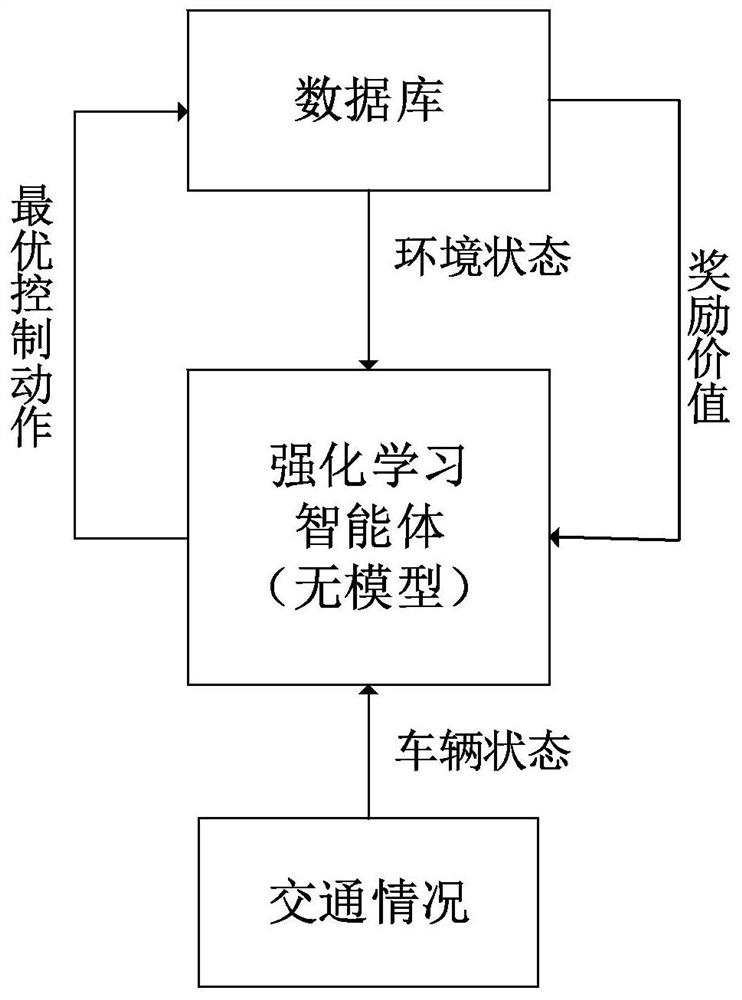

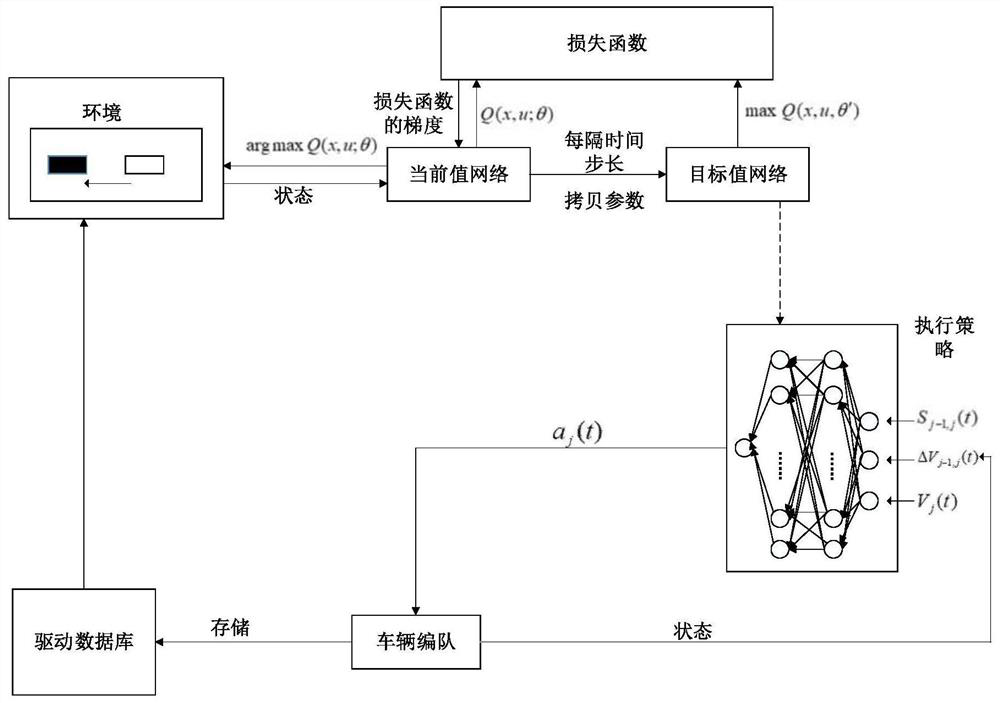

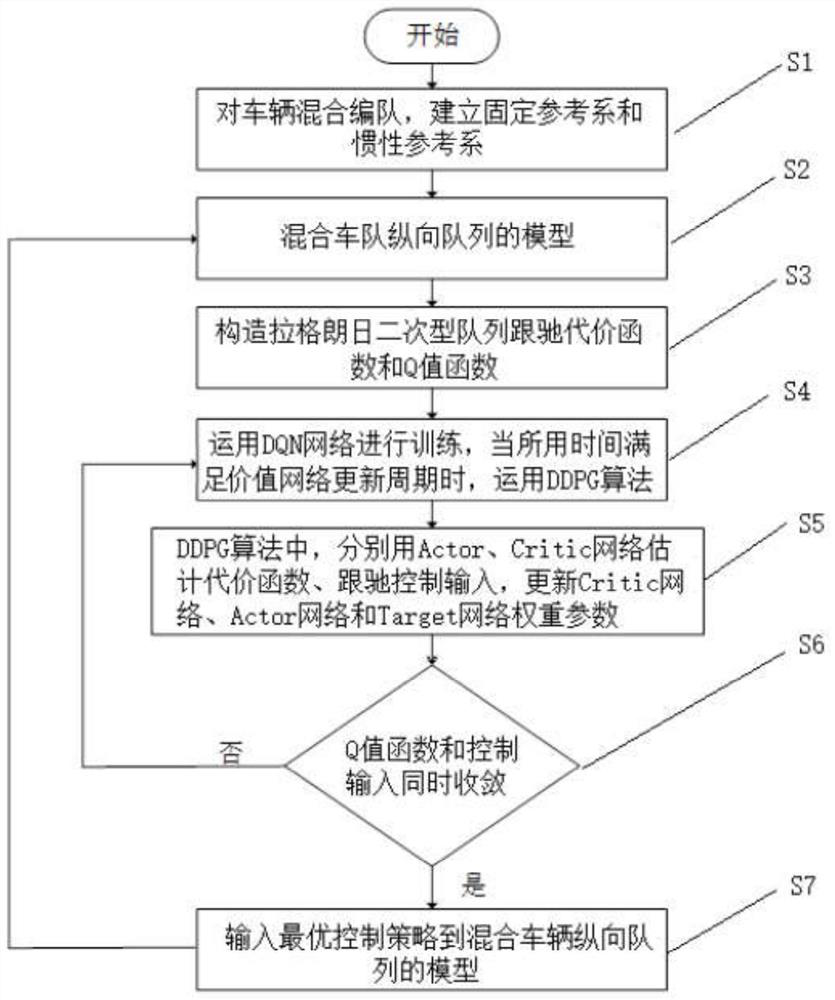

[0066] As shown in Figure 1, problems in which the state transition probabilities are known are generally called "model-based" problems, and those in which they are unknown are called "model-free" problems. The Markov decision process in the prior art is a modeling method proposed for the "model-free" problem. The reinforcement learning algorithm for mixed traffic proposed by the present invention is a model-free control strategy. The method builds a database from the driving data of the vehicles in the mixed fleet, such as speed, acceleration, and driving distance, and uses this database together with the traffic situation on the road as the environment. Each vehicle in the formation is regarded as an agent, and the environment feeds back states and rewards to the agent. The input is the defined environment state, vehicle state, and optimal control action, and the output is the reward value caused by the action in this state. It can be applied t...
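The agent/environment loop described in this paragraph can be illustrated with a minimal Python sketch. It is not the patented method: the source does not name a specific learning algorithm, so tabular Q-learning is swapped in here as one common model-free technique. The names `ACTIONS`, `TARGET_GAP`, `DT`, `discretize`, `step`, and `train`, the toy car-following dynamics, and the reward shape are all hypothetical choices made for illustration, and a real system would draw states from the fleet driving database rather than from a simulated leader.

```python
import random

# All constants below are hypothetical illustration values, not from the patent.
ACTIONS = (-1.0, 0.0, 1.0)  # candidate accelerations in m/s^2
TARGET_GAP = 20.0           # desired spacing to the vehicle ahead, in metres
DT = 1.0                    # simulation step in seconds


def discretize(gap, rel_speed):
    """Map continuous gap and relative speed onto a small grid of state indices."""
    gap_bin = max(0, min(9, int(gap // 5)))
    speed_bin = max(0, min(4, int(rel_speed // 2) + 2))
    return gap_bin, speed_bin


def step(gap, rel_speed, accel):
    """Toy environment: return next gap, next relative speed, and reward.

    rel_speed is leader speed minus follower speed; the leader is assumed
    to hold a constant speed, so only the follower's acceleration matters.
    """
    rel_speed = rel_speed - accel * DT
    gap = gap + rel_speed * DT
    # Penalise deviation from the target spacing, and heavily penalise a collision.
    reward = -abs(gap - TARGET_GAP) - (100.0 if gap <= 0 else 0.0)
    return gap, rel_speed, reward


def train(episodes=500, steps=50, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning: learn action values without a transition model."""
    rng = random.Random(seed)
    q = {}  # maps state -> list of estimated values, one per action
    for _ in range(episodes):
        gap, rel_speed = rng.uniform(5.0, 45.0), 0.0
        for _ in range(steps):
            s = discretize(gap, rel_speed)
            q.setdefault(s, [0.0] * len(ACTIONS))
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))  # explore
            else:
                a = max(range(len(ACTIONS)), key=q[s].__getitem__)  # exploit
            gap, rel_speed, r = step(gap, rel_speed, ACTIONS[a])
            s2 = discretize(gap, rel_speed)
            q.setdefault(s2, [0.0] * len(ACTIONS))
            # The update uses only the observed reward and next state.
            q[s][a] += alpha * (r + gamma * max(q[s2]) - q[s][a])
            if gap <= 0:
                break  # collision ends the episode
    return q
```

Calling `train()` returns a table of action-value estimates indexed by discretized state. The update never consults state transition probabilities, only sampled feedback from the environment, which is what makes the scheme model-free in the sense used above.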
