Model-free data center resource scheduling algorithm based on reinforcement learning

A data center resource scheduling technology, applied in resource allocation, ensemble learning, digital data processing, and related fields. It addresses three problems: cloud computing scheduling algorithms cannot adapt to changing environments, the environment is difficult to model, and task allocation is not sufficiently well-founded. The stated effects are more rational task allocation, avoidance of environment-modeling difficulties, and efficient resource utilization.


Examples

Embodiment 1

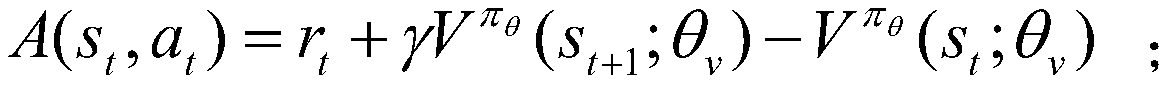

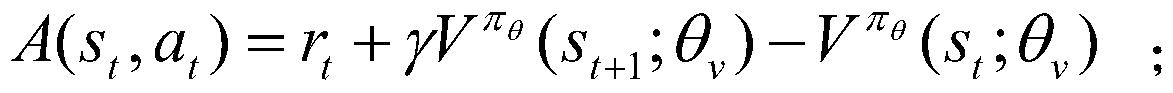

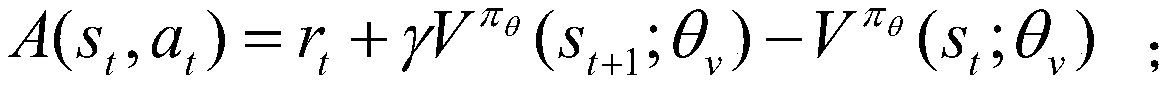

[0027] Embodiment 1: A model-free data center resource scheduling algorithm based on reinforcement learning, comprising an environment model and a DRL model. The environment model includes a time model, a VM model, and a Task model. The Task model stores tasks that have not yet been executed, and the VM model executes tasks. The DRL model includes an Agent1 model and an Agent2 model. The Agent1 model decides whether a task is executed. The Agent2 model adds or removes virtual machines to achieve load balancing. The Agent1 model and the Agent2 model each consist of four parts: a state space, an action space, a reward function, and a deep neural network;
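The environment model described above can be sketched as plain data structures. This is a minimal illustration under stated assumptions, not the patent's implementation: the class names, the one-unit `tick`, and the `dispatch` and `scale` methods are hypothetical, and the agents themselves (state encoding, reward function, neural network) are left out because the excerpt does not specify them.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Task:
    execution_time: float      # e_t in the text
    waiting_time: float = 0.0  # time spent in the queue so far


@dataclass
class VM:
    remaining_time: float = 0.0  # 0 means the VM is idle (assumed convention)

    @property
    def busy(self) -> bool:
        return self.remaining_time > 0


@dataclass
class Environment:
    clock: int = 0                               # time model
    tasks: deque = field(default_factory=deque)  # Task model: pending tasks
    vms: list = field(default_factory=list)      # VM model: task executors

    def busy_fraction(self) -> float:
        """Proportion of busy virtual machines."""
        if not self.vms:
            return 0.0
        return sum(vm.busy for vm in self.vms) / len(self.vms)

    def dispatch(self, execute: bool) -> bool:
        """Apply an Agent1-style decision to the task at the head of the queue."""
        idle = next((vm for vm in self.vms if not vm.busy), None)
        if not execute or not self.tasks or idle is None:
            return False
        idle.remaining_time = self.tasks.popleft().execution_time
        return True

    def scale(self, delta: int) -> None:
        """Apply an Agent2-style decision: add VMs, or remove idle ones."""
        if delta > 0:
            self.vms.extend(VM() for _ in range(delta))
        for _ in range(-delta):
            idle = next((vm for vm in self.vms if not vm.busy), None)
            if idle is None:  # never remove a VM that is running a task
                break
            self.vms.remove(idle)

    def tick(self) -> None:
        """Advance the time model by one unit."""
        self.clock += 1
        for vm in self.vms:
            vm.remaining_time = max(0.0, vm.remaining_time - 1)
        for task in self.tasks:
            task.waiting_time += 1
```

In this sketch, a trained Agent1 would supply the `execute` flag and a trained Agent2 would supply `delta`; both are passed in as plain values here so the environment can be exercised on its own.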

[0028] State space 1 of the Agent1 model is $(e_t, c_t, m_t, n_t)$, where $e_t$ is the execution time of the task, $c_t$ is the cost of the task, and $n_t$ is the proportion of busy virtual machines; state space 2 of the Agent2 model represents the load of the environment ...

Embodiment 2

[0035] Embodiment 2: A model-free data center resource scheduling algorithm based on reinforcement learning, comprising an environment model and a DRL model. The environment model includes a time model, a VM model, and a Task model. The Task model stores tasks that have not yet been executed, and the VM model executes tasks. The DRL model includes an Agent1 model and an Agent2 model. The Agent1 model decides whether a task is executed. The Agent2 model adds or removes virtual machines to achieve load balancing. The Agent1 model and the Agent2 model each consist of four parts: a state space, an action space, a reward function, and a deep neural network;

[0036] Before deciding whether a task is executed, the Agent1 model must obtain the task's priority value, cost: $\text{cost} = (e_t + d_t) / e_t$, where $e_t$ is the execution time of the task and $d_t$ is the waiting time of the task in the queue;
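The priority value above is a one-line computation. The following sketch is only an illustration: the function name `priority_cost` and the input validation are assumptions, not part of the source.

```python
def priority_cost(execution_time: float, waiting_time: float) -> float:
    """Return cost = (e_t + d_t) / e_t for one task.

    execution_time is e_t and waiting_time is d_t, in the same time unit.
    """
    if execution_time <= 0:
        raise ValueError("execution_time must be positive")
    if waiting_time < 0:
        raise ValueError("waiting_time must be non-negative")
    return (execution_time + waiting_time) / execution_time


# A task that has not waited has cost 1; the value rises as it waits.
fresh = priority_cost(execution_time=4.0, waiting_time=0.0)
waited = priority_cost(execution_time=4.0, waiting_time=8.0)
```

The excerpt does not say how Agent1 uses this value beyond obtaining it before its decision, so the sketch stops at computing it.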

[0037] The state space 1 of the ...

Embodiment 3

[0044] Embodiment 3: A model-free data center resource scheduling algorithm based on reinforcement learning, comprising an environment model and a DRL model. The environment model includes a time model, a VM model, and a Task model. The Task model stores tasks that have not yet been executed, and the VM model executes tasks. The DRL model includes an Agent1 model and an Agent2 model. The Agent1 model decides whether a task is executed. The Agent2 model adds or removes virtual machines to achieve load balancing. The Agent1 model and the Agent2 model each consist of four parts: a state space, an action space, a reward function, and a deep neural network;

[0045] Before deciding whether a task is executed, the Agent1 model must obtain the task's priority value, cost: $\text{cost} = (e_t + d_t) / e_t$, where $e_t$ is the execution time of the task and $d_t$ is the waiting time of the task in the queue;
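Splitting the fraction makes the behavior of the priority value easier to see. This is a rearrangement of the formula in the text, with no extra assumptions beyond $e_t > 0$ and $d_t \ge 0$:

$$
\text{cost} = \frac{e_t + d_t}{e_t} = 1 + \frac{d_t}{e_t}
$$

The value is therefore exactly 1 for a task that has just entered the queue ($d_t = 0$) and grows linearly with waiting time. For the same wait, a shorter task gets a larger value: with $d_t = 6$, a task with $e_t = 2$ has cost $1 + 6/2 = 4$, while a task with $e_t = 6$ has cost $1 + 6/6 = 2$. This has the same form as the response ratio used in highest-response-ratio-next scheduling.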

[0046] The state space 1 of the ...
