Binocular vision gesture recognition method and device based on range finding assistance

A binocular vision gesture recognition technology, applied in the field of computer vision, that addresses the problem of low gesture recognition accuracy and achieves a simple device structure, strong reliability, and improved accuracy.


Examples

Embodiment 1

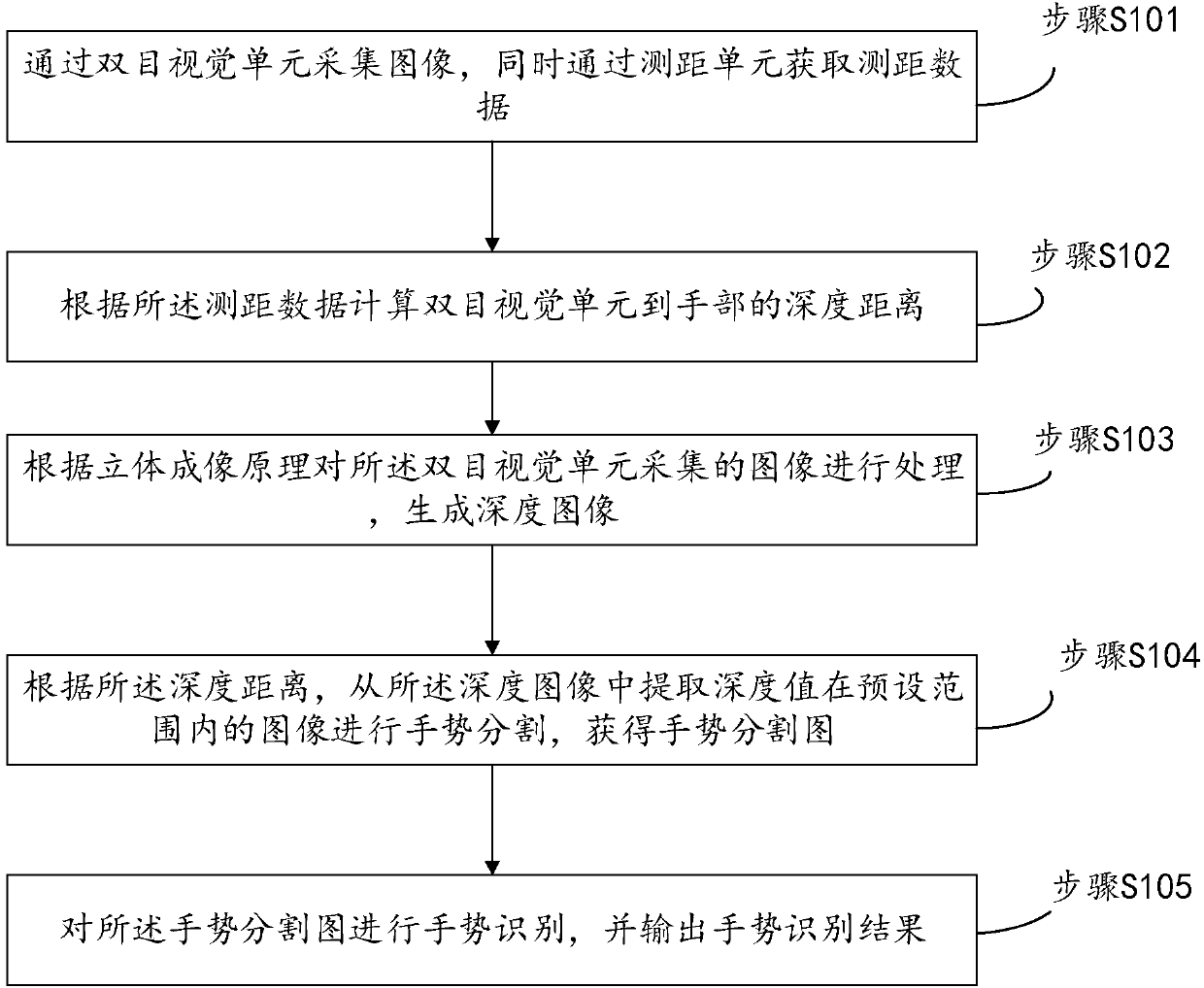

[0038] Referring to Figure 1, this embodiment provides a binocular vision gesture recognition method based on ranging assistance, including:

[0039] Step S101, collecting images through the binocular vision unit while simultaneously acquiring ranging data through the ranging unit;

[0040] Step S102, calculating the depth distance from the binocular vision unit to the hand according to the ranging data;

[0041] Step S103, processing the image collected by the binocular vision unit according to the principle of stereo imaging to generate a depth image;

[0042] Step S104, according to the depth distance, extracting from the depth image the image regions whose depth values are within a preset range, performing gesture segmentation on them, and obtaining a gesture segmentation map;

[0043] Step S105, performing gesture recognition on the gesture segmentation map, and outputting a gesture recognition result.
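Steps S103 and S104 can be illustrated with a minimal sketch. It is not the patent's implementation: the function names, the camera parameters (`focal_px`, `baseline_m`), the tolerance `tolerance_m`, and the toy disparity values are all assumptions made for illustration. It uses the standard rectified-stereo relation Z = f * B / d to turn disparity into depth, then keeps only the pixels whose depth lies within a preset range around the hand distance reported by the ranging unit. Plain Python lists stand in for image arrays.

```python
from typing import List, Optional

DepthImage = List[List[Optional[float]]]


def disparity_to_depth(disparity_px: float, focal_px: float,
                       baseline_m: float) -> Optional[float]:
    """Rectified-stereo relation Z = f * B / d (metres).

    Returns None when the disparity is not positive (no valid match).
    """
    if disparity_px <= 0:
        return None
    return focal_px * baseline_m / disparity_px


def depth_image_from_disparity(disparity: List[List[float]], focal_px: float,
                               baseline_m: float) -> DepthImage:
    """Apply the relation above to every pixel (step S103, simplified)."""
    return [[disparity_to_depth(d, focal_px, baseline_m) for d in row]
            for row in disparity]


def segment_by_depth(depth_image: DepthImage, hand_depth_m: float,
                     tolerance_m: float = 0.10) -> List[List[int]]:
    """Binary mask: 1 where depth is within hand_depth_m plus or minus
    tolerance_m, else 0 (step S104, simplified).

    tolerance_m is a hypothetical stand-in for the "preset range".
    """
    near, far = hand_depth_m - tolerance_m, hand_depth_m + tolerance_m
    return [[1 if z is not None and near <= z <= far else 0 for z in row]
            for row in depth_image]


# Toy 3x3 disparity map (pixels); all values are made up for illustration.
disparity = [
    [0.0, 20.0, 100.0],
    [100.0, 200.0, 200.0],
    [50.0, 200.0, 0.0],
]
depth = depth_image_from_disparity(disparity, focal_px=800.0, baseline_m=0.125)

# hand_depth_m would come from the ranging unit (step S102).
mask = segment_by_depth(depth, hand_depth_m=0.5, tolerance_m=0.1)
for row in mask:
    print(row)
```

In this toy case only the three pixels at 0.5 m survive the gate; background pixels at 1 m, 2 m, and 5 m, and pixels with no valid disparity, are discarded before recognition.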

[0044] The binocular vision gesture recognition method based on distance...

Embodiment 2

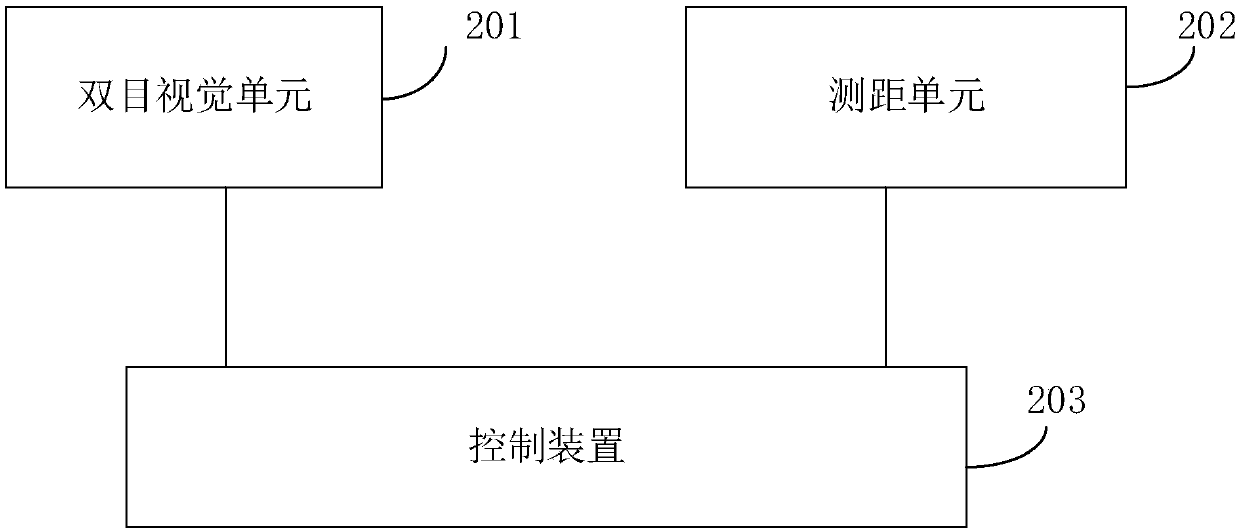

[0061] Referring to Figure 3, this embodiment provides a binocular vision gesture recognition device based on ranging assistance, including a binocular vision unit 201, a ranging unit 202, and a control device 203;

[0062] The binocular vision unit 201 is used to collect images and send them to the control device 203;

[0063] The ranging unit 202 is used to obtain ranging data while the binocular vision unit 201 collects images;

[0064] The control device 203 is used to calculate the depth distance from the binocular vision unit to the hand according to the ranging data; process the images collected by the binocular vision unit according to the principle of stereo imaging to generate a depth image; according to the depth distance, extract from the depth image the image regions whose depth values are within a preset range for gesture segmentation, obtaining a gesture segmentation map; perform gesture recognition on the gesture segmentation map; and output a gesture recogni...
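The data flow through the control device 203 might be wired as in the hedged sketch below. The class name `ControlDevice`, its fields, and the stub callables are hypothetical; the source does not specify how ranging data is converted to a distance, which stereo algorithm is used, or how gestures are classified, so each of those stages is injected as a placeholder function.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

Image = List[List[int]]
DepthImage = List[List[Optional[float]]]
Mask = List[List[int]]


@dataclass
class ControlDevice:
    """Hypothetical model of control device 203; every stage is injected."""
    ranging_to_depth: Callable[[float], float]             # ranging data -> hand depth (m)
    stereo_to_depth: Callable[[Image, Image], DepthImage]  # image pair -> depth image
    recognize: Callable[[Mask], str]                       # segmentation map -> label
    tolerance_m: float = 0.10                              # assumed "preset range"

    def process(self, left: Image, right: Image, ranging_sample: float) -> str:
        # Depth distance from the binocular vision unit to the hand.
        hand_depth = self.ranging_to_depth(ranging_sample)
        # Depth image from the stereo pair.
        depth_image = self.stereo_to_depth(left, right)
        # Keep pixels whose depth lies in the preset range around the hand.
        near, far = hand_depth - self.tolerance_m, hand_depth + self.tolerance_m
        mask = [[1 if z is not None and near <= z <= far else 0 for z in row]
                for row in depth_image]
        # Gesture recognition on the segmentation map.
        return self.recognize(mask)


# Stub stages, for illustration only.
device = ControlDevice(
    ranging_to_depth=lambda sample: sample,  # assume the sensor reports metres
    stereo_to_depth=lambda l, r: [[0.5, 2.0], [None, 0.55]],
    recognize=lambda m: "hand" if sum(map(sum, m)) >= 2 else "none",
)
result = device.process([[0]], [[0]], ranging_sample=0.5)
print(result)
```

Injecting the stages as callables keeps the sketch independent of any particular ranging sensor, stereo matcher, or classifier.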
