A 3D model retrieval method based on deep learning

Relates to three-dimensional models and deep learning technology, and is applied in digital data information retrieval, character and pattern recognition, instruments, etc. It can solve the problems of a limited scope of use and high hardware requirements, improve retrieval performance, exert autonomy, and avoid dependence.

Examples

Embodiment 1

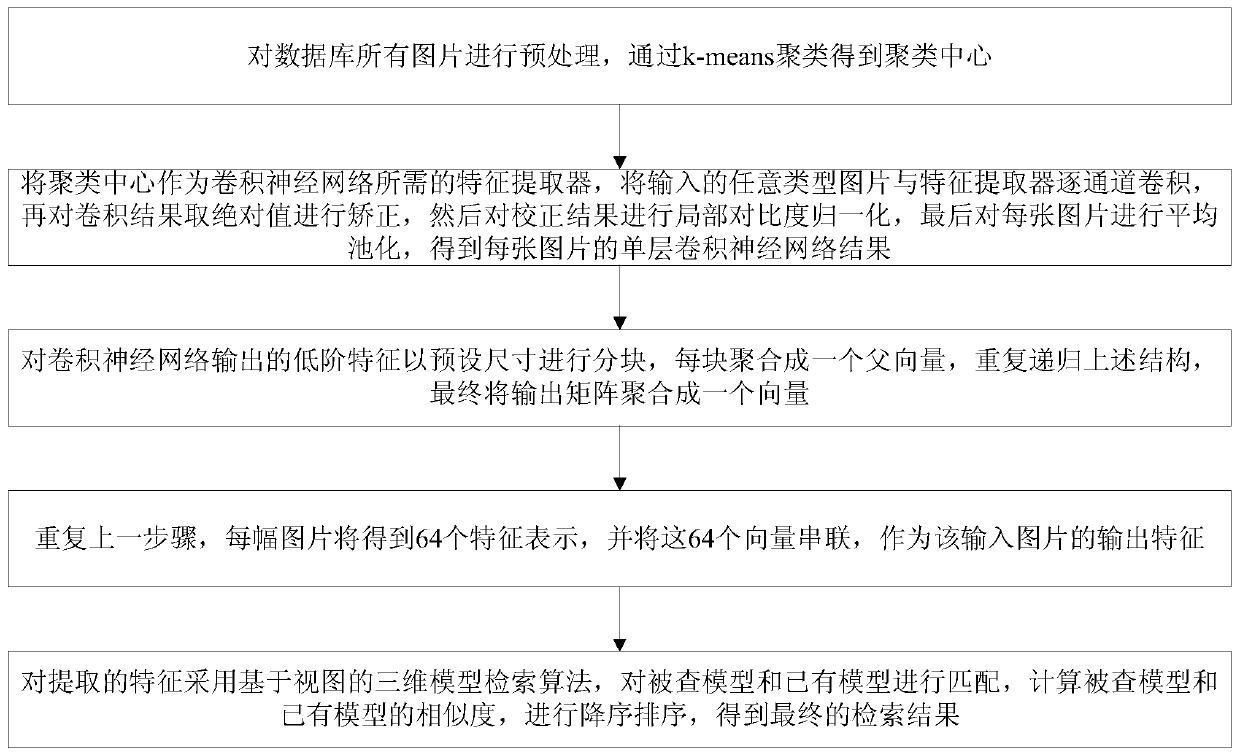

[0037] To solve the above problems, a method is needed that can extract the features of multi-view objects comprehensively, automatically and accurately, and then retrieve them. Studies have shown that as the number of neural network layers increases, the learned features exhibit intuitive and desirable properties such as compositionality, translation invariance and class discriminability [8]. The embodiment of the present invention proposes a 3D model retrieval method based on deep learning, shown in Figure 1 and described below:

[0038] 101: Convolve each picture, of any type, channel by channel with the feature extractors. Apply absolute-value rectification and local contrast normalization to the convolution results. Then perform average pooling on each picture to obtain the output of a single-layer convolutional neural network for that picture;
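Step 101 can be illustrated with a minimal NumPy sketch. It rests on several assumptions:

- The feature extractors are supplied as a hypothetical `filters` array of shape (K, r, r, C), and each filter spans all colour channels of a patch.
- The convolution is implemented as valid cross-correlation, as is usual for CNNs.
- Local contrast normalization uses a 3×3 neighbourhood pooled across all maps.
- Average pooling uses non-overlapping 2×2 windows.

None of these sizes or constants are stated in the source.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def local_contrast_normalize(maps, size=3, eps=1e-5):
    """Subtract the local mean and divide by the local std (size must be odd).

    Mean and std are taken over a size x size spatial neighbourhood
    pooled across all K feature maps (an assumed LCN variant).
    """
    pad = size // 2
    padded = np.pad(maps, ((0, 0), (pad, pad), (pad, pad)), mode="edge")
    win = sliding_window_view(padded, (size, size), axis=(1, 2))  # (K, H, W, size, size)
    mean = win.mean(axis=(0, 3, 4))   # (H, W)
    sigma = win.std(axis=(0, 3, 4))   # (H, W)
    # Floor the divisor at the average sigma so flat regions are not amplified.
    return (maps - mean) / np.maximum(sigma, max(sigma.mean(), eps))


def average_pool(maps, p):
    """Non-overlapping p x p average pooling; trailing rows/cols are cropped."""
    k, h, w = maps.shape
    h2, w2 = h // p, w // p
    return maps[:, : h2 * p, : w2 * p].reshape(k, h2, p, w2, p).mean(axis=(2, 4))


def single_layer_cnn(image, filters, pool=2):
    """image: (H, W, C); filters: (K, r, r, C) (hypothetical layout). Returns (K, H', W')."""
    r = filters.shape[1]
    # All r x r windows of the image, shape (H-r+1, W-r+1, C, r, r).
    windows = sliding_window_view(image, (r, r), axis=(0, 1))
    # One response map per filter (valid cross-correlation).
    responses = np.einsum("ijcab,kabc->kij", windows, filters)
    rectified = np.abs(responses)  # absolute-value rectification
    normalized = local_contrast_normalize(rectified)
    return average_pool(normalized, pool)


rng = np.random.default_rng(0)
features = single_layer_cnn(rng.random((148, 148, 3)), rng.standard_normal((4, 9, 9, 3)))
print(features.shape)  # (4, 70, 70)
```

With 9×9 filters on a 148×148 image, each response map is 140×140, and 2×2 pooling halves that to 70×70.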

[0039] 102: Divide the low-level features output by the convolutional neural network into blocks of a preset size, aggregate...
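The aggregation rule in step 102 is truncated in the source, so the sketch below covers only its first part: cutting a (K, H, W) feature map into non-overlapping blocks of a preset size. The function name `split_into_blocks` and the decision to reject block sizes that do not divide the map evenly are illustrative assumptions.

```python
import numpy as np


def split_into_blocks(features, block):
    """Split a (K, H, W) feature map into non-overlapping block x block pieces.

    Returns an array of shape (H // block, W // block, K, block, block), so
    blocks[u, v] holds every feature channel of the block at grid cell (u, v).
    """
    k, h, w = features.shape
    if h % block or w % block:
        raise ValueError("block size must divide both spatial dimensions")
    grid = features.reshape(k, h // block, block, w // block, block)
    return grid.transpose(1, 3, 0, 2, 4)


fmap = np.arange(2 * 4 * 4).reshape(2, 4, 4)
blocks = split_into_blocks(fmap, 2)
print(blocks.shape)  # (2, 2, 2, 2, 2)
```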

Embodiment 2

[0043] The scheme of Embodiment 1 is described in further detail below with specific formulas and examples:

[0044] 201: Preprocess all the images in the database, and obtain the cluster centers through k-means clustering;

[0045] Here, preprocessing all pictures in the database comprises the following steps: normalizing the picture size, extracting picture blocks $x^{(i)}$, brightness and contrast normalization, whitening, and k-means clustering to obtain the cluster centers $c^{(j)}$, where $i \in \{1,2,\ldots,M\}$ and $j \in \{1,2,\ldots,N\}$.
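The preprocessing chain of [0045] can be sketched in NumPy as below, with patches stored as the rows of an (M, D) matrix. The source gives no constants, so the following choices are all assumptions:

- the regularizers (`eps=10.0` for contrast normalization, `eps=0.1` for whitening);
- the use of ZCA as the whitening method;
- plain Lloyd's k-means with random initialization.

```python
import numpy as np


def normalize_patches(x, eps=10.0):
    """Per-patch brightness (mean) and contrast (variance) normalization."""
    mean = x.mean(axis=1, keepdims=True)
    var = x.var(axis=1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def zca_whiten(x, eps=0.1):
    """ZCA whitening (assumed variant); returns whitened data plus (mean, matrix) for reuse."""
    mu = x.mean(axis=0)
    xc = x - mu
    cov = xc.T @ xc / len(x)
    eigval, eigvec = np.linalg.eigh(cov)
    w = eigvec @ np.diag(1.0 / np.sqrt(eigval + eps)) @ eigvec.T
    return xc @ w, mu, w


def kmeans(x, n_clusters, n_iter=20, seed=0):
    """Lloyd's k-means; returns the cluster centers c^(j) as rows."""
    rng = np.random.default_rng(seed)
    centers = x[rng.choice(len(x), n_clusters, replace=False)].astype(float)
    for _ in range(n_iter):
        # Squared Euclidean distances via ||x||^2 - 2 x.c + ||c||^2.
        d = (x**2).sum(1)[:, None] - 2 * x @ centers.T + (centers**2).sum(1)[None, :]
        labels = d.argmin(axis=1)
        for j in range(n_clusters):
            members = x[labels == j]
            if len(members):  # keep the old center if a cluster empties
                centers[j] = members.mean(axis=0)
    return centers


rng = np.random.default_rng(0)
patches = rng.random((1000, 9 * 9 * 3)) * 255  # stand-in for real 9x9x3 patches
white, mu, w = zca_whiten(normalize_patches(patches))
centers = kmeans(white, n_clusters=16)
print(centers.shape)  # (16, 243)
```

Returning `mu` and `w` lets the same whitening be applied to new patches at query time.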

[0046] In the embodiment of the present invention, the input image is first preprocessed as follows. The input RGB images of different sizes are first scale-normalized to 148×148×3. Image blocks $x^{(i)}$ of size 9×9×3 are then extracted with a stride of 1, which yields $(148-9+1)^2 = 140^2 = 19600$ picture blocks, where $i \in \{1,2,\ldots,19600\}$. ...
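The patch count in [0046] follows from sliding a 9×9 window over a 148×148 image with stride 1, giving (148 - 9 + 1)^2 = 19600 positions. The NumPy sketch below makes these assumptions:

- The image has already been resized to 148×148×3. Resizing needs an image library and is omitted.
- Each 9×9×3 patch is flattened into one 243-dimensional row.
- The function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def num_patches(side, size=9, stride=1):
    """Number of size x size patches in a side x side image."""
    return ((side - size) // stride + 1) ** 2


def extract_patches(image, size=9, stride=1):
    """Return all size x size x C patches of an (H, W, C) image as flat rows."""
    win = sliding_window_view(image, (size, size), axis=(0, 1))[::stride, ::stride]
    # win has shape (n_h, n_w, C, size, size); put channels last so each row
    # is laid out like image[y:y+size, x:x+size, :].ravel().
    patches = win.transpose(0, 1, 3, 4, 2)
    return patches.reshape(-1, size * size * image.shape[2])


image = np.random.default_rng(0).random((148, 148, 3))
x = extract_patches(image)
print(num_patches(148), x.shape)  # 19600 (19600, 243)
```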

Embodiment 3

[0099] The feasibility of the schemes in Embodiments 1 and 2 is verified below with specific examples:

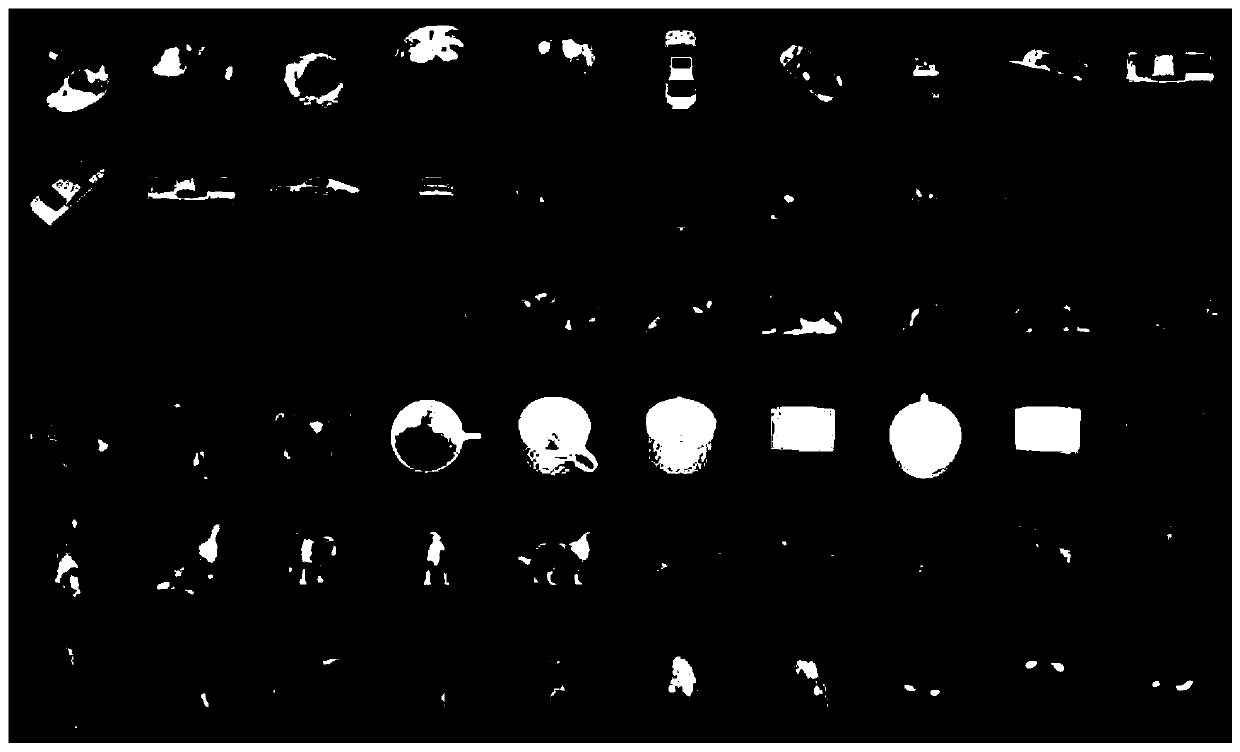

[0100] In this experiment, the ETH database is divided into 8 categories of 10 objects each, 80 objects in total, and each object has 41 images. The categories are car, horse, tomato, apple, cow, pear, mug and puppy.

[0101] This experiment uses the MVRED database produced by a Tianjin University laboratory, which contains 311 query objects and 505 test objects. Each object has 73 images, comprising RGB pictures with the corresponding depth maps and masks. The 505 test objects are divided into 61 categories, each containing 1 to 20 objects. The 311 query objects are used as query models, and each category contains no fewer than 10 objects. The 73 pictures of each object are taken from three viewpoints, with 36, 36 and 1 pictures respectively.

[0102] Precision-recall curve: It mainly des...
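The source's description of the precision-recall curve is truncated. For reference, the sketch below shows the usual construction for a ranked retrieval list. After the top k results, precision is the fraction of those k results that are relevant. Recall is the fraction of all relevant objects found so far. The patent does not say whether it interpolates these points or averages them over queries, so the sketch computes only the raw per-query points.

```python
def precision_recall_points(ranked_relevance, total_relevant):
    """Return (recall, precision) after each rank k for one query.

    ranked_relevance: 0/1 flags for the retrieved objects, best match first.
    total_relevant: number of relevant objects in the test set for this query.
    """
    points = []
    hits = 0
    for k, relevant in enumerate(ranked_relevance, start=1):
        hits += relevant
        points.append((hits / total_relevant, hits / k))
    return points


print(precision_recall_points([1, 0, 1, 0], total_relevant=2))
# [(0.5, 1.0), (0.5, 0.5), (1.0, 0.6666666666666666), (1.0, 0.5)]
```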
