Method for estimating the relative pose of a robot

A technology for estimating the relative pose of a robot, applied in the field of robot vision. It addresses the problems of poor aesthetics and large occupied area, and offers low cost and a simple implementation.


Examples

Embodiment 1

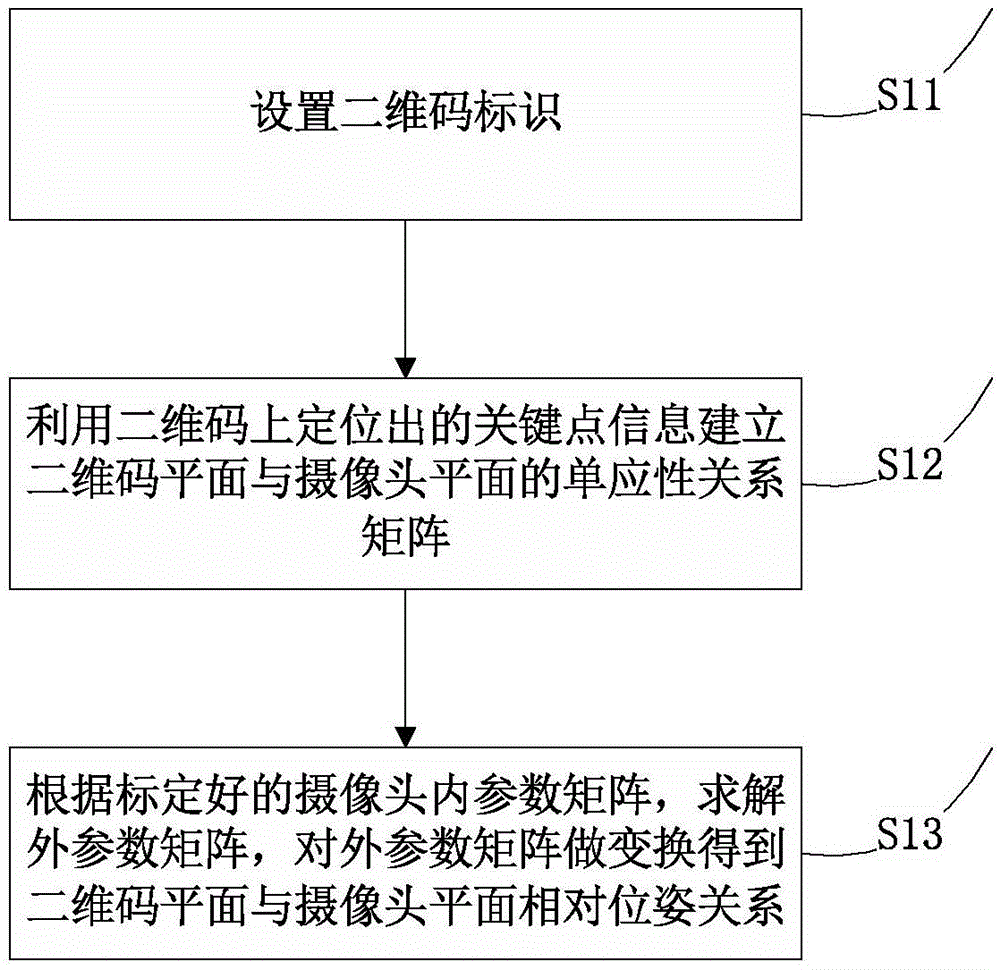

[0020] S11: Set up the two-dimensional code marker.

[0021] S12: Perform adaptive binarization on the captured scene image containing the two-dimensional code marker, locate the designated positions on all position detection patterns (finder patterns) in the two-dimensional code and the centers of the correction patterns 3 (alignment patterns), and, from their positional relationship, establish the homography matrix between the two-dimensional code plane and the camera plane.
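Step S12 ends in a homography between the code plane and the image. The source does not say which solver is used; a common choice, sketched below as an assumption, is the normalized direct linear transform (DLT), taking as input the pattern centers already located in the binarized image together with their known positions on the printed code. The function names and the marker layout in the usage lines are hypothetical.

```python
import numpy as np


def normalize_points(pts):
    """Hartley normalization: move the centroid to the origin and scale so the
    mean distance from it is sqrt(2). Returns homogeneous points and the 3x3 transform."""
    pts = np.asarray(pts, dtype=float)
    centroid = pts.mean(axis=0)
    mean_dist = np.mean(np.linalg.norm(pts - centroid, axis=1))
    s = np.sqrt(2.0) / mean_dist
    T = np.array([[s, 0.0, -s * centroid[0]],
                  [0.0, s, -s * centroid[1]],
                  [0.0, 0.0, 1.0]])
    homog = np.column_stack([pts, np.ones(len(pts))])
    return (T @ homog.T).T, T


def estimate_homography(plane_pts, image_pts):
    """Normalized DLT: find H with image ~ H @ [X, Y, 1] (needs >= 4 pairs)."""
    if len(plane_pts) < 4 or len(plane_pts) != len(image_pts):
        raise ValueError("need at least 4 matching point pairs")
    p, Tp = normalize_points(plane_pts)
    q, Tq = normalize_points(image_pts)
    rows = []
    for (x, y, _), (u, v, _) in zip(p, q):
        # Each correspondence gives two linear equations in the 9 entries of H.
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(np.array(rows))
    Hn = vt[-1].reshape(3, 3)
    # Undo the normalization and fix the projective scale so H[2, 2] == 1.
    H = np.linalg.inv(Tq) @ Hn @ Tp
    return H / H[2, 2]


if __name__ == "__main__":
    # Synthetic check with a hypothetical layout (cm): three finder-pattern
    # centers plus one alignment-pattern center on the code plane.
    H_true = np.array([[1.2, 0.1, 320.0], [-0.05, 1.1, 240.0], [1e-4, 2e-4, 1.0]])
    plane = np.array([[0.0, 0.0], [6.0, 0.0], [0.0, 6.0], [4.8, 4.8]])
    proj = (H_true @ np.column_stack([plane, np.ones(4)]).T).T
    image = proj[:, :2] / proj[:, 2:]
    print(np.allclose(estimate_homography(plane, image), H_true))
```

Four correspondences with no three collinear determine H exactly, which is why three finder patterns plus at least one alignment pattern suffice. With noisy detections, more points or a robust estimator would typically be added.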

[0022] S13: Using the camera's calibrated intrinsic parameter matrix, solve for the extrinsic parameter matrix, then transform the extrinsic parameter matrix to obtain the relative pose between the two-dimensional code plane and the camera plane.
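For step S13, one standard way to get extrinsics from a plane-to-image homography (the decomposition used in Zhang's planar calibration) relies on the fact that, for points on the marker plane Z = 0, H is proportional to K [r1 r2 t]. The sketch below assumes K is the calibrated 3x3 intrinsic matrix and H maps marker-plane coordinates to pixels; it illustrates the idea and is not necessarily the exact transformation the patent applies. `pose_from_homography` is a hypothetical name.

```python
import numpy as np


def pose_from_homography(H, K):
    """Recover R, t of the marker plane (Z = 0) in the camera frame from
    H ~ K [r1 r2 t]. H maps marker-plane coordinates to pixels."""
    M = np.linalg.inv(K) @ np.asarray(H, dtype=float)
    # r1 and r2 must be unit vectors: average their norms to fix the scale.
    lam = 2.0 / (np.linalg.norm(M[:, 0]) + np.linalg.norm(M[:, 1]))
    # H is only known up to sign; pick the one that puts the marker in front (t_z > 0).
    if M[2, 2] < 0:
        lam = -lam
    r1, r2, t = lam * M[:, 0], lam * M[:, 1], lam * M[:, 2]
    R_approx = np.column_stack([r1, r2, np.cross(r1, r2)])
    # Snap to the nearest rotation matrix, since noise leaves R_approx only near-orthogonal.
    U, _, Vt = np.linalg.svd(R_approx)
    if np.linalg.det(U @ Vt) < 0:
        U[:, -1] *= -1
    return U @ Vt, t


if __name__ == "__main__":
    K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
    a = np.deg2rad(20.0)  # hypothetical rotation about the camera's vertical (y) axis
    R_true = np.array([[np.cos(a), 0.0, np.sin(a)], [0.0, 1.0, 0.0], [-np.sin(a), 0.0, np.cos(a)]])
    t_true = np.array([10.0, -5.0, 150.0])  # cm, same units as the marker-plane coordinates
    H = -2.0 * K @ np.column_stack([R_true[:, 0], R_true[:, 1], t_true])
    R, t = pose_from_homography(H, K)
    camera_in_marker = -R.T @ t  # camera position expressed in the marker frame
    print(np.round(R, 6), np.round(t, 6), np.round(camera_in_marker, 3))
```

The translation comes out in whatever units the marker-plane coordinates used when H was estimated. Inverting the transform (R.T and -R.T @ t) gives the camera, and hence the robot, relative to the code, which is usually the quantity a docking or positioning controller needs.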

Embodiment 2

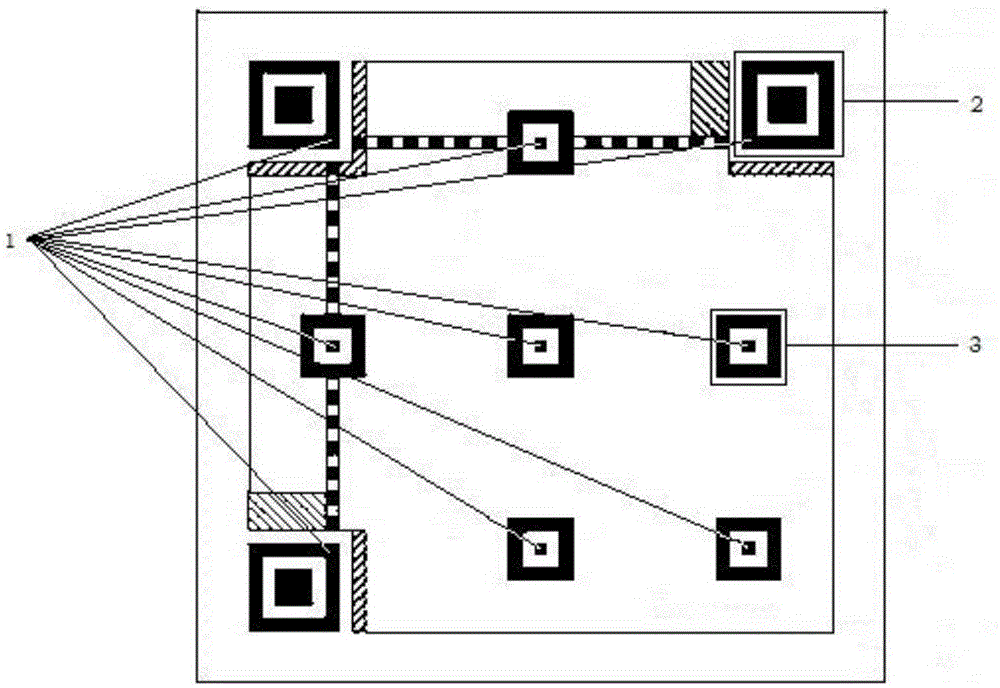

[0024] The relative pose estimation method of the robot is described in detail below with reference to Figure 2. A method for estimating the relative pose of a robot comprises the following steps:

[0025] S21: Set up the two-dimensional code marker.

[0026] The size of the two-dimensional code marker should preferably be between 5 and 10 cm, and the code should contain at least one correction pattern 3. In the scene, the plane of the QR code should be as close to perpendicular to the horizontal plane as possible, with one side of the QR code as nearly parallel to the horizontal plane as possible. In addition, the height of the center point of the QR code marker should match the height of the center of the robot's monocular camera as closely as possible, or at least differ from it by no more than 5 cm.
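The quantified recommendations in [0026] can be encoded as a simple pre-deployment check. This is a minimal sketch: the class and field names are hypothetical, and the two orientation recommendations are left out because the source gives no numeric tolerance for them. Note that a version 1 QR code has no alignment pattern, so the "at least one" requirement in practice means version 2 or higher.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class MarkerSetup:
    # Field names are hypothetical; the limits in check_setup come from [0026].
    size_cm: float                  # size of the printed QR code marker
    alignment_patterns: int         # number of correction (alignment) patterns
    marker_center_height_cm: float  # height of the marker's center point
    camera_center_height_cm: float  # height of the monocular camera's center


def check_setup(s: MarkerSetup) -> List[str]:
    """Return a list of violated placement recommendations (empty if none)."""
    problems = []
    if not 5.0 <= s.size_cm <= 10.0:
        problems.append(f"marker size {s.size_cm} cm is outside the preferred 5-10 cm range")
    if s.alignment_patterns < 1:
        problems.append("marker needs at least one alignment pattern")
    dh = abs(s.marker_center_height_cm - s.camera_center_height_cm)
    if dh > 5.0:
        problems.append(f"height difference {dh:.1f} cm exceeds 5 cm")
    return problems


if __name__ == "__main__":
    print(check_setup(MarkerSetup(8.0, 1, 42.0, 40.0)))
    print(check_setup(MarkerSetup(12.0, 0, 50.0, 40.0)))
```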

[0027] S22: Use a near-infrared camera with supplementary infrared illumination to capture an image containing the two-dimensional code marker.
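The near-infrared image captured here is the input to the adaptive binarization described in step S12 of Embodiment 1. The source does not name the adaptive method, so the sketch below assumes local-mean thresholding, similar in spirit to OpenCV's `cv2.adaptiveThreshold` with `ADAPTIVE_THRESH_MEAN_C`: each pixel is compared with the mean of its neighborhood minus a constant, with the means computed from an integral image. The `block` and `c` defaults are illustrative, not values from the patent.

```python
import numpy as np


def adaptive_binarize(gray, block=15, c=5):
    """Local-mean adaptive threshold (assumed method): a pixel becomes 255 if it
    exceeds the mean of its block x block neighborhood minus c, else 0."""
    if block % 2 == 0 or block < 3:
        raise ValueError("block must be an odd integer >= 3")
    img = np.asarray(gray, dtype=np.float64)
    r = block // 2
    # Replicate edges so border pixels get a full neighborhood.
    padded = np.pad(img, r, mode="edge")
    # Integral image with a leading row and column of zeros.
    ii = np.zeros((padded.shape[0] + 1, padded.shape[1] + 1))
    ii[1:, 1:] = padded.cumsum(axis=0).cumsum(axis=1)
    h, w = img.shape
    # Sum of every block x block window via four integral-image lookups.
    s = (ii[block:block + h, block:block + w]
         - ii[0:h, block:block + w]
         - ii[block:block + h, 0:w]
         + ii[0:h, 0:w])
    mean = s / (block * block)
    return np.where(img > mean - c, 255, 0).astype(np.uint8)


if __name__ == "__main__":
    # Synthetic scene: left-to-right illumination gradient with a dark square.
    bg = np.tile(np.linspace(100.0, 200.0, 40), (40, 1))
    img = bg.copy()
    img[15:25, 15:25] -= 60.0
    out = adaptive_binarize(img, block=15, c=5)
    print(out[20, 20], out[5, 30])
```

Because the threshold follows the local brightness, uneven fill-light falloff across the marker does not push whole regions to one side of the threshold, which is the usual reason to prefer a local threshold over a single global one.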

[0028] When the robot moves c...
