Multi-modal emotion recognition system and method

A multi-modal emotion recognition technology, applied in character and pattern recognition, speech recognition, and the acquisition/recognition of facial features. It addresses problems of existing approaches, such as the small amount of information used and the reliance on a single emotion recognition method, which make human emotion recognition difficult, and achieves more accurate recognition.


Examples

Embodiment 1

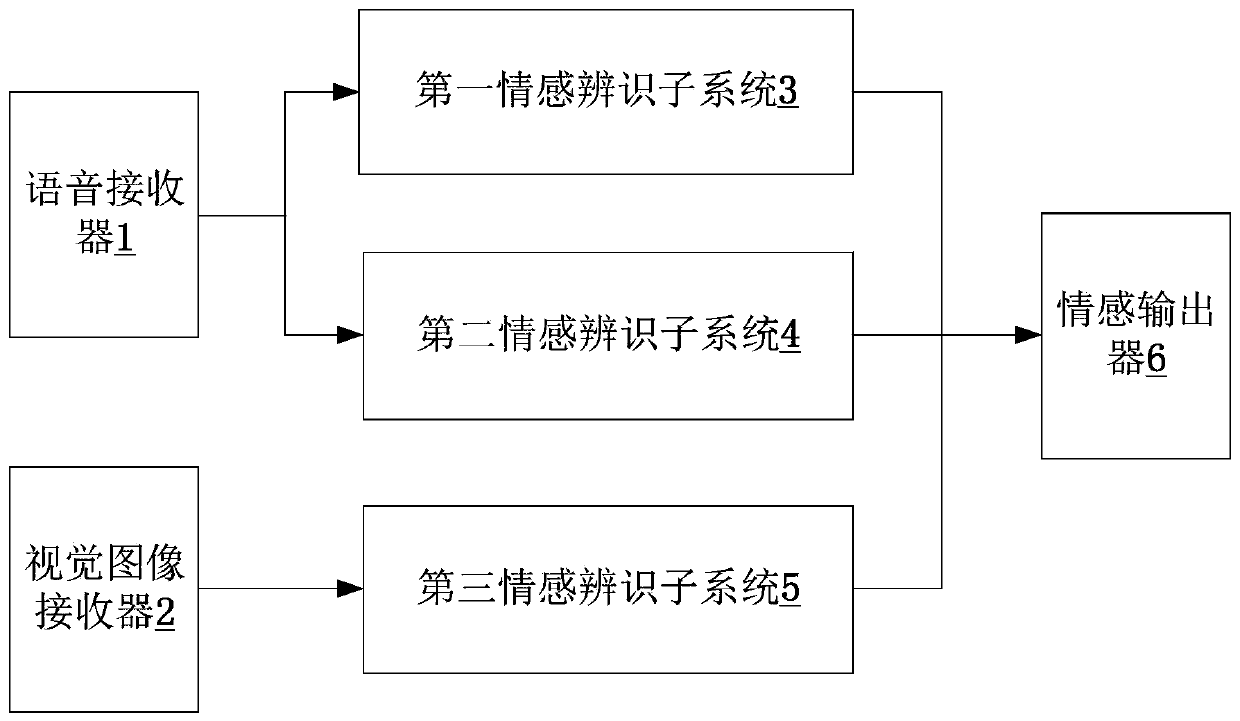

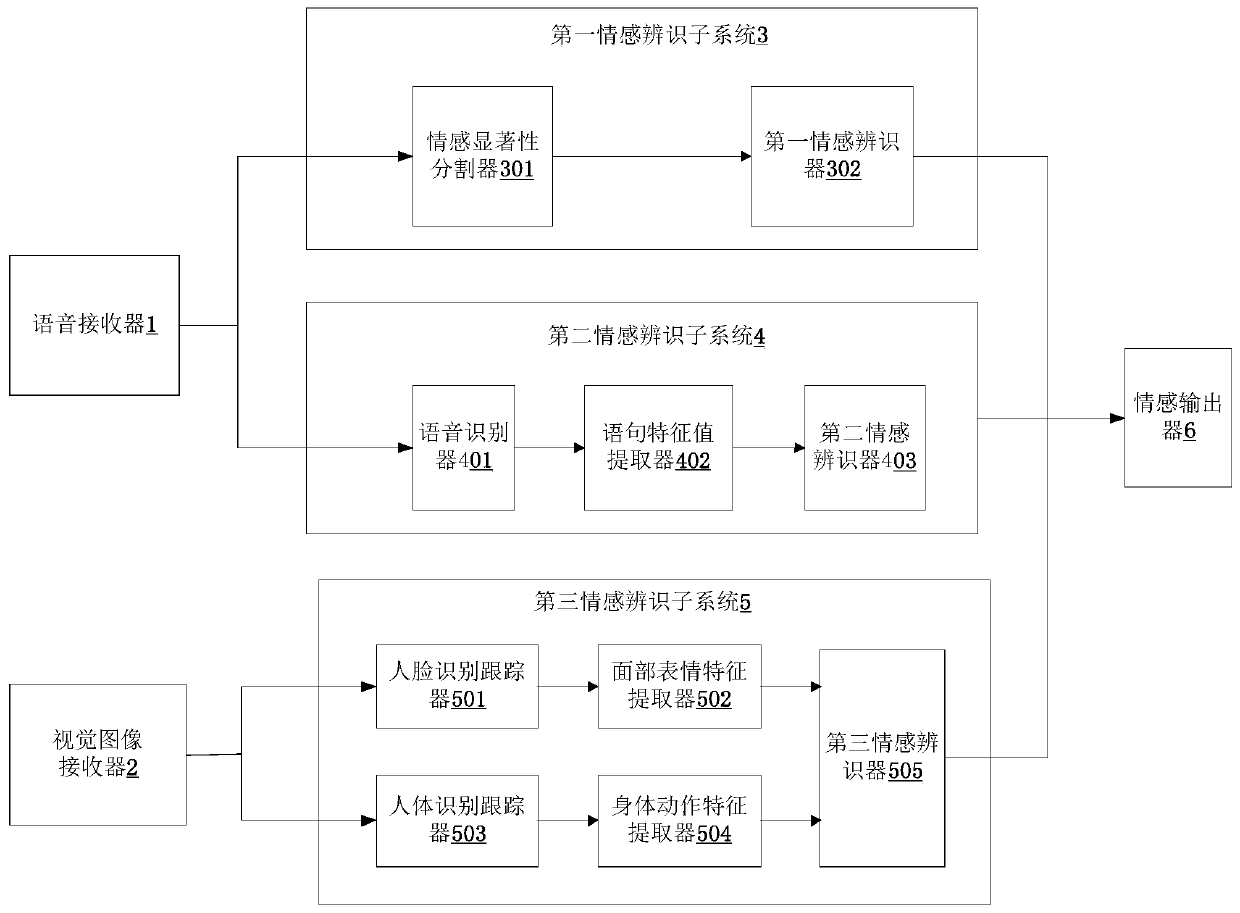

[0022] Referring to Figure 1, the multimodal emotion recognition system provided in this embodiment includes a voice receiver 1, a first emotion recognition subsystem 3, a second emotion recognition subsystem 4, a visual image receiver 2, a third emotion recognition subsystem 5 and an emotion output device 6. The voice receiver 1 is used to receive the voice signal emitted by the target object; the visual image receiver 2 is used to receive visual image data about the target object; the first emotion recognition subsystem 3 is used to obtain a first emotion recognition result from the voice signal; the second emotion recognition subsystem 4 is used to obtain a second emotion recognition result from the voice signal; the third emotion recognition subsystem 5 is used to obtain a third emotion recognition result from the visual image data; and the emotion output device 6 is configured to determine the emotional state of the target object according to the first emoti...
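Embodiment 1 specifies only which input each component consumes and that the emotion output device 6 combines three results; the visible text does not say how the subsystems work internally or how the two voice-based subsystems differ. The Python sketch below captures just that wiring, assuming each subsystem returns a dictionary of emotion scores. The class `MultimodalEmotionSystem`, the `stub` recognizers and the `majority_vote` rule are hypothetical placeholders, not the patented implementation.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical data formats: the patent text shown here does not define them.
VoiceSignal = List[float]        # e.g. audio samples from voice receiver 1
ImageData = List[List[float]]    # e.g. one frame from visual image receiver 2
Result = Dict[str, float]        # emotion label -> score


@dataclass
class MultimodalEmotionSystem:
    """Illustrative wiring of subsystems 3, 4, 5 and output device 6."""
    first_subsystem: Callable[[VoiceSignal], Result]        # subsystem 3 (voice)
    second_subsystem: Callable[[VoiceSignal], Result]       # subsystem 4 (voice)
    third_subsystem: Callable[[ImageData], Result]          # subsystem 5 (image)
    output_device: Callable[[Result, Result, Result], str]  # device 6

    def recognize(self, voice: VoiceSignal, image: ImageData) -> str:
        # The same voice signal feeds both voice-based subsystems.
        r1 = self.first_subsystem(voice)
        r2 = self.second_subsystem(voice)
        # The visual image data feeds the image-based subsystem.
        r3 = self.third_subsystem(image)
        # The output device turns the three results into one emotional state.
        return self.output_device(r1, r2, r3)


def stub(label: str) -> Callable[[object], Result]:
    """Hypothetical stand-in recognizer that always reports one label."""
    return lambda _data: {label: 1.0}


def majority_vote(*results: Result) -> str:
    """Hypothetical fusion rule: each result votes for its top-scoring label."""
    votes: Dict[str, int] = {}
    for result in results:
        top = max(result, key=result.get)
        votes[top] = votes.get(top, 0) + 1
    return max(votes, key=votes.get)


system = MultimodalEmotionSystem(stub("happy"), stub("happy"), stub("neutral"), majority_vote)
print(system.recognize([0.0, 0.1], [[0.5]]))  # happy
```

Passing the subsystems in as callables keeps the wiring independent of whichever speech or facial-expression models are actually used behind each component.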

Embodiment 2

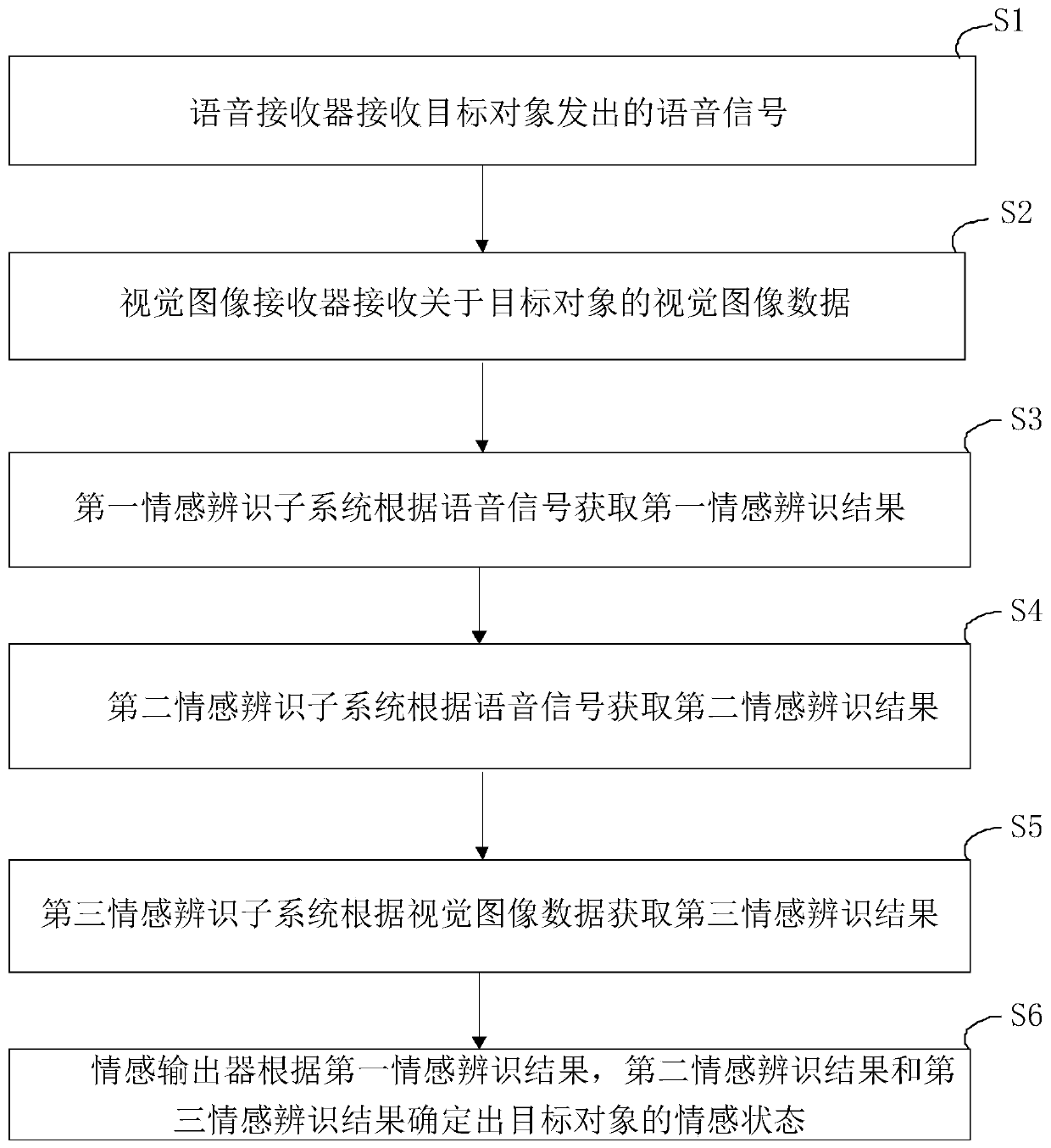

[0033] Referring to Figure 3, an embodiment of the present invention provides a multi-modal emotion recognition method, including:

[0034] Step S1: The voice receiver 1 receives the voice signal emitted by the target object;

[0035] Step S2: The visual image receiver 2 receives visual image data about the target object;

[0036] Step S3: The first emotion recognition subsystem 3 obtains the first emotion recognition result from the voice signal;

[0037] Step S4: The second emotion recognition subsystem 4 obtains the second emotion recognition result from the voice signal;

[0038] Step S5: The third emotion recognition subsystem 5 obtains the third emotion recognition result from the visual image data;

[0039] Step S6: The emotion output device 6 determines the emotional state of the target object according to the first, second and third emotion recognition results.
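The visible text of Step S6 does not state how the three results are combined. A minimal sketch of one common option, weighted late fusion of per-label scores, follows; the label set `EMOTIONS`, the score format, the function `fuse_results` and the example weights are all assumptions for illustration, not values or rules taken from the patent.

```python
from typing import Dict, Sequence

EMOTIONS = ("angry", "happy", "neutral", "sad")  # hypothetical label set


def fuse_results(results: Sequence[Dict[str, float]], weights: Sequence[float]) -> str:
    """Weighted late fusion of per-modality emotion scores (assumed rule)."""
    if len(results) != len(weights):
        raise ValueError("need one weight per recognition result")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    fused = {e: 0.0 for e in EMOTIONS}
    for result, w in zip(results, weights):
        for e in EMOTIONS:
            # Weights are normalized to sum to 1; a missing label scores 0.
            fused[e] += (w / total) * result.get(e, 0.0)
    # The emotional state is the label with the highest fused score
    # (ties go to the label listed first in EMOTIONS).
    return max(EMOTIONS, key=lambda e: fused[e])


# Hypothetical outputs of subsystems 3, 4 (voice) and 5 (image).
r1 = {"happy": 0.6, "neutral": 0.3, "sad": 0.1}
r2 = {"happy": 0.2, "angry": 0.5, "neutral": 0.3}
r3 = {"neutral": 0.7, "happy": 0.3}

print(fuse_results([r1, r2, r3], [0.3, 0.3, 0.4]))    # neutral
print(fuse_results([r1, r2, r3], [0.5, 0.25, 0.25]))  # happy
```

The two calls show why the weighting matters: giving the first voice-based result more weight flips the fused decision from "neutral" (0.46 vs 0.36) to "happy" (0.425 vs 0.40). How such weights would be chosen, if at all, is not stated in the visible text.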

[0040] Preferably, as Figur...
