Navigation method and navigation terminal

A navigation terminal and navigation method, applied in fields such as road network navigators. The technology addresses problems including monotonous AR-based navigation, the inability to display navigation information, and the inability to select mobile terminals, with the effects of improving the interactive experience, improving selection time, and saving time.



Examples

Embodiment 1

[0039] Referring to Figure 1, Figure 1 is an implementation flowchart of a navigation method provided by an embodiment of the present invention, detailed as follows:

[0040] In step S101, a real-scene image is captured, and the geographic coordinates of the geographic location and the range value manually selected in the electronic fence are obtained;
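Step S101 pairs a manually selected location with a range value in the electronic fence. One plausible reading, assumed here and not stated in the source, is a circular fence centred on the selected coordinates, with the range value as its radius in metres. A minimal Python sketch of the membership test under that assumption, using the haversine great-circle distance; the function names `haversine_m` and `inside_fence` are hypothetical:

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def inside_fence(user_lat, user_lon, fence_lat, fence_lon, range_m):
    """Hypothetical fence test: is the user within range_m metres of the selected location?"""
    return haversine_m(user_lat, user_lon, fence_lat, fence_lon) <= range_m
```

For example, `inside_fence(0.0, 0.0, 1.0, 0.0, 500.0)` returns `False`, since one degree of latitude is roughly 111 km.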

[0041] Here, the real-scene image is an image of the real scene captured by the lens in real time.

[0042] When the handheld terminal is a mobile terminal, the real-scene image is the image of the real scene captured in real time by the lens of the mobile terminal.

[0043] For ease of description, a mobile terminal is taken as an example: after the mobile terminal turns on its camera, the camera captures a real-scene image.

[0044] The mobile terminal obtains the latitude, longitude and altitude through GPS, obtains the direction the user is facing at that moment through the compass sensor, and obtains the ti...
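Paragraph [0044] combines a GPS fix with the compass heading. A common way to use these two readings in AR navigation, assumed here rather than taken from the source, is to compute the bearing from the user's position to the selected location and subtract the compass heading, giving the horizontal angle at which to draw the target in the camera image. The helper names are hypothetical, and altitude is ignored in this sketch:

```python
import math


def initial_bearing_deg(lat1, lon1, lat2, lon2):
    """Initial great-circle bearing from point 1 to point 2,
    in degrees clockwise from true north, normalised to [0, 360)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlmb = math.radians(lon2 - lon1)
    x = math.sin(dlmb) * math.cos(phi2)
    y = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlmb)
    return math.degrees(math.atan2(x, y)) % 360.0


def relative_angle_deg(heading_deg, bearing_deg):
    """Signed angle in [-180, 180) from the compass heading to the target bearing.
    Positive means the target lies to the user's right."""
    return (bearing_deg - heading_deg + 180.0) % 360.0 - 180.0
```

`relative_angle_deg(350.0, 10.0)` gives `20.0`, i.e. the target lies 20° to the right of where the user is facing; the modular arithmetic handles the wrap-around at north.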

Embodiment 2

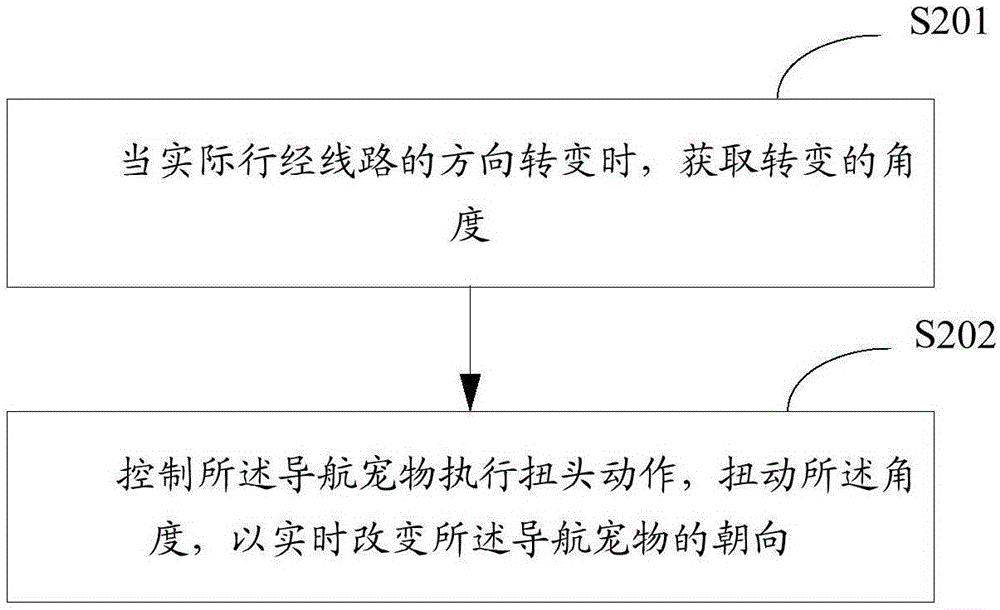

[0077] Figure 2 is the implementation flowchart of step S105 of the navigation method provided by an embodiment of the present invention, detailed as follows:

[0078] S201: When the direction of the actual traveling route changes, obtain the angle of the change;

[0079] S202: Control the navigation pet to perform a head-turning motion, turning through that angle so that the orientation of the navigation pet changes in real time.

[0080] In this embodiment of the present invention, controlling the navigation pet to perform the head-turning action intuitively indicates the direction of the actual traveling route, reducing the time the user needs to determine the direction and improving navigation efficiency.
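Steps S201 and S202 can be sketched as follows. The `NavigationPet` class, the `on_route_direction` function and the `tolerance_deg` threshold (used to ignore GPS jitter) are hypothetical. The source does not specify how the angle is measured, so route directions are assumed to be compass degrees and the change is taken as the smallest signed rotation:

```python
from dataclasses import dataclass


def signed_angle_change(old_deg, new_deg):
    """Smallest signed rotation in degrees from old_deg to new_deg, in [-180, 180)."""
    return (new_deg - old_deg + 180.0) % 360.0 - 180.0


@dataclass
class NavigationPet:
    """Hypothetical model of the AR navigation pet; only its yaw (heading) is tracked."""
    heading_deg: float = 0.0

    def turn_head(self, angle_deg):
        # S202: rotate by the change angle so the pet faces the new route direction.
        self.heading_deg = (self.heading_deg + angle_deg) % 360.0


def on_route_direction(pet, prev_route_deg, new_route_deg, tolerance_deg=1.0):
    """S201: if the route direction changed by more than tolerance_deg,
    obtain the change angle and turn the pet through it. Returns the change."""
    change = signed_angle_change(prev_route_deg, new_route_deg)
    if abs(change) > tolerance_deg:
        pet.turn_head(change)
    return change
```

Taking the smallest signed rotation means a change from 350° to 20° turns the pet 30° to the right rather than 330° to the left.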

Embodiment 3

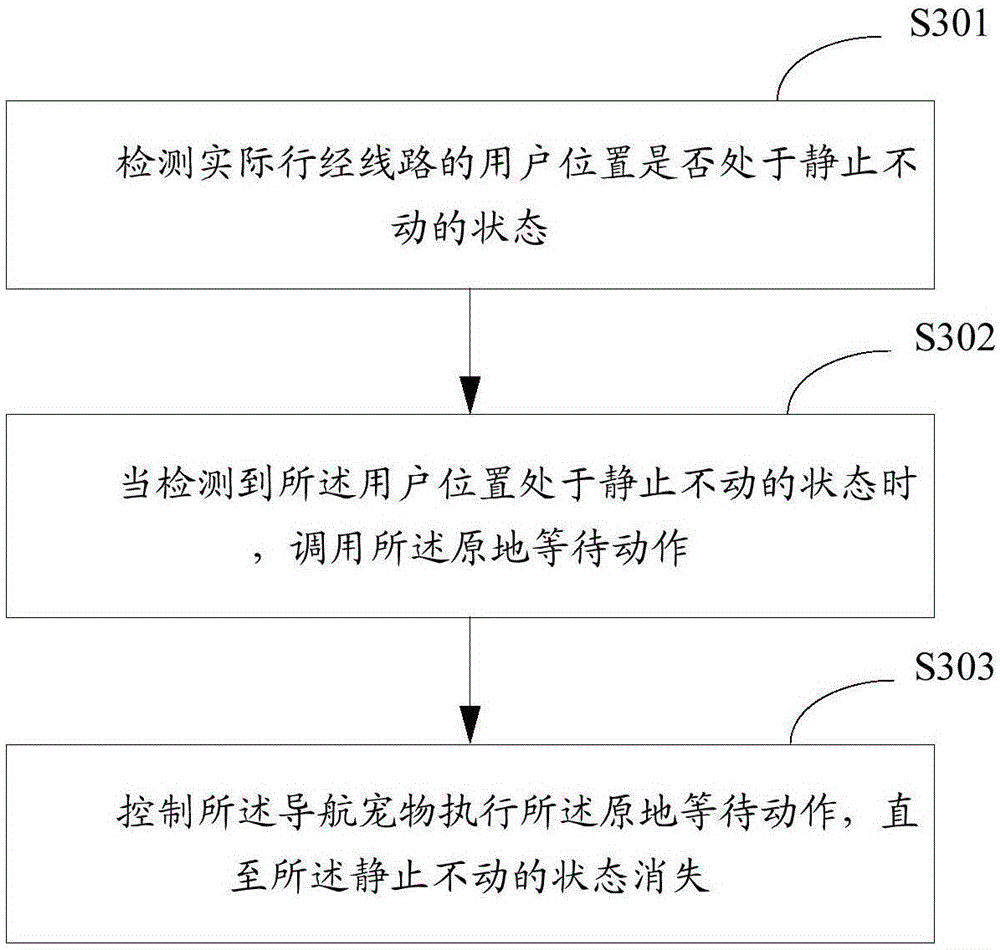

[0082] Figure 3 is the implementation flowchart of the navigation pet performing the wait-in-place action provided by an embodiment of the present invention, detailed as follows:

[0083] S301: Detect whether the position of the user traveling along the actual route is stationary;

[0084] S302: When the user's position is detected to be stationary, invoke the wait-in-place action;

[0085] S303: Control the navigation pet to perform the wait-in-place action until the user is no longer stationary.

[0086] In this embodiment of the present invention, the navigation pet is controlled to perform the wait-in-place action, so that the position of the user traveling along the actual route is reflected intuitively, which improves display efficiency.
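Steps S301 to S303 amount to a stationary-state check that drives the pet's animation. The source does not say how stationarity is detected; the sketch below assumes a sliding window of position fixes in local planar metres and treats the user as stationary when every fix lies within a small radius of the window mean. The class, thresholds and action names are hypothetical:

```python
import math
from collections import deque


class StationaryDetector:
    """S301 sketch: the user counts as stationary when every fix in the last
    `window` samples lies within `radius_m` of the window's mean position.
    Positions are assumed to be local planar coordinates in metres."""

    def __init__(self, window=5, radius_m=2.0):
        self.fixes = deque(maxlen=window)
        self.radius_m = radius_m

    def update(self, x, y):
        self.fixes.append((x, y))
        if len(self.fixes) < self.fixes.maxlen:
            return False  # not enough history yet to decide
        cx = sum(p[0] for p in self.fixes) / len(self.fixes)
        cy = sum(p[1] for p in self.fixes) / len(self.fixes)
        return all(math.hypot(px - cx, py - cy) <= self.radius_m for px, py in self.fixes)


def pet_action(detector, x, y):
    """S302/S303: play the wait-in-place action while the user is stationary,
    otherwise return to normal guidance."""
    return "wait_in_place" if detector.update(x, y) else "guide"
```

Because the check runs on every new fix, the pet keeps waiting only as long as the window stays tight; the first fix that moves the user outside the radius ends the wait, matching the "until the user is no longer stationary" condition of S303.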
