No-reference quality evaluation method for blur-distorted stereoscopic images

The invention relates to stereoscopic images and blur distortion, and is applied in the fields of image analysis, image data processing, and instruments. It addresses the high computational complexity and limited applicability of existing methods: it reduces computational complexity, avoids a machine-learning training process, and achieves better consistency.

Detailed Description of Embodiments

[0032] The present invention will be further described in detail below in conjunction with the accompanying drawings and embodiments.

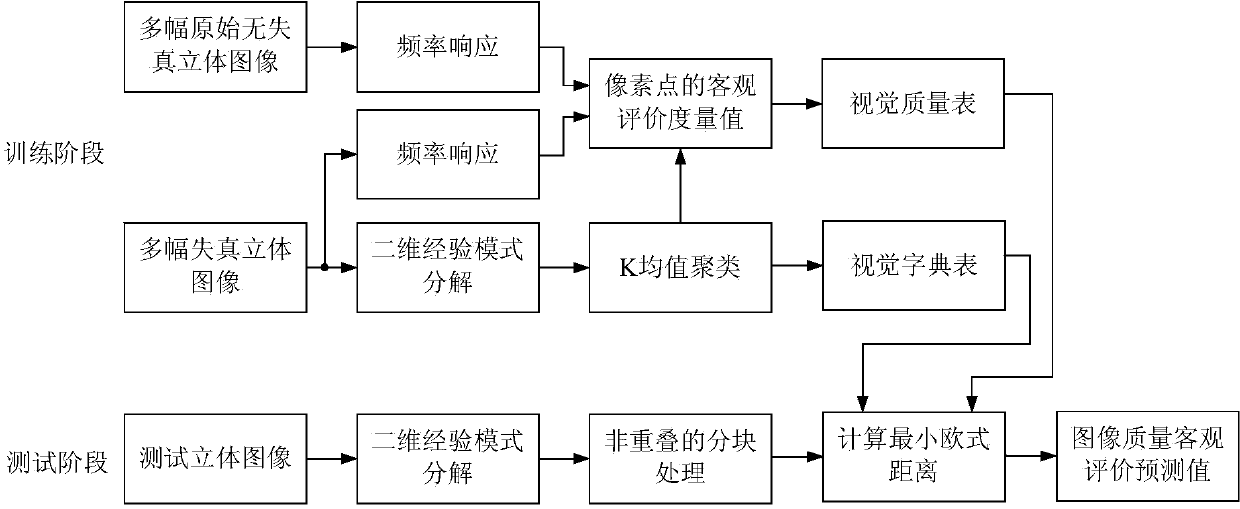

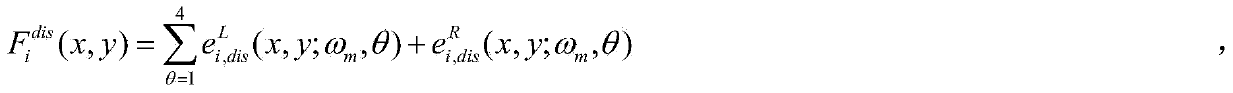

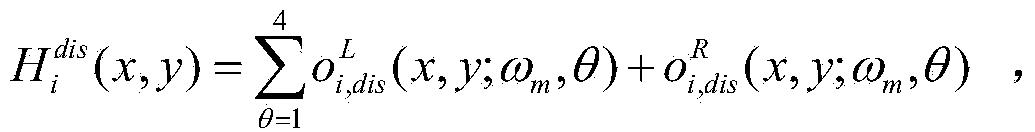

[0033] The overall block diagram of the no-reference quality evaluation method for blur-distorted stereoscopic images proposed by the present invention is shown in Figure 1. The method comprises two processes, a training phase and a testing phase. In the training phase, multiple original undistorted stereoscopic images and their corresponding blur-distorted stereoscopic images are selected to form a training image set. Each blur-distorted stereoscopic image in the training image set is decomposed by two-dimensional empirical mode decomposition to obtain intrinsic mode function images; each intrinsic mode function image is then divided into non-overlapping blocks, and a visual dictionary table is constructed using the K-means clustering method. The frequency response of each pixel in each original undistorted...
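The dictionary-construction step of the training phase (non-overlapping block processing followed by K-means clustering) can be illustrated with a minimal sketch. This is not the patent's implementation: the function names `extract_blocks`, `kmeans`, and `build_dictionary` are hypothetical, the block size, cluster count, and iteration count are assumed values that the text above does not specify, and the intrinsic mode function images are assumed to have been computed already by a separate two-dimensional empirical mode decomposition step. K-means is written here as plain Lloyd iterations in standard-library Python.

```python
import random

def extract_blocks(image, size):
    """Split a 2-D image (list of rows) into non-overlapping size x size
    blocks, each flattened row by row. Edge pixels that do not fill a
    whole block are dropped (an assumption of this sketch)."""
    rows = len(image) // size * size
    cols = len(image[0]) // size * size
    blocks = []
    for r in range(0, rows, size):
        for c in range(0, cols, size):
            blocks.append([image[r + i][c + j]
                           for i in range(size) for j in range(size)])
    return blocks

def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def kmeans(vectors, k, iterations=20, seed=0):
    """Plain Lloyd's K-means; returns k centroids (the visual words)."""
    rng = random.Random(seed)
    centroids = [list(v) for v in rng.sample(vectors, k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for v in vectors:
            nearest = min(range(k), key=lambda i: sq_dist(v, centroids[i]))
            clusters[nearest].append(v)
        for i, members in enumerate(clusters):
            if members:  # an empty cluster keeps its previous centroid
                centroids[i] = [sum(col) / len(members)
                                for col in zip(*members)]
    return centroids

def build_dictionary(imf_images, block_size=8, k=4):
    """Pool blocks from all IMF images and cluster them into k visual words.
    block_size and k are illustrative defaults, not values from the patent."""
    blocks = []
    for imf in imf_images:
        blocks.extend(extract_blocks(imf, block_size))
    return kmeans(blocks, k)

if __name__ == "__main__":
    # Toy 4x4 stand-in for an intrinsic mode function image.
    toy_imf = [[r * 4 + c for c in range(4)] for r in range(4)]
    dictionary = build_dictionary([toy_imf], block_size=2, k=2)
    print(len(dictionary), "visual words of length", len(dictionary[0]))
```

Each centroid returned by `build_dictionary` plays the role of one entry in the visual dictionary table. In practice the block size and the number of clusters would need to be chosen to match the values given in the full patent description.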
