Real-time human action recognition method and device based on depth image sequences

A human action recognition technique based on depth images, applied in the field of pattern recognition. It addresses two problems: normalization deviation, which degrades recognition accuracy, and recognition efficiency that needs improvement.

Embodiment Construction

[0060] Specific implementations of the present invention are described below with reference to the drawings and embodiments. The following examples only illustrate the present invention and do not limit its scope.

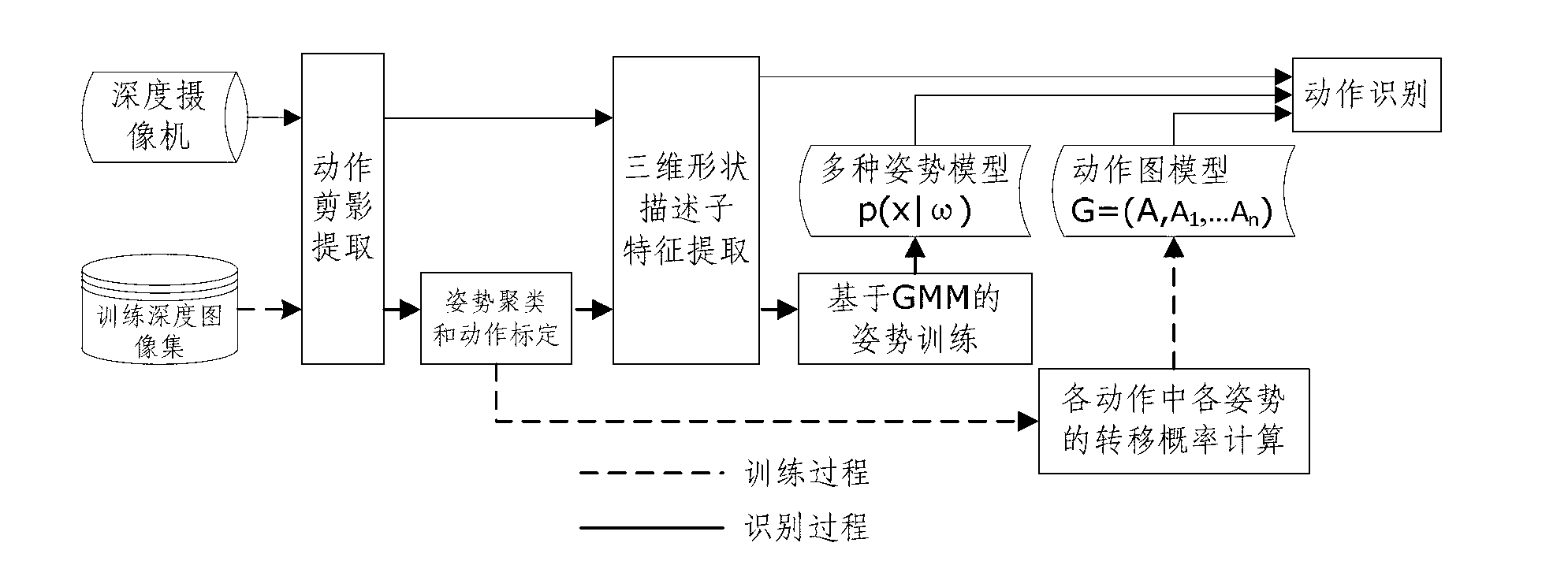

[0061] As shown in the flowchart of Figure 1, a real-time human action recognition method based on a depth image sequence mainly includes the following steps:

[0062] S1. From the target depth image sequence collected by hardware such as a depth camera, accurately segment the human body region using background modeling, image segmentation, and similar techniques, and extract the target action silhouette R (for example, as shown in the first column of Figure 2). Extract training action silhouettes from the training depth image set in the same way.
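The silhouette extraction in S1 can be illustrated with a minimal sketch. The text does not say which background model or segmentation method is used, so everything here is an assumption for illustration: a static per-pixel median background, a fixed depth-difference threshold (`min_diff`), depth values in millimetres, and a depth of 0 meaning an invalid sensor reading. The function names are hypothetical.

```python
import numpy as np

def estimate_background(frames):
    """Per-pixel median depth over frames assumed to show the empty scene."""
    return np.median(np.stack(frames).astype(np.float64), axis=0)

def extract_silhouette(depth, background, min_diff=100.0):
    """Boolean mask of pixels clearly closer to the camera than the background.

    min_diff is a hypothetical threshold (assumed millimetres); pixels with
    depth 0 are treated as invalid readings and excluded from the mask.
    """
    depth = depth.astype(np.float64)
    valid = depth > 0
    return valid & ((background - depth) > min_diff)

def bounding_box(mask):
    """Tight box (top, bottom, left, right) around the mask, or None if empty."""
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    if rows.size == 0:
        return None
    return int(rows[0]), int(rows[-1]), int(cols[0]), int(cols[-1])

# Synthetic example: a flat wall at 3000 mm and a "person" at 1500 mm.
background = estimate_background([np.full((6, 8), 3000) for _ in range(5)])
frame = np.full((6, 8), 3000)
frame[1:5, 2:4] = 1500   # foreground region, 4 rows by 2 columns
frame[0, 0] = 0          # invalid sensor reading, must not enter the mask
R = extract_silhouette(frame, background)
box = bounding_box(R)
```

In this toy frame, `R` marks only the 4-by-2 foreground block. A real pipeline would likely need noise filtering and connected-component selection, which this sketch omits.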

[0063] S2. Perform posture clustering on the training action silhouettes, and perform action calibration on the clustering results; that is, classify each po...
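As a rough illustration of the posture clustering in S2, the sketch below groups binary silhouettes with k-means. The available text is truncated and names neither the clustering algorithm nor the silhouette features, so k-means on flattened masks, the farthest-point initialization, and the function names are all assumptions. The silhouettes are also assumed to have been brought to a common size already. The action calibration of the clusters is not shown.

```python
import numpy as np

def init_centers(X, k):
    """Deterministic farthest-point initialization (an illustrative choice)."""
    centers = [X[0]]
    for _ in range(1, k):
        d = np.min([((X - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(X[d.argmax()])
    return np.array(centers, dtype=np.float64)

def assign(X, centers):
    """Index of the nearest center (squared Euclidean distance) for each row."""
    d = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    return d.argmin(axis=1)

def cluster_postures(silhouettes, k, iters=50):
    """Cluster equally sized binary silhouettes into k posture groups."""
    X = np.array([np.asarray(s, dtype=np.float64).ravel() for s in silhouettes])
    centers = init_centers(X, k)
    for _ in range(iters):
        labels = assign(X, centers)
        new_centers = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return assign(X, centers), centers

# Toy data: two "standing" masks (a vertical bar) and two "arms out" masks
# (a horizontal bar), each pair differing by one noise pixel.
stand = np.zeros((4, 4)); stand[:, 1] = 1
arms = np.zeros((4, 4)); arms[1, :] = 1
stand_noisy = stand.copy(); stand_noisy[3, 3] = 1
arms_noisy = arms.copy(); arms_noisy[0, 3] = 1
labels, centers = cluster_postures([stand, stand_noisy, arms, arms_noisy], k=2)
```

Here the two vertical-bar masks end up in one cluster and the two horizontal-bar masks in the other. Raw pixel vectors are only the simplest possible feature and are sensitive to scale and position.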
