Three-dimensional head modeling method based on two images

A three-dimensional head modeling method that builds head shape and texture from two images, one frontal and one in profile. It addresses the immaturity of existing three-dimensional head modeling technology, reduces the amount of manual interaction required, is simple and practical, and has broad application prospects.

Detailed Description of the Embodiments

[0023] In order to make the object, technical solution and advantages of the present invention clearer, the present invention will be described in further detail below in conjunction with specific embodiments and with reference to the accompanying drawings.

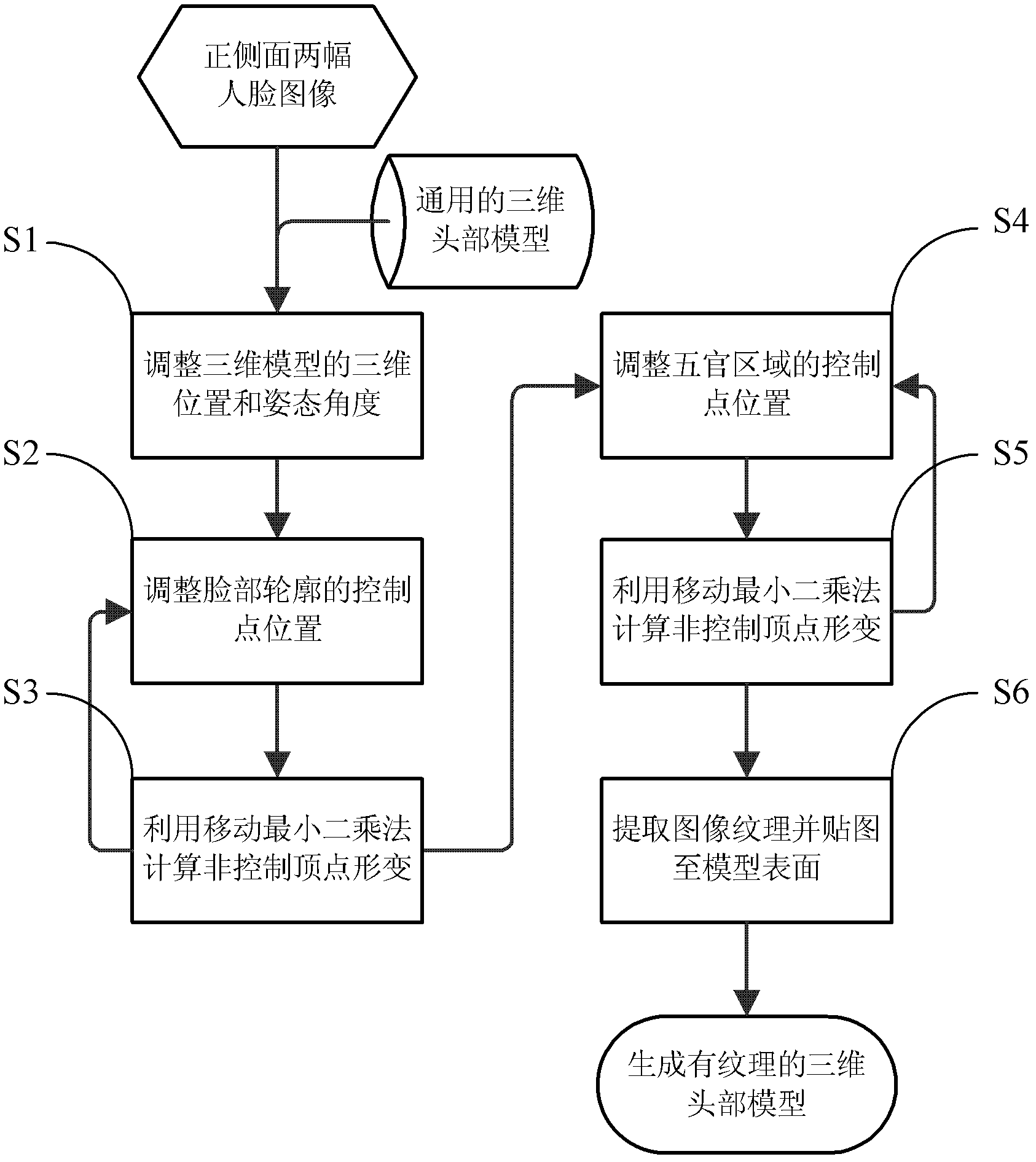

[0024] As shown in Figure 1, the present invention provides a three-dimensional head modeling method based on two images, which specifically comprises the following steps:

[0025] As shown in Figure 1, the input data of the present invention are two face images, one frontal and one in profile, and a generic three-dimensional head model. The face images can be captured by the user with a webcam or taken from everyday digital photos. The generic three-dimensional head model consists of 229 three-dimensional vertices, and the subsequent deformation process starts from this model.

[0026] Step S1: Adjust the 3D position and attitude angle of the 3D head model so that it is approximately con...
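
As an illustration of what adjusting the 3D position and attitude angle of a vertex model can look like, the following is a minimal sketch and not the patented procedure. It applies a rigid transform (rotation, then translation) to a list of vertices. The function names `rotation_matrix` and `pose_vertices`, the yaw/pitch/roll convention, and the placeholder vertex data are all assumptions made for illustration; the source does not state how the pose is parameterized or how the alignment target is chosen.

```python
import math

def rotation_matrix(yaw, pitch, roll):
    """Return the 3x3 rotation R = Rz(roll) * Ry(yaw) * Rx(pitch).

    Angles are in radians. This axis convention is an assumption,
    not something specified by the source text.
    """
    cx, sx = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    cz, sz = math.cos(roll), math.sin(roll)
    return [
        [cz * cy, cz * sy * sx - sz * cx, cz * sy * cx + sz * sx],
        [sz * cy, sz * sy * sx + cz * cx, sz * sy * cx - cz * sx],
        [-sy,     cy * sx,                cy * cx],
    ]

def pose_vertices(vertices, angles, translation):
    """Rotate every vertex by `angles` (yaw, pitch, roll), then translate it.

    `vertices` is a list of (x, y, z) tuples; a new list is returned
    and the input is left unchanged.
    """
    r = rotation_matrix(*angles)
    posed = []
    for x, y, z in vertices:
        posed.append(tuple(
            r[i][0] * x + r[i][1] * y + r[i][2] * z + translation[i]
            for i in range(3)
        ))
    return posed

# Placeholder stand-in for the generic model: 229 vertices, as in the text.
# The coordinates are dummy values, not the actual head model.
generic_model = [(float(i), 0.0, 0.0) for i in range(229)]

# Hypothetical pose: turn the model 90 degrees about the vertical axis
# and shift it 5 units along z.
posed_model = pose_vertices(generic_model, (math.pi / 2, 0.0, 0.0), (0.0, 0.0, 5.0))
```

In practice the pose parameters would be tuned, manually or automatically, until the projected model roughly matches the face in the input images; that matching criterion is outside the scope of this sketch.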

