Method for video semantic mining

A video semantic mining technique, applicable to special-purpose data processing, instruments, and electrical digital data processing, that addresses the high error rate of video content labeling, the failure to comprehensively consider effective integration, and the lack of descriptive information.

Embodiment Construction

[0018] Various details involved in the technical solution of the present invention will be described in detail below in conjunction with the accompanying drawings. It should be pointed out that the described embodiments are only intended to facilitate the understanding of the present invention, rather than limiting it in any way.

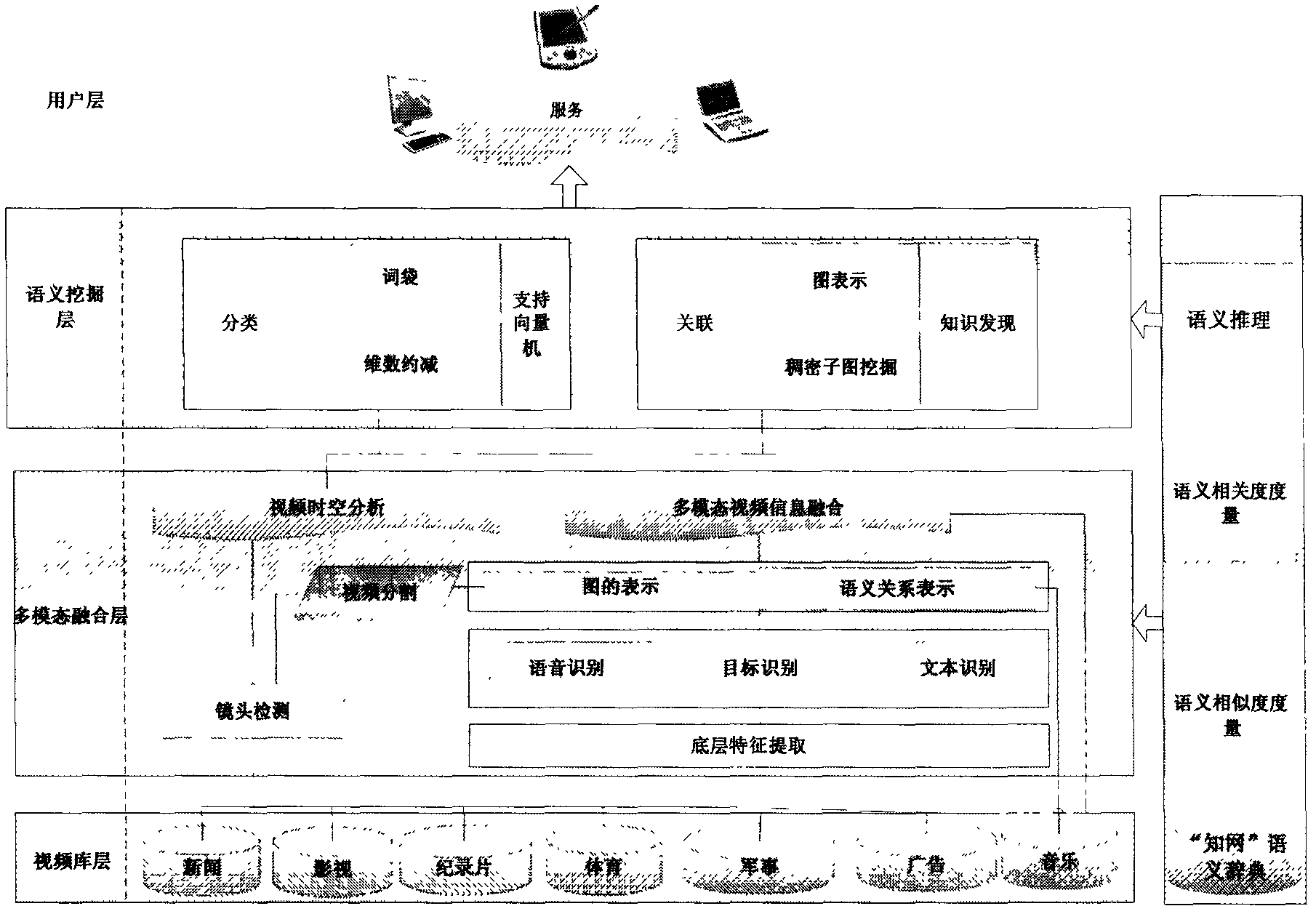

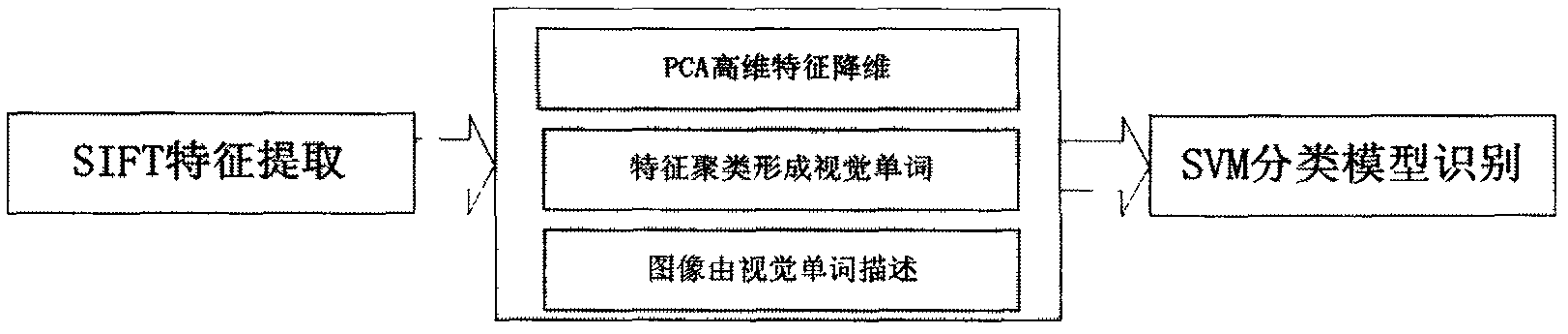

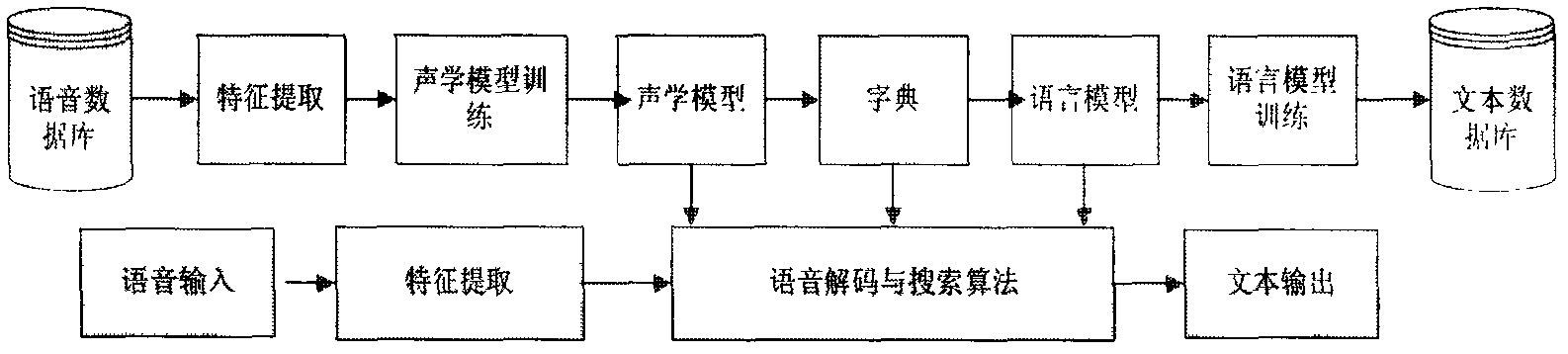

[0019] The present invention proposes a method for video semantic mining. As shown in Figure 1, the processing flow is divided into four layers. The bottom layer is the video library layer, which stores video resources in various forms. Above the video library is the multi-modal fusion layer, which performs structural analysis of the video and the recognition and effective fusion of images, text, and speech. The next layer up is the video mining layer, which implements the graphical model representation of video and the video mining algorithm based on dense subgraph discovery. In addition, video classificati...
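To illustrate the kind of computation the video mining layer might perform, the following is a minimal sketch of dense subgraph discovery. The excerpt above does not specify the algorithm, the graph construction, or the density measure, so everything here is an assumption: the video is taken to be an undirected graph whose nodes are hypothetical shot identifiers (`s1` ... `s6`) and whose edges link shots that share fused semantic concepts, density is defined as edges divided by vertices, and the search uses the well-known greedy peeling heuristic rather than the method claimed in the patent.

```python
from collections import defaultdict


def densest_subgraph(edges):
    """Greedy peeling heuristic for the densest subgraph (an assumed stand-in,
    not the patented algorithm).

    edges: iterable of (u, v) pairs of an undirected simple graph.
    Returns (vertex_set, density), where density = |E| / |V|.
    """
    # Build adjacency sets, ignoring self-loops.
    adj = defaultdict(set)
    for u, v in edges:
        if u != v:
            adj[u].add(v)
            adj[v].add(u)

    remaining = set(adj)
    num_edges = sum(len(nbrs) for nbrs in adj.values()) // 2
    best_set, best_density = set(remaining), 0.0

    while remaining:
        # Record the current subgraph if it is the densest seen so far.
        density = num_edges / len(remaining)
        if density > best_density:
            best_set, best_density = set(remaining), density

        # Peel off the vertex with the smallest degree (ties broken by name).
        u = min(remaining, key=lambda x: (len(adj[x]), str(x)))
        for w in adj[u]:
            adj[w].discard(u)
        num_edges -= len(adj[u])
        adj[u].clear()
        remaining.remove(u)

    return best_set, best_density


# Hypothetical shot graph: s1..s4 are tightly related, s5 and s6 trail off.
shot_edges = [
    ("s1", "s2"), ("s1", "s3"), ("s1", "s4"),
    ("s2", "s3"), ("s2", "s4"), ("s3", "s4"),
    ("s4", "s5"), ("s5", "s6"),
]
cluster, density = densest_subgraph(shot_edges)
print(sorted(cluster), density)
```

In this toy graph the four mutually connected shots form the densest group, which is the sort of cluster a mining layer could then pass on as a candidate semantic unit. Greedy peeling is chosen here only because it is short and easy to follow; it returns an approximate answer, not necessarily the exact optimum.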
