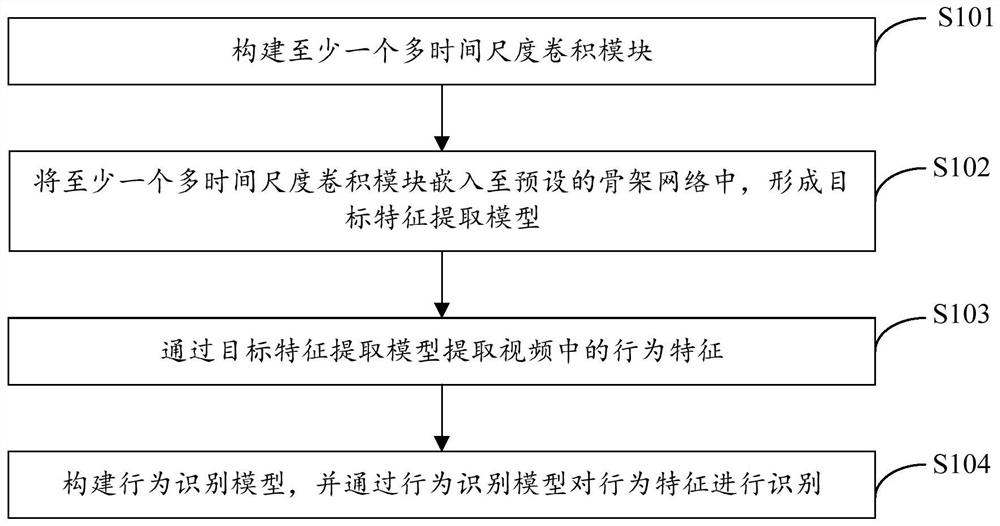

Video behavior identification method and device based on multi-time scale convolution

A multi-time-scale video behavior recognition technology, applied in character and pattern recognition, instruments, biological neural network models, and related fields. It addresses the problem of low recognition accuracy, with the effect of improving recognition accuracy and enhancing robustness.



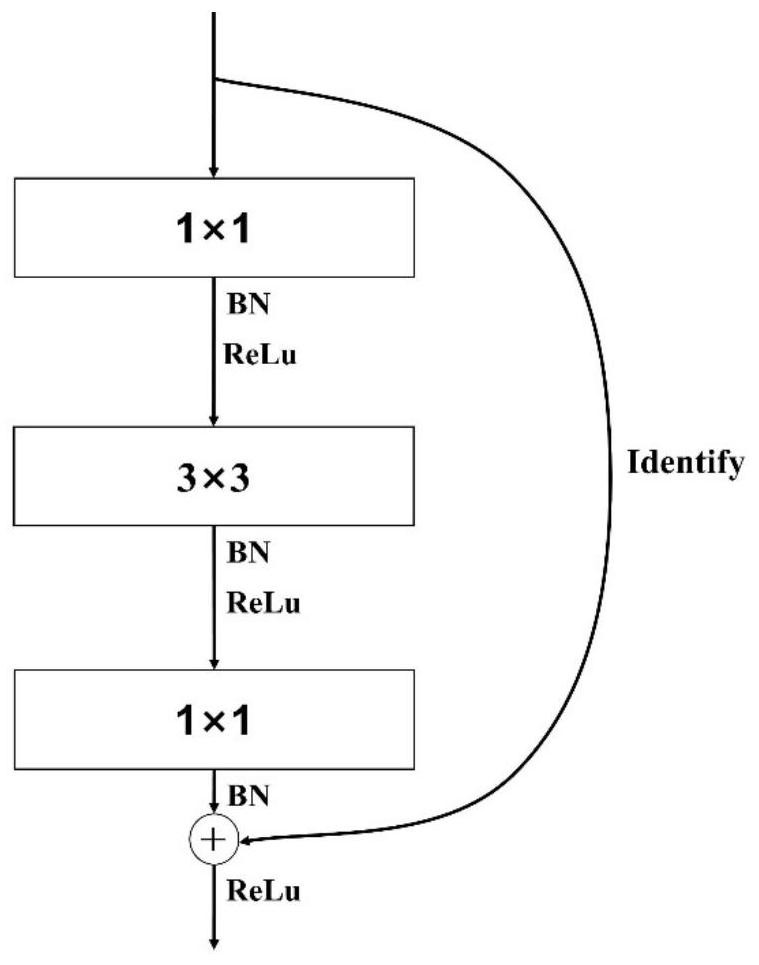

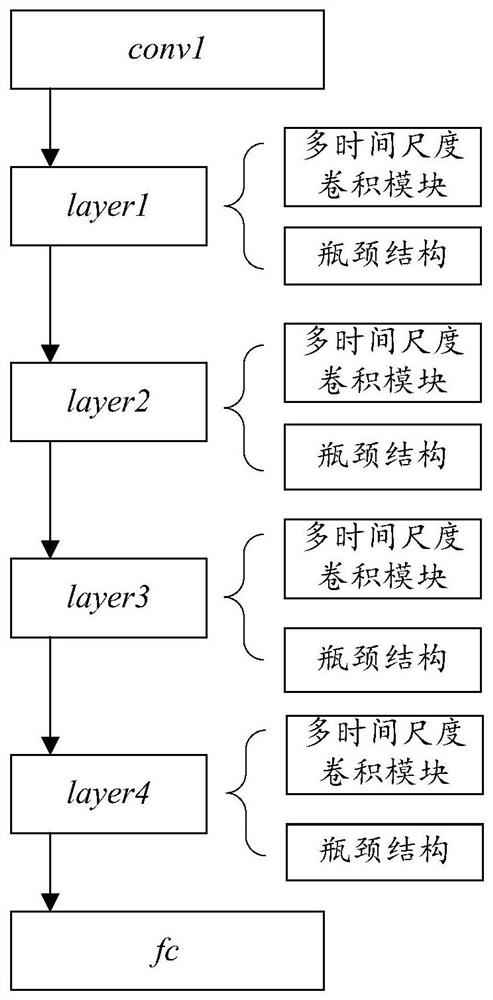


Embodiment Construction

[0052] The technical solutions in the embodiments of the present invention are described below with reference to the drawings of those embodiments. Obviously, the described embodiments are only some of the embodiments of the invention, not all of them. All other embodiments that those skilled in the art obtain from the embodiments of the present invention without creative labor fall within the scope of protection of the present invention.

[0053] In the description of the embodiments of the present application, "multiple" means two or more unless otherwise stated.

[0054] Reference herein to an "embodiment" means that a specific feature, structure, or characteristic described in connection with that embodiment may be included in at least one embodiment of the invention. The appearance of this phrase at various positions in the specification does not necessarily refer to the same embodiment, nor to a separate or alternative embodiment that is mutually exclusive of other embodiments. Those skilled in the art will understand that the embodiments described herein ...


© 2025 PatSnap. All rights reserved.