Pedestrian position detection and tracking method and system

A face detection and pedestrian detection technology in the field of image processing. It addresses problems such as the inability to track a target over time, the inability to meet the real-time requirements of subsequent target tracking, and the inability to balance real-time performance and robustness at the same time. Stated effects: enhanced self-adaptive capability, ensured accuracy, and precise results.


Examples

Embodiment 1

[0060] As shown in Figure 1, the pedestrian position detection and tracking method of this embodiment includes:

[0061] S101: Obtain the video stream of the preset scene, input the frames of the video stream into the face detection model one by one in sequence, and output target frames giving the face position and face size of each pedestrian in the video stream, wherein the face detection model is obtained by pre-training a preset neural network.
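Step S101 can be sketched as a loop that feeds frames to the detector strictly in stream order and keeps one list of face target frames per frame. This is a minimal sketch under assumptions: the names `FaceBox`, `detect_faces_in_stream` and `stub_detector` are hypothetical, the (x, y, w, h) box layout is chosen for illustration, and the stub merely stands in for the pre-trained network, whose architecture the source does not specify.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Sequence

# A grayscale frame as rows of pixel values (illustrative representation).
Frame = Sequence[Sequence[int]]


@dataclass(frozen=True)
class FaceBox:
    """Face target frame: (x, y) is the top-left corner (position), (w, h) the size."""
    x: int
    y: int
    w: int
    h: int


def detect_faces_in_stream(
    frames: Iterable[Frame],
    face_detector: Callable[[Frame], List[FaceBox]],
) -> List[List[FaceBox]]:
    """Feed frames to the detector one at a time, in stream order,
    and collect the face boxes reported for each frame."""
    return [face_detector(frame) for frame in frames]


def stub_detector(frame: Frame) -> List[FaceBox]:
    """Hypothetical stand-in for the pre-trained network: reports one fixed
    box whenever any pixel is brighter than 200, otherwise no faces."""
    if any(p > 200 for row in frame for p in row):
        return [FaceBox(1, 1, 2, 2)]
    return []


stream = [[[0, 0], [0, 0]], [[0, 255], [0, 0]]]
print(detect_faces_in_stream(stream, stub_detector))
```

Passing the detector as a callable keeps the frame loop independent of whichever trained model is plugged in.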

[0062] In a specific implementation, before the frames of the video stream are input into the face detection model in sequence, the method further includes preprocessing each frame of the video stream, as follows:

[0063] Each frame is first converted to gray scale; median filtering then removes noise interference to reduce the influence of illumination on detection; finally, image enhancement highlights the region of interest.
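The three preprocessing stages can be sketched in pure Python on a frame stored as rows of (R, G, B) tuples. Assumptions, none of which come from the source: gray scale uses the ITU-R BT.601 luma weights, the median filter is 3x3 with edge replication, and a linear contrast stretch stands in for the unspecified "image enhancement". All function names are hypothetical.

```python
from statistics import median
from typing import List, Tuple

RGB = Tuple[int, int, int]
Gray = List[List[int]]


def to_gray(frame: List[List[RGB]]) -> Gray:
    """Gray-scale transform using ITU-R BT.601 luma weights (assumed)."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
            for row in frame]


def median_filter3(gray: Gray) -> Gray:
    """3x3 median filter; out-of-range neighbours reuse the nearest edge pixel."""
    h, w = len(gray), len(gray[0])
    out: Gray = []
    for y in range(h):
        row = []
        for x in range(w):
            window = [
                gray[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                for dy in (-1, 0, 1)
                for dx in (-1, 0, 1)
            ]
            row.append(int(median(window)))  # 9 values, so the median is one of them
        out.append(row)
    return out


def contrast_stretch(gray: Gray) -> Gray:
    """Linearly map [min, max] of the frame onto [0, 255] (stand-in enhancement)."""
    lo = min(min(row) for row in gray)
    hi = max(max(row) for row in gray)
    if hi == lo:  # flat frame: nothing to stretch
        return [row[:] for row in gray]
    return [[round((p - lo) * 255 / (hi - lo)) for p in row] for row in gray]


def preprocess(frame: List[List[RGB]]) -> Gray:
    """Gray-scale transform, then median filtering, then enhancement."""
    return contrast_stretch(median_filter3(to_gray(frame)))
```

The median filter suits isolated noise pixels because a single outlier never becomes the middle value of its 3x3 window; a mean filter would instead smear it into its neighbours.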

[0064] In this embodiment, the accuracy...

Embodiment 2

[0115] As shown in Figure 3, this embodiment provides a pedestrian position detection and tracking system, which includes:

[0116] (1) A face detection module, configured to obtain the video stream of the preset scene, input the frames of the video stream into the face detection model one by one in sequence, and output target frames giving the face position and face size of each pedestrian in the video stream, wherein the face detection model is pre-trained from a preset neural network;

[0117] In a specific implementation, before the frames of the video stream are input into the face detection model in sequence, each frame of the video stream is also preprocessed, as follows:

[0118] Each frame is first converted to gray scale; median filtering then removes noise interference to reduce the influence of illumination on detection; finally, the region of interest is highlighted through image enhance...

Embodiment 3

[0139] This embodiment provides a computer-readable storage medium storing a computer program which, when executed by a processor, implements the steps of the pedestrian position detection and tracking method described in Embodiment 1.

[0140] In this embodiment, a target frame is derived from the physical characteristics of the human body and is then corrected by fusing it with the detection result of the background subtraction method. This improves the accuracy of target pedestrian detection and of target frame detection and segmentation, and enhances the adaptability of the method.
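One way to picture this correction is sketched below, under several assumptions the source does not state: the body frame is extrapolated from the face frame with hypothetical proportions (body about 3 face widths wide and 7 face heights tall), background subtraction is a simple per-pixel absolute difference against a background frame with a hypothetical threshold of 30, and "fusion" is taken to mean shrinking the estimated frame to the tight bounding box of foreground pixels inside it (keeping the estimate when none are found). All names and constants are illustrative.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x, y, w, h), top-left origin
Gray = List[List[int]]
Mask = List[List[bool]]

# Hypothetical body proportions; the source gives no numbers.
BODY_WIDTH_PER_FACE = 3.0
BODY_HEIGHT_PER_FACE = 7.0


def body_box_from_face(face: Box) -> Box:
    """Extrapolate a full-body frame centred horizontally under the face."""
    fx, fy, fw, fh = face
    bw = round(fw * BODY_WIDTH_PER_FACE)
    bh = round(fh * BODY_HEIGHT_PER_FACE)
    bx = round(fx + fw / 2 - bw / 2)
    return (bx, fy, bw, bh)


def foreground_mask(gray: Gray, background: Gray, threshold: int = 30) -> Mask:
    """Background subtraction: pixels differing from the background by more
    than the threshold are marked as foreground."""
    return [[abs(p - b) > threshold for p, b in zip(row, brow)]
            for row, brow in zip(gray, background)]


def fuse(estimate: Box, mask: Mask) -> Box:
    """Correct the estimated frame to the tight bounding box of the
    foreground pixels lying inside it (clipped to the image)."""
    h, w = len(mask), len(mask[0])
    x0, y0 = max(estimate[0], 0), max(estimate[1], 0)
    x1 = min(estimate[0] + estimate[2], w)
    y1 = min(estimate[1] + estimate[3], h)
    xs, ys = [], []
    for y in range(y0, y1):
        for x in range(x0, x1):
            if mask[y][x]:
                xs.append(x)
                ys.append(y)
    if not xs:  # no foreground support: keep the body-proportion estimate
        return estimate
    return (min(xs), min(ys), max(xs) - min(xs) + 1, max(ys) - min(ys) + 1)
```

Restricting the search to the estimated frame keeps unrelated moving regions elsewhere in the image from enlarging the corrected frame.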

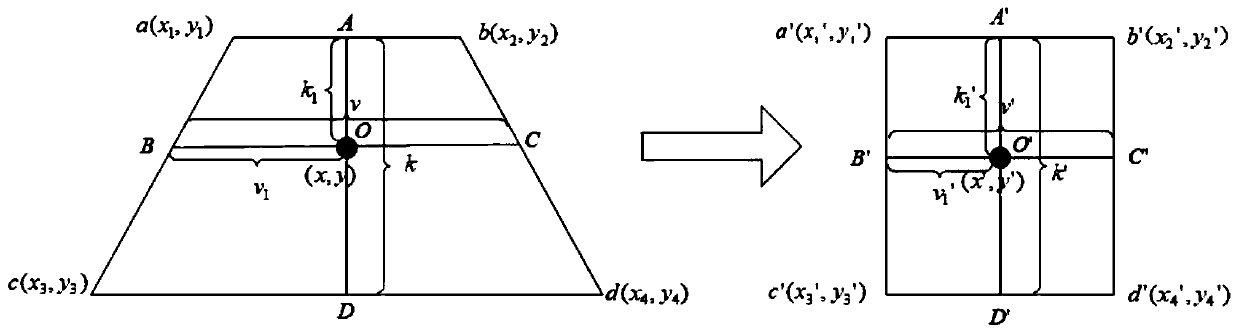

[0141] In this embodiment, the corrected position corresponding to the pedestrian target frame is obtained from the mapping relationship between the pedestrian target and geographic locations in the preset scene, and the specific position of the pedestrian is determined using the coordinate relationship between the pedes...
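The source does not say what form the image-to-scene mapping takes. A common choice for pedestrians walking on a flat floor is a pre-calibrated 3x3 planar homography applied to the foot point (bottom centre) of the target frame; the sketch below assumes exactly that, and the matrix `H` and function names are hypothetical.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x, y, w, h) in image pixels
Matrix3 = Sequence[Sequence[float]]      # assumed pre-calibrated homography


def foot_point(box: Box) -> Tuple[float, float]:
    """Bottom-centre of the target frame, where the pedestrian meets the floor."""
    x, y, w, h = box
    return (x + w / 2, y + h)


def image_to_ground(H: Matrix3, u: float, v: float) -> Tuple[float, float]:
    """Apply the homography to (u, v, 1) and divide by the third component."""
    X = H[0][0] * u + H[0][1] * v + H[0][2]
    Y = H[1][0] * u + H[1][1] * v + H[1][2]
    W = H[2][0] * u + H[2][1] * v + H[2][2]
    if W == 0:
        raise ValueError("point maps to infinity under this homography")
    return (X / W, Y / W)


def pedestrian_ground_position(H: Matrix3, box: Box) -> Tuple[float, float]:
    """Scene (ground-plane) coordinates of the pedestrian's foot point."""
    return image_to_ground(H, *foot_point(box))


# Illustrative calibration: 0.01 m per pixel, origin shifted by (-1 m, -2 m).
H = [[0.01, 0.0, -1.0], [0.0, 0.01, -2.0], [0.0, 0.0, 1.0]]
print(pedestrian_ground_position(H, (90, 100, 20, 100)))
```

The foot point is used rather than the frame centre because only points on the floor plane satisfy the planar-homography assumption.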
