A method and system for intelligently entering addresses in express delivery scenarios

An intelligent address-entry technology, applied in speech analysis, speech recognition, instruments, etc. It solves problems such as high labor costs, low efficiency, and customers' inability to place orders, and improves accuracy.


Examples

Embodiment 1

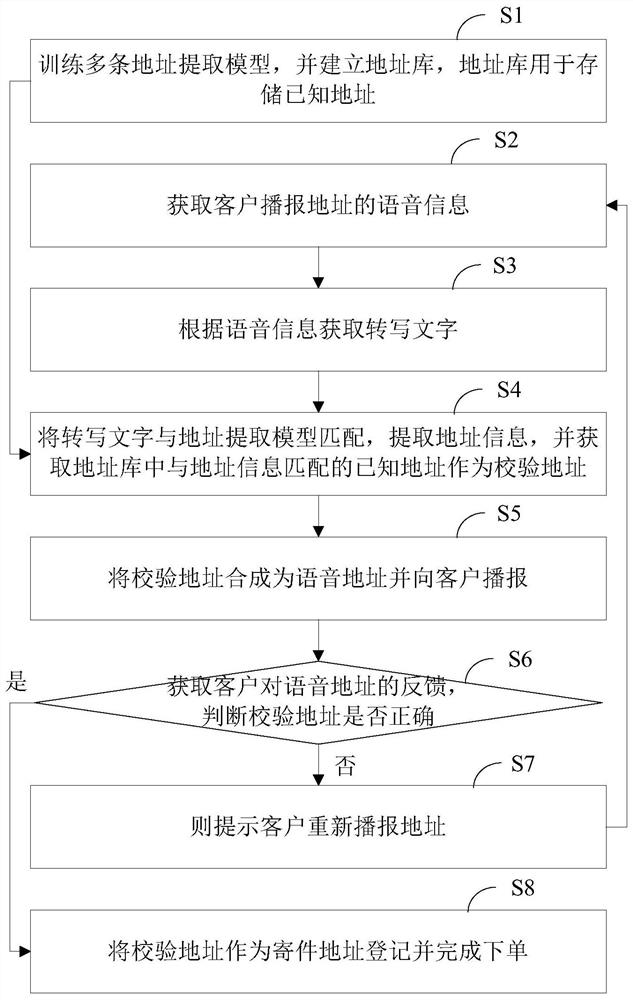

[0046] Referring to Figure 1, the method proposed by the present invention for intelligently entering an address in an express delivery scenario comprises the following steps:

[0047] S1. Train multiple address extraction models and establish an address library for storing known addresses.

[0048] S2. Obtain the voice information in which the customer states the address.

[0049] S3. Obtain the transcribed text from the voice information.

[0050] S4. Match the transcribed text against the address extraction models to extract address information, and take the known address in the address library that matches this address information as the verified address.

[0051] In this way, the verified address finally obtained in step S4 has been both extracted by the address extraction model and checked against the address library, which greatly improves its accuracy.

[0052] S5. Synthesize the verified address into speech and broadcast ...
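Step S4 can be sketched as follows. The patent does not say how address information is extracted or how it is matched against the address library, so this is only a minimal illustration under stated assumptions: a regular-expression cue phrase stands in for the trained address extraction models, the address library is a plain list with hypothetical entries, and matching uses fuzzy string similarity from Python's standard `difflib` module. The names `ADDRESS_LIBRARY`, `normalize`, `extract_address` and `verify_address` are hypothetical.

```python
import difflib
import re

# Hypothetical address library (S1): known addresses stored as plain strings.
ADDRESS_LIBRARY = [
    "12 Garden Road, Xihu District, Hangzhou",
    "88 Binjiang Avenue, Binjiang District, Hangzhou",
    "5 Harbour Street, Nanshan District, Shenzhen",
]


def normalize(text: str) -> str:
    """Lower-case and collapse punctuation/whitespace so spoken and stored forms compare fairly."""
    text = re.sub(r"[^\w\s]", " ", text.lower())
    return " ".join(text.split())


def extract_address(transcript: str) -> str:
    """Stand-in for an address extraction model (assumption): keep the text after a cue phrase."""
    match = re.search(r"(?:send it to|deliver to|address is)\s+(.*)", transcript, re.IGNORECASE)
    return match.group(1) if match else transcript


def verify_address(address_info: str, library=ADDRESS_LIBRARY, cutoff: float = 0.6):
    """Return the known address that best matches the extracted text, or None if nothing is close."""
    normalized = {normalize(a): a for a in library}
    best = difflib.get_close_matches(normalize(address_info), list(normalized), n=1, cutoff=cutoff)
    return normalized[best[0]] if best else None
```

For example, `verify_address(extract_address("Hi, please send it to 12 garden road xihu district hangzhou"))` returns `"12 Garden Road, Xihu District, Hangzhou"`. A slightly misheard transcript still maps to the nearest known address, while text far from every library entry returns `None`; raising `cutoff` trades recall for fewer wrong matches.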

Embodiment 2

[0059] Compared with Embodiment 1, step S4 in this embodiment specifically comprises the following steps:

[0060] S41. Match the transcribed text against the address extraction model to extract address information.

[0061] S42. Synthesize the extracted address information into speech and broadcast it to the customer.

[0062] S43. Obtain the customer's feedback on the broadcast and determine from it whether the address information is correct; if it is not correct, execute step S7.

[0063] S44. If it is correct, take the known address in the address library that matches the address information as the verified address.

[0064] In this way, after the address information is extracted by the address extraction model, it is confirmed with the customer over the voice call. This ensures the accuracy of the address information that is matched against the address library, which helps improve the matching rate a...
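The confirmation loop of steps S41 to S44 can be sketched with the speech components passed in as callables, since the patent does not name a text-to-speech or speech-recognition engine. The function `confirm_and_verify`, the prompt wording, and the yes/no keyword check are illustrative assumptions; a `None` result marks the point where the embodiment hands over to step S7, which is not shown in this excerpt.

```python
from typing import Callable, Optional


def confirm_and_verify(
    address_info: str,
    speak: Callable[[str], None],
    get_feedback: Callable[[], str],
    match_library: Callable[[str], Optional[str]],
) -> Optional[str]:
    """Read the extracted address back and match it only if the customer confirms.

    Returns the verified address, or None when the customer rejects it
    (where the embodiment would continue with step S7).
    """
    # S42: in a real system `speak` would call a text-to-speech engine (assumption).
    speak(f"Please confirm your address: {address_info}. Say yes or no.")
    # S43: interpret the customer's already-transcribed reply with a simple keyword check.
    reply = get_feedback().strip().lower()
    confirmed = reply.startswith(("yes", "correct", "right"))
    if not confirmed:
        return None  # hand over to step S7 (not shown in this excerpt)
    # S44: only confirmed address information is matched against the address library.
    return match_library(address_info)
```

Injecting the speech and matching functions as parameters keeps the control flow testable without audio hardware, for example by passing `print` for `speak` and a lambda returning `"yes"` for `get_feedback`.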

Embodiment 3

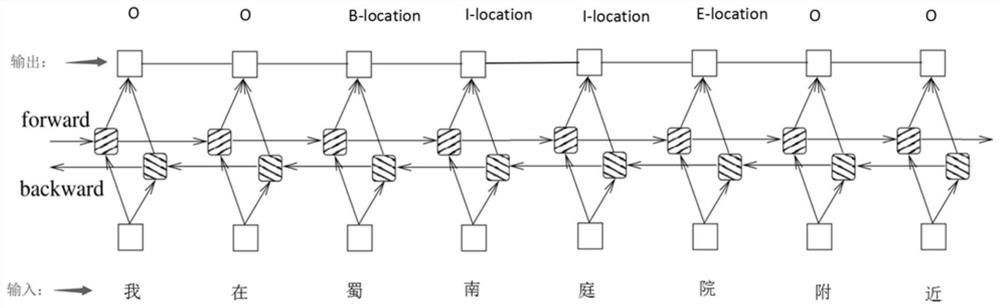

[0066] Compared with Embodiment 1, this embodiment uses a bidirectional LSTM neural network to train the address extraction model in step S1. Step S1 is specifically as follows: first, construct a plurality of templates for how customers state their address by voice; then extract known addresses from the address library and fill them into each template to obtain a training corpus. The training corpus is fed into the bidirectional LSTM neural network for training, and the parameters of each node of the network are obtained as slot labels. Step S4 is specifically: input the transcribed text into the bidirectional LSTM neural network, convert each character of the text into a text vector, and then compute the slot label of each character through the bidirectional LSTM neural network.
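The corpus construction in step S1 of this embodiment (filling known addresses into customer voice templates) can be sketched as below. This is a minimal illustration, assuming character-level BIO slot tags (`B-ADDR`, `I-ADDR`, `O`), a common labeling scheme for sequence labeling that the patent does not specify; `TEMPLATES`, `fill_templates` and the tag names are hypothetical. Training the bidirectional LSTM itself is omitted because it requires a deep learning framework.

```python
from typing import List, Tuple

# Hypothetical customer phrasing templates; each contains exactly one "{addr}" placeholder.
TEMPLATES = [
    "please deliver to {addr}",
    "my address is {addr} thanks",
]


def fill_templates(templates: List[str], addresses: List[str]) -> List[Tuple[List[str], List[str]]]:
    """Build (characters, slot labels) pairs by filling every template with every known address.

    Characters that came from the address are tagged B-ADDR / I-ADDR; all others are tagged O.
    """
    corpus = []
    for template in templates:
        prefix, suffix = template.split("{addr}")
        for addr in addresses:
            if not addr:
                continue  # an empty address would produce no B-ADDR character
            text = prefix + addr + suffix
            labels = (
                ["O"] * len(prefix)
                + ["B-ADDR"] + ["I-ADDR"] * (len(addr) - 1)
                + ["O"] * len(suffix)
            )
            corpus.append((list(text), labels))
    return corpus
```

Each (characters, labels) pair is one training sample; `fill_templates(TEMPLATES, ["5 Harbour St"])` yields two samples in which the address characters are tagged `B-ADDR` followed by `I-ADDR`.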

[0067] Specifically, in this embodiment, the parameters of each node of the bidirectional LSTM neural network are obtained as slot labels as follows: send the training forecas...
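Once the network has produced a slot label for each character of the transcribed text (step S4 in paragraph [0066]), the address information can be read off the label sequence. The sketch below assumes a BIO tagging scheme (`B-ADDR` starts an address span, `I-ADDR` continues it, `O` is outside), which the patent does not specify; `decode_slots` is a hypothetical helper, not the patent's own procedure.

```python
from typing import List


def decode_slots(chars: List[str], labels: List[str], slot: str = "ADDR") -> List[str]:
    """Collect contiguous B-/I- spans for `slot` from per-character labels."""
    spans: List[str] = []
    current: List[str] = []
    for ch, label in zip(chars, labels):
        if label == f"B-{slot}":
            # A new span begins; close any span still open.
            if current:
                spans.append("".join(current))
            current = [ch]
        elif label == f"I-{slot}" and current:
            current.append(ch)
        else:
            # "O", another slot, or an I- tag with no preceding B- tag ends the span.
            if current:
                spans.append("".join(current))
            current = []
    if current:
        spans.append("".join(current))
    return spans
```

The returned strings are the extracted address information that would then be matched against the address library.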
