A method and a device for 3D fusion display of a real scene and a virtual object

A technology for fusing real scenes with virtual objects, applied in fields such as data-processing input/output, user/computer interaction input/output, and image data processing. It aims to achieve a good fusion effect, strong realism, and a good user experience.


Examples

Embodiment 1

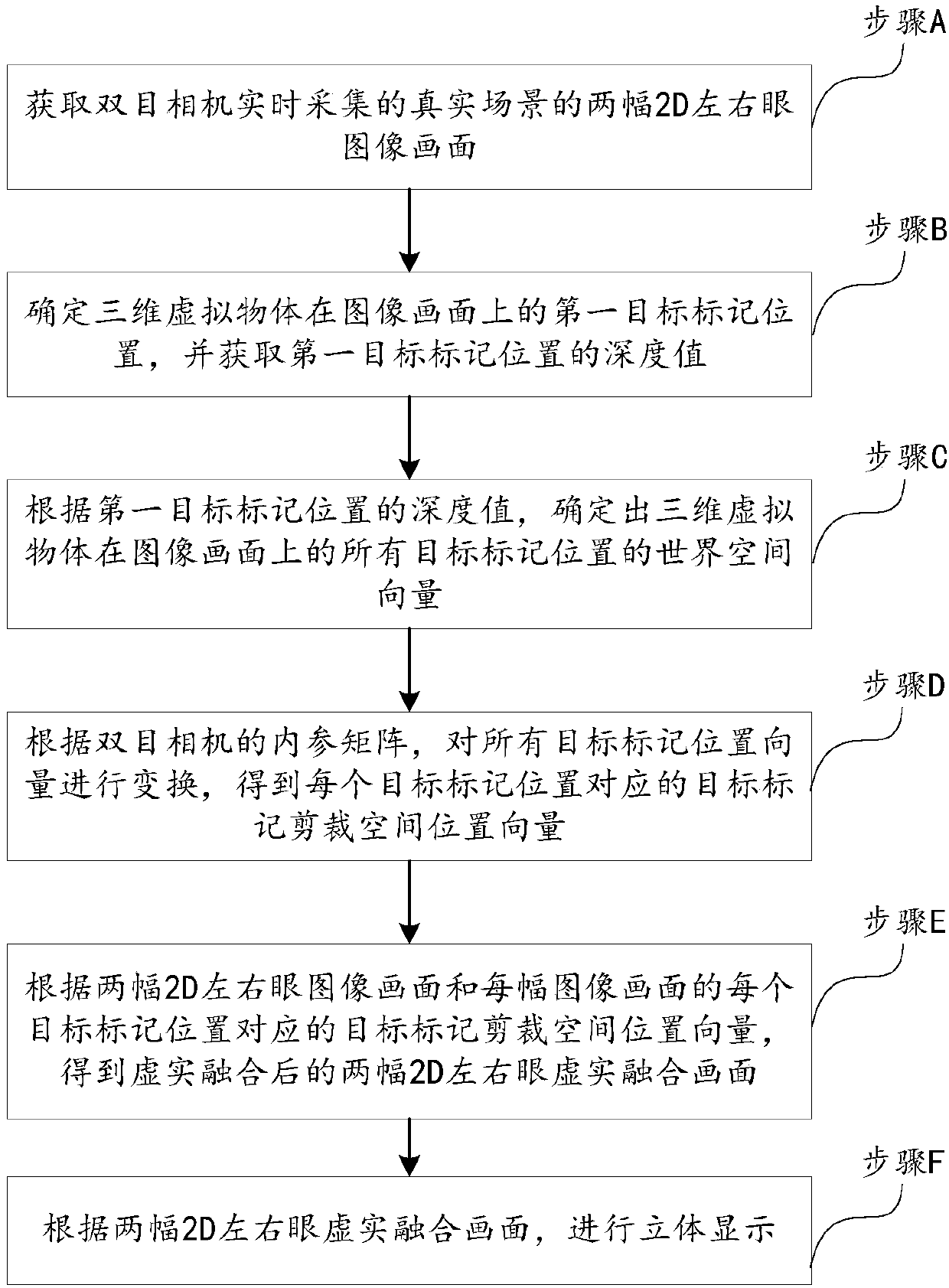

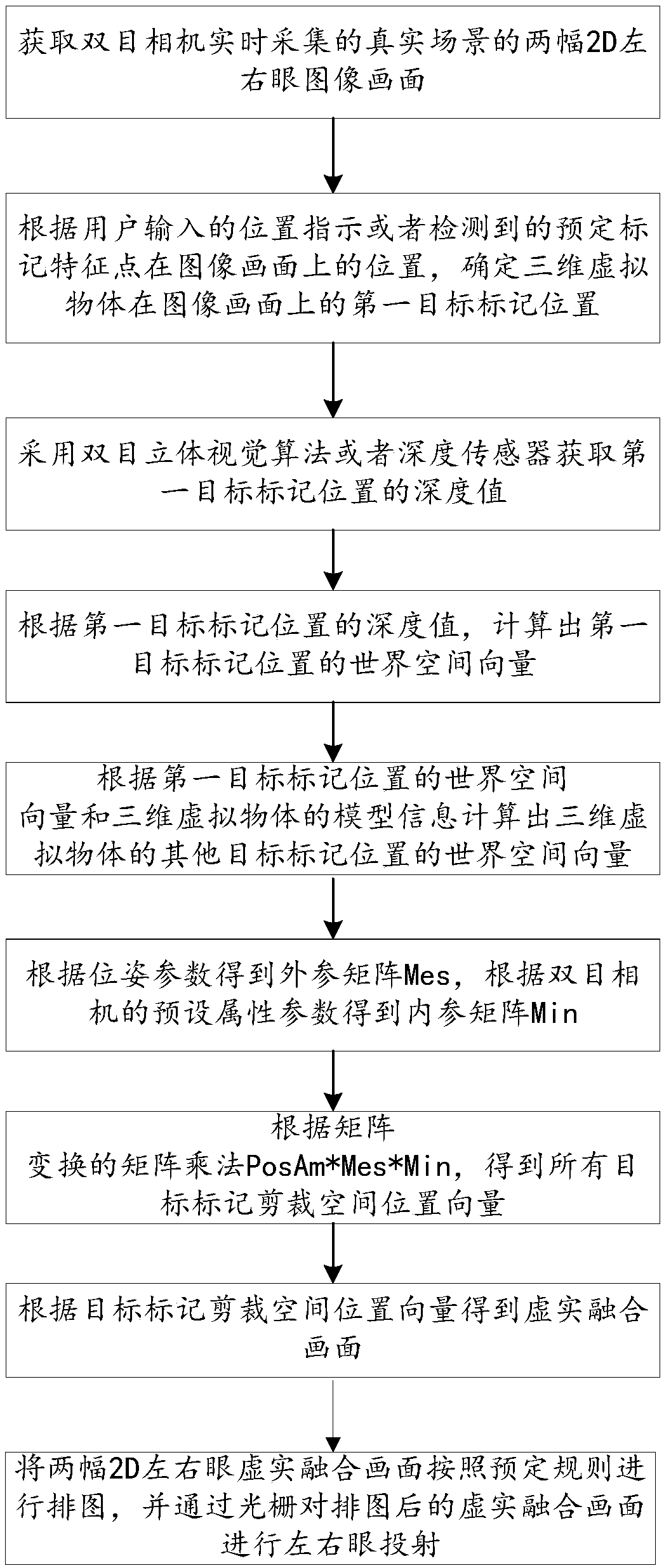

[0061] Figure 1 and Figure 2 are flow charts of a method for 3D fusion display of a real scene and a virtual object provided by Embodiment 1 of the present invention. As shown in Figure 1, the method includes the following steps:

[0062] In step A, the two 2D image frames of the real scene (left-eye and right-eye) collected by the binocular camera in real time are acquired.

[0063] The fusion display method in this embodiment can be applied to a terminal equipped with a binocular camera, for example a smartphone. The binocular camera includes a left camera and a right camera and collects real scene information in real time. Each real-time capture includes the left-eye image frame captured by the left camera and the right-eye image frame captured by the right camera.
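The left/right frame pair described in step A can be represented with a small container. The following is a minimal sketch only, not the patent's implementation: the names `StereoFrame` and `split_side_by_side` are hypothetical, frames are modeled as plain nested lists of pixel values, and it assumes the camera driver delivers the two views packed side by side in one buffer, which the source does not state.

```python
from dataclasses import dataclass
from typing import List

Row = List[int]  # one row of pixel values (illustrative grayscale)


@dataclass
class StereoFrame:
    """One real-time capture: a left-eye and a right-eye 2D image frame."""
    left: List[Row]
    right: List[Row]
    timestamp_ms: int

    def __post_init__(self) -> None:
        # Both views are expected to share the same resolution.
        if len(self.left) != len(self.right):
            raise ValueError("left and right frames differ in height")
        for l_row, r_row in zip(self.left, self.right):
            if len(l_row) != len(r_row):
                raise ValueError("left and right frames differ in width")


def split_side_by_side(frame: List[Row], timestamp_ms: int) -> StereoFrame:
    """Split a side-by-side buffer into left and right frames.

    Assumption (not from the source): the left view occupies the left
    half of each row and the right view the right half.
    """
    if not frame or len(frame[0]) % 2 != 0:
        raise ValueError("expected a non-empty frame with an even width")
    half = len(frame[0]) // 2
    left = [row[:half] for row in frame]
    right = [row[half:] for row in frame]
    return StereoFrame(left=left, right=right, timestamp_ms=timestamp_ms)
```

A device that exposes the two cameras as separate streams would skip the split and construct `StereoFrame` directly from the two frames.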

[0064] For each image frame of the left-...

Embodiment 2

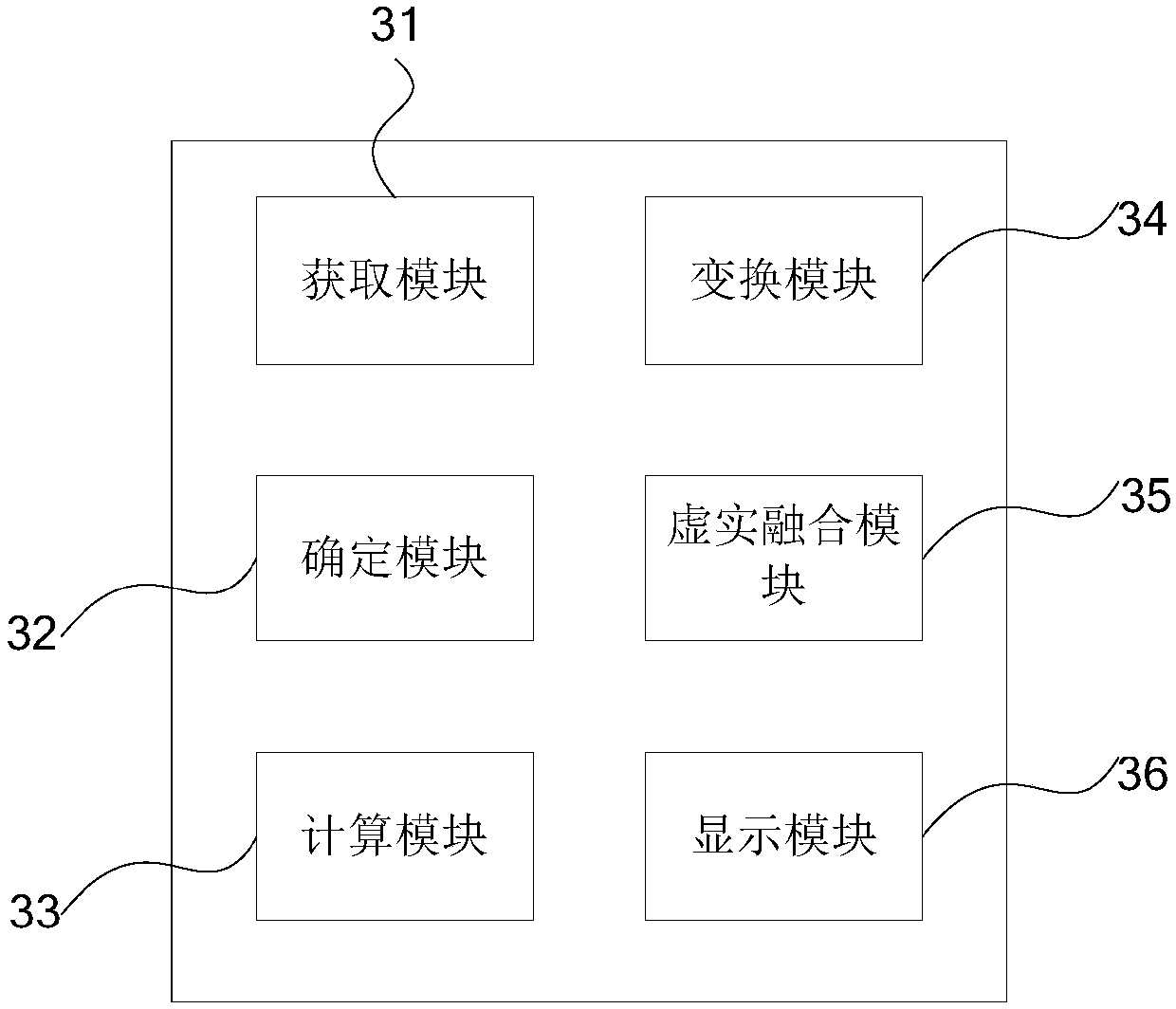

[0108] Figure 3 and Figure 4 are schematic diagrams of a device for 3D fusion display of a real scene and a virtual object provided in Embodiment 2 of the present invention. The device includes:

[0109] An obtaining module 31, configured to obtain the two 2D image frames of the real scene (left-eye and right-eye) collected by the binocular camera in real time;

[0110] A determining module 32, configured to determine the first target mark position of the three-dimensional virtual object on the image frame, and to obtain the depth value d of the first target mark position;

[0111] A calculation module 33, configured to determine, according to the depth value d of the first target mark position, the world space vectors of all target mark positions of the three-dimensional virtual object on the image frame;

[0112] A transformation module 34, configured to transform, according to the intrinsic parameter matrix M_in of the binocular camera, the world space vectors of all target mark positions to obtain a target ...
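The relationship between a mark position on the image frame, its depth value d, and the camera's internal reference (intrinsic) matrix can be illustrated with the standard pinhole camera model. This is a hedged sketch, not the transformation claimed in the patent, whose full text is truncated here. The names `Intrinsics`, `back_project`, and `project` are hypothetical, the intrinsic matrix is assumed to have the usual form with focal lengths `fx`, `fy` and principal point `cx`, `cy`, lens distortion is ignored, and the camera frame is assumed to coincide with the world frame.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Intrinsics:
    """Pinhole intrinsics, i.e. the entries of [[fx, 0, cx], [0, fy, cy], [0, 0, 1]]."""
    fx: float
    fy: float
    cx: float
    cy: float


def back_project(u: float, v: float, d: float, k: Intrinsics) -> Tuple[float, float, float]:
    """Lift pixel (u, v) with depth d to a 3D point: d * K^-1 * [u, v, 1]^T."""
    if d <= 0:
        raise ValueError("depth must be positive")
    x = (u - k.cx) * d / k.fx
    y = (v - k.cy) * d / k.fy
    return (x, y, d)


def project(point: Tuple[float, float, float], k: Intrinsics) -> Tuple[float, float]:
    """Project a 3D point back to pixel coordinates: K * [x, y, z]^T divided by z."""
    x, y, z = point
    if z <= 0:
        raise ValueError("point must lie in front of the camera")
    return (k.fx * x / z + k.cx, k.fy * y / z + k.cy)


if __name__ == "__main__":
    # Illustrative values only; real intrinsics come from camera calibration.
    k = Intrinsics(fx=500.0, fy=500.0, cx=320.0, cy=240.0)
    p = back_project(420.0, 240.0, 2.0, k)
    print(p, project(p, k))
```

Under these assumptions, back-projecting a mark position and projecting the result again returns the original pixel, which is a quick sanity check for calibration values.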

Embodiment 3

[0134] An embodiment of the present invention also provides an electronic device, including at least one processor; and,

[0135] a memory communicatively coupled to the at least one processor; wherein,

[0136] The memory stores instructions executable by the at least one processor, and the instructions are executed by the at least one processor, so that the at least one processor can execute the method described in Embodiment 1 above.

[0137] For the specific execution process of the processor, reference may be made to the description of Embodiment 1 of the present invention, and details are not repeated here.
