A language model optimization method and device

This language model optimization method, applicable to speech analysis, speech recognition, semantic analysis, and related fields, addresses the problem of low sentence-formation probability, thereby optimizing the language model and improving the user experience.

Examples

Embodiment 1

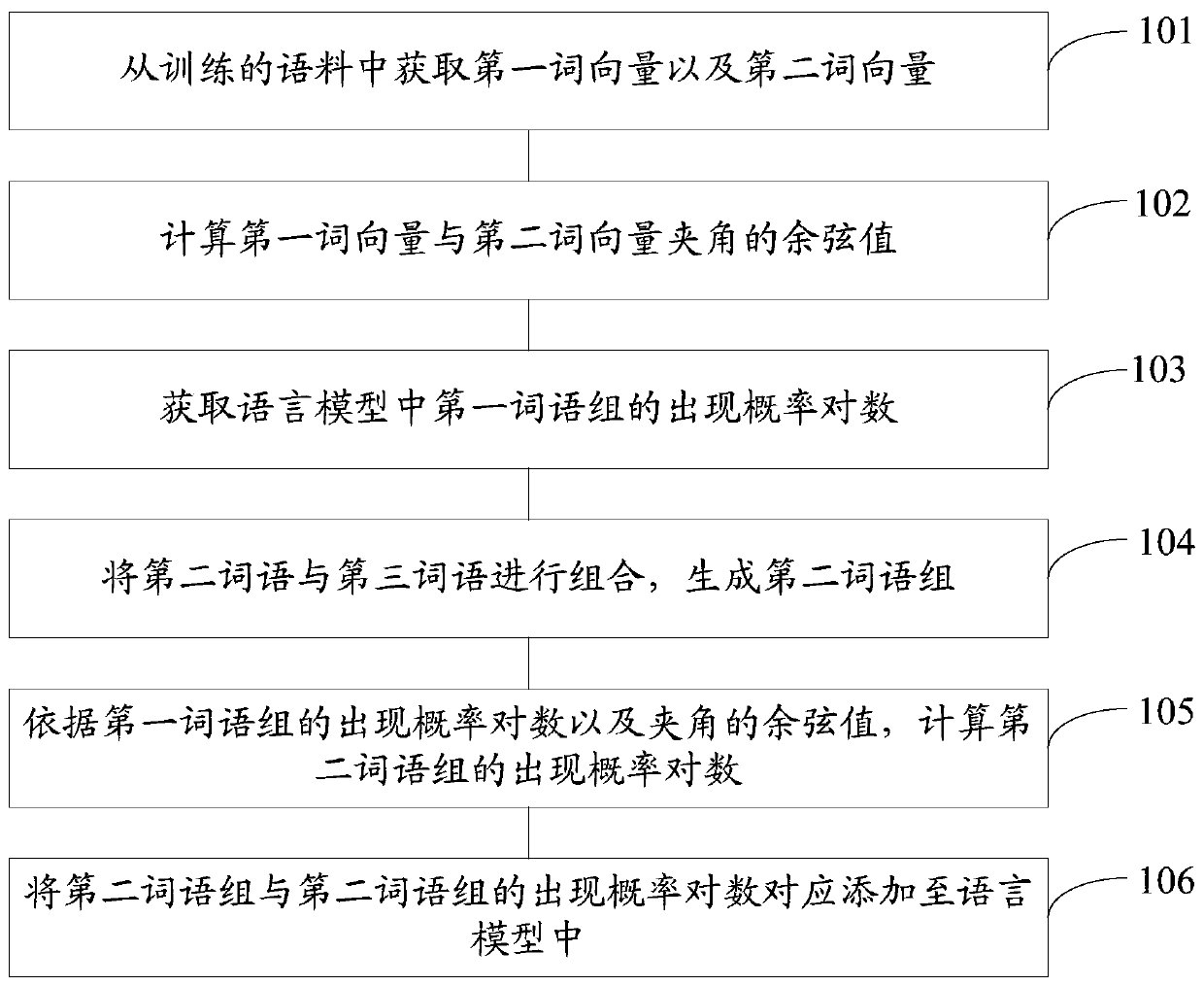

[0024] Referring to Figure 1, a flowchart of the steps of a language model optimization method according to Embodiment 1 of the present invention is shown.

[0025] The language model optimization method provided by the embodiment of the present invention includes the following steps:

[0026] Step 101: Obtain a first word vector and a second word vector from the training corpus.

[0027] Here, the first word vector is the vector of the first word, and the second word vector is the vector of the second word. The second word appears in the corpus with lower probability than the first word, and the first word and the second word are semantically similar.
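The frequency condition in [0027] can be illustrated with a minimal Python sketch. It assumes the corpus is already tokenized and that occurrence probability is estimated as relative frequency; the function names `relative_frequencies` and `is_candidate_pair` are hypothetical and do not come from the patent text. The check covers only the frequency condition. Semantic similarity would have to be judged separately, for example from the word vectors.

```python
from collections import Counter
from typing import Dict, List


def relative_frequencies(tokens: List[str]) -> Dict[str, float]:
    """Estimate each word's occurrence probability as its relative frequency."""
    counts = Counter(tokens)
    total = sum(counts.values())
    return {word: count / total for word, count in counts.items()}


def is_candidate_pair(first: str, second: str, freqs: Dict[str, float]) -> bool:
    """Return True when the second word is rarer in the corpus than the first.

    Assumption: words missing from the corpus are treated as probability 0.
    """
    return freqs.get(second, 0.0) < freqs.get(first, 0.0)


# Toy corpus: "cat" is frequent, "feline" is rare.
tokens = ["cat", "cat", "cat", "feline", "sat"]
freqs = relative_frequencies(tokens)
print(is_candidate_pair("cat", "feline", freqs))
```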

[0028] Step 102: Calculate the cosine of the angle between the first word vector and the second word vector.

[0029] Given the obtained word vectors, this value can be computed with the standard formula for the cosine of the angle between two vectors.
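As an illustration of Step 102, the cosine of the angle between two vectors is their dot product divided by the product of their Euclidean norms. The sketch below uses only the Python standard library; the function name `cosine_similarity` and the example vectors are illustrative assumptions, not taken from the patent.

```python
import math
from typing import Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between u and v: (u . v) / (|u| * |v|)."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same dimension")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("cosine is undefined for a zero vector")
    return dot / (norm_u * norm_v)


# Hypothetical word vectors for a frequent word and a rarer near-synonym.
first_word_vector = [0.8, 0.1, 0.3]
second_word_vector = [0.7, 0.2, 0.4]
print(cosine_similarity(first_word_vector, second_word_vector))
```

A value close to 1 means the two vectors point in nearly the same direction, which is the usual proxy for semantic similarity between word vectors.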

[0030] The current comm...

Embodiment 2

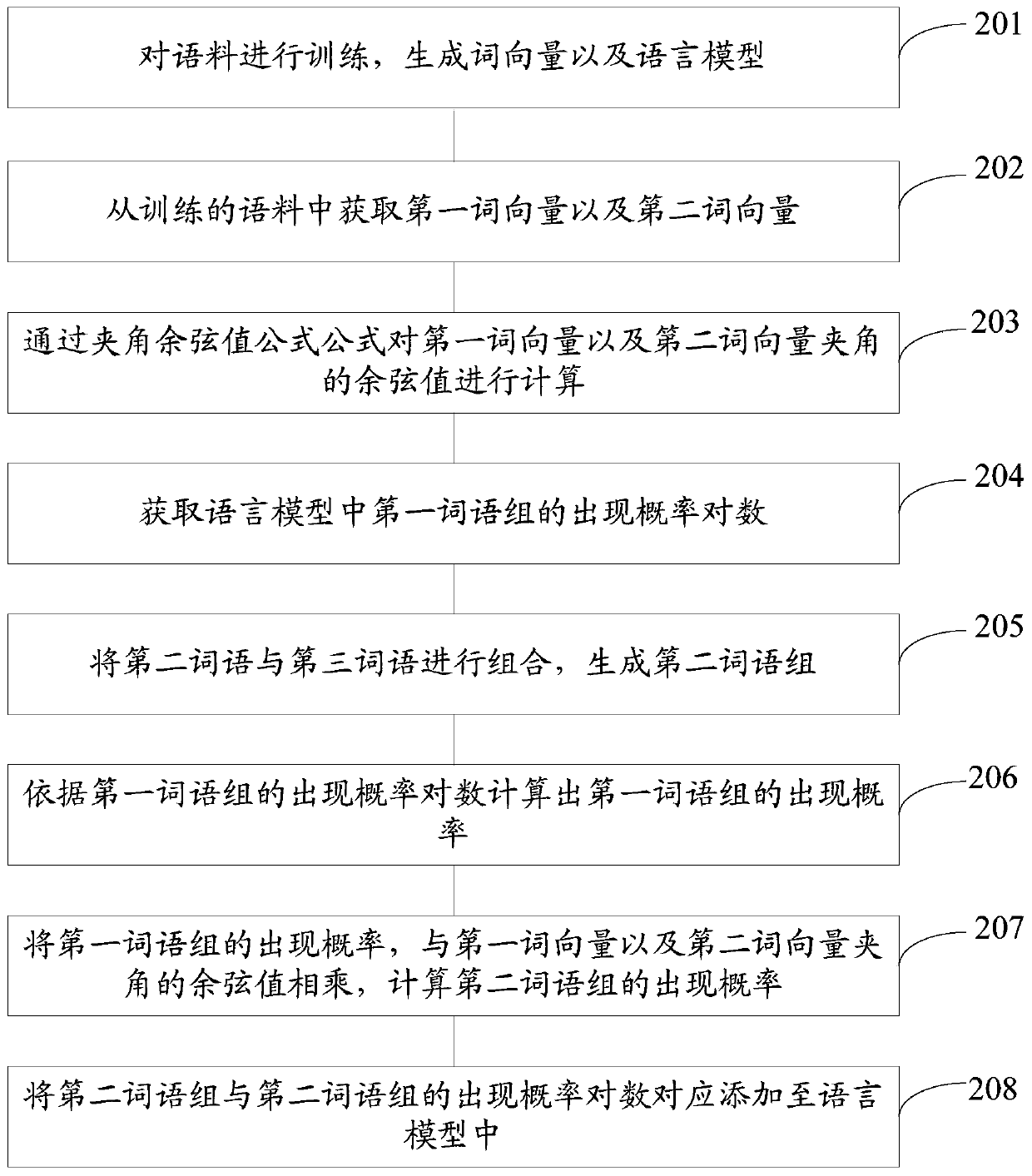

[0044] Referring to Figure 2, a flowchart of the steps of a language model optimization method according to Embodiment 2 of the present invention is shown.

[0045] The language model optimization method provided by the embodiment of the present invention includes the following steps:

[0046] Step 201: Train on the corpus to generate word vectors and a language model.

[0047] Here, the language model includes a plurality of words, the logarithm of each word's occurrence probability, a plurality of word groups, and the logarithm of each word group's occurrence probability; each word vector is the vector corresponding to one word.
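The structure described in [0047] can be pictured as two lookup tables of log probabilities, one for words and one for word groups. The sketch below is only one possible reading: it assumes word groups are bigrams, probabilities are unsmoothed relative frequencies, and logarithms are base 10. The excerpt does not specify any of these, and the function name `build_log_prob_tables` is hypothetical.

```python
import math
from collections import Counter
from typing import Dict, List, Tuple


def build_log_prob_tables(
    sentences: List[List[str]],
) -> Tuple[Dict[str, float], Dict[Tuple[str, str], float]]:
    """Build log10 occurrence-probability tables for words and word groups.

    Assumptions: word groups are adjacent word pairs (bigrams), and each
    probability is a plain relative frequency with no smoothing.
    """
    word_counts: Counter = Counter()
    group_counts: Counter = Counter()
    for sentence in sentences:
        word_counts.update(sentence)
        group_counts.update(zip(sentence, sentence[1:]))

    total_words = sum(word_counts.values())
    total_groups = sum(group_counts.values())

    word_logp = {w: math.log10(c / total_words) for w, c in word_counts.items()}
    group_logp = {g: math.log10(c / total_groups) for g, c in group_counts.items()}
    return word_logp, group_logp


# Toy corpus of two tokenized sentences.
word_logp, group_logp = build_log_prob_tables([["a", "b"], ["a", "c"]])
print(word_logp["a"], group_logp[("a", "b")])
```

Storing logarithms rather than raw probabilities lets sentence scores be computed by addition and avoids underflow when many small probabilities are combined.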

[0048] Step 202: Obtain the first word vector and the second word vector from the training corpus.

[0049] Wherein, the first word vector is the vector of the first word, the second word vector is the vector of the second word, the probability of the second word appearing in the corpus is lower than the probability of the first word appe...

Embodiment 3

[0072] Referring to Figure 3, a structural block diagram of a language model optimization apparatus according to Embodiment 3 of the present invention is shown.

[0073] The language model optimization device provided by the embodiment of the present invention includes: a first acquisition module 301, configured to acquire a first word vector and a second word vector from the training corpus, wherein the first word vector is the vector of the first word, the second word vector is the vector of the second word, the probability of the second word appearing in the corpus is lower than the probability of the first word appearing in the corpus, and the first word and the second word are semantically similar; a first calculation module 302, configured to calculate the cosine of the angle between the first word vector and the second word vector; a second acquisition module 303, configured to obtain the logarithm of the occurrence probability of the first word group in the language model; wherein...
