Action simulation interaction method and device for a smart device, and smart device

A smart device and action simulation technology, applied in the fields of user/computer interaction input/output, mechanical mode conversion, and character and pattern recognition. It addresses the problems of a missing action simulation process, low information dissemination efficiency, and limited information volume, and achieves vivid interactive forms, accurate information interaction, and improved accuracy.

Examples

Embodiment 1

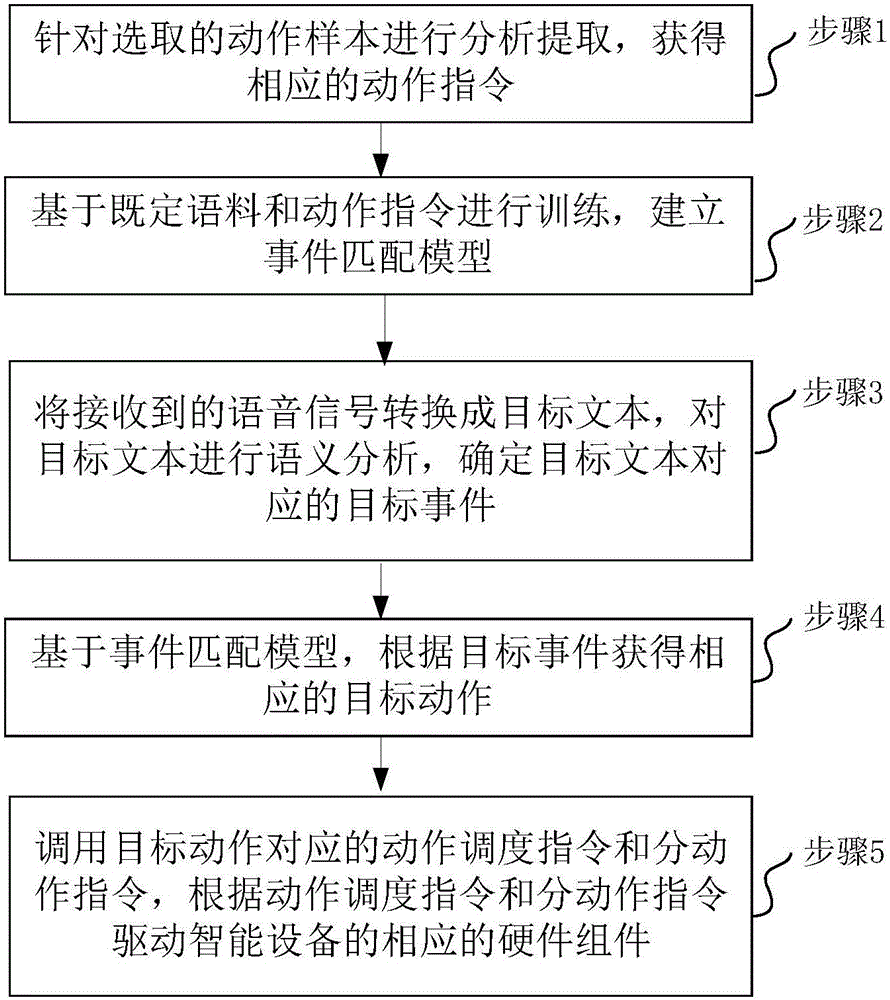

[0120] The principle and steps of the action simulation interaction method for smart devices of the present invention will be described below with reference to Embodiment 1.

[0121] Step 1: Analyze the selected action samples and extract the corresponding action instructions.

[0122] First, action videos of various animals are retrieved from the Internet, or captured in real time with the camera of the intelligent robot. These may include action videos of cats, action videos of dogs, and the like. More fine-grained action samples can also be defined, such as a cat sleeping, a cat walking, or a dog barking. These action videos serve as the action samples to be imitated.

[0123] Then, feature extraction is performed on the acquired action samples frame by frame, and recognition is performed based on the extracted features to obtain action instructions corresponding to the action samples. Then, split...
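The frame-by-frame recognition described in [0123] can be illustrated with a minimal sketch. It assumes that each frame has already been reduced to a small numeric feature vector, and it uses nearest-prototype classification followed by a majority vote over frames. The feature layout, the `PROTOTYPES` table, and the function names are hypothetical and are not taken from the patent text; the patent does not state which recognition technique is used.

```python
from collections import Counter

# Hypothetical prototype features per action label:
# (average motion magnitude, sound present). Toy values for illustration only.
PROTOTYPES = {
    "cat_sleeping": (0.0, 0.0),
    "cat_walking": (1.0, 0.0),
    "dog_barking": (0.5, 1.0),
}


def classify_frame(features):
    """Return the label whose prototype is closest (squared Euclidean distance)."""
    def distance(label):
        return sum((a - b) ** 2 for a, b in zip(features, PROTOTYPES[label]))
    return min(PROTOTYPES, key=distance)


def recognize_action(frames):
    """Classify every frame, then pick the most frequent label as the action."""
    if not frames:
        raise ValueError("no frames to recognize")
    votes = Counter(classify_frame(f) for f in frames)
    return votes.most_common(1)[0][0]


# Three frames of an assumed action sample, already converted to features.
sample = [(0.9, 0.1), (1.1, 0.0), (0.4, 0.9)]
action_instruction = recognize_action(sample)
```

Voting over frames is one simple way to tolerate a few misclassified frames; a real system would likely use a learned model over the frame sequence instead.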

Embodiment 2

[0187] The principle and steps of the action simulation interaction method for smart devices of the present invention will be further described with reference to Embodiment 2 below.

[0188] In this embodiment, steps 1 and 2 are the same as in embodiment 1.

[0189] In step 3, the voice command issued by the user is "Imitate a dog barking", which is converted into the target text "Imitate a dog barking". Semantic analysis of the target text yields the verb phrase (that is, "imitate") and the object (that is, "a dog's barking"). The target event corresponding to the target text is therefore "imitating a dog's barking".
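As a rough illustration of how a target text could be split into a verb and an object, the sketch below treats the first word as the verb and the remainder as the object. This is a deliberate simplification standing in for the semantic analysis mentioned in [0189], which the patent text does not specify; `parse_command` and `target_event` are hypothetical names.

```python
def parse_command(target_text):
    """Split a command into (verb, object) using a naive first-word rule.

    Assumption: the command is an English imperative whose first word is the verb.
    """
    words = target_text.strip().rstrip(".!?").split()
    if not words:
        raise ValueError("empty command")
    verb = words[0].lower()
    obj = " ".join(words[1:]).lower()
    return verb, obj


def target_event(target_text):
    """Build a simple target-event record from the parsed command."""
    verb, obj = parse_command(target_text)
    return {"verb": verb, "object": obj}


event = target_event("Imitate a dog barking")
```

Here `event` holds the verb "imitate" and the object "a dog barking", which corresponds to the target event of imitating a dog's barking.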

[0190] In step 4, since the event matching model established in step 2 cannot identify "dogs", the probability for all animals is 0:

[0191] p(dog barking|dog)=0, p(dog barking|tiger)=0, p(dog barking|cat)=0

[0192] In this case, the smart device asks the user: "What kind of animal is a dog?", and the user replies: "Dog"...
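The fallback behaviour in [0190] to [0192] can be sketched as follows: when the matching probability is 0 for every known animal, the device asks a clarifying question instead of acting. The probability table is passed in as a plain dictionary, and `match_event` and `respond` are hypothetical names; how the event matching model actually computes these probabilities is not shown in the text above.

```python
def match_event(probabilities):
    """Return the best-matching animal, or None if every probability is 0.

    probabilities maps an animal name to p(event | animal).
    """
    if not probabilities:
        return None
    best = max(probabilities, key=probabilities.get)
    return best if probabilities[best] > 0 else None


def respond(probabilities, subject):
    """Act on a match, or ask the user what the unrecognized subject is."""
    animal = match_event(probabilities)
    if animal is None:
        return ("ask", f"What kind of animal is a {subject}?")
    return ("act", animal)


# Values from paragraph [0191]: no animal matches the event.
no_match = {"dog": 0.0, "tiger": 0.0, "cat": 0.0}
reply = respond(no_match, "dog")
```

With the all-zero table, `reply` is an "ask" response carrying the question from [0192]; once the user's answer lets the model assign a non-zero probability, the same call returns an "act" response.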
