Model system and method for fusion of virtual scene and real scene

A technology for fusing real scenes and virtual scenes, applied in the computer field. It solves problems such as overlapping object displays, destruction of the immersion effect, and reduced priority and weight, and it achieves strong compatibility, an improved immersion effect, and improved coordination.



Embodiment Construction

[0051] The technical solutions of the present invention are described in further detail below by way of examples.

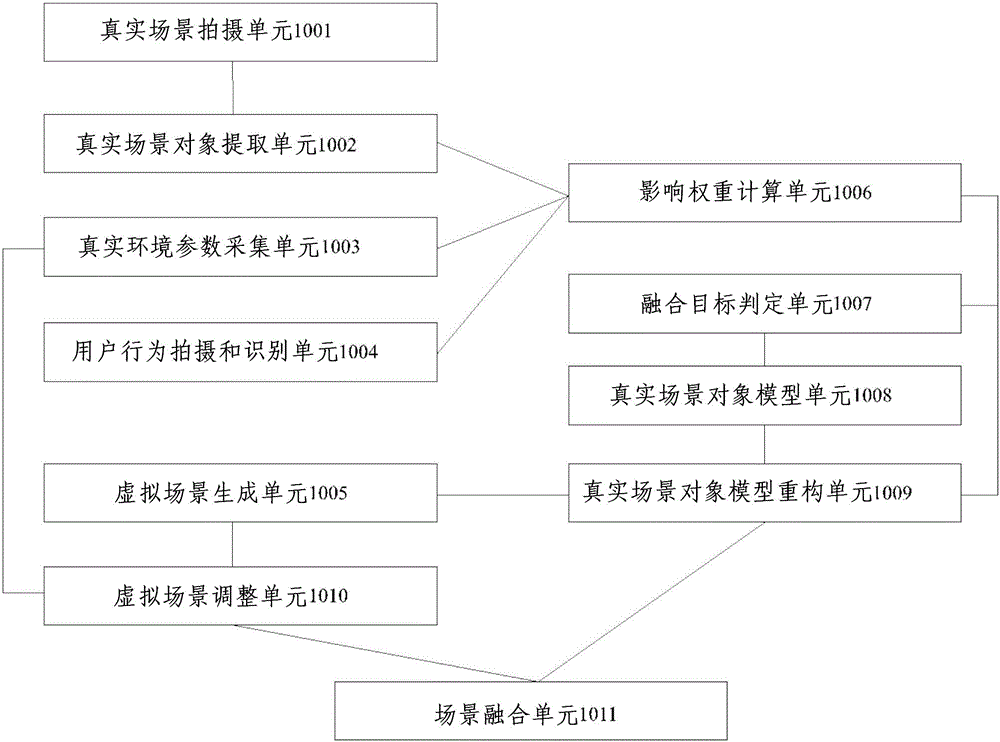

[0052] As shown in Figure 1, the present invention provides a model system for fusing a virtual scene with a real scene. The specific structure and functions of the system are introduced below.

[0053] The real scene shooting unit 1001 is configured to capture a real scene image from a shooting angle close to the user's point of view and to provide parameters representing the shooting angle of that image. The real scene shooting unit 1001 may include at least one camera arranged near the user's actual position (such as the user's seat) in the virtual reality picture display system (which may be a 2D or 3D display system), and the camera is set to shoot from an angle of view that follows the user's perspective. The user's perspective mentioned here refers to the range of vision of the user's eyes when observing the virtua...
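Paragraph [0053] requires the camera to shoot from an angle close to the user's point of view and to report parameters describing that angle. The Python sketch below shows one way such parameters might be represented and compared against the user's gaze. The parameter set (yaw, pitch, horizontal field of view), the names `ShootingParams`, `angular_offset_deg` and `is_close_to_user_view`, and the 5° tolerance are all hypothetical assumptions for illustration; the patent text shown here does not specify them.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class ShootingParams:
    """Hypothetical shooting-angle parameters reported by a camera of unit 1001."""
    yaw_deg: float    # horizontal rotation of the optical axis, in degrees
    pitch_deg: float  # vertical rotation of the optical axis, in degrees
    hfov_deg: float   # horizontal field of view; carried for a renderer, unused in the check below


def direction(yaw_deg: float, pitch_deg: float) -> Tuple[float, float, float]:
    """Unit view-direction vector for the given yaw and pitch (y axis points up)."""
    yaw, pitch = math.radians(yaw_deg), math.radians(pitch_deg)
    return (
        math.cos(pitch) * math.sin(yaw),
        math.sin(pitch),
        math.cos(pitch) * math.cos(yaw),
    )


def angular_offset_deg(cam: ShootingParams, user_yaw_deg: float, user_pitch_deg: float) -> float:
    """Angle in degrees between the camera's optical axis and the user's gaze direction."""
    a = direction(cam.yaw_deg, cam.pitch_deg)
    b = direction(user_yaw_deg, user_pitch_deg)
    dot = sum(x * y for x, y in zip(a, b))
    # Clamp to [-1, 1] to guard against floating-point rounding before acos.
    return math.degrees(math.acos(max(-1.0, min(1.0, dot))))


def is_close_to_user_view(
    cam: ShootingParams,
    user_yaw_deg: float,
    user_pitch_deg: float,
    max_offset_deg: float = 5.0,  # hypothetical tolerance, not taken from the patent
) -> bool:
    """True if the camera's shooting angle is within the tolerance of the user's gaze."""
    return angular_offset_deg(cam, user_yaw_deg, user_pitch_deg) <= max_offset_deg


if __name__ == "__main__":
    cam = ShootingParams(yaw_deg=2.0, pitch_deg=0.0, hfov_deg=90.0)
    print(round(angular_offset_deg(cam, 0.0, 0.0), 6), is_close_to_user_view(cam, 0.0, 0.0))
```

Comparing full 3D view directions, rather than yaw and pitch separately, keeps the check meaningful when the camera is tilted both horizontally and vertically relative to the user's eyes.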
