Query term rewriting method combining a word vector model and naive Bayes

A query rewriting technology, applied in the field of query word rewriting, that addresses problems such as ignoring the connection between query words and search recall results and weak semantic correlation between query words and rewritten words.


Embodiment Construction

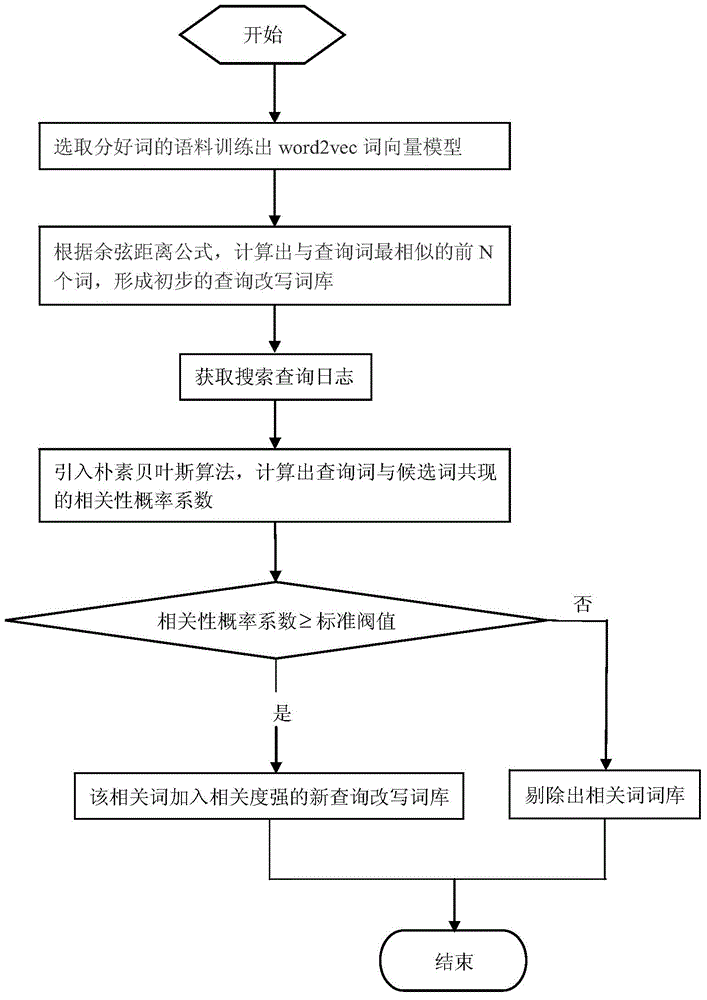

[0017] The present invention will be further described below in conjunction with the accompanying drawings:

[0018] After the word2vec word vector model is established, it is combined with the Naive Bayes algorithm. The specific implementation steps are as follows:

[0019] Step 1: Build and train the word2vec word vector model according to the obtained corpus, and calculate the candidate words for query rewriting.
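As a rough illustration of the candidate step, the sketch below assumes the word vectors have already been trained and simply ranks the vocabulary by cosine similarity to the query word. Nearest-neighbor lookup is one common way to read related words off a word2vec model; the source does not say exactly how its candidates are computed. The function name `top_k_candidates` and the toy vectors are hypothetical and stand in for a real trained model.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)

def top_k_candidates(query, vectors, k=50):
    """Return up to k (word, similarity) pairs closest to the query word."""
    if query not in vectors:
        return []  # out-of-vocabulary query: no candidates
    q = vectors[query]
    scored = [(w, cosine(q, v)) for w, v in vectors.items() if w != query]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

# Made-up 3-dimensional vectors, for illustration only.
TOY_VECTORS = {
    "laptop": [0.9, 0.1, 0.0],
    "notebook": [0.8, 0.2, 0.1],
    "computer": [0.7, 0.3, 0.0],
    "banana": [0.0, 0.1, 0.9],
}

candidates = top_k_candidates("laptop", TOY_VECTORS, k=2)
```

With `k=50`, as in the example given in the description, the tail of the list will usually contain weakly related words, which is why a later filtering step is needed.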

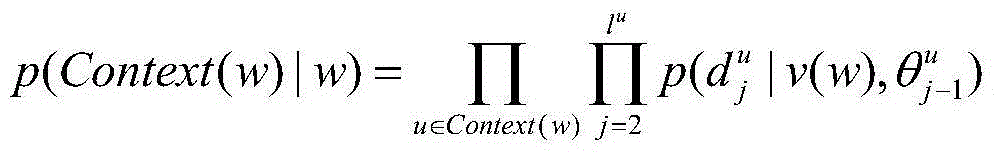

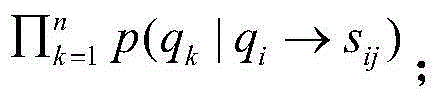

[0020] Using the Skip-gram model with the Hierarchical Softmax algorithm in word2vec, the words contextually related to each input user query word are predicted by the model. For example, word2vec can be used to find 50 related words for each input query word. With the number of related words set to 50, the correlation between these words and the input query word varies: some are strongly related, some weakly, and some are irrelevant. The naive Bayes algorithm is therefore used to filter the related words further....
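The text is truncated before it explains how the naive Bayes filter is built, so the following is only a minimal sketch under stated assumptions, not the patented procedure. It assumes each candidate word is described by tokens taken from its search recall results, and that a small labeled set of "relevant" and "irrelevant" examples is available. The class `NaiveBayes`, the helper `filter_candidates`, the labels, and all data are hypothetical.

```python
import math
from collections import Counter

class NaiveBayes:
    """Multinomial naive Bayes with Laplace (add-alpha) smoothing."""

    def __init__(self, alpha=1.0):
        self.alpha = alpha
        self.class_counts = Counter()   # label -> number of training samples
        self.token_counts = {}          # label -> Counter of token frequencies
        self.vocab = set()

    def fit(self, samples):
        for tokens, label in samples:
            self.class_counts[label] += 1
            self.token_counts.setdefault(label, Counter()).update(tokens)
            self.vocab.update(tokens)
        return self

    def log_posteriors(self, tokens):
        """Unnormalized log P(label) + sum of log P(token | label)."""
        total = sum(self.class_counts.values())
        scores = {}
        for label, n in self.class_counts.items():
            counts = self.token_counts[label]
            denom = sum(counts.values()) + self.alpha * len(self.vocab)
            score = math.log(n / total)
            for t in tokens:
                if t in self.vocab:  # tokens never seen in training are skipped
                    score += math.log((counts[t] + self.alpha) / denom)
            scores[label] = score
        return scores

    def predict(self, tokens):
        scores = self.log_posteriors(tokens)
        return max(scores, key=scores.get)

def filter_candidates(model, candidate_features):
    """Keep only candidates whose feature tokens are classified as relevant."""
    return [w for w, toks in candidate_features.items()
            if model.predict(toks) == "relevant"]

# Hypothetical training data: tokens from recall results, with a label.
TRAIN = [
    (["laptop", "screen", "battery"], "relevant"),
    (["notebook", "computer", "keyboard"], "relevant"),
    (["fruit", "fresh", "yellow"], "irrelevant"),
    (["paper", "notebook", "pen"], "irrelevant"),
]

# Hypothetical candidates with tokens drawn from their recall results.
CANDIDATES = {
    "ultrabook": ["laptop", "battery", "keyboard"],
    "notepad": ["paper", "pen", "fresh"],
}

model = NaiveBayes().fit(TRAIN)
kept = filter_candidates(model, CANDIDATES)
```

Scores are computed in log space so that multiplying many small probabilities does not underflow, and smoothing keeps a single unseen token from forcing a class probability to zero.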
