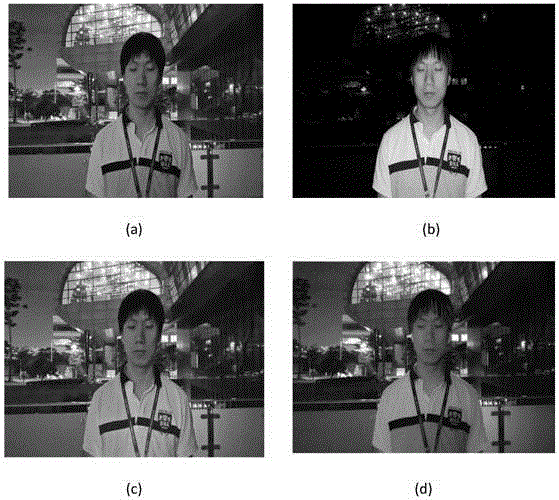

Two-frame Image Fusion Method under Different Illumination Based on Texture Information Reconstruction

A two-frame image fusion method based on texture information reconstruction, applied in the field of image processing. It addresses color cast in the fused image, the failure to consider the texture components of the low-light image, and blurring in some regions of the fused image, thereby improving clarity and adaptability to scenes of differing brightness.

Embodiment Construction

[0039] The present invention will be further described below in conjunction with the accompanying drawings.

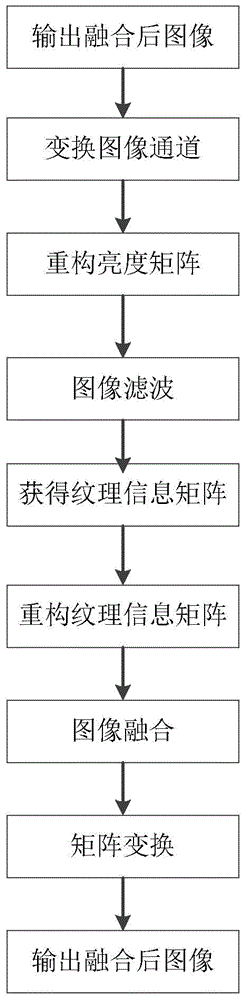

[0040] With reference to attached Figure 1, the steps of the present invention are described in further detail.

[0041] Step 1, input the image to be fused.

[0042] Input the two frames to be fused: one frame captured with flash and one frame captured without flash.

[0043] Step 2, transform the channels of the image to be fused.

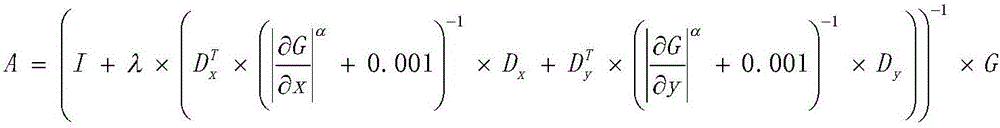

[0044] Use the following formulas to transform the frame captured with flash from its red, green, and blue matrices into three matrices: luminance, blue difference, and red difference:

[0045] $Y_F = 0.2990 \times R_f + 0.5870 \times G_f + 0.1140 \times B_f$

[0046] $Cb_F = -0.1687 \times R_f - 0.3313 \times G_f + 0.5000 \times B_f + 128$

[0047] $Cr_F = 0.5000 \times R_f - 0.4187 \times G_f - 0.0813 \times B_f + 128$

[0048] Here, $Y_F$, $Cb_F$, and $Cr_F$ denote the luminance, blue-difference, and red-difference matrices of the frame captured with flash after ...
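As a minimal sketch of the channel transform in Step 2, the Python below applies the three formulas pixel by pixel. The function names `rgb_to_ycbcr` and `image_to_ycbcr` and the nested-list image representation are assumptions made for illustration and do not come from the patent. The coefficients are the standard full-range JFIF ones, in which the red coefficient of Cr is +0.5000, and no rounding or clipping is applied.

```python
def rgb_to_ycbcr(r, g, b):
    """Convert one 8-bit RGB pixel to (Y, Cb, Cr).

    Uses the full-range JFIF coefficients; the result is left as floats
    (no rounding, no clipping to 0..255).
    """
    y = 0.2990 * r + 0.5870 * g + 0.1140 * b
    cb = -0.1687 * r - 0.3313 * g + 0.5000 * b + 128
    cr = 0.5000 * r - 0.4187 * g - 0.0813 * b + 128
    return y, cb, cr


def image_to_ycbcr(rgb_image):
    """Split an image into luminance and chroma matrices.

    `rgb_image` is assumed (for this sketch only) to be a list of rows,
    each row a list of (R, G, B) tuples. Returns three matrices with the
    same shape: Y, Cb, Cr.
    """
    y_mat, cb_mat, cr_mat = [], [], []
    for row in rgb_image:
        converted = [rgb_to_ycbcr(r, g, b) for r, g, b in row]
        y_mat.append([p[0] for p in converted])
        cb_mat.append([p[1] for p in converted])
        cr_mat.append([p[2] for p in converted])
    return y_mat, cb_mat, cr_mat


if __name__ == "__main__":
    # Hypothetical 1x2 "flash" frame: one gray pixel and one pure-blue pixel.
    flash_frame = [[(128, 128, 128), (0, 0, 255)]]
    Y_F, Cb_F, Cr_F = image_to_ycbcr(flash_frame)
    print(Y_F, Cb_F, Cr_F)
```

A quick sanity check on such a sketch is that a neutral gray pixel leaves both chroma channels at approximately 128, because the Cb and Cr coefficients each sum to zero.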
