Teaching Video Annotation Method Based on Collaborative Filtering

Involving teaching video and collaborative filtering technology and applied in the field of image processing, the method addresses the problems of low labeling accuracy and inconspicuous differences in visual features, overcoming low labeling accuracy and achieving high precision.


Embodiment Construction

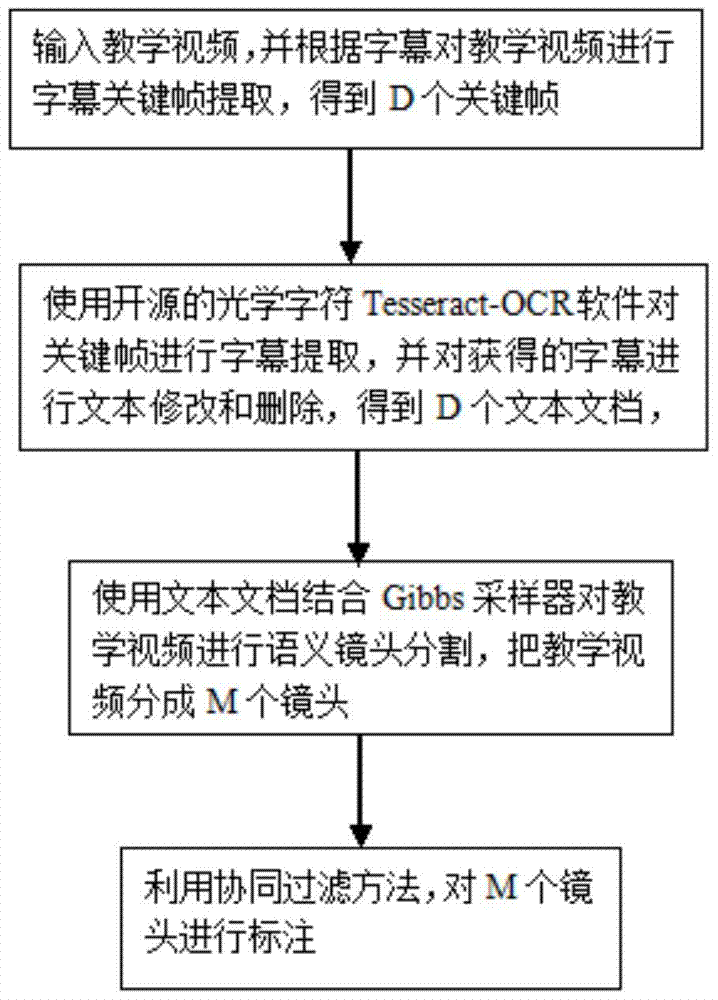

[0032] The present invention will be described in further detail below in conjunction with the accompanying drawings.

[0033] Referring to Figure 1, the implementation steps of the present invention are as follows:

[0034] Step 1: Input the teaching video and, based on its subtitles, extract subtitle key frames from it, obtaining D key frames.

[0035] The teaching video input in this step is shown in Figure 2, which contains 12 screenshots in total (2a to 2l). Key frames are extracted from this video through the following steps:

[0036] 1.1) Sample one image from the teaching video every 20 frames, obtaining Q image frames, Q > 0;

[0037] 1.2) Select the sub-region covering the bottom 1/4 of each image frame, and calculate $Y_a$, the sum of the absolute pixel differences between corresponding positions of this sub-region and of the other image frames;

[0038] 1.3) Set the threshold $P_a$ to 1/10 of the number of pi...
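Steps 1.1 and 1.2 can be sketched in Python as follows. This is a minimal, non-authoritative sketch resting on assumptions the source does not state: frames are grayscale images stored as lists of pixel rows, each sampled frame is compared with the previous sampled frame, and, because the definition of the threshold $P_a$ is cut off in step 1.3, it is passed in as a caller-supplied `threshold`, with $Y_a$ greater than the threshold (hypothetically) marking a subtitle change. The names `sample_frames`, `bottom_quarter`, `abs_diff_sum` and `subtitle_key_frames` are hypothetical.

```python
from typing import List, Sequence

Frame = List[List[int]]  # one grayscale frame: rows of pixel intensities


def sample_frames(video: Sequence[Frame], step: int = 20) -> List[int]:
    """Step 1.1: indices of every `step`-th frame; Q is the length of the result."""
    return list(range(0, len(video), step))


def bottom_quarter(frame: Frame) -> Frame:
    """Step 1.2: the rows making up the bottom 1/4 of the frame (the subtitle band)."""
    return frame[(3 * len(frame)) // 4:]


def abs_diff_sum(a: Frame, b: Frame) -> int:
    """Step 1.2: sum of absolute pixel differences at corresponding positions (Y_a)."""
    return sum(
        abs(p - q)
        for row_a, row_b in zip(a, b)
        for p, q in zip(row_a, row_b)
    )


def subtitle_key_frames(video: Sequence[Frame], threshold: int, step: int = 20) -> List[int]:
    """Return original-video indices of sampled frames whose subtitle band changed.

    ASSUMPTIONS (not from the source): each sampled frame is compared with the
    previous sampled frame, `threshold` stands in for P_a (its exact definition
    is truncated in the source), and Y_a > threshold marks a new key frame.
    """
    indices = sample_frames(video, step)
    if not indices:
        return []
    keys = [indices[0]]  # hypothetical: the first sampled frame opens the first subtitle
    for prev, cur in zip(indices, indices[1:]):
        y_a = abs_diff_sum(bottom_quarter(video[prev]), bottom_quarter(video[cur]))
        if y_a > threshold:
            keys.append(cur)
    return keys


if __name__ == "__main__":
    # Toy 8x4 frames: rows 0-5 are "content", rows 6-7 are the subtitle band.
    def make_frame(top: int, bottom: int) -> Frame:
        return [[top if r < 6 else bottom] * 4 for r in range(8)]

    video = [make_frame(0, 0)] * 20 + [make_frame(200, 0)] * 20 + [make_frame(200, 255)] * 20
    # Frame 20 changes only the content area, frame 40 changes the subtitle band.
    print(subtitle_key_frames(video, threshold=100))  # → [0, 40]
```

In the usage example, the change at frame 20 lies outside the bottom quarter and is ignored, while the change at frame 40 gives $Y_a = 2 \times 4 \times 255 = 2040$ and is kept. Returning indices rather than frame copies keeps the result easy to map back to positions in the original video; a production version would more likely do the pixel arithmetic with an image library.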


© 2025 PatSnap. All rights reserved.