A method for locating the subtitle area of a video

A subtitle-region technology, applied in the field of locating subtitle regions in video, which addresses problems such as limited adaptability and improves recognition performance.

Embodiment Construction

[0026] The present invention will be further described in detail below in conjunction with the accompanying drawings and specific embodiments.

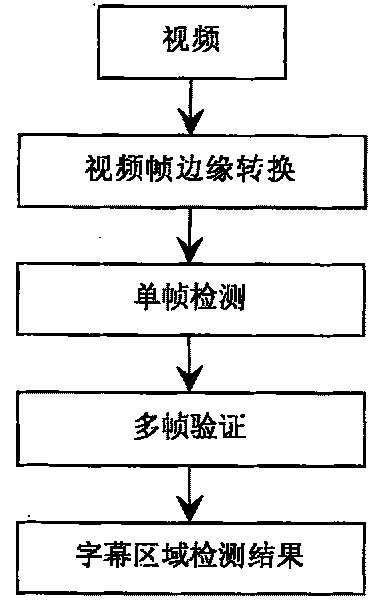

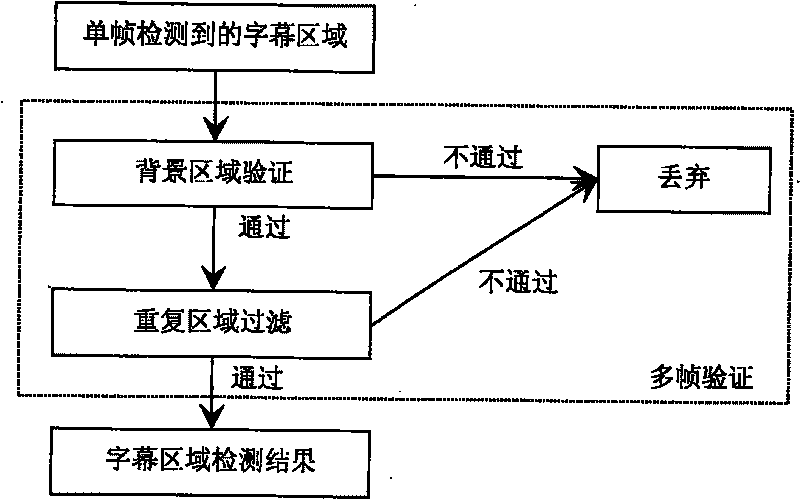

[0027] As shown in Figure 1, the method for locating a video subtitle area according to the present invention comprises the following steps:

[0028] (1) Extract video frames and convert each frame into an edge intensity map.

[0029] An improved Sobel edge detection operator is used to compute the edge intensity of each pixel as follows:

[0030] $S = \max\left(|S_H|,\ |S_V|,\ |S_{LD}|,\ |S_{RD}|\right)$

[0031] Here, $S_H$, $S_V$, $S_{LD}$ and $S_{RD}$ denote the Sobel edge strengths in the horizontal, vertical, left-diagonal and right-diagonal directions, respectively, and $\max$ takes the largest of the four absolute values.
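The four-direction rule in [0030] can be illustrated with a minimal pure-Python sketch. The excerpt does not give the kernels of the "improved" Sobel operator, so the standard 3x3 horizontal, vertical and 45°/135° diagonal Sobel kernels below, the hypothetical names `KERNELS`, `directional_responses` and `edge_intensity_map`, and the choice to leave border pixels at zero are all assumptions for illustration; only the per-pixel maximum of absolute directional responses comes from the text.

```python
# Sketch of a four-direction Sobel edge intensity map.
# ASSUMPTION: standard 3x3 Sobel kernels stand in for the patent's
# unspecified "improved" operator; only S = max(|S_H|, |S_V|, |S_LD|, |S_RD|)
# is taken from the source text.

KERNELS = {
    "H": [[-1, -2, -1],   # responds to horizontal edges
          [0, 0, 0],
          [1, 2, 1]],
    "V": [[-1, 0, 1],     # responds to vertical edges
          [-2, 0, 2],
          [-1, 0, 1]],
    "LD": [[0, 1, 2],     # one diagonal (hypothetical labeling)
           [-1, 0, 1],
           [-2, -1, 0]],
    "RD": [[-2, -1, 0],   # the other diagonal (hypothetical labeling)
           [-1, 0, 1],
           [0, 1, 2]],
}


def directional_responses(img, y, x):
    """Return the four signed Sobel responses at interior pixel (y, x).

    Kernels are applied as correlation; for these kernels a 180-degree flip
    (true convolution) only negates the response, which abs() later removes.
    """
    out = {}
    for name, k in KERNELS.items():
        acc = 0
        for dy in range(3):
            for dx in range(3):
                acc += k[dy][dx] * img[y + dy - 1][x + dx - 1]
        out[name] = acc
    return out


def edge_intensity_map(img):
    """Per-pixel S = max of absolute directional responses.

    img is a grayscale frame as a list of equal-length rows.
    Border pixels are left at 0 (an assumption, not from the source).
    """
    h, w = len(img), len(img[0])
    s = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            r = directional_responses(img, y, x)
            s[y][x] = max(abs(v) for v in r.values())
    return s


if __name__ == "__main__":
    # A 5x5 frame with a vertical step edge between columns 1 and 2.
    frame = [[0, 0, 255, 255, 255] for _ in range(5)]
    print(edge_intensity_map(frame)[2])  # → [0, 1020, 1020, 0, 0]
```

Because only absolute values enter the maximum, swapping which kernel is labelled H or V (or LD or RD) does not change $S$. On the vertical step edge in the example, the vertical-edge kernel gives the largest response (1020) while both diagonal kernels give 765, so $S$ takes the vertical value.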

[0032] (2) Automatically adjust the segmentation scale according to the complexity of the background, and segment the subtitle area by applying the method of horizon...

© 2025 PatSnap. All rights reserved.