A multimedia digital fusion method and device

A multimedia digital fusion method and device in the field of multimedia data technology, intended to address problems such as poor ease of use and practicality, limited expressiveness, and reliance on a single form of media.


Embodiment 1

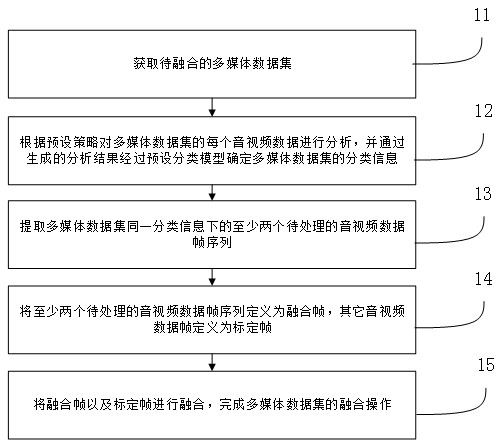

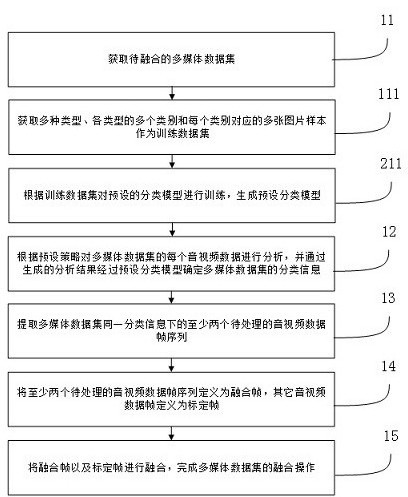

[0026] Figure 1(a)-(c) is a schematic flowchart of a multimedia digital fusion method in one embodiment, which includes the following steps:

[0027] Step 11: Acquire the multimedia data set to be fused.

[0028] Step 12: Analyze each item of audio and video data in the multimedia data set according to a preset strategy, and determine the classification information of the multimedia data set through a preset classification model based on the generated analysis results.

[0029] In one embodiment, before each item of audio and video data in the multimedia data set is analyzed according to the preset strategy and the classification information is determined through the preset classification model based on the generated analysis results, the method further includes:

[0030] Step 111: Acquire multiple types, multiple categories for each type, and multiple image samples corresponding to each category as a training data set.

[0031] Step 211: Train a prese...
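The training data set described in Step 111 (types, categories within each type, image samples within each category) could be organized as a nested mapping and flattened into labeled rows before training. The following is only a minimal sketch under stated assumptions: the type and category names, the file names, and the `flatten_training_set` helper are all hypothetical and do not come from the patent text, which does not specify a storage layout.

```python
from typing import Dict, List, Tuple

# Hypothetical layout (an assumption, not from the source):
# type name -> category name -> list of image sample paths.
TrainingTree = Dict[str, Dict[str, List[str]]]


def flatten_training_set(tree: TrainingTree) -> List[Tuple[str, str, str]]:
    """Return (sample_path, type_name, category_name) rows for every sample."""
    rows: List[Tuple[str, str, str]] = []
    for type_name, categories in tree.items():
        for category_name, samples in categories.items():
            for sample in samples:
                rows.append((sample, type_name, category_name))
    return rows


# Illustrative data only; these names are placeholders.
example_tree: TrainingTree = {
    "scene": {
        "indoor": ["img_001.png", "img_002.png"],
        "outdoor": ["img_003.png"],
    },
    "subject": {
        "person": ["img_004.png"],
    },
}

rows = flatten_training_set(example_tree)
```

Each row pairs one image sample with its type and category labels, which is the shape most classifier training loops expect as input.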

Embodiment 2

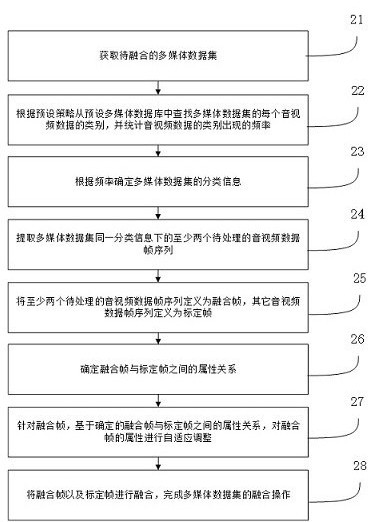

[0046] The following embodiment further considers the recognition performance of visual information in acoustically noisy environments, in order to further improve the accuracy of multimedia digital fusion and its applicability in operation.

[0047] Figure 2(a)-(b) is a schematic flowchart of a multimedia digital fusion method in another embodiment, which includes the following steps:

[0048] Step 21: Acquire the multimedia data set to be fused.

[0049] Step 22: Find the category of each item of audio and video data in the multimedia data set from the preset multimedia database according to the preset strategy, and count how often each category occurs.

[0050] In step 22, the preset strategy may be pre-configured, and is the strategy used to find the category of each item of audio and video data. The preset strategy includes: preset one or more keywords used to identify the category of each audio an...
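The keyword-based category lookup and frequency count of Step 22 can be illustrated with a short sketch. It rests on several assumptions not found in the source: each item is represented by a text description, the preset strategy is a mapping from category to keywords, and the first matching category wins. The names `PRESET_KEYWORDS`, `find_category`, and `count_category_frequency` are hypothetical, and the lookup against the preset multimedia database is replaced here by a simple in-memory match.

```python
from collections import Counter
from typing import Dict, Iterable, List, Optional

# Hypothetical preset strategy: category -> keywords that identify it.
PRESET_KEYWORDS: Dict[str, List[str]] = {
    "music": ["song", "concert"],
    "news": ["report", "headline"],
}


def find_category(
    description: str, keywords: Dict[str, List[str]] = PRESET_KEYWORDS
) -> Optional[str]:
    """Return the first category with a keyword in the description, else None."""
    text = description.lower()
    for category, words in keywords.items():
        if any(word in text for word in words):
            return category
    return None


def count_category_frequency(descriptions: Iterable[str]) -> Counter:
    """Count how many items fall into each category; unmatched items are skipped."""
    counts: Counter = Counter()
    for description in descriptions:
        category = find_category(description)
        if category is not None:
            counts[category] += 1
    return counts


# Illustrative input only.
items = [
    "Live concert recording",
    "Evening news report",
    "Song cover video",
    "Untitled clip",
]
freq = count_category_frequency(items)
```

With this input, two items match `music`, one matches `news`, and the untitled clip is left uncategorized. A substring match is the simplest possible rule; a real system would likely need tokenization or metadata fields to avoid false matches.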
