A dictation control method and device based on facial feature information

A dictation control technology based on facial features, applied to electrically operated teaching aids, computer components, instruments, and similar fields. It addresses problems such as limited dictation ability, reduced dictation effectiveness and accuracy, and dictators being unable to make out the dictation content, with the aim of improving the dictation experience, dictation effectiveness, and dictation accuracy.


Examples

Embodiment 1

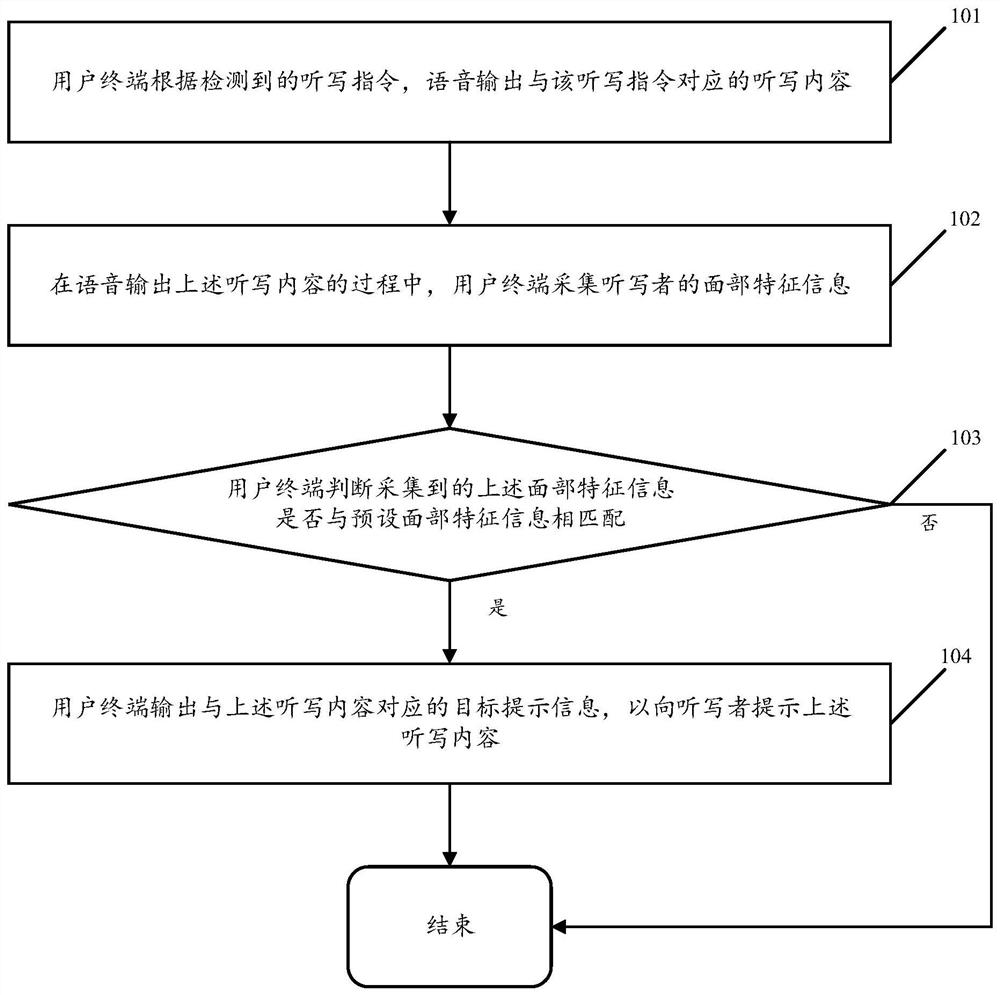

[0065] Referring to Figure 1, Figure 1 is a schematic flowchart of a dictation control method based on facial feature information disclosed in an embodiment of the present invention. The method described in Figure 1 can be applied to any user terminal with a dictation control function, such as a smartphone (Android phone, iOS phone, etc.), tablet computer, PDA, smart wearable device, or Mobile Internet Device (MID); the embodiments of the present invention are not limited in this respect. As shown in Figure 1, the dictation control method based on facial feature information may include the following operations:

[0066] 101. According to a detected dictation instruction, the user terminal outputs by voice the dictation content corresponding to that instruction.

[0067] In this embodiment of the present invention, the dictation instruction may be triggered by the dictator according to his or her own dictation requirements, or may be triggered by...

Embodiment 2

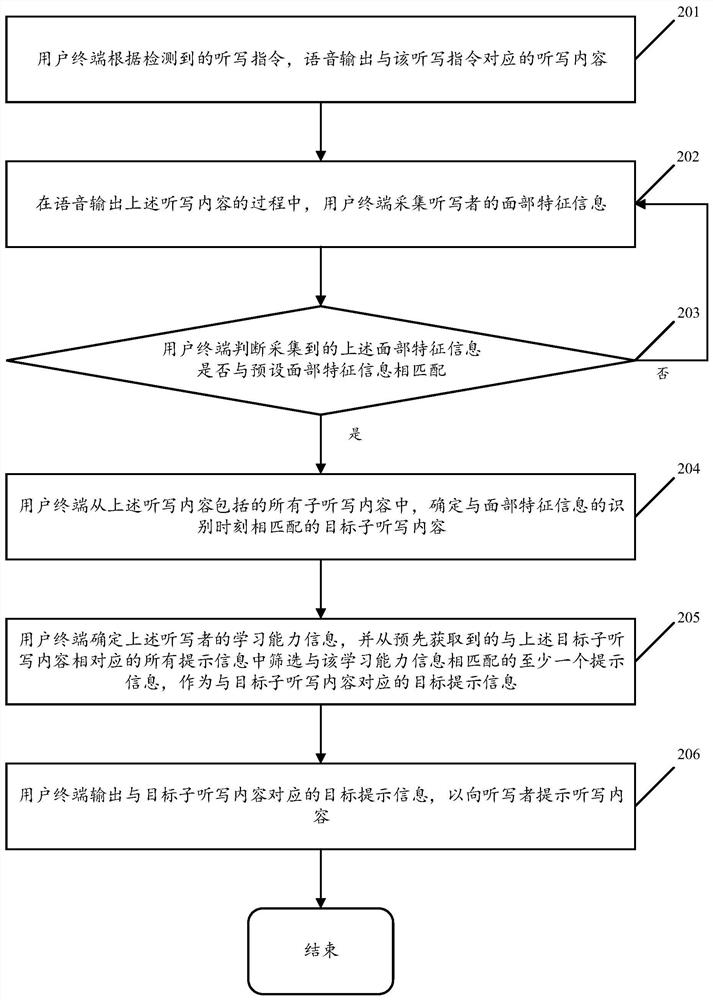

[0114] Referring to Figure 2, Figure 2 is a schematic flowchart of another dictation control method based on facial feature information disclosed in an embodiment of the present invention. The method described in Figure 2 can be applied to any user terminal with a dictation control function, such as a smartphone (Android phone, iOS phone, etc.), tablet computer, PDA, smart wearable device, or Mobile Internet Device (MID); the embodiments of the present invention are not limited in this respect. As shown in Figure 2, the dictation control method based on facial feature information may include the following operations:

[0115] 201. According to a detected dictation instruction, the user terminal outputs by voice the dictation content corresponding to that instruction.

[0116] In this embodiment of the present invention, the dictation content includes at least one sub-dictation content.

[0117] 202. During the process of outputting the abov...
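Step 201 and the note in [0116] can be illustrated with a minimal sketch. It assumes the dictation content is an ordered list of text items and that voice output is provided by an injected function. The names `DictationContent`, `output_dictation`, and `speak` are hypothetical and do not come from the patent text.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class DictationContent:
    """Dictation content made up of one or more sub-dictation contents."""
    sub_contents: List[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        # [0116] states that the content includes at least one sub-dictation content.
        if not self.sub_contents:
            raise ValueError("dictation content needs at least one sub-dictation content")


def output_dictation(content: DictationContent, speak: Callable[[str], None]) -> int:
    """Output each sub-dictation content by voice, in order.

    `speak` is a hypothetical stand-in for the terminal's text-to-speech call.
    Returns the number of sub-dictation contents that were output.
    """
    count = 0
    for item in content.sub_contents:
        speak(item)
        count += 1
    return count
```

Passing `print` as `speak` is enough to try the sketch without any audio hardware.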

Embodiment 3

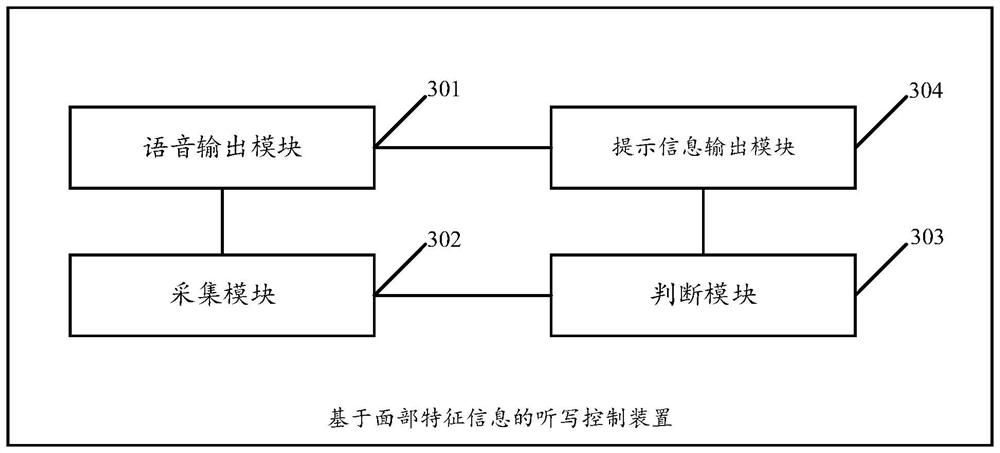

[0136] Referring to Figure 3, Figure 3 is a schematic structural diagram of a dictation control device based on facial feature information disclosed in an embodiment of the present invention. The device described in Figure 3 can be applied to any user terminal, such as a smartphone (Android phone, iOS phone, etc.), tablet computer, handheld computer, smart wearable device, or Mobile Internet Device (MID); the embodiments of the present invention are not limited in this respect. As shown in Figure 3, the dictation control device based on facial feature information may include:

[0137] The voice output module 301 is configured to output by voice, according to a detected dictation instruction, the dictation content corresponding to that instruction.

[0138] The collection module 302 is configured to collect the facial feature information of the dictator while the voice output module 301 is outputting the above-mentioned dictation content by voice.

[0139] The judgment module 303 is configured ...
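The relationship between modules 301 and 302 can be sketched as follows. This is only an illustration under stated assumptions: speech and camera access are replaced by injected functions, and a synchronous callback stands in for collection that happens while voice output is in progress. All class, method, and parameter names are hypothetical. The judgment module 303 is omitted because its description is cut off in the text above.

```python
from typing import Any, Callable, List, Optional


class VoiceOutputModule:
    """Module 301: outputs by voice the dictation content for an instruction."""

    def __init__(self, lookup: Callable[[str], str], speak: Callable[[str], None]) -> None:
        self._lookup = lookup  # hypothetical: maps an instruction to its content
        self._speak = speak    # hypothetical: stands in for a text-to-speech call

    def output(self, instruction: str, during: Optional[Callable[[], None]] = None) -> str:
        content = self._lookup(instruction)
        self._speak(content)
        if during is not None:
            # Simplification: a synchronous call stands in for work that a
            # real terminal would do while the voice output is in progress.
            during()
        return content


class CollectionModule:
    """Module 302: collects the dictator's facial feature information."""

    def __init__(self, capture: Callable[[], Any]) -> None:
        self._capture = capture  # hypothetical: stands in for a camera pipeline
        self.samples: List[Any] = []

    def collect(self) -> None:
        self.samples.append(self._capture())


class DictationControlDevice:
    """Wires module 301 to module 302 so collection accompanies voice output."""

    def __init__(self, voice_output: VoiceOutputModule, collection: CollectionModule) -> None:
        self.voice_output = voice_output
        self.collection = collection

    def handle(self, instruction: str) -> str:
        return self.voice_output.output(instruction, during=self.collection.collect)
```

Injecting `speak` and `capture` keeps the sketch independent of any particular speech or camera library.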
